Google Plans 1000x AI Infrastructure Scale-Up as Demand Outpaces Supply

6 Sources


[1]

Google tells employees it must double capacity every 6 months to meet AI demand

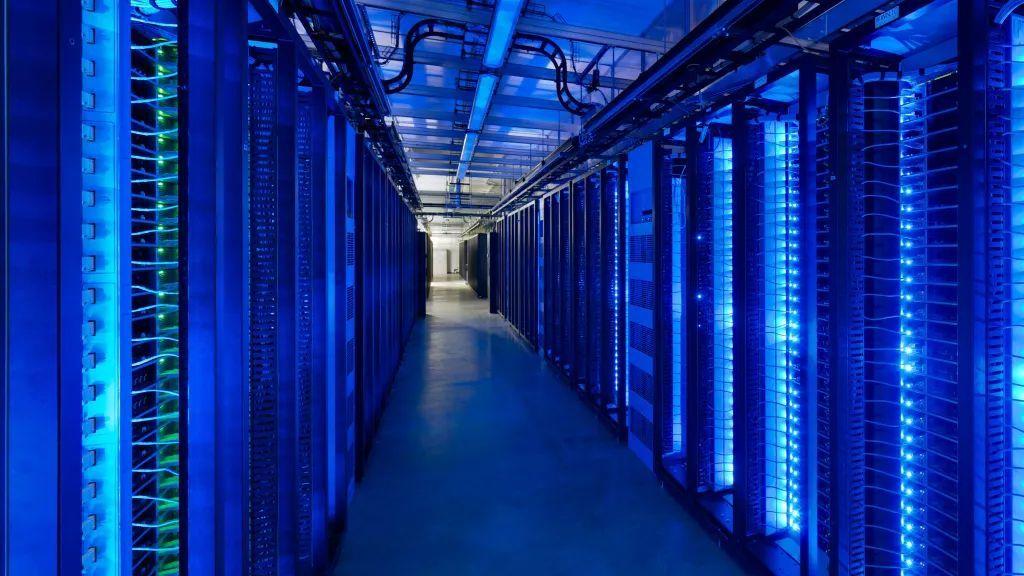

While AI bubble talk fills the air these days, with fears of overinvestment that could pop at any time, something of a contradiction is brewing on the ground: Companies like Google and OpenAI can barely build infrastructure fast enough to fill their AI needs. During an all-hands meeting earlier this month, Google's AI infrastructure head Amin Vahdat told employees that the company must double its serving capacity every six months to meet demand for artificial intelligence services, reports CNBC. Vahdat, a vice president at Google Cloud, presented slides showing the company needs to scale "the next 1000x in 4-5 years." While a thousandfold increase in compute capacity sounds ambitious by itself, Vahdat noted some key constraints: Google needs to be able to deliver this increase in capability, compute, and storage networking "for essentially the same cost and increasingly, the same power, the same energy level," he told employees during the meeting. "It won't be easy but through collaboration and co-design, we're going to get there." It's unclear how much of this "demand" Google mentioned represents organic user interest in AI capabilities versus the company integrating AI features into existing services like Search, Gmail, and Workspace. But whether users are using the features voluntarily or not, Google isn't the only tech company struggling to keep up with a growing user base of customers using AI services. Major tech companies are in a race to build out data centers. Google competitor OpenAI is planning to build six massive data centers across the US through its Stargate partnership project with SoftBank and Oracle, committing over $400 billion in the next three years to reach nearly 7 gigawatts of capacity. The company faces similar constraints serving its 800 million weekly ChatGPT users, with even paid subscribers regularly hitting usage limits for features like video synthesis and simulated reasoning models. 
"The competition in AI infrastructure is the most critical and also the most expensive part of the AI race," Vahdat said at the meeting, according to CNBC's viewing of the presentation. The infrastructure executive explained that Google's challenge goes beyond simply outspending competitors. "We're going to spend a lot," he said, but noted the real objective is building infrastructure that is "more reliable, more performant and more scalable than what's available anywhere else."

[2]

Google must double AI compute every 6 months to meet demand, AI infrastructure boss tells employees

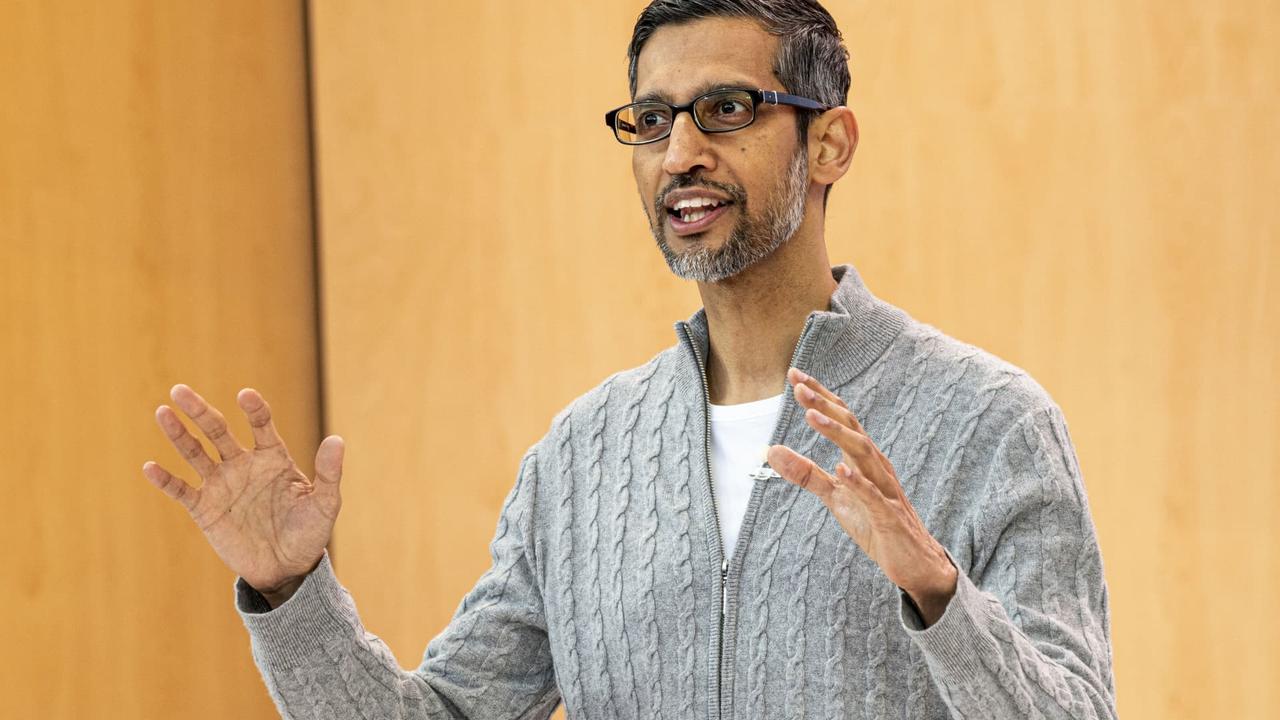

Amin Vahdat, VP of Machine Learning, Systems and Cloud AI at Google, holds up TPU Version 4 at Google headquarters in Mountain View, California, on July 23, 2024.

Google's AI infrastructure boss told employees that the company has to double its compute capacity every six months in order to meet demand for artificial intelligence services. At an all-hands meeting on Nov. 6, Amin Vahdat, a vice president at Google Cloud, gave a presentation, viewed by CNBC, titled "AI Infrastructure," which included a slide on "AI compute demand." The slide said, "Now we must double every 6 months.... the next 1000x in 4-5 years." "The competition in AI infrastructure is the most critical and also the most expensive part of the AI race," Vahdat said at the meeting, where Alphabet CEO Sundar Pichai and CFO Anat Ashkenazi also took questions from employees. The presentation was delivered a week after Alphabet reported better-than-expected third-quarter results and raised its capital expenditures forecast for the second time this year, to a range of $91 billion to $93 billion, followed by a "significant increase" in 2026. Hyperscaler peers Microsoft, Amazon and Meta also boosted their capex guidance, and the four companies now expect to collectively spend more than $380 billion this year. Google's "job is of course to build this infrastructure but it's not to outspend the competition, necessarily," Vahdat said. "We're going to spend a lot," he said, adding that the real goal is to provide infrastructure that is far "more reliable, more performant and more scalable than what's available anywhere else." In addition to infrastructure buildouts, Vahdat said Google bolsters capacity with more efficient models and through its custom silicon. Last week, Google announced the public launch of its seventh-generation Tensor Processing Unit called Ironwood, which the company says is nearly 30 times more power efficient than its first Cloud TPU from 2018.
Vahdat said the company has a big advantage with DeepMind, which has research on what AI models can look like in future years. Google needs to "be able to deliver 1,000 times more capability, compute, storage networking for essentially the same cost and increasingly, the same power, the same energy level," Vahdat said. "It won't be easy but through collaboration and co-design, we're going to get there."

[3]

Google Exec Claims Company Needs to Double Its AI Serving Capacity 'Every Six Months': Report

Tech companies are racing to build out their infrastructure as their increasingly resource-intensive AI products gobble up capacity, clean out chipmakers' supply, and require more power. Google, once dubbed the "King of the Web," is one of those companies, and a high-level exec for The Big G is reported to have told staff that the company needs to scale up its serving capabilities exponentially if it wishes to keep up with the demand for its AI services. CNBC got its hands on a recent presentation given by Amin Vahdat, VP of Machine Learning, Systems, and Cloud AI at Google. The presentation includes a slide on "AI compute demand" that asserts that Google "must double every 6 months.... the next 1000x in 4-5 years." "The competition in AI infrastructure is the most critical and also the most expensive part of the AI race," Vahdat reportedly said at the all-hands meeting where the presentation took place. Google's "job is of course to build this infrastructure, but it's not to outspend the competition, necessarily," he added. "We're going to spend a lot," he said, in an effort to create AI infrastructure that is "more reliable, more performant and more scalable than what's available anywhere else." Since CNBC's story was published, Google has quibbled with the reporting. While CNBC originally quoted Vahdat as saying that the company would need to "double" its compute capacity every six months, a Google spokesperson told Gizmodo that the executive's words were taken out of context. The spokesperson further explained that Vahdat "was not talking about a capital buildout of anything approaching the magnitude suggested. In reality, he simply noted that demand for AI services means we are being asked to provide significantly more computing capacity, which we are driving through efficiency across hardware, software, and model optimizations, in addition to new investments." CNBC has since updated its reporting from "compute" to "serving" capacity.
Serving capacity would refer to Google's ability to handle a rising tide of user requests, while compute capacity would refer to the company's overall infrastructure dedicated to AI, including what is needed to train new models and other expenditures. When asked for further clarification about the difference between the two, the spokesperson said that the original headline "read as if he was implying that we are doubling the amount of compute we have -- either measured by the # of chips we operate or the amount of MW of electricity." Instead, "the capacity increases Amin described will be reached in a number of ways, including new more capable chips and model efficiency and optimization," they added. Whatever's happening under the hood, it would appear that Google, like its competitors, needs to scale up its operations to support its nascent AI infrastructure business. Vahdat's comments come not long after the tech giant reported some chunky profits from its Cloud business, with the company announcing it plans to ramp up spending in the coming year. During his presentation, Vahdat also reportedly claimed that Google needs to "be able to deliver 1,000 times more capability, compute, storage networking [than its competitors] for essentially the same cost and increasingly, the same power, the same energy level." He admitted that it "won't be easy" but said that "through collaboration and co-design, we're going to get there." The race to build data centers (or "AI infrastructure," as the tech industry calls it) is getting crazy. Like Google, Microsoft, Amazon, and Meta all claim they are going to ramp up their capital expenditures in an effort to build out the future of computing (cumulatively, Big Tech is expected to spend at least $400 billion in the next twelve months). As these facilities go up, they are causing all sorts of drama in the communities where they reside. Environmental and economic concerns abound.
Some communities have begun to protest data center projects and, in some cases, they're successfully repelling them. Still, given the sheer amount of money invested in this industry, it will be an ongoing fight for Americans who don't want the AI colossus in their backyards.

[4]

Google tells employees they need to double their work every 6 months to keep up with AI

Reducing reliance on third parties could help solve some cost and efficiency concerns

Google's AI and Infrastructure VP, Amin Vahdat, has reportedly warned employees that the company must double serving capacity every six months in order to keep up with demand for AI tools. CNBC reported the news hit staff in a company all-hands, during which Vahdat revealed that Google would need to scale "the next 1000x in 4-5 years." Vahdat noted all of this was needed while maintaining the same cost and power consumption, spelling out a challenging future of both colossal capacity increases and equally mighty efficiency improvements. Vahdat explained the 1000x scale, targeted for around the end of the decade, would need to come at "essentially the same cost and increasingly, the same power, the same energy level." Clearly, the roadmap consists of multiple elements. Google continues to work on expanding its infrastructure, like AI and cloud data centers, but it's also rolling out more of its own hardware (like TPUs) to reduce reliance on third-party companies. Nvidia, for instance, has profited hugely from that reliance. Google's seventh-generation TPU, Ironwood, claims a 30x power efficiency boost over 2018 models. And on those third-party concerns, many Nvidia chips are being flagged as 'sold out,' per The Verge, which has slowed down some rollouts across the industry, including Google's own AI features. Separately, Google CEO Sundar Pichai has also warned 2026 would be "intense" due to AI competition and compute demand. Google has publicly acknowledged AI bubble concerns, but Pichai deems underinvesting in AI even riskier.

[5]

As Google eyes exponential surge in serving capacity, analyst says we're entering 'stage two of AI' where bottlenecks are physical constraints | Fortune

Google's AI infrastructure boss warned the company needs to scale up its tech to accommodate a massive influx of users and complex requests being handled by AI products -- and it may be a sign that fears of a bubble are overblown. Amin Vahdat, a VP who leads the global AI and infrastructure team at Google, said during a presentation at a Nov. 6 all-hands meeting that the company needs to double its serving capacity every six months, with "the next 1000x in 4-5 years," CNBC reported. This refers to Google's ability to ensure that Gemini and other AI products depending on Google Cloud can still work well when queried by a skyrocketing number of users. That's different from compute, or the physical infrastructure involved in training AI. A Google spokesperson told Fortune that "demand for AI services means we are being asked to provide significantly more computing capacity, which we are driving through efficiency across hardware, software, and model optimizations, in addition to new investments," pointing to the company's Ironwood chips as an example of its own hardware driving improvements in computing capacity. In previous years, every hyperscaler -- think Google Cloud but also Amazon and Microsoft Azure -- rushed to increase compute in anticipation of an influx of AI users. Now, the users are here, said Shay Boloor, chief market strategist at Futurum Equities. But as each company ratchets up its AI offerings, serving capacity is emerging as the next major challenge to tackle. "We're entering the stage two of AI where serving capacity matters even more than the compute capacity, because the compute creates the model, but serving capacity determines how widely and how quickly that model can actually reach the users," he told Fortune. Google, with its vast capital expenditures and past strategic moves to develop its own AI chips, is likely capable of doubling its serving capacity every six months, said Boloor. 
Yet Google and its competitors are still facing an uphill battle, he added, especially as AI products start to deal with more complex requests, including advanced search queries and video. "The bottleneck is not ambition, it's just truly the physical constraints, like the power, the cooling, the networking bandwidth and the time needed to build these energized data center capacities," he said. However, the fact that Google is seemingly facing so much demand for its AI infrastructure that it is pushing to double its serving capacity so quickly might be a sign that gloomy predictions made by AI pessimists aren't entirely accurate, said Boloor. Such concerns sent all three major stock indexes down by 1.9% or more this past week -- including the tech-heavy Nasdaq. "This is not like speculative enthusiasm, it's just unmet demand sitting in backlog," he said. "If things are slowing down a bit more than a lot of people hope for, it's because they're all constrained on the compute and more serving capacity."

[6]

Google needs to double its AI serving capacity 'every six months' and scale 'the next 1000x in 4-5 years' according to an internal presentation

If there's one thing sure to ruin your day at work, it's receiving an unrealistic target from your higher-ups. Pour one out, then, for the Google employees who attended an all-hands meeting earlier this month, only to be told that the company needs to double its serving capacity every six months to meet AI compute demand. That's according to CNBC, as the news outlet got a look at a presentation reportedly given to employees by Google AI infrastructure chief Amin Vahdat. A slide entitled "AI compute demand" informed the team that "Now we must double every six months... the next 1000x in 4-5 years." CNBC also reports some direct quotes from the meeting, which was also said to be attended by Alphabet CEO Sundar Pichai. While Microsoft, Amazon, and Meta have been pouring huge sums of money into data center expansion in recent months to boost their own AI serving capabilities, it appears that Google's lofty expansion goals might not be receiving the same budget. Vahdat reportedly told employees that "[Google's] job is of course to build this infrastructure, but it's not going to outspend the competition, necessarily" and that the real goal will be providing infrastructure that is "more reliable, more performant, and more scalable than what's available anywhere else." That's not all. Vahdat is also claimed to have said Google needs to "be able to deliver 1,000 times more capability, compute, storage networking, for essentially the same cost and increasingly, the same power, the same energy level." "It won't be easy," the Google AI chief said. "But through collaboration and co-design, we're going to get there." That sounds like quite the task, doesn't it? I can only imagine the blank faces in the room as the Google engineers wrapped their heads around the idea of expanding their infrastructure on a truly gigantic scale, in record timeframes, without substantially increasing the budget -- both literally and figuratively.
Management consultancy firm McKinsey & Company estimates that AI data centers are expected to require $5.3 trillion in capital expenditures by 2030, or roughly $1 trillion over Nvidia's current market cap, if you prefer to think of it that way. Certainly, if efficiencies could be made to enable unbelievably rapid AI compute expansion without pushing data center power requirements and budgets further into the stratosphere, the company to do so would be in a prime position to make the most of the AI boom. If, indeed, it lasts that long to begin with, given mounting concerns over a massive, potentially economy-wrecking AI bubble. Still, they don't call it a moon shot for nothing. Get to work, Google AI infrastructure engineers. It sounds like an impossible task, but Vahdat seems to believe in your work ethic. You didn't need a lunch break anyway, did you?


Google's AI infrastructure chief reveals the company must double serving capacity every six months to meet soaring demand, targeting a 1000-fold increase over 4-5 years while maintaining cost and energy efficiency.
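The two figures on the slide, doubling every six months and "the next 1000x in 4-5 years," can be checked against each other with simple compounding. The sketch below is only an illustration of that arithmetic, not anything Google presented; the function names are hypothetical, and it assumes perfectly regular doublings.

```python
import math


def growth_factor(years: float, doubling_months: float = 6.0) -> float:
    """Capacity multiple after `years` if capacity doubles every `doubling_months`."""
    doublings = years * 12.0 / doubling_months
    return 2.0 ** doublings


def years_to_reach(target_multiple: float, doubling_months: float = 6.0) -> float:
    """Years needed to hit `target_multiple` at the given doubling period."""
    doublings = math.log2(target_multiple)
    return doublings * doubling_months / 12.0


# Compare the low end, midpoint, and high end of the slide's 4-5 year window.
for years in (4, 4.5, 5):
    print(f"{years} years -> {growth_factor(years):,.0f}x")

print(f"1000x takes about {years_to_reach(1000):.2f} years")
```

Under these assumptions, four years of six-month doublings gives 256x and five years gives 1,024x, so the 1000x target lines up with the five-year end of the range.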

Google Announces Massive AI Infrastructure Scale-Up

Google's AI infrastructure leadership has told employees that the company must dramatically expand its serving capacity to meet surging demand for artificial intelligence services. During an all-hands meeting on November 6, Amin Vahdat, Vice President of Machine Learning, Systems and Cloud AI at Google, said that the company must double its serving capacity every six months, with a goal of achieving "the next 1000x in 4-5 years" [1][2].

The presentation, viewed by CNBC, outlined Google's strategy to scale AI infrastructure while holding cost and energy consumption roughly at current levels. Google needs to "be able to deliver 1,000 times more capability, compute, storage networking for essentially the same cost and increasingly, the same power, the same energy level," Vahdat told employees [2].

Industry-Wide Infrastructure Race Intensifies

Vahdat's remarks come amid a broader industry push to expand AI infrastructure capacity. Alphabet recently raised its capital expenditure forecast for the second time this year to a range of $91 billion to $93 billion, with a "significant increase" expected in 2026 [2]. Hyperscaler peers Microsoft, Amazon, and Meta also boosted their capex guidance, and the four companies now expect to collectively spend more than $380 billion this year [2].

The competition extends beyond Google's immediate rivals. OpenAI is planning to build six massive data centers across the US through its Stargate partnership with SoftBank and Oracle, committing over $400 billion over the next three years to reach nearly 7 gigawatts of capacity [1]. The company faces similar capacity constraints serving its 800 million weekly ChatGPT users, with even paid subscribers regularly hitting usage limits for advanced features [1].

Technical Solutions and Efficiency Gains

Google plans to achieve its ambitious scaling goals through multiple approaches beyond raw infrastructure expansion. The company is leveraging its custom silicon, including the recent launch of its seventh-generation Tensor Processing Unit, called Ironwood, which Google claims is nearly 30 times more power efficient than its first Cloud TPU from 2018 [2][4].

A Google spokesperson said the added capacity is being driven through "efficiency across hardware, software, and model optimizations, in addition to new investments" [3], and Vahdat told employees that Google's job is not necessarily to outspend the competition [2]. The company also benefits from DeepMind, which has research on what AI models could look like in future years [2].

Market Implications and Physical Constraints

One analyst suggests Google's capacity challenges signal a shift in the AI industry's development phase. "We're entering the stage two of AI where serving capacity matters even more than the compute capacity, because the compute creates the model, but serving capacity determines how widely and how quickly that model can actually reach the users," explained Shay Boloor, chief market strategist at Futurum Equities [5].

The infrastructure demands reflect unmet user demand rather than speculative enthusiasm, according to Boloor. Physical constraints including power, cooling, and networking bandwidth are emerging as the primary bottlenecks, rather than a lack of ambition [5].

Google's infrastructure expansion faces additional challenges from supply chain constraints, with many Nvidia chips flagged as "sold out," slowing rollouts across the industry [4]. Google is also rolling out more of its own hardware, such as TPUs, to reduce reliance on third-party suppliers [4].

Summarized by

Navi
