Google's Android XR: Revolutionizing Augmented Reality with AI-Powered Glasses

43 Sources

[1]

Google's New AI Glasses - Android XR powered by Gemini 2.0

Google, in collaboration with Samsung and Qualcomm, has unveiled Android XR, a new platform that merges artificial intelligence (AI) with extended reality (XR) to transform wearable devices like glasses and headsets. Central to this innovation is the Gemini 2.0 AI model, which redefines how you interact with technology. By combining innovative hardware and software, Android XR provides an open platform for developers, manufacturers, and users to create applications that seamlessly integrate into everyday life, enhancing productivity, communication, and convenience. Imagine a world where your glasses do more than just help you see -- they guide you through unfamiliar streets, translate foreign languages in real time, or even teach you how to cook a new recipe, all without lifting a finger. Whether it's glasses or headsets, these devices promise to make technology more intuitive, accessible, and, most importantly, useful. At the heart of this breakthrough is Gemini 2.0, Google's advanced AI model, which powers natural, real-time interactions tailored to your needs. But this isn't just about flashy tech -- it's about solving real problems and enhancing everyday experiences. From navigating a busy city to managing tasks hands-free, Android XR is designed to simplify life in ways that feel effortless. In this article, we'll explore how this platform works, the exciting possibilities it unlocks, and what it means for the future of wearable technology. Get ready to see the world -- and your tech -- in a whole new way. Android XR establishes a robust foundation for the next generation of wearable technology. Using Google's existing augmented reality (AR) tools, including ARCore, Lens, and geospatial APIs, the platform enables precise spatial computing. This capability allows devices to interpret and interact with the physical world in real time, opening up new possibilities for immersive and practical applications. 
The platform is designed to be open and collaborative, encouraging developers and device manufacturers to contribute to a diverse ecosystem of applications. By fostering innovation, Android XR aims to unlock new opportunities for both personal and professional use. Whether it's enhancing workplace efficiency or simplifying daily tasks, this platform is poised to redefine how technology integrates into your life. At the core of Android XR is the Gemini 2.0 AI model, a sophisticated system capable of processing complex inputs and delivering intuitive, real-time interactions. This AI model is designed to adapt to your needs, ensuring a seamless and responsive experience across various scenarios. For instance, imagine navigating a bustling city with turn-by-turn directions displayed directly in your field of view. The AI not only guides you but also adapts to your surroundings, providing contextual assistance. Similarly, when cooking a new recipe, Gemini 2.0 can offer step-by-step instructions, adjusting to your pace and keeping you on track. This level of real-time support simplifies complex tasks, making them more efficient and manageable. Android XR supports two primary categories of wearable devices, headsets and glasses, each tailored to specific use cases and user preferences. For example, XR glasses can enhance your travel experience by offering real-time language translation and navigation assistance. Whether you're exploring a foreign city or attending a business meeting, these devices ensure you stay connected and informed. The development of Android XR is the result of a strategic partnership between Google, Samsung, and Qualcomm, each contributing its own expertise. This collaboration ensures that Android XR is not only technologically advanced but also user-friendly and accessible.
By pooling their resources and expertise, these industry leaders aim to accelerate the adoption of XR technologies and create a unified ecosystem that benefits developers, manufacturers, and users alike. The versatility of Android XR opens the door to a wide range of practical applications, enhancing both personal and professional experiences. These applications can transform everyday activities, making them more efficient, engaging, and accessible. The launch of Android XR marks a pivotal moment in the evolution of wearable technology. By transitioning from traditional screens to XR-enabled glasses and headsets, Google envisions a future where technology becomes an unobtrusive and integral part of your daily life. This shift has the potential to redefine how you interact with the digital and physical worlds, creating new opportunities for innovation and integration. As AI and XR technologies continue to advance, the Android XR platform is expected to expand the Android ecosystem, encouraging broader adoption across industries such as healthcare, education, and entertainment. By fostering collaboration and innovation, Google, Samsung, and Qualcomm are paving the way for a future where wearable devices are not just tools but essential companions in your everyday life.

[2]

Google AI Glasses and Android XR : The Future of Augmented Reality

Imagine a world where your glasses do more than just help you see -- they guide you through unfamiliar streets, translate foreign languages in real time, or even assist you with cooking dinner. Sounds like something out of a sci-fi movie, right? Well, Google, in partnership with Samsung and Qualcomm, is turning that vision into reality with their latest innovation: Android XR. This new platform combines augmented reality (AR) and artificial intelligence (AI) to seamlessly integrate digital tools into your everyday life. Whether it's navigating a new city or tackling a home improvement project, Android XR promises to make these tasks more intuitive, immersive, and efficient. But what exactly does this mean for you? Think AI-powered glasses that feel like an extension of your smartphone or headsets that transport you into fully immersive digital environments. Android XR isn't just about flashy tech -- it's about making technology work for you in ways that feel natural and practical. In this guide by AIGRID explore how this platform, powered by tools like Gemini AI and ARCore, is set to redefine the way we interact with the world around us. Android XR is Google's latest platform, purpose-built to deliver enhanced augmented reality experiences via wearable devices. By building on existing AR technologies such as ARCore and geospatial APIs, it creates a robust ecosystem for developers and users alike. The platform bridges the gap between the physical and digital realms, allowing you to interact with your surroundings in more intuitive and meaningful ways. Whether you are navigating a new city, collaborating on a project, or exploring creative ideas, Android XR provides tools that make these tasks more immersive and efficient. This platform is not just a technological upgrade but a reimagining of how AR can be integrated into daily life. 
By focusing on usability and adaptability, Android XR ensures that its applications are both practical and engaging, catering to a wide range of needs. The Android XR platform introduces two distinct categories of hardware, immersive headsets and AI-powered glasses, each designed to cater to specific use cases and preferences. This hardware ecosystem ensures that Android XR can cater to both immersive and practical applications, making it versatile enough to suit a variety of user needs. At the core of Android XR lies Gemini AI, Google's advanced artificial intelligence engine. This technology processes real-world inputs in real time, delivering contextual assistance that is both relevant and actionable. For example, while traveling, Gemini AI can overlay navigation directions, translate foreign text, or provide insights about landmarks and local attractions. In your daily life, it can guide you through complex tasks such as cooking, assembling furniture, or troubleshooting appliances. By combining real-time reasoning with AR capabilities, Gemini AI ensures that the information you receive is not only accurate but also tailored to your specific needs. This integration of AI and AR creates a dynamic user experience that adapts to your environment and activities, making technology feel more intuitive and accessible. Android XR is designed to enhance various aspects of your life by offering practical and innovative solutions. Its applications highlight the platform's versatility, showcasing its potential to improve both practical tasks and leisure activities. The development of Android XR is a collaborative effort between Google, Samsung, and Qualcomm. Each partner brings unique expertise to the table, ensuring a well-rounded and powerful platform. This partnership fosters a cohesive ecosystem that supports developers, designers, and manufacturers in creating innovative applications and devices.
By working together, these companies ensure that Android XR integrates seamlessly with existing technologies while paving the way for future advancements. Google's roadmap for Android XR begins with the launch of immersive headsets, starting with Samsung's Project Moohan device, which is set to debut next year. This initial rollout marks the beginning of a broader adoption of AR and AI technologies in wearable devices. As the platform evolves, it is expected to introduce even more features and applications, further enhancing its utility and appeal. By integrating AR and AI into everyday interactions, Android XR has the potential to redefine how you experience and interact with technology. Whether through immersive headsets or AI-powered glasses, this platform offers a glimpse into a future where digital tools are seamlessly embedded into your daily life, making tasks more intuitive, efficient, and engaging. Android XR stands out with several innovative features that enhance its usability and functionality. These features underscore the platform's commitment to delivering a user-friendly and versatile AR experience, making Android XR a promising addition to the world of wearable technology.

[3]

Android XR: Google and Samsung bet big to finish Vision Pro

Google and Samsung have announced the development of Android XR, a new operating system aimed at enhancing virtual and augmented reality experiences through headsets and glasses. This collaboration marks a significant entry into the wearable tech market, directly competing with Apple's Vision Pro and Meta's Quest 3. The first headset, code-named Project Moohan, will be launched in 2025, although pricing details remain undisclosed. Android XR seeks to leverage advancements in AI to deliver seamless interactions between users and devices. The platform is designed for headsets that provide immersive environments while maintaining interface options for real-world engagement. Gemini, Google's AI assistant, will be integrated, enabling conversational interactions and supporting user tasks such as planning and research. The launch of Android XR highlights Google and Samsung's efforts to create a supportive ecosystem for developers and device manufacturers. Tools like ARCore, Jetpack Compose, and OpenXR will facilitate app development, ensuring a range of content is available at launch. Companies including Lynx, Sony, and XREAL are collaborating on hardware options powered by Android XR, indicating a broad intent to meet the diverse needs of consumers and businesses alike. The anticipated Project Moohan headset aims to provide a versatile viewing experience, integrating popular Google applications like YouTube, Google Photos, and Google Maps into the XR environment. Users will have access to multitasking features with Chrome on virtual screens, while Circle to Search will offer immediate information through simple gestures. Android applications currently available will also be compatible, allowing users to enjoy their favorite mobile experiences. Looking towards the future, Android XR will extend beyond headsets to include stylish glasses designed for daily wear. 
These glasses will integrate Gemini for easy access to real-time information, such as directions and translations, presented seamlessly in the user's line of sight. Initial testing is set to begin soon with a small group of users, aiming to refine product features and address privacy concerns. The partnership between Google and Samsung reflects a strategic push against established competitors like Apple and Meta, with both companies using their respective strengths in AI and consumer technology. Analysts expect Samsung to price its XR headset below Apple's $3,499 Vision Pro, positioning itself favorably in a market still considered niche. This market dynamic creates opportunities for growth and experimentation, making XR products a focus for ongoing technological development. Despite the excitement around these advancements, experts caution that immediate demand may remain low, with wearable tech categorized as "nice to have" rather than essential. The history of Google's past ventures into wearable tech, such as Google Glass, serves as a reminder of the challenges faced in gaining widespread adoption. However, the integration of Google's AI capabilities into the Android XR operating system may provide a new avenue for success as the competition heats up. Baird analyst Ted Mortonson believes the Android XR platform could influence how users interact with technology, potentially changing consumer expectations. As the launch date approaches, interest in how these developments will reshape the landscape of wearable technology continues to grow. The industry is watching closely, understanding that both AI and XR have transformative potential and may soon play a greater role in everyday technology interactions. Currently, the focus lies on shaping Android XR's ecosystem and preparing for its market introduction, with open invitations extended to developers and creators to contribute to its advancement.

[4]

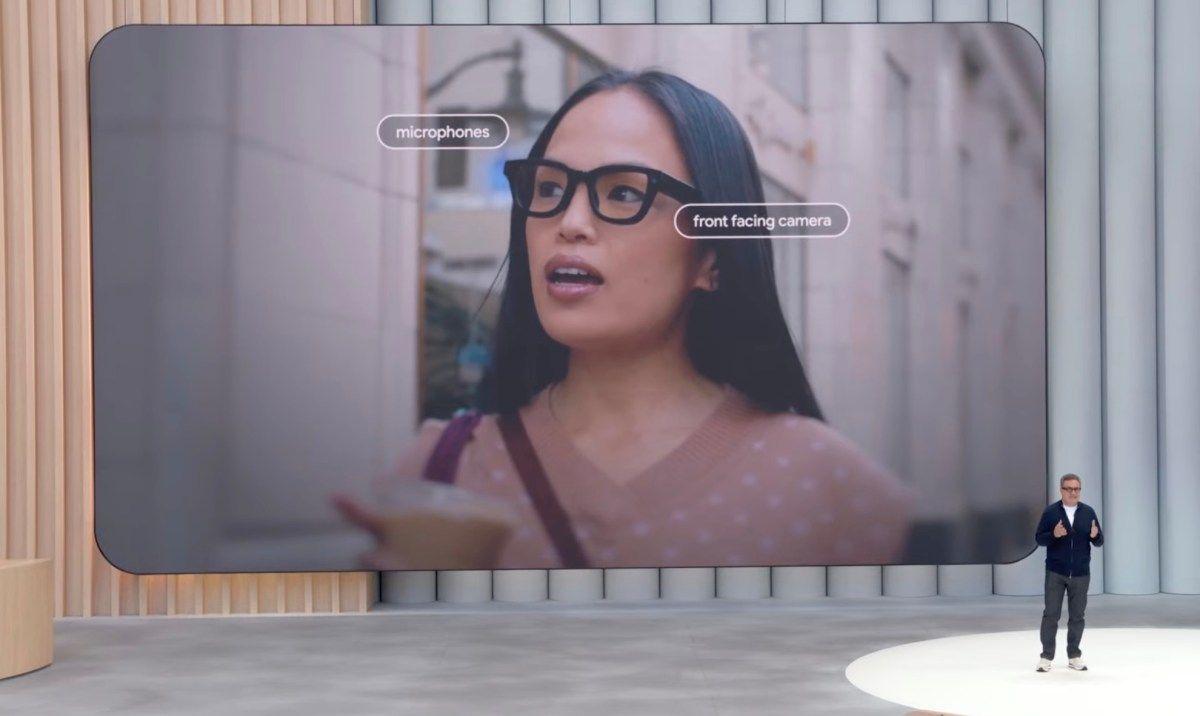

How Google's AI Glasses Are Redefining Augmented Reality with Android XR

Google, in collaboration with Samsung, is transforming the landscape of augmented reality (AR) and artificial intelligence (AI) with the introduction of the Android XR platform. Powered by the Gemini 2.0 AI engine, this advanced system seamlessly integrates AR and AI into daily life through wearable technology. Focusing on accessibility, real-time functionality, and intuitive interaction, these AI glasses aim to redefine how you engage with both digital and physical worlds. By merging innovative technology with practical design, Google is paving the way for a more connected and immersive future. Imagine a world where your glasses do more than help you see -- they help you navigate, communicate, and interact with your surroundings in ways you never thought possible. Whether you're walking through a bustling city, managing a packed schedule, or staying connected without constantly reaching for your phone, technology that blends seamlessly into your daily life feels like a dream. But what if that dream is closer to reality than you think? Google, in collaboration with Samsung, is on the brink of transforming how we experience technology with the Android XR platform and AI-powered glasses. These aren't just gadgets -- they're tools designed to make life simpler, smarter, and more intuitive. At the heart of this innovation lies the Gemini 2.0 AI system, a powerhouse of intelligence that promises to transform how we interact with both digital and physical worlds. From projecting real-time navigation onto your lenses to offering contextual information at a glance, these glasses are more than just a tech upgrade -- they're a glimpse into a future where technology works with you, not against you. What makes these AI glasses truly exciting isn't just their features but their focus on accessibility and practicality, making sure they fit seamlessly into your everyday routine. So, how exactly does Google plan to make this vision a reality? 
The Android XR platform is a next-generation AR and AI ecosystem co-developed by Google and Samsung. Specifically tailored for headsets and glasses, it provides developers with the tools to create immersive and practical applications. This platform minimizes user friction by allowing intuitive interactions, making AR and AI more accessible than ever before. Whether you're navigating a crowded city, managing complex tasks, or simply enhancing your daily routines, the Android XR platform serves as the foundation for a smarter, more connected experience. By using this platform, developers can create applications that seamlessly blend digital content with the physical world. The result is a system that not only enhances productivity but also enriches your overall interaction with technology. At the heart of the Android XR platform lies the Gemini 2.0 AI system, a sophisticated engine capable of processing multiple inputs such as voice commands, visual data, and contextual cues. This system delivers natural, immersive experiences by using spatial computing to ensure that digital overlays are contextually relevant and seamlessly integrated into your environment. For example, Gemini 2.0 can project real-time navigation directions or display contextual information directly onto your glasses' lenses. This capability enhances your interaction with the world around you, allowing you to stay informed and connected without disrupting your focus on the physical environment. By combining advanced AI processing with intuitive design, Gemini 2.0 represents a significant step forward in wearable technology. Google's AI glasses are designed with both practicality and comfort in mind, offering a lightweight form factor that resembles traditional eyewear. This design ensures that the glasses are suitable for extended use, making them a versatile tool for various scenarios. 
Key features include: This combination of functionality and comfort positions the glasses as a practical solution for both personal and professional use, bridging the gap between technology and everyday life. The Android XR platform supports a wide range of applications, catering to both episodic and continuous use cases. These applications demonstrate the versatility of XR technology and its potential to enhance various aspects of your life: For instance, while navigating a busy city, the glasses can project step-by-step navigation instructions onto your lenses, helping you stay oriented without the need to check your phone. Similarly, in professional settings, they can provide instant access to critical information, streamlining workflows and improving efficiency. Google's partnership with Samsung and other hardware providers, such as Sony XR, underscores the importance of a unified ecosystem. By collaborating with these industry leaders, Google ensures seamless integration between hardware, software, and user experience. This collaborative approach accelerates innovation and fosters a robust developer community, allowing the creation of diverse applications tailored to your needs. Through these partnerships, Google is not only advancing the capabilities of AR and AI but also making sure that these technologies are accessible and practical for a wide range of users. This emphasis on collaboration highlights the company's commitment to delivering a cohesive and user-friendly experience. The potential applications for AI glasses are vast and varied, offering solutions that simplify and enrich your daily life. Here are a few examples of how these glasses could transform your interactions with technology: These use cases illustrate how AI glasses can bridge the gap between the digital and physical worlds, making technology more intuitive and accessible. 
By integrating AR and AI into wearable devices, Google is allowing a new level of interaction that enhances both convenience and functionality. Google's vision for AI glasses aligns with similar efforts by competitors like Meta and Apple, who are also exploring the integration of AR and AI into wearable devices. Meta's AR glasses and Apple's Vision Pro share the goal of creating immersive and practical experiences. However, Google's emphasis on accessibility and real-world functionality sets it apart. By prioritizing lightweight designs and practical applications, Google aims to make AI glasses a mainstream tool rather than a niche product. This focus on usability ensures that the technology is not only innovative but also relevant to everyday life, positioning Google as a leader in the wearable technology space. Currently in the late prototype stages, Google's AI glasses are not yet available to the public. However, the company's commitment to innovation and collaboration suggests that these glasses could soon become a staple of everyday life. By combining AR, AI, and wearable technology, Google envisions a future where computing is more natural, intuitive, and seamlessly integrated into your surroundings. As the development of these glasses progresses, the potential for new applications and use cases continues to grow. From enhancing productivity to simplifying daily tasks, Google's AI glasses represent a significant step forward in the evolution of wearable technology.

[5]

Android XR -- everything you need to know about Google's answer to Apple's visionOS

Google is introducing its own spin on headsets and smart glasses with the announcement of Android XR: a new operating system built for extended reality (XR) devices. As announced, Google will fully use Gemini AI and collaborate with Samsung and Qualcomm to bring "helpful experiences to headsets and glasses" through Android XR. The first device to show off what it can do is Samsung's Project Moohan -- which is set to be available in 2025. With Android now entering the XR space, the Apple Vision Pro and visionOS will see some heavy competition. But what is Android XR, and what can it do for a new generation of AI-powered headsets and glasses? Here's everything we know so far to answer your burning questions about Android XR. Android XR is Google's new operating system for extended reality devices, including XR headsets and glasses. It extends the tech giant's Android operating system, which already runs on smartphones, tablets and TVs, to a new category of devices. The operating system aims to allow developers and device makers to create new XR experiences for headsets and glasses using familiar Android apps and tools. This includes Google's suite of apps, such as Google Photos, Google Maps, Google TV, Chrome, YouTube, and more. This means you'll get to use these apps in a virtual landscape, similar to what the Meta Quest 3 and Quest 3S mixed-reality (MR) experiences and, of course, the Apple Vision Pro have to offer. As Google states, Android XR allows developers to build apps and games for its devices. The initial tools include ARCore, Android Studio, Jetpack Compose, Unity and OpenXR. Along with partnering with Samsung to develop the operating system, Google will get support from chip maker Qualcomm to power these devices. Qualcomm partners like Sony, Lynx, and XREAL can make their own devices using Android XR. "Advancements in AI are making interacting with computers more natural and conversational," the VP of XR at Google announced in the blog post.
"This inflection point enables new extended reality (XR) devices, like headsets and glasses, to understand your intent and the world around you, helping you get things done in entirely new ways." It will be a while before we see Android XR in action on headsets, but we're already excited about Android XR in smart glasses. If you're familiar with the capabilities of the Apple Vision Pro or Meta Quest 3 headsets, imagine Android XR adding a "Google" spin on things. While developers will create different XR experiences based on Android XR, we already have a sneak peek at what it can do. For example, headsets can switch from a fully immersive virtual environment to a real-world setting. As with Apple and Meta headsets, it allows you to "fill the space around you" with different apps and content -- layering them on top of what you can see in the real world and a virtual setting. Google Gemini will also be a highlight. If asked, the AI assistant will tell you what you see and allow you to control the device. The Ray-Ban Meta smart glasses have a similar approach with AI capabilities, and Google is following suit. Then there's the apps. As mentioned, Google will bring many of its apps straight to an Android XR device, but in a "reimagined" way. So, you can watch YouTube videos and shows on Google TV on a virtual big screen, see your Google Photos pictures and albums in 3D, and create several virtual screens when surfing the web on Google Chrome. Plus, Circle to Search is added, allowing you to look up anything you see with a "simple gesture" (which I expect will be a circular motion with a finger). As another perk, mobile and tablet apps on the Google Play Store will be available for Android XR headsets, with Google announcing that more XR-focused apps, games and content will be available next year. As for glasses, Gemini will take center stage, with Google wanting its AI to be "one tap away." 
As expected, Android XR glasses will be able to translate what you see and hear in real time, offer directions to destinations you need to go and show summaries of messages you received on your phone. Google expects Android XR to "seamlessly" work with other Android devices, and since it runs on the same operating system, I can imagine it doing so. As with the Ray-Ban Meta glasses, Google wants to make Android XR "stylish, comfortable glasses you'll love to wear every day." There's still much to learn about Android XR, but we already know what Google's new operating system will bring to XR headsets and glasses. It aims to be a unified platform for developers and manufacturers to create new XR experiences and devices. We will surely see even more headsets and glasses targeting the Apple Vision Pro and Ray-Ban Meta smart glasses. Google will soon be testing Android XR on prototype glasses with a small group of users, but there's no word yet on when smart glasses with Android XR support will arrive. In the meantime, we have Samsung's XR headset to look forward to, which will be the first device to bring Android XR.

[6]

Android XR Is Google's Final Shot to Overthrow Meta's AR, VR

Samsung's first XR headset, codenamed Project Moohan, is expected to debut next year. Earlier this year, Meta built the 'Android' of AR/VR devices. But its biggest competitor, Google, took one notch up and beat them in the game by releasing Android XR, integrating AI, AR, and VR for innovative headset and glasses experiences. The tech giant partnered with Samsung for this product. The first extended reality (XR) headset, codenamed Project Moohan, is expected to debut next year. Google expects more hardware devices, including glasses, to adopt Android XR in the future. Google said it wants to offer a variety of stylish and comfortable glasses that not only people would enjoy wearing daily but also work seamlessly with other Android devices. This headset will allow users to effortlessly transition between virtual spaces and the real world. Google Gemini offers natural conversations to assist users in interacting with devices, planning, researching, and completing tasks. In a recent interview, Google DeepMind chief Demis Hassabis shared that the company is exploring new form factors and devices for more intuitive AI interactions. Using cooking as an example, Hassabis pointed out that hands are often occupied, and while seeking advice from AI agent Astra, one would prefer a wearable device that they wouldn't have to hold. Google's track record with AR/VR has been less than favourable. Last year, the tech giant cancelled its 'Project Iris', while Google Glass was effectively discontinued in January 2015. However, with Android XR, Google is attempting a comeback in this category. This time, the tech giant is taking a different approach. Instead of focusing on hardware, the company is developing an operating system similar to what Meta is pursuing with its Horizon OS. "Played around with the Android XR simulator. Seems like a great foundation for a spatial OS, much more so than Horizon OS, in my opinion. 
Overall, spatial aspects are quite similar to visionOS, which is a good thing," Dylan McDonald, owner of Sun Apps LLC, said in a post on X. "Android XR worked well in my tests on the Samsung headset, but the interface is essentially an 'Android-ified' version of visionOS. I think most consumers wouldn't even be able to tell them apart. It can also run phone/tablet apps from Google Play," Bloomberg journalist Mark Gurman, who tested the product, said. Android XR is supported by tools like ARCore, Android Studio, Jetpack Compose, Unity, and OpenXR, making it easier for developers to create apps for upcoming devices. "Literally all AndroidXR has to do to win is be more open than visionOS and be less predatory than Meta," a user said on X. This time, Google has gained an edge with Astra, its smart assistant that processes text, voice, images, and video. The assistant allows it to understand and respond to situations more naturally and in real-time. In the latest Project Astra demo video, Google introduced its new glasses, equipped with a camera, and an internal display and always connected to Astra for instant information. Interestingly, these glasses can also recall things one does and says. Google isn't alone in this competition. Meta is already all-in with its Ray-Ban Meta glasses. According to Yann LeCun, Meta's chief AI scientist, smartphones are expected to be replaced by augmented reality glasses in the next 10-15 years. Notably, during his recent trip to India, he used these glasses to take selfies and photos and was also spotted wearing them in various public appearances. Moreover, at Meta Connect 2024, the social media giant unveiled Orion, which Meta chief Mark Zuckerberg described as "the most advanced AR glasses the world has ever seen". The company also launched the Quest 3S VR headset, priced at $299.99 - almost half the price of its predecessor Quest 3. Meanwhile, Apple hasn't been able to taste much success with its Apple Vision Pro. 
According to a report, Apple has sold fewer than 5 lakh (500,000) units of the device, with many buyers using it less than Apple had hoped for. On a recent Y Combinator (YC) Podcast, Diana Hu, a group partner at YC, discussed how the hardware in AR/VR glasses must become more lightweight, a factor she feels is hindering their popularity. "There are actually constraints with physics to fit all that hardware into such a small form factor. Fitting enough computing power and optics is just super challenging," she said. "There's still more actual engineering and physics that needs to be discovered, and that's it. I think the algorithms are there, but it's just lots of really hard hardware and optics problems," she added. Many expect OpenAI to announce a hardware device soon, as the company is collaborating with legendary former Apple design chief Jony Ive. Ive's design firm, LoveFrom, which he co-founded after leaving Apple in 2019, is working on the initiative. Currently, it is in its early stages with a small team of about ten people, including former Apple designers Tang Tan and Evans Hankey, who played key roles in developing the iPhone. OpenAI recently launched its advanced voice mode with vision, which appears to be the next logical step if the company intends to enter the AI hardware market. So far, no company has fully succeeded. While startups like Rabbit and Humane AI have emerged, they have been struggling to win over customers.

[7]

Google lays out its vision for an Android XR ecosystem

With VR, augmented reality and mixed reality in play, it's much more than Google Glass 2.0. Google's latest push into extended reality is taking shape. While the company isn't entirely ready to show off any products just yet, it has laid out a vision for a unified Android XR ecosystem that will span a range of devices, such as virtual reality headsets and mixed reality glasses. This is evidently Alphabet's latest attempt to compete with the likes of Meta and Apple on the extended reality front. The company has dabbled in this arena in the past with the likes of Daydream, among other programs that have since been shut down. Android XR seems much more ambitious, and having some big-name partners on board from the jump indicates that Alphabet is much more serious about extended reality this time around. Google has been beavering away on XR behind the scenes despite shutting down some of its higher-profile projects in that realm. "Google is not a stranger to this category," Sameer Samat, president of Android Ecosystem at Google, told reporters ahead of the announcement. "We, like many others, have made some attempts here before. I think the vision was correct, but the technology wasn't quite ready." One area where Google thinks that technology has advanced to the point where it's ready to try again with XR is artificial intelligence. Gemini will be deeply integrated into Android XR. By tapping into the power of the chatbot and having a user interface based around voice and natural conversation, Google and its partners are aiming to deliver experiences that aren't exactly possible to pull off using gestures and controllers. "We are fully in what we refer to as the Gemini Era, and the breakthroughs in AI with multi-modal models are giving all of us totally new ways of interacting with computers," Samat said. "We believe a digital assistant integrated with your XR experience is the killer app for the form factor, like what email or texting was for the smartphone."
Google believes that smart glasses and headsets are a more natural form factor to explore this tech with, rather than holding up your smartphone to something in the world that you want Gemini to take a look at. To that end, the wide array of XR devices that are popping up, such as VR headsets with passthrough (the ability to see the outside world while wearing one), is another factor in Google's push into that space. We'll get our first real look at Android XR products next year. The first headset, currently dubbed Project Moohan (which means "infinity" in Korean), will feature "state-of-the-art displays," passthrough and natural multi-modal input, according to Samsung. It's slated to be a lightweight headset that's ergonomically designed to maximize comfort. Renderings of the Moohan prototype (pictured above) suggest the headset will look a little like the Apple Vision Pro, perhaps with a glass visor on the front. Along with the headset, Samsung is working on Google XR glasses, with more details to come soon. But nailing the hardware won't matter much if you can't do anything interesting with it. As such, Google is now looking to developers to create apps and products for Android XR. The company is offering developers APIs, an emulator and hardware development kits to help them build out XR experiences. On its side of things, Google is promising an "infinite desktop" for those using the platform for productivity. Its core apps are being reimagined for extended reality as well. Those include Chrome, Photos, Meet, Maps (with an immersive view of landmarks) and Google Play. On top of that, mobile and tablet apps from Google Play are said to work out of the box. On YouTube, it looks like you'll be able to easily transition from augmented reality into a VR experience. And in Google TV, you'll be able to switch from an AR view to a virtual home movie theater when you start a film.
A demo video showed a headset wearer using a combination of their voice and a physical keyboard and mouse to navigate a series of Chrome windows. Circle to Search will be one of the many features. After you've used the tool to look up something, you can use a Gemini command to refine the results. It'll be possible to pull 3D image renderings from image search results and manipulate them with gestures. As for AR glasses -- essentially next-gen Google Glass -- it seems that you'll be able to use those to translate signage and speech, then ask Gemini questions about the details of, say, a restaurant menu. Other use cases include advice on how to position shelves on a wall (and perhaps asking Gemini to help you find a tool you put down somewhere), getting directions to a store and summarizing group chats while you're on the go. Thanks to advances in technology, AR glasses look much like regular spectacles these days, as we've seen from the likes of Meta and Snap. That should help Google avoid the whole "Glass-holes" discourse this time around given that there shouldn't be an obscenely obvious camera attached to the front. But the advancements might give cause for concern when it comes to privacy and letting those caught in the camera's cone of vision know that they're perhaps being filmed. Privacy is an important consideration for Android XR. Google says it's building new privacy controls for Gemini on the platform. More details about those will be revealed next year. Meanwhile, games could play a major factor in the success of Android XR. They're a focus for Meta's Quest headsets, of course. Google is hoping to make it as easy as possible for developers to port their games to its ecosystem. Not only that, Unity is one of the companies that's supporting Android XR. Developers will be able to create experiences for it using the engine.
Unity says it will offer full support for Android XR, including documentation and optimizations to help devs get started. They can do that now in public experimental versions of Unity 6. Resolution Games (Demeo) and Google's own Owlchemy Labs (Job Simulator) are among the studios that plan to bring titles built in Unity to Android XR. The process is said to be straightforward. "This is as simple a port as you're ever going to encounter," Owlchemy Labs CEO Andrew Eiche said in a statement. Meanwhile, Unity has teamed up with Google and film director Doug Liman's studio 30 Ninjas to make a "new and innovative immersive film app that will combine AI and XR to redefine the cinematic experience." Since gaming is set to play a sizable role in Android XR, it stands to reason that physical controllers will still be a part of the ecosystem. Not many people are going to want to play games using their voice. But that's the key: Android XR is shaping up to be a broad ecosystem of devices, not just one. This strategy has paid dividends for Google, given the spectrum of phones, tablets, cars and TVs that variants of Android are available on. It will be hoping to replicate that success with Android XR.

[8]

Samsung's AR Headsets Will Run on AI-Centric 'Android XR'

Samsung's 'Project Moohan' will also facilitate Gemini and Galaxy AI. It will be the first headset running on Google's Android XR. Google and Samsung are again letting their Wonder Twins powers activate, this time in the form of an all-new VR and AR platform dubbed "Android XR." The companies dropped the details of their plans for upcoming headsets and glasses that will arrive soon. All that new hardware will have a UI that will facilitate Google's Gemini AI model in a way that will offer controls "beyond gestures." If Google and Samsung are following Apple into the VR rabbit hole, they're doing it with a more cautious descent. The first device to use this all-new Android XR isn't actually here yet. Samsung calls it "Project Moohan," based on the Korean word for "infinity." As you can see above, Samsung provided a rendering of its initial headset design. Based on the image, Samsung's initial XR headset has a constrained design without many obvious AR sensors like the ones you see on the Meta Quest 3, Quest 3S, or Apple Vision Pro. Samsung hinted about its XR plans last month but didn't offer many specifics about its upcoming devices. The company said its headsets and glasses will have internal displays and passthrough capabilities. The big difference between these goggles and glasses and others on the market is that they're AI-forward. Samsung said you should be able to control the device with your voice, akin to the Ray-Ban Meta smart glasses. The device will likely have some kind of eye and hand tracking, but Samsung said you should be able to use "natural conversation" to access the device or any of your apps. Android XR is supposed to facilitate virtual reality, augmented reality, and mixed reality features with a host of typical Android apps like Google Maps or YouTube. Samsung shared a video of this Android XR platform in which a user clicks on a YouTube VR video set in Florence, Italy.
This feature is also available in the Meta Quest through the still-glitchy YouTube app. Just this week, Google announced its Gemini 2.0 model. The Android maker said the whole point of its new model is to facilitate AI agents -- essentially AI that can take over control of your device on your behalf. That's the general concept behind Android XR as well. In a statement, Google's president of Android, Sameer Samat, said the multimodal AI in his company's AR platform will "enable natural and intuitive ways" to use these devices. Google and Samsung seem to indicate they, too, have bought into the idea of AR glasses eventually eclipsing the phone as everybody's go-to tech. Meta is currently building out its Orion true AR glasses. Apple is also looking to get into the smart glasses game. Conversely, Google abandoned its hold in the AR glasses space after the end of Google Glass. Now, it's happy to let Samsung take the reins while it builds out its new form of the Android ecosystem.

[9]

Google Announces Android XR

We started Android over a decade ago with a simple idea: transform computing for everyone. Android powers more than just phones -- it's on tablets, watches, TVs, cars and more. Now, we're taking the next step into the future. Advancements in AI are making interacting with computers more natural and conversational. This inflection point enables new extended reality (XR) devices, like headsets and glasses, to understand your intent and the world around you, helping you get things done in entirely new ways. Today, we're introducing Android XR, a new operating system built for this next generation of computing. Created in collaboration with Samsung, Android XR combines years of investment in AI, AR and VR to bring helpful experiences to headsets and glasses. We're working to create a vibrant ecosystem of developers and device makers for Android XR, building on the foundation that brought Android to billions. Today's release is a preview for developers, and by supporting tools like ARCore, Android Studio, Jetpack Compose, Unity, and OpenXR from the beginning, developers can easily start building apps and games for upcoming Android XR devices. For Qualcomm partners like Lynx, Sony and XREAL, we are opening a path for the development of a wide array of Android XR devices to meet the diverse needs of people and businesses. And, we are continuing to collaborate with Magic Leap on XR technology and future products with AR and AI.

Blending technology with everyday life, with help from AI

Android XR will first launch on headsets that transform how you watch, work and explore. The first device, code named Project Moohan and built by Samsung, will be available for purchase next year. With headsets, you can effortlessly switch between being fully immersed in a virtual environment and staying present in the real world.
You can fill the space around you with apps and content, and with Gemini, our AI assistant, you can even have conversations about what you're seeing or control your device. Gemini can understand your intent, helping you plan, research topics and guide you through tasks. We're also reimagining some of your favorite Google apps for headsets. You can watch YouTube and Google TV on a virtual big screen, or relive your cherished memories with Google Photos in 3D. You'll be able to explore the world in new ways with Google Maps, soaring above cities and landmarks in Immersive View. And with Chrome, multiple virtual screens will let you multitask with ease. You can even use Circle to Search to quickly find information on whatever's in front of you, with just a simple gesture. Plus, because it's Android, your favorite mobile and tablet apps from Google Play will work right out of the box, with even more apps, games and immersive content made for XR arriving next year. Android XR will also support glasses for all-day help in the future. We want there to be lots of choices of stylish, comfortable glasses you'll love to wear every day and that work seamlessly with your other Android devices. Glasses with Android XR will put the power of Gemini one tap away, providing helpful information right when you need it -- like directions, translations or message summaries without reaching for your phone. It's all within your line of sight, or directly in your ear. As we shared yesterday, we'll soon begin real-world testing of prototype glasses running Android XR with a small group of users. This will help us create helpful products and ensure we're building in a way that respects privacy for you and those around you.

Building the XR ecosystem

Android XR is designed to be an open, unified platform for XR headsets and glasses. For users, this means more choice of devices and access to apps they already know and love.
For developers, it's a unified platform with opportunities to build experiences for a wide range of devices using familiar Android tools and frameworks. We're inviting developers, device makers and creators everywhere to join us in shaping this next evolution of computing. If you're a developer, check out the Android Developers Blog to get started. For everyone else, stay tuned for updates on device availability next year and learn more about Android XR on our website. Source: Google Android XR

[10]

Google renews push into mixed reality headgear

Google is ramping up its push into smart glasses and augmented reality headgear, taking on rivals Apple and Meta with help from its sophisticated Gemini artificial intelligence. The internet titan on Thursday unveiled an Android XR operating system created in a collaboration with Samsung, which will use it in a device being built in what is called internally "Project Moohan," according to Google. The software is designed to power augmented and virtual reality experiences enhanced with artificial intelligence, XR vice president Shahram Izadi said in a blog post. "With headsets, you can effortlessly switch between being fully immersed in a virtual environment and staying present in the real world," Izadi said. "You can fill the space around you with apps and content, and with Gemini, our AI assistant, you can even have conversations about what you're seeing or control your device." Google this week announced the launch of Gemini 2.0, its most advanced artificial intelligence model to date, as the world's tech giants race to take the lead in the fast-developing technology. CEO Sundar Pichai said the new model would mark what the company calls "a new agentic era" in AI development, with AI models designed to understand and make decisions about the world around you. Android XR infused with Gemini promises to put digital assistants into eyewear, tapping into what users are seeing and hearing. An AI "agent," the latest Silicon Valley trend, is a digital helper that is supposed to sense surroundings, make decisions, and take actions to achieve specific goals.
"Gemini can understand your intent, helping you plan, research topics and guide you through tasks," Izadi said. "Android XR will first launch on headsets that transform how you watch, work and explore." The Android XR release was a preview for developers so they can start building games and other apps for headgear, ideally fun or useful enough to get people to buy the hardware. This is not Google's first foray into smart eyewear. Its first offering, Google Glass, debuted in 2013 only to be treated as an unflattering tech status symbol and met with privacy concerns due to camera capabilities. The market has evolved since then, with Meta investing heavily in a Quest virtual reality headgear line priced for mainstream adoption and Apple hitting the market with pricey Vision Pro "spacial reality" gear. Google plans to soon begin testing prototype Android XR-powered glasses with a small group of users. Google will also adapt popular apps such as YouTube, Photos, Maps, and Google TV for immersive experiences using Android XR, according to Izadi. Gemini AI in glasses will enable tasks like directions and language translations, he added. "It's all within your line of sight, or directly in your ear," Izadi said.

[11]

Google wants you to strap Android to your face with Android XR

In brief: A primary selling point of Apple's Vision Pro is a software platform many users are already familiar with. Google is trying to repeat the strategy with Android XR but with a couple of crucial differences - availability on devices from third-party manufacturers and a heavy emphasis on GenAI. Google recently unveiled Android XR, a version of its mobile operating system redesigned for extended reality headsets and glasses. A developer preview is available now, and the first compatible devices are planned for release in 2025. Although third-party developers have only just begun working with Android XR, Google demonstrated how it is using XR to transform some of its in-house apps. YouTube and Google TV are viewable in large virtual screens, Google Photos displays images in 3D, Google Maps receives an immersive view mode, and Chrome can display multiple windows around users. Furthermore, users can interact with Android XR apps through hand-tracked gestures. One example shows someone viewing a picture of a soccer player in Chrome and drawing a circle around their shoes to Google search for similar pairs. Android XR also supports all Google Play phone and tablet apps. In contrast to Apple's visionOS, which doesn't employ Apple Intelligence, some Android XR apps incorporate Google's recently unveiled Gemini 2.0 GenAI model. AI agents can recognize what users look at, converse with users, and access device controls through natural language prompts. Google hasn't announced plans for a flagship headset, but Samsung is expected to release the first Android XR-supported device next year. Lynx, Sony, and XREAL might also soon begin developing headsets running on Qualcomm processors. At least one of them will probably undercut the Apple Vision Pro's $3,500 price tag. Moreover, Google is taking the opportunity to cautiously gauge interest in another attempt at smart glasses with its new XR OS. 
The company plans to begin testing XR glasses prototypes soon but didn't mention plans for a full release. Demo clips show Android XR offering turn-by-turn navigation through Google Maps, translating a restaurant menu a user looks at, and showing directions for building a shelf. The company is obviously trying to avoid a repeat of Google Glass. The early attempt at smart glasses first appeared over a decade ago but failed to gain traction and raised significant privacy concerns.

[12]

Android XR: The Gemini era comes to headsets and glasses

We're working to create a vibrant ecosystem of developers and device makers for Android XR, building on the foundation that brought Android to billions. Today's release is a preview for developers, and by supporting tools like ARCore, Android Studio, Jetpack Compose, Unity, and OpenXR from the beginning, developers can easily start building apps and games for upcoming Android XR devices. For Qualcomm partners like Lynx, Sony and XREAL, we are opening a path for the development of a wide array of Android XR devices to meet the diverse needs of people and businesses. And, we are continuing to collaborate with Magic Leap on XR technology and future products with AR and AI. Android XR will first launch on headsets that transform how you watch, work and explore. The first device, code named Project Moohan and built by Samsung, will be available for purchase next year. With headsets, you can effortlessly switch between being fully immersed in a virtual environment and staying present in the real world. You can fill the space around you with apps and content, and with Gemini, our AI assistant, you can even have conversations about what you're seeing or control your device. Gemini can understand your intent, helping you plan, research topics and guide you through tasks. We're also reimagining some of your favorite Google apps for headsets. You can watch YouTube and Google TV on a virtual big screen, or relive your cherished memories with Google Photos in 3D. You'll be able to explore the world in new ways with Google Maps, soaring above cities and landmarks in Immersive View. And with Chrome, multiple virtual screens will let you multitask with ease. You can even use Circle to Search to quickly find information on whatever's in front of you, with just a simple gesture. Plus, because it's Android, your favorite mobile and tablet apps from Google Play will work right out of the box, with even more apps, games and immersive content made for XR arriving next year.

[13]

A week on, Google's Android XR is stealing the VR/AR spotlight from Meta

Android XR is proving popular with software developers and hardware makers

Google's Android XR was only announced last week, but the mixed reality platform is already making a splash, securing software and hardware partners right out of the gate and impressively throwing down the gauntlet to Meta's Horizon OS. While Meta's operating system has a clear head start, Google's promise of an open, unified platform (similar to the Android OS that powers smartphones, tablets, TVs, smartwatches, and even cars) offers a bright future for the consumer and enterprise XR market by delivering a true standard for all to benefit from. Johan Gastrin, CTO of Resolution Games, the developer behind the impressive digital tabletop RPG Demeo (one of the first games expected to arrive on Android XR through Samsung's Project Moohan headset), tells Laptop Mag: "Android XR has the potential to speed up standardization in the XR space." Android XR may be the rising tide that raises all boats, granting hardware manufacturers and software developers an even foothold from which to build. In turn, they're free to build customized experiences backed by a dependable library of tools and frameworks tailored to various devices. Gastrin tells Laptop Mag, "Android XR has the potential to bring XR to a wide variety of XR devices, ranging from immersive VR headsets to AR glasses, all using the same ecosystem." It's an enticing promise, and it's one that's already attracted the attention of developers like Resolution Games, not to mention potential hardware partners in Samsung, Sony, Lynx, and XREAL. Announced on December 12, the Android XR operating system is poised to fuse mixed reality and artificial intelligence to power a new generation of VR headsets and smart glasses -- in direct competition with Horizon OS, which powers Meta's impressive Quest headsets.
It's a bold challenge to Meta's dominance in this space, but Google has a wealth of experience and a vast ecosystem in its corner heading into this fight. Resolution Games' Johan Gastrin tells Laptop Mag, "Sharing a common ecosystem across devices from multiple manufacturers is great for both developers and consumers." In speaking of that ecosystem, Gastrin continues: "Android XR also has the benefit of having an existing portfolio of games and applications that will make the platform vibrant from the get-go." Following Android XR's announcement, Resolution Games became one of the first developers in the XR space to announce it would be porting its most popular title to the platform. While the impressive Demeo may be among the first games to join Google's new platform, we wouldn't expect it to be the last. Highlighting the ease at which developers can shift from a Meta-familiar landscape to Android XR, Gastrin tells Laptop Mag, "Both Android XR and Horizon OS adhere to OpenXR. That, plus us using Unity for the majority of our titles, makes the development process very similar." That's good news for gaming hopefuls when it comes to Google's new platform. Aside from Demeo, many of Meta's most popular games are built using the Unity game engine, including Beat Saber, Bonelab, Walkabout Mini Golf, LEGO Bricktales, and even Batman: Arkham Shadow. The latter of those games is unlikely to make the switch due to it being a Meta Quest exclusive title, something that Android XR has yet to claim. When asked whether Resolution Games would be interested in developing exclusively for the platform, Gastrin tells Laptop Mag, "Right now we are focusing on taking Demeo to as many players as possible on whatever platforms they wish to play." "We will continue to support as many XR platforms as possible, and the support for the OpenXR open standard from all major vendors have greatly improved our ability to do so." 
Clearly, Google's Android XR has an excellent opportunity when it comes to delivering great software. However, from what we know so far, Google won't be bringing Android XR to users through its own dedicated hardware -- something Meta can rely on in pairing its Horizon OS platform with its Quest headsets. Still, while Google won't be supplying its own headsets or smart glasses, Android XR isn't short of interest from manufacturers looking to adopt the platform for their own devices. As part of the Android XR announcement, Google revealed that Lynx, Sony, and AR smart glasses maker XREAL are all interested in making use of the platform to power future devices, one of which may be the XR head-mounted display showcased by Sony in January. Meta also has plans to open Horizon OS for third parties, with Asus, Lenovo, and Microsoft all interested in adopting the platform, but little has been heard of this since its announcement in April. Having only been announced last week, already being lined up to appear on Samsung's future headset, and with many parties interested in bringing the platform to other devices, Android XR is off to a great start out of the gate. It serves as an excellent expansion of the Android ecosystem and brings AI to the forefront of Google's vision for a mixed-reality future. Whether the Android XR platform outpaces Meta's remains to be seen, but each provides the other with the necessary element to see innovation thrive: competition.

[14]

Google debuts Android XR operating system for VR and AR devices - SiliconANGLE

Google LLC today debuted Android XR, a new operating system for virtual reality and augmented reality devices. The software will initially ship with headsets. Down the road, Google will also enable hardware partners to integrate Android XR into smart glasses. The search giant has already developed several prototype glasses internally as part of an initiative called Project Astra. "Android XR is designed to be an open, unified platform for XR headsets and glasses," Shahram Izadi, Google's vice president and general manager of XR, wrote in a blog post. "For users, this means more choice of devices and access to apps they already know and love. For developers, it's a unified platform with opportunities to build experiences for a wide range of devices using familiar Android tools and frameworks." Android XR ships with Gemini, Google's flagship large language model lineup. The integration will allow consumers to look at an object and have Gemini explain it. A user could, for example, ask the LLM for pointers on how to assemble a piece of furniture. Gemini also lends itself to other tasks. An Android XR device can use the AI to fetch information such as weather updates, as well as overlay turn-by-turn navigation instructions on the user's field of view. The latter feature is powered by an integration with Google Maps. Alongside the mapping service, Android XR can run all the mobile apps in Google's Play Store. It also supports several popular development tools. That means software teams won't have to replace their existing toolchains to build apps for the operating system. Android Studio, Google's flagship desktop application for building mobile apps, is among the supported applications. The first device that will ship with Android XR is a headset from Samsung Electronics Co. Ltd, which helped develop the operating system. The device (pictured) is codenamed Project Moohan.
It's reportedly similar in design to Apple's Vision Pro, but lighter and more comfortable to wear for extended periods of time. The headset runs on Qualcomm Inc.'s XR2 Gen 2 system-on-chip, which is specifically designed to power AR and VR devices. The chip's central processing unit and graphics processing units have higher clock speeds than the chipmaker's previous-generation silicon. As a result, the XR2 Gen 2 can support headsets featuring up to a dozen external cameras and internal displays with a resolution of 4,300 pixels by 4,300 pixels. According to Samsung, users can double-tap the side of the headset to switch between VR and AR modes. In the latter configuration, the wearer sees not only rendered content but also the outside world. Samsung's headset draws power from a standalone battery pack that attaches via a USB-C cable. According to Bloomberg, the company could offer multiple packs with different levels of battery life. Samsung plans to launch Project Moohan next year. Android XR will later become available with headsets from other companies and, down the line, in smart glasses. Google has not yet shared a time frame for when the latter devices will become available. As part of an internal initiative dubbed Project Astra, Google has developed several prototype smart glasses. They use a display technology called microLED that can provide higher resolutions than many current screens. It's also less susceptible to certain types of malfunctions and uses less power.

[15]

Google unveils Android XR for next-gen extended reality devices

Google has introduced Android XR, a new operating system designed for the next generation of computing, combining years of AI, AR, and VR advancements. Developed in collaboration with Samsung, Android XR is aimed at creating a new ecosystem for XR headsets and glasses, bringing immersive experiences to users. Shahram Izadi, VP & GM of XR at Google, stated that Android XR aims to build a vibrant ecosystem for developers and device manufacturers. The platform is designed to leverage the success of Android, providing developers with tools such as ARCore, Android Studio, Jetpack Compose, Unity, and OpenXR to easily develop apps and games for XR devices. Google is partnering with companies like Lynx, Sony, and XREAL to create a wide range of devices, with further collaborations with Magic Leap to push the boundaries of XR technology. Android XR will first launch on headsets, transforming the way users work, watch, and explore. The first headset, code-named "Project Moohan" and developed by Samsung, is set to be available for purchase next year. These devices will allow users to seamlessly switch between virtual and real environments, offering an enhanced experience with the help of Gemini, Google's AI assistant. Gemini can guide users, help them plan tasks, and provide real-time information based on what users see, all through simple conversations. Google is adapting popular apps for the XR platform. Users will be able to watch YouTube and Google TV on a virtual big screen or revisit memories in 3D with Google Photos. Google Maps will offer an Immersive View, allowing users to explore cities and landmarks from new perspectives. Chrome will support multiple virtual screens for efficient multitasking, while Circle to Search will enable users to find information using simple gestures. Android XR will also support mobile and tablet apps from Google Play, with more apps and games optimized for XR coming next year.
Android XR is also paving the way for glasses that will assist users throughout the day. These glasses will integrate with other Android devices, putting the power of Gemini at users' fingertips. Android XR is designed as an open, unified platform, providing more device options for users and a consistent development experience for creators. Developers can use familiar Android tools and frameworks to build apps and experiences for various XR devices. Google encourages developers, device makers, and creators to contribute to shaping the future of XR. Google has also launched the Android XR SDK, a development kit for building XR apps that blend the digital and physical worlds. Among its key features, the Jetpack XR SDK offers new libraries for creating spatial UI layouts and bringing existing Android apps into XR. Google has partnered with Unity to integrate its real-time 3D engine with Android XR. Unity developers can now use the Unity OpenXR: Android XR package to bring multi-platform XR experiences to Android XR. Additionally, Chrome on Android XR supports the WebXR standard, allowing developers to create immersive web-based experiences with frameworks like Three.js or A-Frame. Android XR is built on open standards such as OpenXR 1.1 and includes advanced capabilities like AI-powered hand mesh, detailed depth textures, and light estimation. These features help digital content blend with the real world. The SDK also supports formats like glTF 2.0 for 3D models and OpenEXR for high-dynamic-range environments. To get started with development, visit the Android XR developer site, where tools and resources are available. Google also invites developers to participate in the Android XR Developer Bootcamp in 2025, which offers opportunities to collaborate on the future of XR and access pre-release hardware.
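For web developers, the WebXR support mentioned above usually starts with a capability check before any immersive content is loaded. The following is a minimal sketch, not code from Google's SDK. It assumes only the standard WebXR call `isSessionSupported`; the `XRSystemLike` type and the `supportedXrModes` helper are hypothetical names introduced here for illustration.

```typescript
// Sketch only: reports which immersive WebXR session modes a browser supports.
// XRSystemLike is a hypothetical minimal type declared here so the example
// compiles without WebXR type definitions; it mirrors the shape of navigator.xr.
interface XRSystemLike {
  isSessionSupported(mode: string): Promise<boolean>;
}

// Immersive session modes defined by the WebXR Device API and its AR module.
const IMMERSIVE_MODES = ["immersive-vr", "immersive-ar"];

async function supportedXrModes(xr?: XRSystemLike): Promise<string[]> {
  if (!xr) return []; // the browser exposes no WebXR entry point
  const supported: string[] = [];
  for (const mode of IMMERSIVE_MODES) {
    try {
      if (await xr.isSessionSupported(mode)) supported.push(mode);
    } catch {
      // A rejected check (for example, blocked by policy) counts as unsupported.
    }
  }
  return supported;
}
```

In a page, the helper would be called with the browser's `navigator.xr` object, and a framework such as Three.js or A-Frame would then request a session for one of the returned modes. Which modes a given Android XR device reports is not stated in the source, so the result should be treated as device-dependent.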

[16]

Google announces Android XR platform, will launch first on Samsung's Project Moohan device

Google said on Thursday that it is launching a new Android-based XR platform built to accommodate AI features. The company said the platform, called Android XR, will support app development on different devices, including headsets and glasses. The company is releasing Android XR's first developer preview on Thursday, which already supports existing tools, including ARCore, Android Studio, Jetpack Compose, Unity, and OpenXR.

Project Moohan headset

The company noted that Android XR will first launch with the Samsung-built Project Moohan headset, which will be available for purchase next year. Samsung, Google, and Qualcomm announced a partnership to produce an XR device early last year. After the announcement, reports emerged of a power struggle between Google and Samsung over control of the project. In July, Business Insider reported that while the headset was supposed to ship earlier this year, the launch was delayed. Google noted that the headset will be able to switch easily between a fully immersive experience and augmented content overlaid on real-world surroundings. Plus, users will be able to control the device with Gemini and ask questions about the apps and content they are looking at.

App ecosystem and Gemini

Google said that because Android XR is based on Android, most mobile and tablet apps on the Play Store will automatically be compatible with it. This means anyone buying an Android XR headset will already have a library full of apps through the Android XR Play Store. This is possibly the company's play to counter Apple's $3,499 Vision Pro, which hasn't taken off as Apple would have expected. Vision Pro had a limited number of apps at launch, a number that has grown over time. However, its cost remains a prohibitive factor for wider adoption. It's not clear how Samsung and Google will price this headset, but Google is positioning the platform so that users will have better app access.

Google is redesigning YouTube, Google TV, Chrome, Maps, and Google Photos for an immersive screen. Notably, Google didn't release a YouTube app for Vision Pro and also got developer Christian Selig's YouTube viewing app, Juno, pulled from the App Store. The company is also adding an Android XR Emulator to Android Studio so developers can visualize their apps in a virtual environment. The emulator has XR controls for using a keyboard and a mouse to emulate navigation in a spatial environment. The company is pushing Gemini for Android XR, too. Apart from screen control and contextual information, it will also support the Circle to Search feature.

Support for other devices

Google said that it hopes that Android XR will support glasses with "all-day help" in the future. The company is seeding its prototype glasses to some users as well, but it hasn't specified a date for a consumer launch. The search giant showed a demo in which a person asked Gemini to summarize a group chat and to recommend a card to buy for a friend. Another demo showed a person wearing glasses asking Gemini how to hang shelves. Google said companies such as Lynx, Sony, and XREAL, which use Qualcomm's XR solutions, will be able to launch more devices with Android XR. The Mountain View-based company also said that it will continue to work with Magic Leap on XR. However, it is unclear if Magic Leap will use Android XR.

[17]

Google steps into "extended reality" once again with Android XR

Citing "years of investment in AI, AR, and VR," Google is stepping into the augmented reality market once more with Android XR. It's an operating system that Google says will power future headsets and glasses that "transform how you watch, work, and explore." The first device you'll see is Project Moohan, a mixed reality headset built by Samsung. It will be available for purchase next year, and not much more is known about it. Developers have access to the new XR version of Android now. "We've been in this space since Google Glass, and we have not stopped," said Juston Payne, director of product at Google for XR, in Android XR's launch video. Citing established projects like Google Lens, Live View for Maps, instant camera translation, and, of course, Google's general-purpose Gemini AI, Google promises that XR will offer such overlays in both dedicated headsets and casual glasses. There are few additional details right now beyond a headset rendering, examples in Google's video labeled as "visualization for concept purposes," and Google's list of things that will likely be on board: Gemini, Maps, Photos, Translate, Chrome, Circle to Search, and Messages. And existing Android apps, or at least those updated to do so, should make the jump, too.

[18]

Google wants Android XR to power your next VR headset and smart glasses