Google's Gemini AI Beats Pokémon Blue: A Milestone with Caveats

2 Sources

[1]

Why Google Gemini's Pokémon success isn't all it's cracked up to be

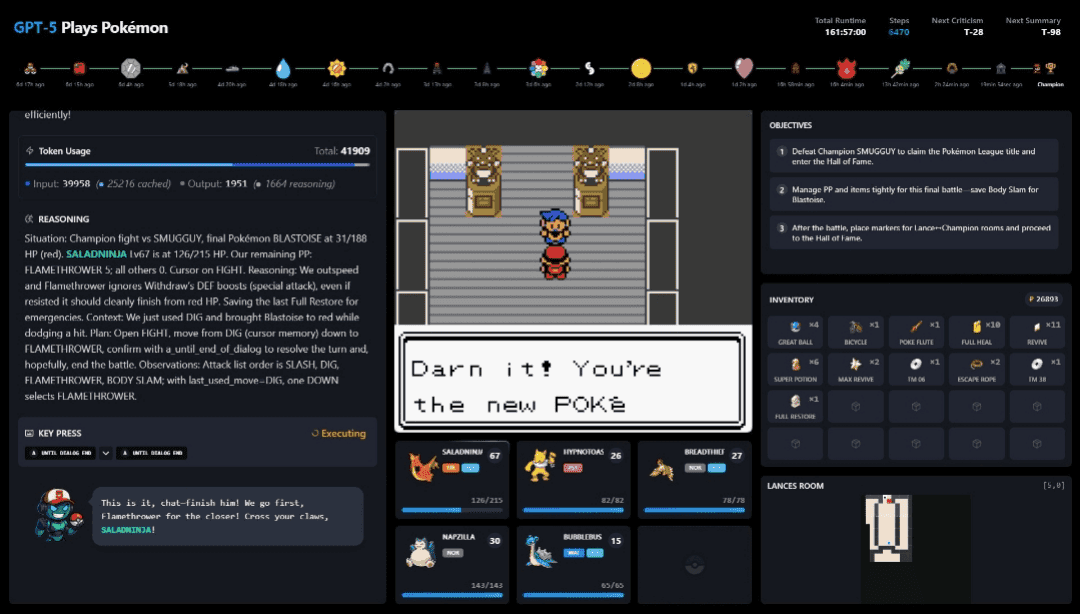

Earlier this year, we took a look at how and why Anthropic's Claude LLM was struggling to beat Pokémon Red (a game, let's remember, designed for young children). But while Claude 3.7 is still struggling to make consistent progress at the game weeks later, a similar Twitch-streamed effort using Google's Gemini 2.5 model finally completed Pokémon Blue this weekend after more than 106,000 in-game actions, earning accolades from followers, including Google CEO Sundar Pichai.

Before you start using this achievement as a way to compare the relative performance of these two AI models -- or even the advancement of LLM capabilities over time -- there are some important caveats to keep in mind. As it happens, Gemini needed some fairly significant outside help on its path to eventual Pokémon victory.

Strap in to the agent harness

Gemini Plays Pokémon developer JoelZ (who's unaffiliated with Google) will be the first to tell you that Pokémon is ill-suited as a reliable benchmark for LLMs. As he writes on the project's Twitch FAQ, "please don't consider this a benchmark for how well an LLM can play Pokémon. You can't really make direct comparisons -- Gemini and Claude have different tools and receive different information. ... Claude's framework has many shortcomings so I wanted to see how far Gemini could get if it were given the right tools."

The differences between the "framework" tools used in the Claude and Gemini gameplay experiments could go a long way toward explaining the relative performance of the two Pokémon-playing models. As LessWrong's Julian Bradshaw lays out in an excellent overview, Gemini actually gets a bit more information about the game through its custom "agent harness."
This harness is the scaffolding that provides an LLM with information about the state of the game (both specific and general), helps the model summarize and "remember" previous game actions in its context window, and offers basic tools for moving around and interacting with the game. Since these gameplay harnesses were developed independently, they give slightly different levels of support to the different Pokémon-playing models.

While both models use a generated overlay to help make sense of the game's tile-based map screens, for instance, Gemini's harness goes further by adding important information about which tiles are "passable" or "navigable." That extra information could be crucial to helping Gemini overcome some key navigation challenges that Claude struggles with. "It's pretty easy for me to understand that [an in-game] building is a building and that I can't walk through a building, and that's [something] that's pretty challenging for Claude to understand," Claude Plays Pokémon creator David Hershey told Ars in March. "It's funny because it's just kind of smart in different ways, you know?"

Gemini also gets help with navigation through a "textual representation" of a minimap, which the harness generates as Gemini explores the game. That kind of direct knowledge of the wider map context outside the current screen can be extremely helpful as Gemini tries to find its way around the Pokémon world. But in his FAQ, JoelZ says he doesn't consider this additional information to be "cheating" because "humans naturally build mental maps while playing games, something current LLMs can't do independently yet. The minimap feature compensates for that limitation."

The base Gemini model playing the game also occasionally needs some external help from secondary Gemini "agents" tailored for specific tasks. One of these agents reasons through a breadth-first-search algorithm to figure out paths through complex mazes.
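Neither source publishes the harness code, so what follows is only a sketch under stated assumptions of the kind of procedure the maze-solving agent is described as reasoning through. It treats a textual minimap as a grid of characters (a hypothetical encoding in which `#` marks an impassable tile, `.` a passable one, `S` the player and `G` the target) and runs a plain breadth-first search, returning a list of button presses. Because BFS explores tiles in order of distance from the start, the first time it reaches the goal it has found a shortest route. The map, the `bfs_path` and `find` helpers, and the button names are illustrative assumptions, not JoelZ's implementation; his agent reportedly works through the search in the model's own reasoning rather than calling code like this.

```python
from __future__ import annotations

from collections import deque

# Hypothetical text minimap: '.' = passable tile, '#' = impassable tile,
# 'S' = player's tile, 'G' = target tile. This encoding is an illustrative
# assumption, not the format the Gemini Plays Pokémon harness actually uses.
MAZE = [
    "S.#.....",
    ".##.###.",
    "....#...",
    ".##...#G",
]

# Each button press moves the player one tile (row delta, column delta).
MOVES = {"Up": (-1, 0), "Down": (1, 0), "Left": (0, -1), "Right": (0, 1)}


def find(grid: list[str], ch: str) -> tuple[int, int]:
    """Return the (row, col) of the first tile containing ch."""
    for r, row in enumerate(grid):
        c = row.find(ch)
        if c != -1:
            return r, c
    raise ValueError(f"{ch!r} not found in grid")


def bfs_path(grid: list[str]) -> list[str] | None:
    """Shortest list of button presses from S to G, or None if G is unreachable.

    Assumes a rectangular grid (all rows the same length).
    """
    start, goal = find(grid, "S"), find(grid, "G")
    rows, cols = len(grid), len(grid[0])
    # Maps each discovered tile to (previous tile, button pressed to get here).
    came_from: dict[tuple[int, int], tuple[tuple[int, int], str] | None] = {start: None}
    queue = deque([start])
    while queue:
        cur = queue.popleft()  # FIFO order visits tiles in order of distance
        if cur == goal:
            break
        for button, (dr, dc) in MOVES.items():
            r, c = cur[0] + dr, cur[1] + dc
            if 0 <= r < rows and 0 <= c < cols and grid[r][c] != "#" and (r, c) not in came_from:
                came_from[(r, c)] = (cur, button)
                queue.append((r, c))
    if goal not in came_from:
        return None
    # Walk back from the goal to rebuild the button sequence, then reverse it.
    path: list[str] = []
    node = goal
    while (step := came_from[node]) is not None:
        node, button = step
        path.append(button)
    return path[::-1]


print(bfs_path(MAZE))
```

On the sample map, the only shortest route is 12 presses long: the search slips through the gap in the bottom row rather than taking the 14-press detour along the top, which is exactly the kind of global view a model staring at a single screen tends to lack.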
A second agent is dedicated to generating potential solutions for the Boulder Puzzle on Victory Road. While Gemini is making use of its own model and reasoning process for these tasks, it's telling that JoelZ had to specifically graft these specialized agents onto the base model to help it get through some of the game's toughest challenges. As JoelZ writes, "My interventions improve Gemini's overall decision-making and reasoning abilities."

What are we testing here?

Don't get me wrong, massaging an LLM into a form that can beat a Pokémon game is definitely an achievement. But the level of "intervention" needed to help Gemini with those things that "LLMs can't do independently yet" is crucial to keep in mind as we evaluate that success. We already know that specially designed reinforcement learning tools can beat Pokémon quite efficiently (and that even a random number generator can beat the game quite inefficiently). The particular resonance of an "LLM plays Pokémon" test is in seeing whether a generalized language model can reason out a solution to a complicated game on its own. The more hand-holding we give the model -- through external information, tools, or "harnesses" -- the less useful the game is as that kind of test.

Anthropic said in February that Claude Plays Pokémon showed "glimmers of AI systems that tackle challenges with increasing competence, not just through training but with generalized reasoning." But as Bradshaw writes on LessWrong, "without a refined agent harness, [all models] have a hard time simply making it through the very first screen of the game, Red's bedroom!" Bradshaw's subsequent gameplay tests with harness-free LLMs further highlight how these models frequently wander aimlessly, backtrack pointlessly, or even hallucinate impossible game situations. In other words, we're still a long way from the kind of envisioned future where an Artificial General Intelligence can figure out a way to beat Pokémon just because you asked it to.

[2]

Google's Gemini AI Is Now a Pokémon Master

Gemini played the game with some light developer intervention, but mostly on its own

Google's Gemini AI may not have passed the Turing test yet, but it would have been very popular in the schoolyard three decades ago after winning a game of Pokémon Blue. Gemini 2.5 Pro is now both Google's most advanced AI model and a Pokémon Master, as demonstrated in a Twitch livestream called "Gemini Plays Pokémon" run by Joel Z, an engineer unaffiliated with Google. Even Google CEO Sundar Pichai joined the celebration, sharing a clip of the victory on X.

You might wonder why an AI model beating a thirty-year-old game drew so much attention. It's partly because of the spectacle, but also because of AI model rivalry. Back in February, Anthropic showcased the progress its Claude model was making in beating Pokémon Red. It used the game to show off Claude's "extended thinking and agent training" and launched a "Claude Plays Pokémon" Twitch stream, which inspired Joel Z.

Before crowning Gemini as the one true AI Ash Ketchum, it's worth noting a few caveats. For one, Claude hasn't technically beaten Pokémon Red yet, but that doesn't automatically make Gemini better, since the two projects use different tools, known as "agent harnesses." The models don't play the game directly like a human with a controller would. Instead, they're fed screenshots of the game environment along with overlays of key information, then asked to generate the next best action. That decision is then translated into an actual button press in the game.

And Gemini hasn't been going it entirely alone. Joel admitted he occasionally stepped in to make improvements, though he has made a point of doing so only to improve some of Gemini's reasoning. He also plans to keep working on the Gemini Plays Pokémon project to make further improvements.

What makes this more than a quirky internet stunt is what it implies about where AI is headed.
Playing a game like Pokémon Blue isn't about fast reflexes or memorizing controller inputs. It's about long-term strategy, adapting to surprises, and navigating ambiguous challenges. These are all areas where AI usually needs improvement. That Gemini could not only hold its own but finish the game (with minimal nudging) suggests that models like it are getting better at extended strategy.

It's also the kind of milestone the average person can understand. You can intuitively grasp what the AI is doing when it bumbles through Lavender Town or misreads a battle tactic, and compare that to the choices you'd make in the same situation.

Of course, you shouldn't overstate what this means. AI can now finish a game you probably beat in middle school, but the project also highlights how much human effort still goes into making AI seem autonomous. Whether or not Claude or Gemini become true Pokémasters matters less than what their play means for AI's development. Showing that AI can do more than crunch numbers or generate spam emails could change how people think about what AI can do, even with help. And if this is how AI models start learning to operate in unpredictable, open-ended environments, well, beating Mewtwo might just be a stepping stone to something a lot more profound. Or at least, a bit more productive.


Google's Gemini AI model completes Pokémon Blue, sparking discussions about AI capabilities and benchmarking. However, the achievement comes with important caveats regarding external assistance and specialized tools.

Google's Gemini AI Conquers Pokémon Blue

In a significant development for artificial intelligence, Google's Gemini 2.5 Pro model has successfully completed the classic game Pokémon Blue. This achievement, demonstrated on a Twitch livestream called "Gemini Plays Pokémon," has garnered attention from tech enthusiasts and even Google CEO Sundar Pichai [1][2].

The Achievement and Its Context

Gemini completed Pokémon Blue over the course of more than 106,000 in-game actions, marking a notable milestone in AI gaming capabilities. The accomplishment comes while Anthropic's Claude model is still attempting to beat Pokémon Red, offering an interesting, if imperfect, point of comparison between different AI models [1].

Caveats and Considerations

While impressive, the achievement should not be treated as a direct benchmark for comparing AI models, a point the project's own developer makes explicitly. Several important factors need to be considered:

- Custom "Agent Harness": Gemini used a specially designed framework that provided additional information about the game state, including details about navigable tiles and a minimap representation [1].
- External Assistance: The base Gemini model received help from secondary Gemini "agents" tailored for specific tasks, such as solving complex mazes and puzzles [1].
- Developer Intervention: Joel Z, the developer behind the project (unaffiliated with Google), occasionally stepped in to make improvements to Gemini's reasoning abilities [2].
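Source [2] describes the basic loop both projects share: the model receives a screenshot plus overlays of key information, is asked for the next best action, and that decision is translated into a button press. The sketch below illustrates that loop under loud assumptions: `model` is any prompt-to-reply function standing in for an LLM (it is not the Gemini API), `press` stands in for an emulator's input call, and the `Observation` fields, the text prompt format, the "B" fallback, and the five-action history window are all hypothetical choices, not details of JoelZ's or Anthropic's harnesses. The trimmed action history is only a crude stand-in for the summarization and "memory" features source [1] describes, and a real multimodal harness would also pass the screenshot image itself to the model.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

# Game Boy buttons the harness will accept from the model.
VALID_BUTTONS = {"Up", "Down", "Left", "Right", "A", "B", "Start", "Select"}


@dataclass
class Observation:
    """What the harness shows the model each turn (all fields hypothetical)."""
    screenshot: bytes            # raw emulator frame; unused in this text-only sketch
    passable_overlay: list[str]  # e.g. '.' = passable tile, '#' = blocked tile
    minimap: str                 # text map accumulated as the model explores


@dataclass
class Harness:
    model: Callable[[str], str]   # any prompt -> reply function (stand-in for an LLM)
    press: Callable[[str], None]  # sends one button press to the emulator
    history: list[str] = field(default_factory=list)
    max_history: int = 5          # only the most recent actions go back into the prompt

    def build_prompt(self, obs: Observation) -> str:
        """Assemble the game state and recent actions into one text prompt."""
        recent = "\n".join(self.history[-self.max_history:]) or "(none)"
        return (
            "Passable tiles:\n" + "\n".join(obs.passable_overlay)
            + "\nMinimap:\n" + obs.minimap
            + "\nRecent actions:\n" + recent
            + "\nReply with exactly one button name."
        )

    def step(self, obs: Observation) -> str:
        """Ask the model for its next action and translate it into a button press."""
        reply = self.model(self.build_prompt(obs)).strip()
        # Replies that aren't a valid button fall back to "B" (an arbitrary choice here).
        button = reply if reply in VALID_BUTTONS else "B"
        self.press(button)
        self.history.append(button)
        return button
```

In this framing, everything that separates one harness from another, such as a passable-tile overlay or a minimap, lives in `build_prompt` rather than in the model, which is one way to see why two models running on different harnesses aren't really playing the same game.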

Comparison with Other AI Models

The differences in tools and information provided to various AI models make direct comparisons challenging. For instance, Gemini's "agent harness" offered more comprehensive game information than the framework used by Claude, potentially explaining the disparity in their performances [1].

Implications for AI Development

Despite the caveats, this achievement holds significance for several reasons:

- Strategic Thinking: Pokémon games require long-term strategy, adaptation, and navigation of ambiguous challenges, areas where AI usually needs improvement [2].
- Relatable Milestone: The game provides an intuitive way for the general public to understand and assess AI capabilities [2].
- Future Potential: The experiment may hint at AI's growing ability to operate in unpredictable, open-ended environments, which could have broader implications beyond gaming [2].

Limitations and Future Prospects

It's crucial to note that without a refined "agent harness," AI models struggle with even basic game navigation, such as simply making it through the game's very first screen. This underscores the ongoing challenges in developing truly autonomous AI systems capable of generalizing their learning across diverse tasks [1].

As AI continues to evolve, experiments like "Gemini Plays Pokémon" serve as interesting case studies in the field's progress. However, they also highlight the significant human intervention still required to achieve such milestones, a reminder of the long road ahead in the quest for more advanced and truly autonomous AI systems [1][2].

Summarized by Navi

