Nvidia skips gaming GPUs at CES 2026, doubles down on AI as shortages squeeze PC gamers

14 Sources

[1]

Nvidia leans on DLSS improvements to make up for a lack of GPUs at CES

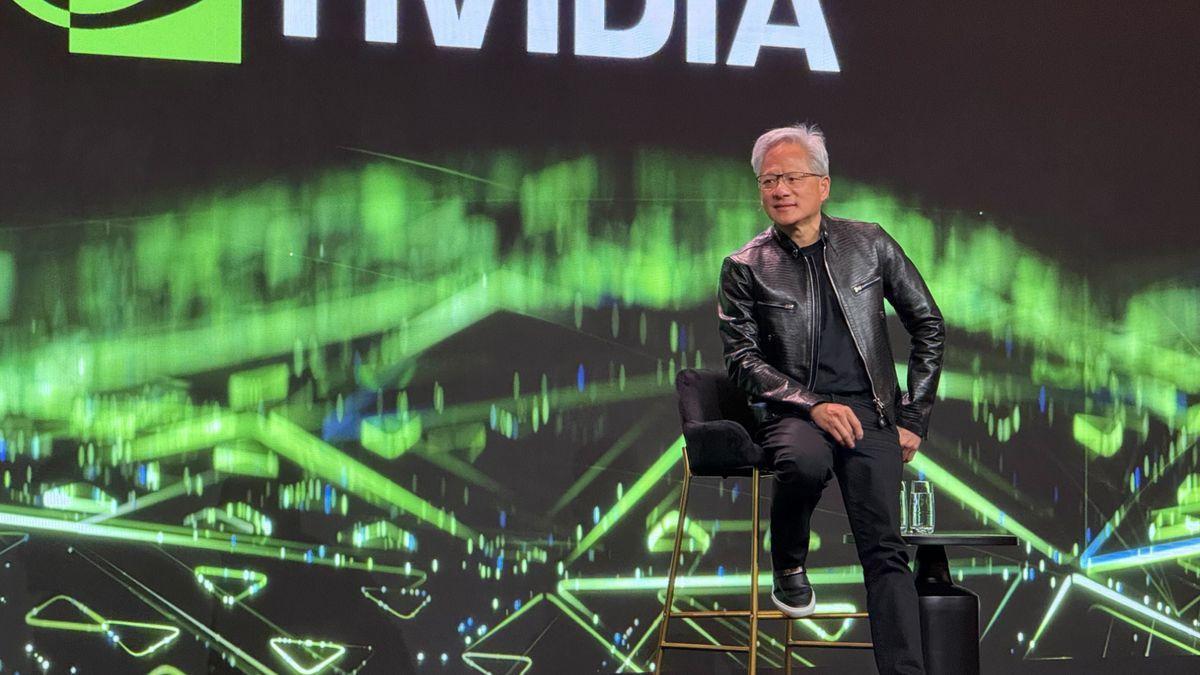

For the first time in years, Nvidia declined to introduce new GeForce graphics card models at CES. CEO Jensen Huang's characteristically sprawling and under-rehearsed 90-minute keynote focused almost entirely on the company's dominant AI business, relegating its gaming-related announcements to a separate video posted later in the evening. Instead of new hardware, the company focused on software improvements for its existing GPUs.

The biggest announcement in this vein is DLSS 4.5, which adds a handful of new features to Nvidia's basket of upscaling and frame generation technologies. DLSS upscaling is being improved by a new "second-generation transformer model" that Nvidia says has been "trained on an expanded data set" to improve its predictions when generating new pixels. According to Nvidia's Bryan Catanzaro, this is particularly beneficial for image quality in the Performance and Ultra Performance modes, where the upscaler has to do more guessing because it's working from a lower-resolution source image.

DLSS Multi-Frame Generation is also improving, raising the maximum number of AI-generated frames per rendered frame from three to five. This new 6x mode for DLSS MFG is being paired with something called Dynamic Multi-Frame Generation, in which the number of AI-generated frames changes on the fly: more generated frames during "demanding scenes," and fewer during simpler scenes, "so it only computes what's needed."

The standard caveats for Multi-Frame Generation still apply: It still needs an RTX 50-series GPU (the 40-series can still only generate one frame for every rendered frame, and older cards can't generate extra frames at all), and the game still needs to be running at a reasonably high base frame rate to minimize lag and weird rendering artifacts. It remains a useful tool for making fast-running games run faster, but it won't turn an unplayable frame rate into a playable one.
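The frame-generation numbers above come down to simple arithmetic. In an Nx mode, each rendered frame is followed by N-1 generated ones, so the displayed frame rate scales by N. Input is still sampled only on rendered frames. The sketch below is illustrative and ignores the GPU cost of generating frames. The `dynamic_multiplier` helper is a hypothetical heuristic written for this example; it is not Nvidia's actual Dynamic Multi-Frame Generation logic.

```python
# Simplified model of DLSS Multi-Frame Generation arithmetic.
# Assumption: generation overhead is ignored, so output fps = base fps * multiplier.

MAX_MULTIPLIER = 6  # the new 6x mode: 1 rendered frame + 5 generated frames


def generated_per_rendered(multiplier: int) -> int:
    """An Nx mode inserts N - 1 AI-generated frames after each rendered frame."""
    return multiplier - 1


def output_fps(base_fps: float, multiplier: int) -> float:
    """Frames shown per second in an idealized Nx mode."""
    return base_fps * multiplier


def input_interval_ms(base_fps: float) -> float:
    """The game only reacts to input on rendered frames, so this interval
    depends on the base frame rate, not on the displayed frame rate."""
    return 1000.0 / base_fps


def dynamic_multiplier(base_fps: float, refresh_hz: float) -> int:
    """Hypothetical heuristic (not Nvidia's): choose the smallest multiplier
    whose output reaches the display's refresh rate, capped at 6x."""
    for m in range(1, MAX_MULTIPLIER + 1):
        if base_fps * m >= refresh_hz:
            return m
    return MAX_MULTIPLIER


if __name__ == "__main__":
    for base in (30, 60, 120):
        m = dynamic_multiplier(base, refresh_hz=240)
        print(f"base {base} fps -> {m}x, {output_fps(base, m):.0f} fps shown, "
              f"input every {input_interval_ms(base):.1f} ms")
```

On a 240Hz display, this heuristic picks 2x for a 120 fps base and 4x when a demanding scene drops the base to 60 fps. A 30 fps game maxes out at 6x and shows 180 fps, but it still responds to input only about every 33 ms. That is why frame generation speeds up games that already run well but cannot rescue one that is unplayable.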
To Nvidia's credit, the new DLSS 4.5 transformer model runs on GeForce 20- and 30-series GPUs, giving users of older-but-still-usable graphics cards access to the improved upscaling. But Nvidia said that owners of those GPUs would see more of a performance hit from enabling DLSS 4.5 than users of GeForce 40- and 50-series cards would, and initial testing from some outlets seems to bear that out. Compared to the DLSS 4.0 transformer model, Mostly Positive Reviews found that the new DLSS 4.5 model reduced performance on an RTX 3080 Ti by between 14 and 24 percent depending on the game and the settings, though performance is still presumably better than it would be on the same card at native resolution.

The DLSS 4.5 transformer model is available now after a driver update, and you can use the Nvidia App to select the new model in games that already support DLSS upscaling (whether the option appears in any in-game menus remains up to the game developer). The Multi-Frame Generation updates will be available sometime in spring 2026.

One feature that hasn't broken cover yet? Nvidia's Reflex 2, an update to its input lag reduction technology that the company announced at CES last year. Upscaling and frame generation technologies can add input lag, something that Reflex helps to offset, and the company claimed an up to 75 percent reduction in lag when using Reflex 2 on a 50-series card. But Nvidia didn't mention Reflex 2 this year, and it hasn't provided any updates on when it might be released.

Why no GPUs?

In another era, CES 2026 would have been a good time to introduce a 50-series Super update, a mid-generation spec bump to keep the lineup fresh while Nvidia works on whatever will become the GeForce 60-series. The company used CES 2024 to launch the RTX 40-series Super cards, helping improve the value proposition for a bunch of cards that had launched at prices many reviewers and users grumbled about.
Indeed, the rumor mill suggested that Nvidia was working on a 50-series Super refresh for the 2025 holiday season, and its biggest improvement was going to be a 50 percent bump in RAM capacity, said to be possible because of a switch from 2GB RAM chips to 3GB chips. This would have given the theoretical 5070 Super 18GB of RAM, and the 5070 Ti Super and 5080 Super were said to have 24GB of memory. Assuming those rumors are correct, those plans could have been dashed by the abrupt RAM shortages and price spikes that started late last year. These have been caused at least in part by sky-high demand for RAM from AI data centers; given that modern-day Nvidia is mainly an AI company that sells consumer GPUs on the side, it stands to reason that the company would allocate all the RAM it can get to its more profitable AI GPUs, rather than a mid-generation GeForce refresh. In fact, none of the major dedicated GPU manufacturers has introduced new products at CES this year. AMD and Intel announced products with improved integrated GPUs, which use system RAM rather than requiring their own. But there wasn't a peep about any new dedicated Radeon cards, and Intel hasn't introduced a new dedicated Arc graphics card in almost a year, despite some signs that a midrange Arc B770 card exists and could be nearly ready to launch.
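The rumored 50 percent bump follows from how GDDR7 memory is wired. Each memory chip occupies a 32-bit slice of the GPU's memory bus, so capacity equals the chip count times the per-chip density. The sketch below assumes that the rumored Super models would keep the bus widths of the shipping cards: 192-bit for the RTX 5070, and 256-bit for the 5070 Ti and 5080. Nvidia never confirmed this, and the sketch ignores clamshell layouts.

```python
# Sketch: VRAM capacity from memory bus width and per-chip density.
# Assumption: one GDDR7 chip per 32-bit slice of the bus (no clamshell mode).

BITS_PER_CHIP = 32

# Bus widths of the shipping RTX 50-series cards; the rumored Super
# refresh is assumed (not confirmed) to keep the same buses.
BUS_WIDTH_BITS = {"RTX 5070": 192, "RTX 5070 Ti": 256, "RTX 5080": 256}


def vram_gb(bus_width_bits: int, chip_gb: int) -> int:
    """Total VRAM when every 32-bit slice of the bus carries one chip."""
    chips = bus_width_bits // BITS_PER_CHIP
    return chips * chip_gb


for card, bus in BUS_WIDTH_BITS.items():
    today = vram_gb(bus, chip_gb=2)
    rumored = vram_gb(bus, chip_gb=3)
    print(f"{card}: {today} GB with 2GB chips -> {rumored} GB with 3GB chips "
          f"(+{(rumored / today - 1) * 100:.0f}%)")
```

Under these assumptions, the output matches the rumored 18GB and 24GB figures. Swapping chip density is the only way to add memory without redesigning the GPU's memory bus, which is why a denser chip was the obvious lever for a mid-generation refresh.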

[2]

Nvidia CEO Jensen Huang says "the future is neural rendering" at CES 2026, teasing DLSS advancements -- RTX 5090 could represent the pinnacle of traditional raster

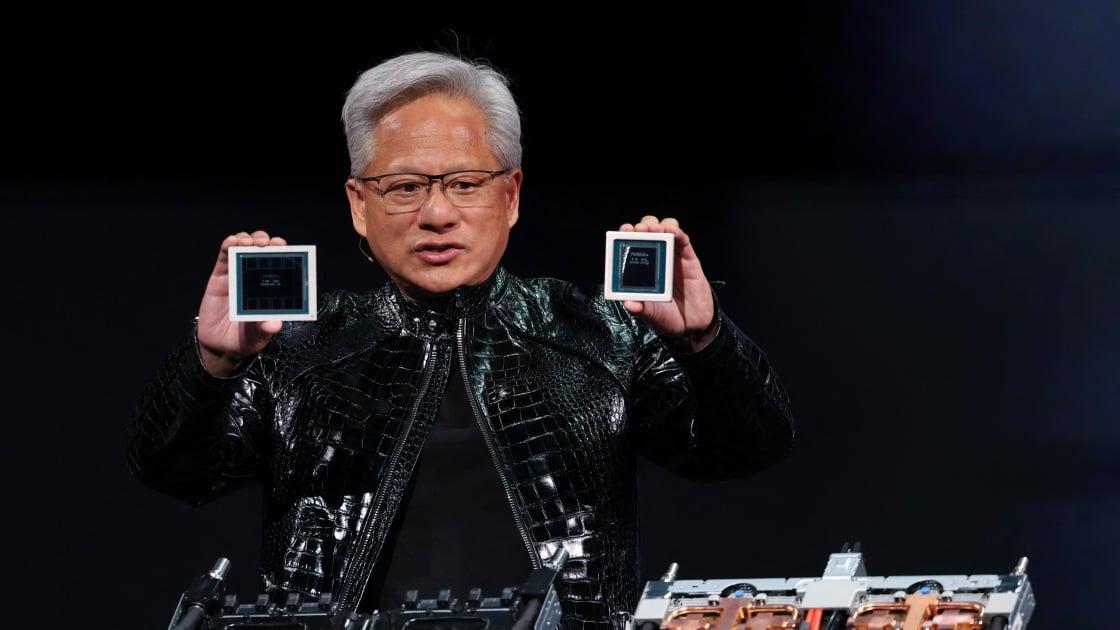

For the first time in five years, Nvidia, the largest GPU manufacturer in the world, didn't announce any new GPUs at CES. The company instead brought the next-gen Vera Rubin AI supercomputer to the party. Gaming wasn't entirely sidelined, though: DLSS 4.5 and MFG 6X both made their debut, major upgrades to AI-powered rendering that seem even more crucial given the comments that followed their announcement.

At a Q&A session with Nvidia CEO Jensen Huang, attended by Tom's Hardware in Las Vegas, the executive offered his thoughts about the future of AI in gaming to PCWorld's Adam Patrick Murray, who asked Huang: "Is the RTX 5090 the fastest GPU that gamers will ever see in traditional rasterization, and what does AI gaming look like in the future?" Jensen responded: "I think that the answer is hard to predict. Maybe another way of saying it is that the future is neural rendering. It is basically DLSS. That's the way graphics ought to be. I think you're going to see more and more advances of DLSS... I would expect that the ability for us to generate imagery of almost any style -- from photo realism, extreme photo realism, basically a photograph interacting with you at 500 frames a second, all the way to cartoon shading if you want -- that entire range is going to be quite sensible to expect."

Huang further speculated that the future of rendering likely involves more AI operations on fewer, extremely high-quality pixels, and shared that "we're working on things in the lab that are just utterly shocking and incredible." With the way games are optimized (or not) these days, upscaling and even frame generation are expected parts of the performance equation. Developers often count DLSS as part of the default system requirements now, so Jensen's enthusiasm for the tech is timely and, of course, characteristic.
Going as far as to say that the "future is neural rendering" is a strong indication that the raster race might be over, and that it's "basically DLSS" that will push us past the finish line now. As companies experiment with more and more neural techniques, from texture compression and decompression, neural radiance fields, and frame generation to an entire neural replacement for the traditional graphics pipeline, it's clear that matrix math acceleration and purpose-built AI models will play ever larger roles in real-time rendering going forward.

The CEO extended his passion for AI by talking about how in-game characters will also be taken over by AI, built from scratch with neural networks at their center, making NPCs lifelike. That means not just photorealism but also emotional realism, perhaps taking some load off the CPU that would otherwise compute logic for random characters. Nvidia's ACE platform has already been working toward this for a while now and is turning up across the industry. "You should also expect that future video games are essentially AI characters within them. Every character will have their own AI, and every character will be animated robotically using AI. The realism of these games is going to really, really climb in the next several years, and it's going to be quite extraordinary."

Beyond the photorealism, this aspect of AI could actually help cut development times, since studios would no longer have to painstakingly animate and breathe life into every single NPC. We might walk away with a more polished result, but it would still lack that human touch, the sheer creativity that many cite as the argument against generative AI today.

It's important to note that Jensen himself never said that the RTX 5090 represents the peak of traditional raster, but he didn't push back on that suggestion either. The 5090 is a ludicrously powerful GPU, and it will still be a while before that kind of performance trickles down to the masses.
But it seems like the traditional shader compute ceiling may no longer grow as much or as fast, and AI-reliant features like DLSS are likely to become the new frontier of innovation. We're already seeing this happen, and it might become our permanent reality if the AI boom doesn't cool off soon. For now, though, there's no escaping AI when it comes to computer hardware, both in a literal and metaphysical sense. The very thing that caused the current component crisis is being touted as its antidote. Jensen ended his answer to this question by saying, "I think this is a great time to be in video games."

[3]

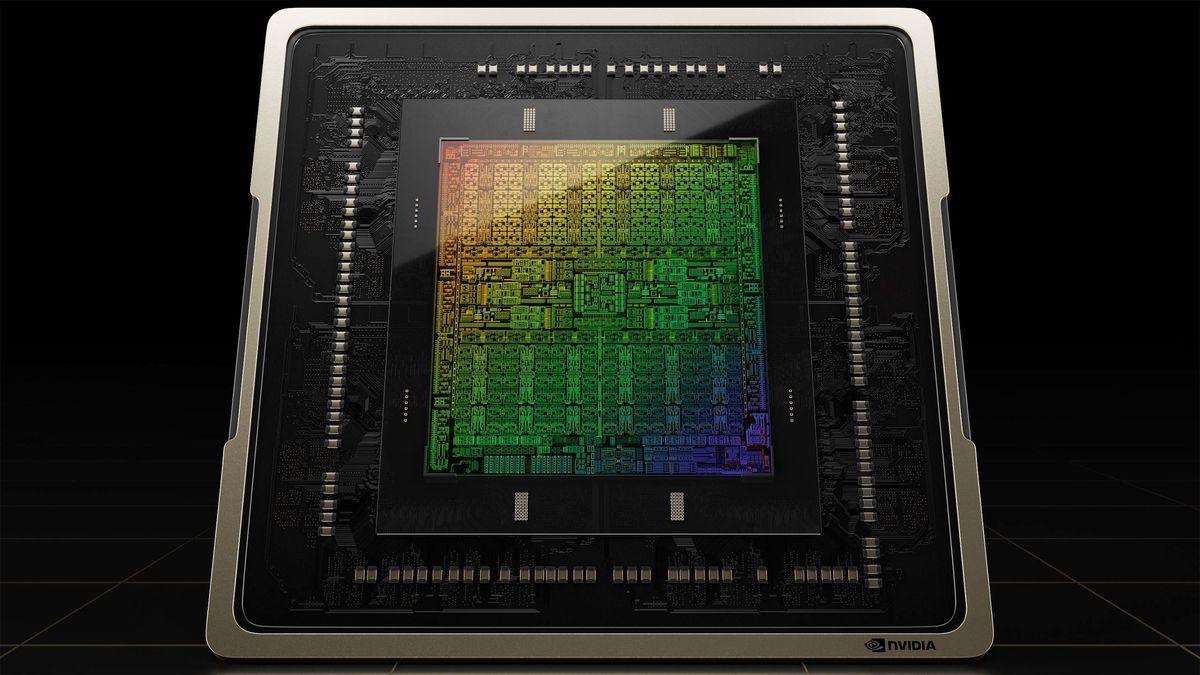

Where's the Gaming GPU? Nvidia Remains All About AI at CES Event

Sorry PC gamers, but Nvidia didn't talk at all about graphics cards or gaming at the company's speech ahead of CES, the annual electronics trade show. Instead, the event was firmly fixated on the company's newest AI chips, meant to help the largest tech companies unleash even more generative AI programs. For nearly two hours, Nvidia CEO Jensen Huang discussed the company's enterprise business, especially AI data centers, which make up nearly 90% of its revenue.

The most significant news was that Nvidia has started "full production" of its Rubin platform, the successor to the Blackwell architecture. The company has talked plenty about Rubin before, but Nvidia is now ready to start pumping out the cutting-edge AI chips, which will be packed into servers meant for the newest data centers.

The Rubin platform isn't one AI chip, but six. The main two are the Rubin GPU, which spans 336 billion transistors, and the Vera CPU, which features 88 "custom Olympus cores." The Rubin GPU itself promises a fivefold performance increase in AI inference compared to Blackwell, thus lowering the costs and energy demands of running and training AI programs such as chatbots. The company plans on packing hundreds of Rubin GPUs and the related AI chips into servers, effectively selling the systems like supercomputers.

All the major AI labs, including Anthropic, OpenAI, Meta, and Elon Musk's xAI, are looking to adopt the Rubin platform, according to Nvidia. The company will also sell the AI chips through partners, such as server makers, starting in the second half of 2026. Meanwhile, major cloud providers including Amazon's AWS, Microsoft, and Google are slated to deploy the Rubin platform as well. In addition, Nvidia plans on delivering its AI chips on an "annual cadence" instead of a two-year cycle, giving the largest tech companies more options to build out their new data centers to chase AI development.
However, Jensen made no mention of the technology ever coming to the consumer side. Old rumors that Nvidia has been preparing enhanced "Super" editions of the RTX 5000 gaming GPUs didn't materialize either. The company did tell PCMag that the event would be about AI and enterprise. But if you were a gamer who tuned into Jensen's speech hoping for some PC-related news, you'd be disappointed.

Naturally, we can't help but wonder whether Nvidia has been forced to revise its gaming GPU plans. In a bad sign for PC building, AI data center demand has been creating a serious shortage of memory chips, which is raising concerns about GPU supplies. The same shortage is already causing consumer DDR5 RAM and SSD storage to jump in price and is expected to inflate costs for finished consumer electronics too, including PCs and phones. Still, Nvidia is preparing a live stream tonight at 9pm PST on both YouTube and Twitch that's slated to offer some consumer-focused announcements related to the company's GeForce brand. So stay tuned for our coverage.

[4]

CEO Jensen Huang says Nvidia could possibly increase supply of older gen GPUs to address shortages -- adding more AI features to older cards is also an option

Nvidia took to the stage at CES in Las Vegas this week, but new consumer GPU hardware was conspicuously absent as CEO Jensen Huang instead touted the latest and greatest the company has to offer in the realm of heavyweight AI computing. With DDR5 prices skyrocketing, SSDs not far behind, and Nvidia's flagship RTX 5090 now fetching an eye-watering $4,000 at some retailers, bad news is everywhere for PC builders. GPU pricing is facing a squeeze from both ends, with rising RAM costs and likely dwindling supply both pushing up prices on Nvidia's top GPUs.

There are, of course, ways to address this, one notable option being the reintroduction of older GPUs that rely on older process nodes, less DRAM, and older technologies. Sketchy rumors have been floating around about the return of the Ampere-based RTX 3060 in 2026. Most notably, AMD has teased the return of some Zen 3 AM4 chips to ease the strain on PC gamers looking for upgrades, showing that spinning up old tech isn't beyond the realm of possibility.

So we asked Nvidia directly. At a Q&A with the company in Las Vegas for CES 2026, Tom's Hardware put the question to CEO Jensen Huang regarding ways Nvidia could ease pressure on the consumer GPU market.

"Hi Jensen, Paul from Tom's Hardware. The prices of gaming GPUs, especially the latest and greatest, are really becoming high, which might be due to some restrictions on supply and production capacity, one would assume. Do you think that maybe spinning up production on some of the older generation GPUs, on older process nodes where there might be more available production capacity, would help that, or maybe also increasing the supply of GPUs with lower amounts of DRAM? Are there steps that could be taken, or any specific color you could give us on that?"
Huang: "Yeah, possibly, and we could possibly, depending on which generation, we could also bring the latest generation AI technology to the previous generation GPUs, and that will require a fair amount of engineering, but it's also within the realm of possibility. I'll go back and take a look at this. It's a good idea."

Huang's non-committal answer probably sheds some light on the rumors of an older GPU's return: it doesn't sound like something the company is planning anytime soon. This may signal that Nvidia doesn't think the issue is getting out of control just yet. Supply will inevitably improve as time passes and chipmakers dramatically increase production on the latest process nodes, but we are likely years away from GPU supply reaching a healthy enough balance to feed both the consumer and AI data center markets. In the meantime, an increase in the number of GPUs built on older architectures and process nodes might be our only hope for affordable gaming GPUs.

On the software side, new AI-driven features that boost performance on those older GPUs would be a boon not only for those with existing gear but also for those forced to grudgingly buy older-gen discrete GPUs as the only affordable option. And while lower VRAM capacities absolutely have an impact on performance, newer AI-driven features like DLSS do help offset that enough to make such cards serviceable, at least until some unknown point in the future when a GPU with plenty of VRAM doesn't cost as much as a used car. There remain trade-offs: Nvidia's latest DLSS 4.5 model cuts performance on older GPUs significantly, so as Jensen says, Nvidia would have to do an awful lot of work behind the scenes to make that prospect a reality.

[5]

NVIDIA is reportedly bringing back 2021's RTX 3060 GPU because AI is eating all of the newer cards

Why is the world's most valuable company reportedly bringing back such an antiquated graphics card? You know the answer. It's the endless gaping maw known as AI. Tech companies have been hoovering up PC parts for AI applications with reckless abandon. It has become a legitimate challenge for a regular person to buy RAM and graphics cards, which has led to price increases across the board and companies like Crucial closing up shop. It's particularly difficult to get ahold of GDDR7 RAM, which is needed for the newer RTX 5060 cards. So NVIDIA's solution looks to be a hop in the time machine to 2021. Gamers will need something, after all, and the 3060 technically gets the job done. Any downgrade in graphics and performance will be worth it once you watch an AI-generated video of Kurt Cobain singing in heaven with Albert Einstein, am I right? It's hilarious because they never got to meet in real life. The RTX 3060 is still pretty popular, despite NVIDIA phasing out the card back in 2024. We don't know how much the company plans on charging for this trip down memory lane. The GPU originally cost around $329. One would think that five-year-old technology could easily hit a much lower price point, but NVIDIA has us in a chokehold here and it can pretty much charge whatever it wants. Again, no price is too high when considering the magical wonders of generative AI. You can watch Tupac hang out with Mr. Rogers for five seconds.

[6]

Nvidia's Jensen Huang calls releasing older GPUs featuring the latest AI tech a "good idea"

What just happened? Nvidia CEO Jensen Huang has lent credence to the recent rumor that the company will restart production of its older GPUs, a result of the current AI-driven memory crisis. But rather than just re-releasing the same old card, Huang suggested reintroducing the products with modern features, such as the latest performance-boosting AI technology.

There were rumors this week that Nvidia is set to restart production of the RTX 3060, which launched in 2021 and was discontinued in 2024. With AI data centers gobbling up all the DRAM, GPU prices are climbing: there are reports that the RTX 5090 could reach $5,000.

During a Q&A session at CES, Tom's Hardware reporter Paul Alcorn asked Huang if restarting production of older GPUs on legacy process nodes could help ease the current supply and production issues. Huang seemed open to the idea and even suggested a way to make the re-released cards more appealing. "Yeah, possibly, and we could possibly, depending on which generation, we could also bring the latest generation AI technology to the previous generation GPUs, and that will require a fair amount of engineering, but it's also within the realm of possibility," the CEO said. "I'll go back and take a look at this. It's a good idea."

Chart: Most popular GPUs among Steam survey participants during December

The concept of releasing the old cards with AI features to improve their performance does sound interesting. The RTX 3060 is currently the most popular GPU among Steam survey participants, illustrating its lasting appeal. Relaunching the card (hopefully the original 12GB version and not the cut-down 8GB variant) with some extra bells and whistles and a compelling price point could be a smart move on Nvidia's part. One new AI feature Jensen could be referring to is DLSS 4.5.
The latest version of Nvidia's upscaling tech introduces a slew of graphical and stability improvements, but it comes at the cost of a massive 20+% performance drop compared to DLSS 4 when used on RTX 3000 and RTX 2000 GPUs.

Nvidia felt the need to confirm that there would be no new (or old) GPUs announced at CES this year. However, there has been plenty for gamers to get excited about. In addition to DLSS 4.5, Nvidia revealed details about its updated G-Sync Pulsar tech. Not only does the latest version further reduce motion blur (Nvidia claims it can make 360Hz monitors look like 1,000Hz), but it also adjusts a monitor's brightness and color based on ambient room lighting.
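A common rule of thumb for displays helps explain the "360Hz looks like 1,000Hz" claim. Perceived motion blur is roughly an object's on-screen speed multiplied by how long each frame stays lit, called persistence. G-Sync Pulsar strobes the monitor's backlight, which can make persistence shorter than the frame time. The sketch below assumes that simplified model and a hypothetical 1,000 pixels-per-second pan. It is not Nvidia's measurement method.

```python
# Rule-of-thumb model of motion blur on a display:
#   blur width (px) ~= motion speed (px/s) * persistence (s)
# Assumption: a sample-and-hold panel keeps each frame lit for the whole
# frame time; a strobed backlight keeps it lit only during the pulse.


def frame_time_ms(refresh_hz: float) -> float:
    """Duration of one refresh cycle in milliseconds."""
    return 1000.0 / refresh_hz


def blur_px(speed_px_per_s: float, persistence_ms: float) -> float:
    """Approximate smear width for an object moving at the given speed."""
    return speed_px_per_s * persistence_ms / 1000.0


def duty_cycle_to_match(refresh_hz: float, target_hz: float) -> float:
    """Fraction of each frame a strobed panel at refresh_hz may stay lit so its
    persistence equals that of a sample-and-hold panel at target_hz."""
    return frame_time_ms(target_hz) / frame_time_ms(refresh_hz)


SPEED = 1000.0  # hypothetical panning speed in pixels per second

print(f"360Hz sample-and-hold: {blur_px(SPEED, frame_time_ms(360)):.2f} px of blur")
print(f"1,000Hz sample-and-hold: {blur_px(SPEED, frame_time_ms(1000)):.2f} px of blur")
print(f"360Hz strobe duty cycle needed: {duty_cycle_to_match(360, 1000):.0%}")
```

Under this model, a strobed 360Hz panel would need to light each frame for about 1 ms, roughly a third of its 2.8 ms frame time, to match a 1,000Hz sample-and-hold display. Real results also depend on pixel response times and strobe crosstalk, which the sketch ignores.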

[7]

For the first time in 5 years, Nvidia will not announce any new GPUs at CES -- company quashes RTX 50 Super rumors as AI expected to take center stage

As the entire industry plunges into a component drought, Nvidia has just announced on X that its CES 2026 keynote will have "no new GPUs," throwing cold water on what little hope was left for new PC builders. This breaks a five-year-long streak of consistently announcing new GPUs, desktop or mobile, at CES; this time, there will be no new hardware at all. Most of the presentation will likely focus on AI advancements.

The Green Team has had new silicon to show at CES every year since 2021. Most recently, the RTX 50-series debuted on those iconic Las Vegas show floors, and there have been rumblings of an RTX 50 Super series timed for CES 2026. While there was never any official confirmation, the DRAM shortage may have derailed that launch; after all, Nvidia did release the RTX 40 Super series at CES 2024, a year after the initial Ada Lovelace cards came out. Moreover, the company's latest Blackwell GPUs use GDDR7 memory, which is harder to produce. The situation has gotten so bad that wild rumors of Nvidia restarting RTX 3060 production have started floating around, since that card uses GDDR6 instead and is fabricated on Samsung's older 8nm process.

Sourcing memory is a big part of the problem. Nvidia can't announce new GPUs if the factories behind them are entirely choked. Only three companies in the world, Micron, SK Hynix, and Samsung, are capable of manufacturing cutting-edge DRAM to begin with, and they're all more than happy selling to AI clients for fatter margins. The hunger for AGI has led companies like OpenAI to pursue record-breaking amounts of computing power, ambitions that far exceed what our supply chains can handle. Some of you might be wondering why the government doesn't step in to help consumers here; isn't regulating the markets its job? Unfortunately, geopolitics further complicates the situation, as frontier AI represents another arms race, and Washington wants to maintain its lead over China.
At the end of the day, there's no savior coming. Like the RAM crisis of 2014 and the various GPU shortages in the past decade, we'll have to wait until the AI boom goes stagnant. As of right now, Nvidia graphics cards still haven't experienced a price hike, so this might be the final few moments before we return to real scalping issues. Still, some people in the community, such as Sapphire's PR manager, are hopeful that even this storm can be ultimately weathered.

[8]

Nvidia CEO: 'The future [of GPUs] is neural rendering. That's the way graphics ought to be'

We asked what an AI gaming GPU would look like in the future. This was the response.

Love it or hate it, upscaling technology like Nvidia's DLSS has expanded the definition of gaming performance. And while hardware enthusiasts still want to know what to expect for raster performance, free of any software tricks, we'll have to wait a while longer for a definitive answer.

When PCWorld's own Adam Patrick Murray asked about the RTX 5090 and the future of AI gaming GPUs at a CES 2026 Q&A session for media and analysts, Nvidia CEO Jensen Huang dove briefly into his current view on GPUs, AI, and gaming -- one where upcoming video games will house layers and layers of AI:

(Transcript lightly edited for clarity.)

PCWorld: Adam, with PCWorld, I'd like to talk about gaming for a second --

Huang: Yes, it's awesome. Me too!

PCWorld: So Nvidia continues to push DLSS to be better and faster with what's been introduced --

Huang: Pretty amazing, right? Just quickly: Before GeForce brought CUDA to the world, which brought AI to the world, and then after that, we used AI to bring RTX to gamers and DLSS to gamers. And so, you know, without GeForce, there would be no AI today. Without AI, there would be no DLSS today. That's great.

PCWorld: It's harmonious, yeah. My question, one of my questions, is -- is the RTX 5090 the fastest GPU that gamers will ever see in traditional rasterization? And what does an AI gaming GPU look like in the future?

Huang: I think that the answer is hard to predict. Maybe another way of saying it is that the future is neural rendering. It is basically DLSS. That's the way graphics ought to be. And so, I think you're going to see more and more advances of DLSS. We're working on things in the lab that are just utterly shocking and incredible. And so I would expect that the ability for us to generate imagery of almost any style from photo realism, extreme photo realism, basically a photograph interacting with you at 500 frames a second, all the way to cartoon shading, if you like. All that entire range is going to be quite sensible to expect. You should also expect that future video games are essentially AI characters within them, and so it's almost as if every character will have their own AI, and every character will be animated robotically using AI. The realism of these games is going to really, really climb in the next several years, and it's going to be quite extraordinary, you know. And so I think this is a great time to be in video games, frankly.

With the surprise announcement of DLSS 4.5, which will offer RTX gamers further resolution boosts and frame rate bumps, Nvidia does appear to be less focused on where gaming performance improvements come from. Its launch of G-Sync Pulsar display tech (available in select monitors starting this Wednesday, January 7) also reflects this stance, improving motion clarity with a combination of high-end panel specs and clever software control.

[9]

High-end PC gaming is in big trouble, thanks to AI - and RTX 5090 price hikes are prime examples

It appears to be a direct impact from the RAM crisis due to AI demand.

We're officially in 2026, and last year's rumors of price hikes on Nvidia and AMD GPUs (due to the ongoing RAM crisis) appear to be accurate, which may prove very unfortunate for PC gamers.

As reported by VideoCardz, Nvidia GeForce RTX 5090 prices have risen significantly above the original retail pricing, with models reaching up to $4,000 across multiple retailers. The GeForce RTX 5090 Founders Edition is still priced at $1,999 / £1,799 / AU$4,039, so the price hikes appear to be coming directly from retailers and private sellers.

RAM kits have become much pricier over the last few months due to the current AI boom, and they appear to be the reason behind these GPU price increases (since GPUs also use VRAM). This has likely led retailers to seek ways to get customers to pay more for PC hardware across the board, given higher demand and the looming threat of price hikes directly from Team Green and AMD on RTX and Radeon GPUs, respectively.

A prime example is at Best Buy, where the Asus ROG Astral RTX 5090 is now available for a staggering $3,610.78, almost double the Founders Edition's MSRP and a significant chunk above the third-party GPU's standard pricing (around $2,799.99). AMD Radeon RX 9070 XT GPUs saw similar jumps above MSRP long before the RAM crisis, and they have continued: the XFX Mercury Radeon RX 9070 XT OC Edition on Best Buy is now $849.99, up from its $599 retail price.

None of these price hikes come straight from Nvidia or AMD (yet...), and despite recent rumors suggesting that Team Green plans to raise the RTX 5090's MSRP to $5,000, there's no confirmation of that - and frankly, it's unlikely that we'll see such a ludicrously aggressive price increase. If there is anything to blame, though, it's clearly the meteoric rise of AI technology.
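To put "almost double" into numbers, the snippet below computes each listing's markup over its reference price. Markup is defined here as (street price / list price - 1) × 100. It uses only the prices quoted in this report, and the $5,000 entry is the unconfirmed rumor, not an actual listing.

```python
# Markup over list price for the examples quoted in this report.
# markup % = (street price / list price - 1) * 100


def markup_pct(street: float, list_price: float) -> float:
    return (street / list_price - 1) * 100


examples = [
    ("Asus ROG Astral RTX 5090 vs. Founders Edition MSRP", 3610.78, 1999.00),
    ("Asus ROG Astral RTX 5090 vs. its standard price", 3610.78, 2799.99),
    ("XFX Mercury RX 9070 XT OC vs. $599 retail", 849.99, 599.00),
    ("RTX 5090 at $4,000 vs. Founders Edition MSRP", 4000.00, 1999.00),
    ("Rumored $5,000 RTX 5090 vs. Founders Edition MSRP", 5000.00, 1999.00),
]

for label, street, list_price in examples:
    print(f"{label}: +{markup_pct(street, list_price):.0f}%")
```

By this measure, the Astral listing sits about 81 percent above the Founders Edition MSRP but only about 29 percent above its own usual price. The RX 9070 XT example is roughly 42 percent over retail, and the rumored $5,000 MSRP would be a 150 percent increase.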
AI training and operation datacenters have increased the demand for RAM (and some other components) to unprecedented levels, which has ultimately trickled down to impact consumers, and it doesn't seem like it's slowing down any time soon. While Nvidia does have an indirect part to play in these price hikes due to its heavy involvement in the AI boom, it's not solely responsible; there's been a concerted push to develop and use AI from numerous companies around the globe, and they're all jointly at fault. Desktop DDR4 and DDR5 RAM play a significant part in PC building, more so than GPUs; basically, you can build a PC without a discrete GPU, but with no RAM, good luck getting your PC to boot. With the AI boom making RAM far more expensive than it should be for consumers, it's enough to leave worries that this could become the new normal in 2026 and beyond - effectively killing the custom PC gaming dream for all but the wealthiest gamers. The heavy reliance on AI is becoming more noticeable as time goes on, and as it continues, I can certainly see prices for all PC hardware increasing from retailers and private sellers. It's an incredibly problematic situation, not only for high-end PC gaming, but also for those looking to dive into the PC gaming ecosystem for the first time. If you've been contemplating building your first gaming PC, I'd do it fast; these prices are likely to keep climbing, at least for now.

[10]

Nvidia's CEO says bringing new AI tech to older generation GPUs is 'within the realm of possibility'

With the memory crisis being, well, a crisis, and the potential of the RTX 3060 making a comeback, the temporary solution may be further optimising older-generation graphics cards. Nvidia's Jensen Huang thinks that's "a good idea".

In a Q&A attended by Tom's Hardware, Huang was asked if spinning up production of older GPUs on older process nodes could be a way of combating supply instability and the price increases that come with it. Huang says that is a possibility, and "we could also bring the latest generation AI technology to the previous generation GPUs, and that will require a fair amount of engineering, but it's also within the realm of possibility. I'll go back and take a look at this. It's a good idea."

AI technology here refers to techniques like DLSS, which renders games at a lower resolution and upscales them with the help of AI. It also refers to frame generation, which uses AI image generation and interpolation to create new 'fake' frames in between 'real' rendered ones. When the RTX 50 series launched, it came with MFG (Multi Frame Generation), which adds three frames for every one 'real' frame. Just this week, Nvidia announced that this will go all the way up to 6x -- five fake frames for every real one -- but MFG is currently only available on RTX 50-series GPUs.

Huang's comment is interesting, as Nvidia's AI performance bumps are linked to specific hardware in the cards. For instance, MFG relies on hardware Flip Metering, which helps with frame pacing and is only present in Blackwell GPUs. When Huang says bringing newer tech to older cards would need engineering, it's unclear whether he's suggesting that engineers can work around hardware limitations, and if so, which ones. Ironically, given that the memory shortage is largely caused by huge demand from the AI industry, the problem and the temporary solution are both AI-driven. As pointed out by Tom's Hardware, this answer from Huang is rather non-committal.
It's not as small as 'we'll look into it' but definitely not as big as 'we'll do that'. However, it does show the idea is on Nvidia's radar. The fact that Huang is even discussing this idea is a testament to how dire PC hardware is looking right now. PC gaming has never been super cheap, and even good budget rigs will cost you a pretty penny, but things are set to look worse, not better. The RTX 3060 launched five years ago and was a budget-to-mid-range pick even then. A small silver lining to the stock issues, and the potential workarounds that may come with them, is that you have even less reason to upgrade if you've been rocking a 30-series card since its launch. If Nvidia does, in fact, make older cards work with the latest AI techniques, you could see a performance bump without having to swap out any parts. That silver lining is a small one in the very dark cloud that is PC gaming right now.
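The Multi-Frame Generation ratios quoted above reduce to simple arithmetic. The sketch below assumes an idealized model in which an N-x mode displays N frames for every rendered frame (so the launch 4x mode adds three generated frames and the new 6x mode adds five), and it ignores the cost of running the generation model itself, which in practice trims the rendered frame rate somewhat. The function names are illustrative, not part of any Nvidia API.

```python
def displayed_fps(rendered_fps: float, mode_multiplier: int) -> float:
    """Output frame rate for an idealized N-x frame-generation mode.

    An N-x mode shows N frames per rendered frame: the rendered frame
    plus (N - 1) AI-generated ones. Model overhead is ignored (assumption).
    """
    if mode_multiplier < 1:
        raise ValueError("multiplier must be at least 1")
    return rendered_fps * mode_multiplier


def rendered_frame_time_ms(rendered_fps: float) -> float:
    """Time between rendered frames, the only frames built from fresh input."""
    return 1000.0 / rendered_fps


for base in (30, 60, 100):
    print(
        f"{base} fps rendered -> 4x: {displayed_fps(base, 4):.0f} fps, "
        f"6x: {displayed_fps(base, 6):.0f} fps, "
        f"rendered frame time {rendered_frame_time_ms(base):.1f} ms"
    )
```

Note that the rendered frame time is unchanged by the multiplier: a game rendering 30 fps still produces a frame built from fresh input only about every 33 ms, even when 6x mode displays 180 frames per second. That is why frame generation makes already-fast games look smoother but can't rescue an unplayable base frame rate.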

[11]

AMD and Nvidia will reportedly raise GPU prices "significantly" in 2026

According to a new report, both AMD and Nvidia will raise the prices of their consumer GPUs "significantly" this year. If the report is true, both companies will have put these price hikes in place by next month. The information comes from Newsis, which claims the hikes will come as a result of the high cost of memory in the computer hardware market right now. That increased cost has been caused by the widespread construction of AI data centres, which has resulted in huge demand for such components. According to Newsis industry sources, the situation will keep getting worse, too: while an initial price increase will reportedly go into place in January for AMD and February for Nvidia, both companies will continue gradually increasing prices throughout the year. These increases are expected to hit GPUs that are already expensive, including Nvidia's GeForce RTX 50 series and AMD's Radeon RX 9000 series. According to Newsis, the Nvidia RTX 5090, which was released at a price point of $1,999, could eventually rise to $5,000 this year. The reasons for these price hikes are clear. Not only are GPUs getting more expensive to make due to rising memory costs, but AI companies such as OpenAI are hardware-guzzling behemoths. Nvidia CEO Jensen Huang stated that next-generation AI will need "100 times more compute" than older models, and Microsoft's CEO mused that the company doesn't have the electricity to install the GPUs sitting in its inventory. Whether or not you buy into the hype around generative AI and LLMs, the reality is that these companies are buying GPUs (and RAM) in the expectation that they'll need an absurd amount of hardware for future models and for the consumer demand those models attract. Hence the massive demand for GPUs, and hence the price increase. Nvidia and AMD know they can charge AI companies more, too, as these companies rely on such hardware for continued growth.
What this means for the average consumer of PC parts for gaming purposes is that your PC parts are going to get drastically more expensive over the coming months if this report is accurate, and they'll likely keep getting more expensive because of basic supply-and-demand problems in the hardware market. All the while, video game companies are stating their intention to embrace AI in development. Take Square Enix, which intends to replace 70% of its QA with AI by 2027, and Ubisoft, whose CEO believes AI will be as big a revolution as the shift to 3D.
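For scale, the RTX 5090 figures cited in this coverage can be turned into percentage markups: $1,999 at launch, the $4,000 some retailers are reportedly charging already, and the possible $5,000 reported by Newsis. The snippet below is plain arithmetic on those reported numbers, not a forecast; the helper name is illustrative.

```python
def percent_increase(old_price: float, new_price: float) -> float:
    """Percentage change from old_price to new_price."""
    return (new_price - old_price) / old_price * 100


RTX_5090_LAUNCH_PRICE = 1999  # USD, launch price cited in the report

for label, price in (("reported retail price", 4000), ("Newsis upper estimate", 5000)):
    markup = percent_increase(RTX_5090_LAUNCH_PRICE, price)
    print(f"{label}: ${price:,} is about {markup:.0f}% above launch price")
```

In other words, the $4,000 retail figure already roughly doubles the launch price, and $5,000 would be about two and a half times it.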

[12]

Nvidia's Jensen Huang has a clear vision for the future of its gaming GPUs and is going to be all about neural rendering: 'It is basically DLSS. That's the way graphics ought to be'

Perhaps to the surprise of no one, Nvidia's time at CES 2026 was all about one thing: AI. That said, PC gaming wasn't entirely ignored, as DLSS 4.5 was ninja-launched with the promise of '4K 240 Hz path traced gaming'. However, DLSS is still AI-based, and in a Q&A session with members of the press, CEO Jensen Huang made it clear that artificial intelligence isn't just for improving performance; it's how graphics need to be done in the future. This much we already knew, as Nvidia banged its neural rendering drum starting at last year's CES and then throughout 2025, and it wasn't the only graphics company to do so. Microsoft announced the addition of cooperative vectors to Direct3D, which is pretty much required to implement neural rendering in games, and AMD's FSR Redstone is as AI-based as anything from Intel and Nvidia. So, when PC World's Adam Patrick Murray asked Huang, "Is the RTX 5090 the fastest GPU that gamers will ever see in traditional rasterization? And what does an AI gaming GPU look like in the future?", it wasn't surprising that Nvidia's co-founder avoided the first question entirely and skipped straight to the topic of AI. "I think that the answer is hard to predict. Maybe another way of saying it is that the future is neural rendering. It is basically DLSS. That's the way graphics ought to be." He then expanded with some examples of what he meant by this: "I would expect that the ability for us to generate imagery of almost any style from photo realism, extreme photo realism, basically a photograph interacting with you at 500 frames a second, all the way to cartoon shading, if you like." The keyword here is generate. If one wishes to be pedantic, all graphics are generated, either through rasterization or neural networks. It's all just a massive heap of mathematics, broken down into logic operations on GPUs, crunching through endless streams of binary values.
But there is one important difference with neural rendering: it requires far less input data to generate the same graphical output as rasterization. Fire up the original Crysis from 2007, and all those beautiful visuals are generated from lists of vertices, piles of texture maps, and a veritable mountain of resources that are created during the process of rendering (e.g. depth buffers, G-buffers, render targets, and so on). That's still the case almost 20 years on, and the size and quantity of those resources are now truly massive. As DLSS Super Resolution proves, though, they don't need to be in the era of AI graphics. Nvidia's upscaling system works by rendering each frame at a reduced resolution and then using a neural network, fed with motion data and information from previous frames, to reconstruct it at the output resolution with fewer artefacts. One idea behind neural rendering is to take that a step further and use lower resolution assets in the first place, generating higher quality detail as and when required. Does it ultimately matter how a game's graphics are produced, as long as they look absolutely fine and run smoothly? I dare say most people will say 'no', but we don't have any games right now that use neural rendering for any part of the graphics pipeline other than upscaling and/or frame generation. Everything else is still rasterization (even if ray tracing is used, raster is still there behind the scenes). That means GeForce GPUs of the future, both near and far, will still need to progress in rasterization to ensure games of tomorrow look and run as intended. But with Nvidia being dead set on neural rendering (I don't think Huang said "That's the way graphics ought to be" lightly), have RTX graphics cards reached a plateau in that respect? Does the company now expect that all generational performance increments will come from better DLSS? Will GPUs of the future be nothing more than ASICs for AI? How would such chips process older graphics routines?
Is PC gaming heading backwards in time to the era when you needed a new GPU for every major new game, because previous chips didn't support the tech inside? Answers that generate more questions than they resolve certainly aren't a bad thing, but in this case, I wish Nvidia would give us a much clearer picture as to its roadmap for gaming GPUs and how it plans to support games of the past, present, and future.
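How much the upscaler has to infer depends on how much of the final image is conventionally rendered. As a rough illustration of the DLSS Super Resolution process described above, the sketch below assumes the commonly cited per-axis render scales for each DLSS mode (defaults that individual games or driver overrides may change) and computes what a 4K output implies. The dictionary and function names are illustrative, not Nvidia's API.

```python
# Commonly cited per-axis render scales for DLSS Super Resolution modes.
# Assumed defaults: games and overrides can use different values.
DLSS_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 1 / 2,
    "Ultra Performance": 1 / 3,
}


def internal_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    """Resolution rendered before AI upscaling to out_w x out_h."""
    scale = DLSS_SCALE[mode]
    return round(out_w * scale), round(out_h * scale)


def rendered_pixel_share(mode: str) -> float:
    """Fraction of output pixels that are conventionally rendered."""
    return DLSS_SCALE[mode] ** 2


for mode in DLSS_SCALE:
    w, h = internal_resolution(3840, 2160, mode)
    print(f"{mode:>17}: {w}x{h} rendered, {rendered_pixel_share(mode):.0%} of output pixels")
```

Under these assumptions, Performance mode at 4K works from a 1920x1080 image, a quarter of the output pixels, and Ultra Performance from 1280x720, about a ninth, which is why those modes lean hardest on the quality of the upscaling model.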

[13]

Nvidia Bringing Back RTX 3060 As DRAM Shortage Continues

The rising cost of PC parts is getting so bad that Nvidia is reportedly going to start making RTX 3060s again, so people have something to buy in the future while all the new shiny tech gets consumed by the AI industry. You've likely already noticed that in 2025, it became more expensive to build or upgrade a PC. Thanks to hyperscalers and AI-focused tech companies gobbling up PC parts to build datacenters, it's becoming quite pricey and challenging for your average person to buy PC RAM and graphics cards. Even the prices of prebuilt PCs from companies like HP, Dell, and Asus will increase by 15 to 20 percent, according to PC World. Not great! This will also lead to PC handhelds getting more expensive, and experts expect consoles will undergo price increases too, thanks to growing demand for specific parts. And Nvidia, a company that is making billions from all of this, has a solution to provide PC players with a somewhat affordable GPU in 2026: start making and selling its old RTX 3060 again. As recently reported by reliable Nvidia leaker Hongxing2020 and spotted by Wccftech, Nvidia is seemingly planning to restart production on the RTX 3060 in Q1 of this year. The card first launched in 2021 and is still one of the most popular gaming GPUs around, according to data from Steam. In 2024, Nvidia began retiring the card as it moved on to the 40 and 50 series of GPUs. However, due to DRAM being rapidly consumed for data centers, it has become harder and more expensive to procure the GDDR7 needed to produce the newer, more powerful RTX 5060. (Sidenote: Basically every datacenter built or being built will be out of date in the near future, meaning the billions invested in them will largely go to waste!) So it seems like the plan is for Nvidia to start producing 3060s again, using cheaper, easier-to-acquire components that aren't top of the line and therefore aren't being sought after by AI tech giants for their massive datacenters.
If that is the case, hopefully Nvidia at least prices the new 3060s at $200 or less, as the only market for these cards will be gamers looking to upgrade a PC from an older GPU to something (somewhat) modern. But greed is a nasty thing and has infected all tech companies, including Nvidia, so I wouldn't hold my breath on a sub-$200 price point despite the 3060 entering its fifth year in 2026.

[14]

NVIDIA Hints At Relaunching Older Gaming GPUs With New Technologies To Tackle Challenges In Current Market, Says Future of Graphics Is Neural Rendering

NVIDIA's CEO has highlighted that Neural Rendering is the future of graphics, and also hinted that older gaming GPUs might be coming back. During a Q&A session hosted by NVIDIA's CEO, Jensen Huang, at CES 2026, several questions on the current gaming landscape were raised, two of which stood out in particular. The first was asked by Tom's Hardware's Editor, Paul Alcorn, who asked Jensen about the current market challenges that affect almost all gaming PC hardware. The question also asked whether the company would be willing to resurrect older GPUs with new technologies. NVIDIA's CEO replied that it was entirely possible, though relaunching an older GPU with new technologies, especially the AI advancements NVIDIA has made on the DLSS and RTX side such as DLSS 4.5 and MFG 6X, would still require extra R&D. So while it will be possible, it remains to be seen whether NVIDIA takes this approach. "Hi Jensen, Paul Alcorn from Tom's Hardware. The prices of gaming GPUs, especially the latest and greatest, are really becoming high, which might be due to some restrictions on supply and production capacity, one would assume. Do you think that maybe spinning up production on some of the older generation GPUs, on older process nodes where there might be more available production capacity, would help that, or maybe also increasing the supply of GPUs with lower amounts of DRAM? Are there steps that could be taken, or any specific color you could give us on that?" Huang: "Yeah, possibly, and we could possibly, depending on which generation, we could also bring the latest generation AI technology to the previous generation GPUs, and that will require a fair amount of engineering, but it's also within the realm of possibility. I'll go back and take a look at this. It's a good idea." (via Tom's Hardware) A few days ago, it was rumored that NVIDIA was bringing the GeForce RTX 3060 back to life.
The card's production was severely cut back to make way for newer GPUs, but it has held the top spot on Steam as the most popular graphics card used by gamers. While the RTX 3060 is going to come back, it is definitely not going to feature any new technology such as MFG, advanced RT, Neural Shader, or Neural Rendering support. Those are changes within the GPU IP, and the chip for the RTX 3060 still uses the same Ampere architecture that launched with the RTX 30 series. Older RTX GPUs, such as the RTX 20, RTX 30, and RTX 40 series, do benefit from new AI model updates such as DLSS 4.5 RTX Super Resolution, but even then, the RTX 20 and RTX 30 series are limited by their reliance on FP16, versus the FP8 capability found on newer RTX GPUs such as the Blackwell lineup. This leads to bigger performance drops when DLSS 4.5 is enabled, as has been reported. With that said, these older GPUs can still offset some supply challenges in the gaming market. The second question was asked by PC World's Adam Patrick Murray about the future of gaming graphics and what role AI has to play. Answering it, Jensen stated that Neural Rendering is the way forward for gaming and graphics in general. He pointed out that DLSS will continue to advance graphics, and that we will see even more images and frames generated by AI. There are currently three main applications for RTX Neural Shaders, all of which we previously covered from NVIDIA's research papers: RTX Neural Texture Compression, RTX Neural Materials, and RTX Neural Radiance Cache. "The answer is hard to predict. Maybe another way of saying it is that the future is neural rendering. It is basically DLSS. I think you're going to see more and more advances of DLSS.
I would expect that the ability for us to generate imagery of almost any style, from photo realism, extreme photo realism, basically a photograph interacting with you at five hundred frames per second, all the way to cartoon shading if you want, that entire range is going to be quite sensible to expect." Jensen Huang - NVIDIA CEO (via PCWorld) 'The bottom line is that, in the future, it is very likely that we'll do more and more computation on fewer and fewer pixels,' he says. 'And by doing so, the pixels that we do compute are insanely beautiful, and then we use AI to infer what must be around it. So it's a little bit like generative AI, except we can heavily condition it with the rendered pixels.' Jensen Huang - NVIDIA CEO (via Club386) Jensen also said that in the future, traditional shaders will be replaced by Neural Shaders, and even more computation will be done on a smaller number of pixels, so that the pixels that are presented are not only "beautiful" but also produce images and frames that are hyper-realistic or close to real. There's a lot to take from these few statements, but one thing is clear: NVIDIA sees gaming and AI as closely bound together, and believes Neural Rendering is going to strengthen that relationship in the future.


For the first time in five years, Nvidia declined to announce new gaming GPUs at CES 2026, focusing instead on its AI business. CEO Jensen Huang spent nearly two hours discussing the Rubin platform for AI data centers while memory chip shortages drive GPU prices skyward. The RTX 5090 now fetches $4,000 at some retailers, and Nvidia may bring back the 2021 RTX 3060 to address supply constraints.

Nvidia Prioritizes AI Over Gaming GPUs at CES 2026

For the first time in five years, Nvidia made a striking departure from tradition at CES 2026: the world's largest GPU manufacturer didn't announce any new gaming GPUs during CEO Jensen Huang's nearly two-hour keynote [1][2]. Instead, Nvidia focused almost entirely on its dominant AI business, which now accounts for nearly 90% of the company's revenue [3]. The shift signals a fundamental change in priorities as AI data centers consume the lion's share of production capacity and memory resources.


The most significant announcement centered on the Rubin platform, Nvidia's successor to the Blackwell architecture; Rubin has now entered full production [3]. The Rubin GPU packs 336 billion transistors and promises a fivefold increase in AI inference performance compared to Blackwell, while the accompanying Vera CPU features 88 custom Olympus cores [3]. Major AI labs including OpenAI, Anthropic, Meta, and Elon Musk's xAI are preparing to adopt the platform, with cloud providers like AWS, Microsoft, and Google slated to deploy it in the second half of 2026.


Neural Rendering Signals Shift Away from Traditional Rasterization

At a Q&A session attended by Tom's Hardware, Nvidia CEO Jensen Huang made a bold declaration about the future of graphics technology [2]. When asked whether the RTX 5090 represents the fastest GPU gamers will ever see in traditional rasterization, Huang responded: "The future is neural rendering. It is basically DLSS. That's the way graphics ought to be" [2].

Huang speculated that future rendering will involve more AI operations on fewer, extremely high-quality pixels, and shared that "we're working on things in the lab that are just utterly shocking and incredible" [2]. This suggests the traditional shader compute ceiling may no longer grow as much or as fast, with AI-reliant features becoming the new frontier of innovation.


DLSS Advancements Offer Software Solution to Hardware Constraints

With no new hardware to announce, Nvidia leaned heavily on software improvements. The company unveiled DLSS 4.5, featuring a second-generation transformer model trained on an expanded dataset to improve upscaling predictions [1]. The improvements particularly benefit Performance and Ultra Performance modes, where the upscaler works from lower-resolution source images.

DLSS Multi-Frame Generation now increases AI-generated frames from three to five per rendered frame, with a new 6x mode paired with Dynamic Multi-Frame Generation [1]. This feature dynamically adjusts the number of generated frames based on scene complexity, though it still requires RTX 50-series hardware and a reasonably high base frame rate to minimize lag and rendering artifacts. The DLSS 4.5 transformer model runs on GeForce 20- and 30-series GPUs, though older cards experience a performance hit of 14 to 24 percent compared to DLSS 4.0 [1].

GPU Shortages and Memory Chip Shortages Drive Prices Skyward

The absence of new gaming GPUs at CES 2026 reflects deeper supply chain challenges affecting PC gamers. Memory chip shortages, driven by sky-high demand from AI data centers, have created serious constraints on GPU production [3]. The shortage is particularly acute for the GDDR7 memory needed for newer RTX 5060 cards [5]. It has also pushed up consumer DDR5 RAM and SSD prices, and the flagship RTX 5090 now fetches an eye-watering $4,000 at some retailers [4].

Rumors suggested Nvidia was preparing 50-series Super cards with a 50 percent RAM capacity increase thanks to a switch from 2GB to 3GB chips, but these plans appear to have been derailed by the memory shortage [1]. Given that modern-day Nvidia is primarily an AI company that sells consumer GPUs on the side, it makes sense that the company would allocate scarce RAM to more profitable AI GPUs rather than a mid-generation GeForce refresh.
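The 50 percent figure follows from how graphics memory is built: total capacity is the number of memory chips on the board times the capacity of each chip, and the chip count is set by the memory bus width (each GDDR chip has a 32-bit interface). Swapping 2GB chips for 3GB ones on the same bus therefore raises capacity by half. The sketch assumes identical chips and no clamshell (double-sided) configuration, and the bus widths are illustrative rather than the specs of any rumored Super card.

```python
def vram_gb(chip_count: int, gb_per_chip: int) -> int:
    """Total VRAM, assuming every memory chip has the same capacity."""
    return chip_count * gb_per_chip


def chips_for_bus(bus_width_bits: int) -> int:
    """Chips on a bus of this width, assuming 32-bit chips and no clamshell mode."""
    return bus_width_bits // 32


# Illustrative bus widths, not the specs of any particular card.
for bus in (128, 256):
    chips = chips_for_bus(bus)
    before, after = vram_gb(chips, 2), vram_gb(chips, 3)
    print(f"{bus}-bit bus, {chips} chips: {before} GB -> {after} GB "
          f"(+{(after - before) / before:.0%})")
```

Because the chip count stays fixed by the bus, the gain is the same 50 percent whatever the card's size; only the per-chip capacity changes.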

RTX 3060 Reintroduction Floated as Potential Solution

When Tom's Hardware directly asked Nvidia CEO Jensen Huang about easing pressure on the consumer GPU market, his response was notably non-committal [4]. Huang acknowledged the possibility of spinning up production of older generation GPUs on older process nodes, where more production capacity might be available, saying "possibly, and we could possibly, depending on which generation, we could also bring the latest generation AI technology to the previous generation GPUs" [4].

Reports suggest Nvidia may bring back the 2021 RTX 3060, which originally cost around $329, to address supply constraints [5]. The five-year-old GPU remains popular despite being phased out in 2024, though pricing for a relaunched card remains uncertain. PC gamers face a challenging landscape, as supply constraints are likely to persist for years before chipmakers can dramatically increase production on the latest process nodes to feed both the consumer and AI data center markets.

What This Means for PC Gamers

The implications extend beyond immediate hardware availability. Huang's vision includes AI-powered NPCs with their own neural networks, animated using AI to achieve emotional realism [2]. While this could reduce development times and create more polished outcomes, concerns persist about losing the human touch and creativity in game design. Upscaling and frame generation have become expected parts of the performance equation, with developers now counting DLSS as part of default system requirements [2]. For now, PC gamers must navigate a market where the very technology causing the component crisis is being positioned as its solution.

Summarized by Navi
