Nvidia upgrades Vera Rubin specs with higher power and memory to counter AMD Instinct MI455X

3 Sources

3 Sources

[1]

Nvidia reportedly boosts Vera Rubin performance to ward hyperscalers off AMD Instinct AI accelerators -- increased boost clocks and memory bandwidth pushes power demand by 500 watts to 2300 watts

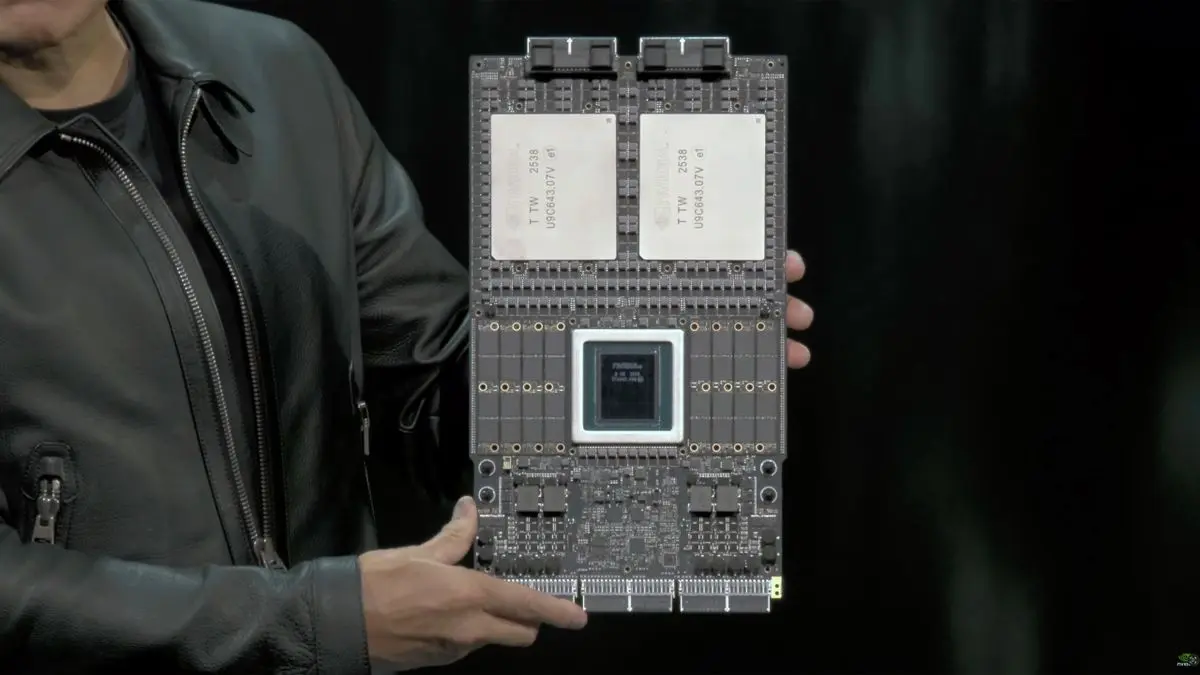

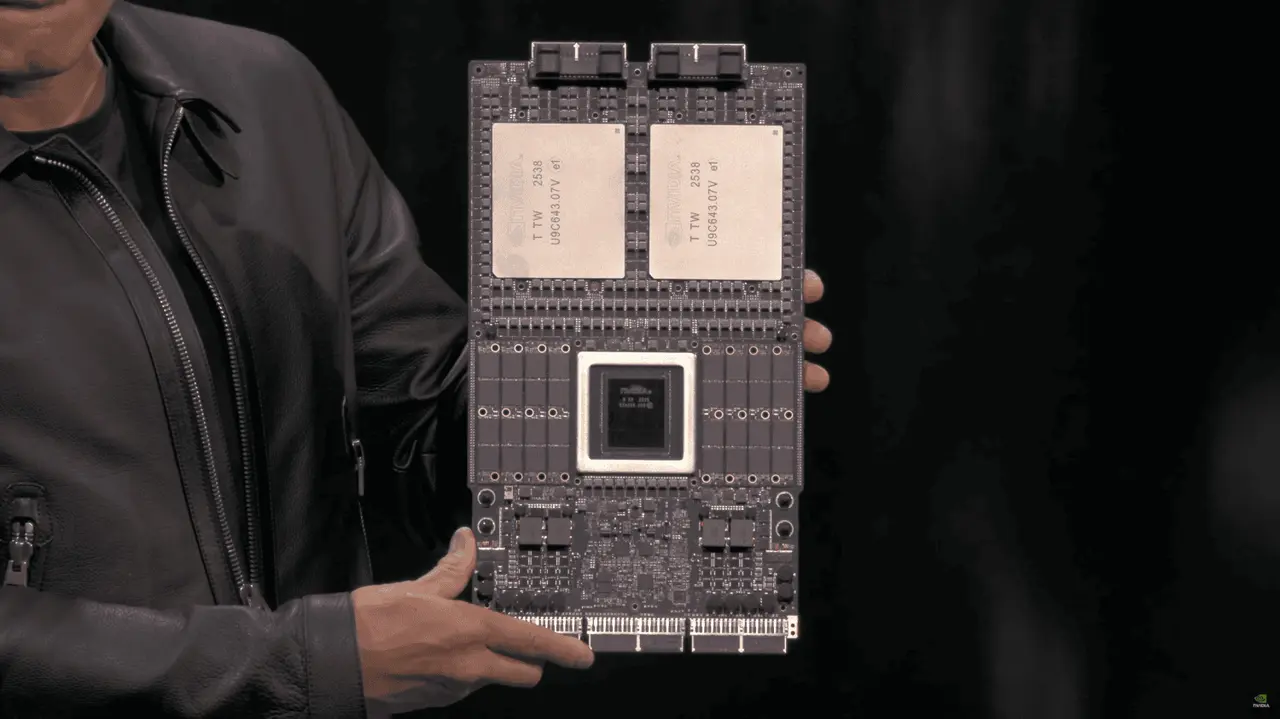

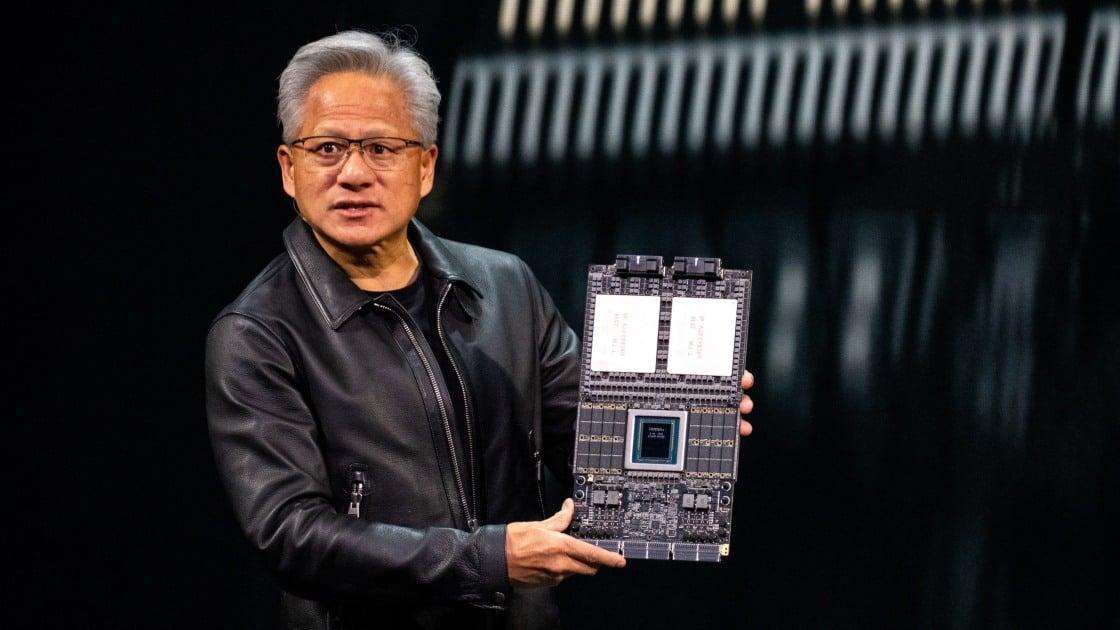

Recently, Nvidia announced that it had initiated 'full production' of its Vera Rubin platform for AI datacenters, reassuring the partners that it is on track to launch later this year and introducing ahead of its rivals, such as AMD. However, in addition to possibly bringing the release forward, Nvidia is also reportedly revamping the specifications of the Rubin GPU to offer higher performance: reports suggest a TDP increase to 2.30 kW per GPU and a memory bandwidth of 22.2 TB/s. The Rubin GPU's power rating has now been locked in at 2.3 kW, up from 1.8 kW originally announced by Nvidia, but down from 2.5 kW expected by some market observers, according to Keybanc (via @Jukan05). The intention to increase the power rating from 1.8 kW stems from the desire to ensure that this year's Rubin-based platforms are markedly faster compared to AMD's Instinct MI455X, which is projected to operate at around 1.7 kW. The information about the power budget increase for Rubin comes from an unofficial source, but it is indirectly corroborated by SemiAnalysis, which claims that Nvidia has increased the data transfer rates of HBM4 stacks, and now each Rubin GPU boasts a memory bandwidth of 22.2 TB/s, up from 13 TB/s. We have reached out to Nvidia to try to verify these claims. An additional ~500W of power headroom gives Nvidia multiple options to improve real-world performance rather than just specifications on paper. Most directly, it would enable higher sustained clocks under continuous training and inference loads, as well as reduced throttling when the AI accelerator is fully stressed. That extra power would also make it easier to keep more execution units running at the same time, which boosts throughput in heavy workloads where compute, memory, and interconnect are all under load at once. In addition to stream processors (or rather tensor units), the extra power budget could be used to run HBM4 memory and PHYs at higher clocks to improve memory bandwidth. In fact, a higher power budget would also enable Nvidia to improve the performance of all links (including memory, internal interconnects, and NVLink) to more aggressive operating points while retaining sound signal margins, which is increasingly important as modern AI systems become constrained by memory bandwidth and fabric performance. At the system level, an extra 500W TDP for AI accelerators translates into higher performance per node and per rack. Hyperscalers value higher system-level performance more than per-GPU performance per se, as fewer GPUs may be needed to complete the same job, which lowers networking load and improves cluster-level efficiency. That, of course, assumes that these hyperscalers can feed machines with a considerably higher power consumption. Last but not least, a higher TDP would also help on the manufacturing side as it enables more flexible binning and voltage headroom, which improves usable yield without the necessity to cut down the number of execution units or lower clocks. As a result, the extra 500W functions not only as a way to improve the performance of Rubin GPUs and the competitive position of VR200 NVL144 rack-scale solutions, but also acts as reliability headroom that ensures that the GPU can deliver predictable, sustained throughput in large-scale datacenter deployments rather than just offer higher peak numbers on paper. As a bonus, Nvidia can potentially supply more Rubin GPUs to the market, which is good for its bottom line. Follow Tom's Hardware on Google News, or add us as a preferred source, to get our latest news, analysis, & reviews in your feeds.

[2]

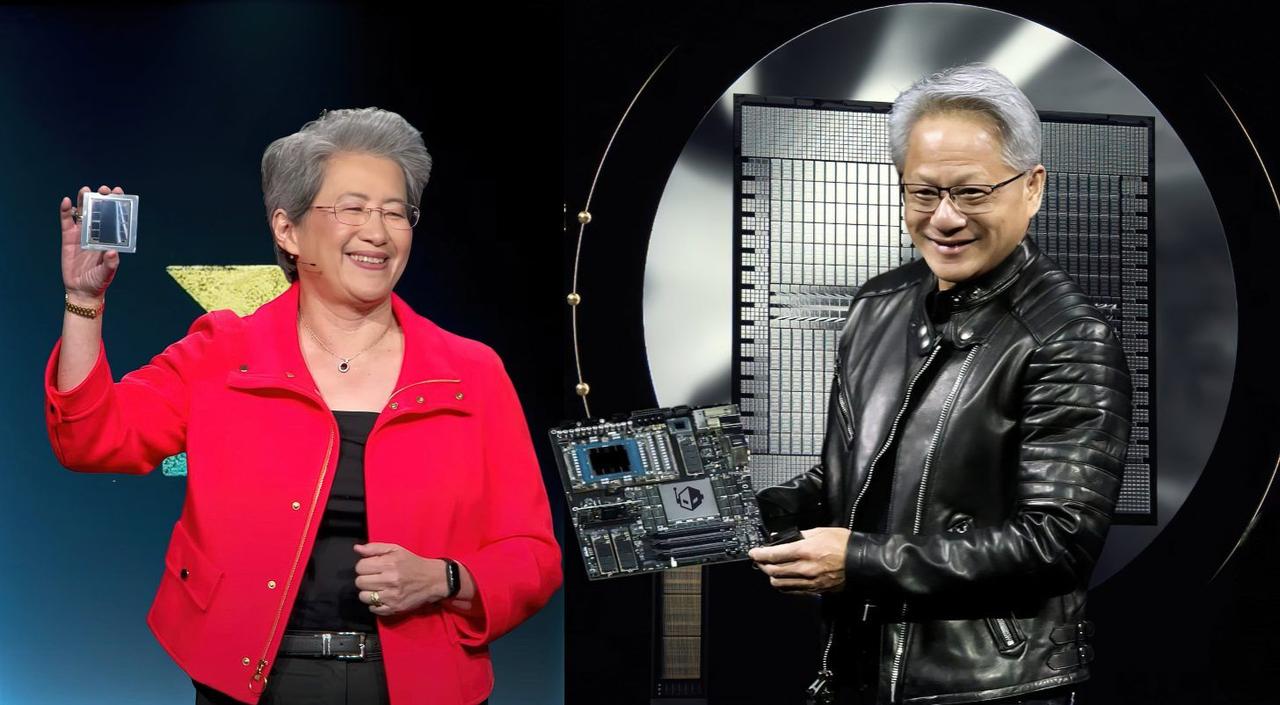

NVIDIA upgrades Vera Rubin HBM4 bandwidth by 10% in order to stay ahead of AMD Instinct MI455X

TL;DR: NVIDIA updated its Vera Rubin NVL72 AI server at CES 2026, boosting HBM4 memory bandwidth by 10% to 22.2TB/sec, surpassing AMD's Instinct MI455X. This enhancement, driven by competitive pressure, leverages faster 8-Hi HBM4 stacks to deliver superior AI acceleration performance. NVIDIA revised its Vera Rubin VR200 NVL72 AI server spec at CES 2026 a couple of weeks ago, increasing the HBM4 memory bandwidth by 10% to ensure it beats AMD's upcoming Instinct MI455X AI accelerator. In a new post on X from @SemiAnalysis, the NVIDIA Vera Rubin NVL72 AI server specifications now see HBM4 memory bandwidth at 22.2TB/sec, which is a 10% increase from the specs disclosed by NVIDIA at GTC 2025 last year. The original 13TB/sec of memory bandwidth from the HBM4 memory was impressive from the original specs unveiled for Vera Rubin NVL144 last year, with NVIDIA originally asking for 9Gbps bandwidth per pin from HBM4, and then a faster 10-11Gbps... but why is that? Why did NVIDIA upgrade the HBM4 bandwidth on Vera Rubin? NVIDIA is using 8-Hi HBM4 stacks for Vera Rubin, which is why it has been pushing for HBM4 specifications to exceed JEDEC's rating, asking HBM makers to increase pin speeds to 11Gbps. On the other hand, AMD is using 12-Hi HBM4 stacks, resulting in 19.6TB/sec of memory bandwidth... NVIDIA needed that 10% higher HBM4 bandwidth, now at 22.2TB/sec for its upcoming Vera Rubin NVL72 AI servers.

[3]

NVIDIA Is Feeling the Heat From AMD's Instinct MI455X AI Chips, Triggering Unusual Vera Rubin Upgrades to Hold Its Competitive Edge

NVIDIA's Vera Rubin AI chips have seen significant upgrades in key specifications over time, as Team Green aims to maintain its competitive lead over AMD's MI455X platform. NVIDIA Plans to Ramp Up Vera Rubin Memory Bandwidth By Bumping HBM4 Specs, Taking a Lead Over AMD's MI455X The Vera Rubin platform from NVIDIA is one of the company's most highly anticipated releases in the AI infrastructure race, driven by the tremendous upgrades Team Green has brought in within the architecture. We are looking at entirely revamped GPUs, CPUs, networking chips, along with other elements that shape the architecture to be powerful for agentic and inference workloads. However, according to recent reports, NVIDIA has restructured certain Vera Rubin elements, and this move is claimed to be a response to AMD's pursuit of the Instinct MI455X AI chips. According to SemiAnalysis, NVIDIA's Vera Rubin NVL72 specifications will now feature a memory bandwidth of 22.2 TB/s, a massive increase from the initial specifications disclosed at GTC 2025. This sudden jump is an indication that NVIDIA plans to maintain its lead in hyperscaler adoption and, more importantly, that agentic AI systems taking over the lead this year have made higher memory bandwidth onboard an essential requirement, prompting NVIDIA to be aggressive with its Rubin specifications. If you are curious about how NVIDIA has progressed from 13TB/s to almost 22.2TB/s of memory bandwidth, well, the company has been pursuing HBM4 specifications that exceed the standard JEDEC rating, reportedly asking suppliers to raise pin speeds up to 11 Gbps. Compared to AMD, NVIDIA also employs a narrower 8-stack interface, which is why overclocking pin speeds is the way to lead memory bandwidth. AMD had maintained the lead early on by focusing on 12-Hi HBM4 stacks, which yielded a 19.6TB/s figure. AMD has shown immense confidence towards its Instinct MI400 series in the past as well, and it appears that Team Red is expecting to provide hyperscalers with a much competitive option this time. It would be interesting to see whether we could witness a shift in market shares once Rubin and MI455X become mainstream. Follow Wccftech on Google to get more of our news coverage in your feeds.

Share

Share

Copy Link

Nvidia has significantly upgraded its Vera Rubin AI accelerator specifications in response to competitive pressure from AMD's Instinct MI455X. The chip now features 22.2TB/s memory bandwidth—a 10% increase—and a 2.3kW TDP, up from the originally announced 1.8kW. These Vera Rubin upgrades aim to secure hyperscaler adoption and maintain Nvidia's dominance in AI datacenter infrastructure.

Nvidia Vera Rubin Gets Major Specification Boost

Nvidia has revised the specifications of its Nvidia Vera Rubin platform ahead of its 2025 launch, introducing substantial performance enhancements that directly respond to competitive threats from AMD Instinct MI455X AI accelerators

1

. The changes include a power consumption increase to 2.3kW per GPU, up from the originally announced 1.8kW, and increased memory bandwidth that pushes HBM4 memory bandwidth to 22.2TB/s2

. According to reports from Keybanc and corroborated by SemiAnalysis, these Vera Rubin upgrades were unveiled at CES 2026 and represent a strategic move to maintain Nvidia's competitive edge in the AI infrastructure market3

.

Source: Tom's Hardware

Increased Memory Bandwidth Addresses AMD Competition

The most striking enhancement involves HBM4 specifications that now deliver 22.2TB/s of memory bandwidth per GPU, representing a dramatic increase from the 13TB/s initially disclosed at GTC 2025

2

. This 10% boost in the VR200 NVL72 configuration stems from Nvidia pushing HBM4 pin speeds beyond standard JEDEC ratings, reportedly requesting suppliers to achieve 11Gbps per pin3

. The company employs 8-Hi HBM4 stacks, necessitating higher clock speeds to compete with AMD's approach of using 12-Hi HBM4 stacks that deliver 19.6TB/s on the Instinct MI455X platform2

. This architectural decision reflects the growing importance of memory bandwidth in agentic AI systems and inference workloads that dominate current datacenter deployments3

.

Source: Wccftech

Power Consumption Jump Enables Performance Gains

The TDP increase to 2.3kW, adding approximately 500W of thermal headroom, provides Nvidia multiple avenues to improve AI acceleration performance beyond paper specifications

1

. This additional power budget enables higher sustained clock speeds under continuous training and inference loads, reducing throttling when AI accelerators face full stress1

. The extra wattage also allows more execution units to run simultaneously, boosting throughput in heavy workloads where compute, memory, and NVLink interconnects operate under maximum load. Importantly, the higher power envelope improves the performance of HBM4 memory and PHYs at elevated operating points while maintaining signal integrity—critical as modern AI systems become increasingly constrained by memory bandwidth and fabric performance1

.Related Stories

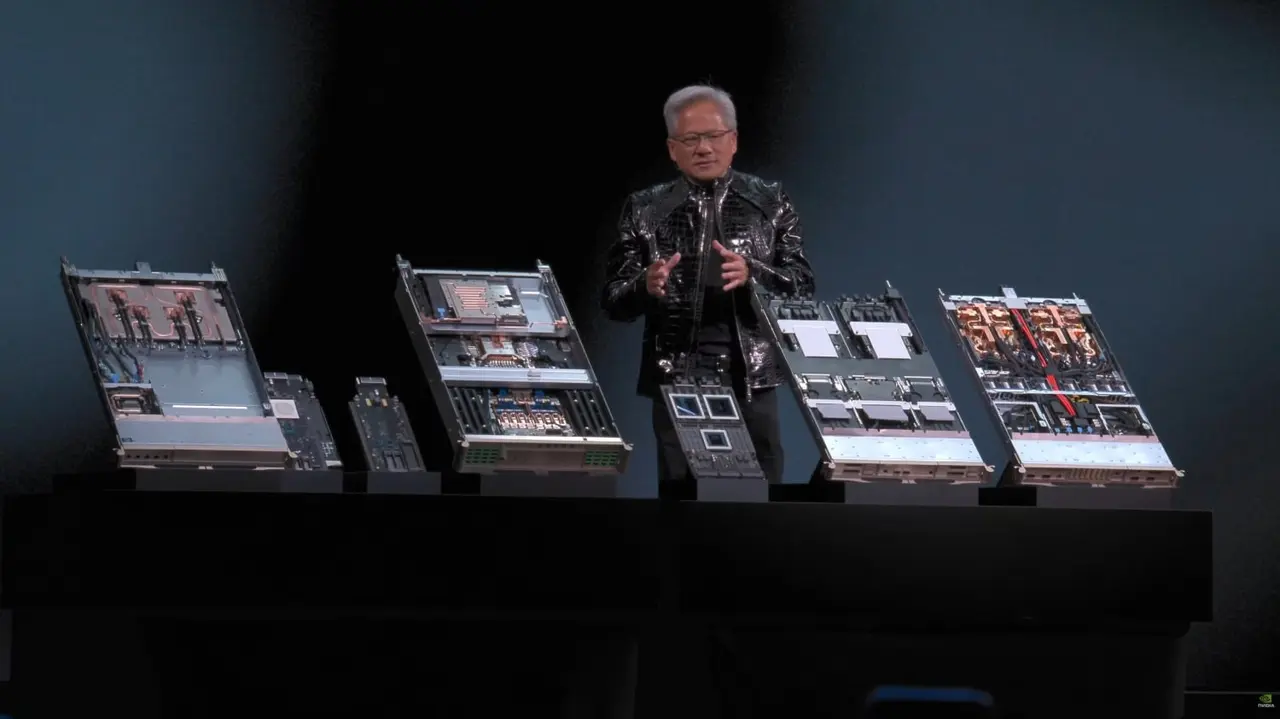

Strategic Implications for Hyperscalers

The specification changes target hyperscalers specifically, as these customers prioritize system-level performance over per-GPU metrics

1

. Higher performance per node and per rack means fewer GPUs may be needed to complete identical workloads, reducing networking load and improving cluster-level efficiency—assuming datacenter infrastructure can accommodate the elevated power demands. The increased TDP also provides manufacturing advantages through more flexible binning and voltage headroom, improving usable yield without requiring cuts to execution units or reduced clock speeds1

. AMD has demonstrated considerable confidence in its Instinct MI400 series, with the MI455X projected to operate at approximately 1.7kW, making it a formidable alternative for hyperscalers seeking competitive options1

3

. Market observers will watch closely to see whether these aggressive upgrades maintain Nvidia's market share dominance or if AMD gains ground when both platforms reach full production later this year.

Source: TweakTown

References

Summarized by

Navi

[2]

Related Stories

NVIDIA Unveils Next-Gen AI Powerhouses: Rubin and Rubin Ultra GPUs with Vera CPUs

19 Mar 2025•Technology

NVIDIA's Next-Generation Rubin AI GPUs Enter Production with HBM4 Memory Samples

09 Nov 2025•Technology

Nvidia Unveils Vera Rubin Superchip: Six-Trillion Transistor AI Platform Set for 2026 Production

29 Oct 2025•Technology

Recent Highlights

1

ByteDance's Seedance 2.0 AI video generator triggers copyright infringement battle with Hollywood

Policy and Regulation

2

Demis Hassabis predicts AGI in 5-8 years, sees new golden era transforming medicine and science

Technology

3

Nvidia and Meta forge massive chip deal as computing power demands reshape AI infrastructure

Technology