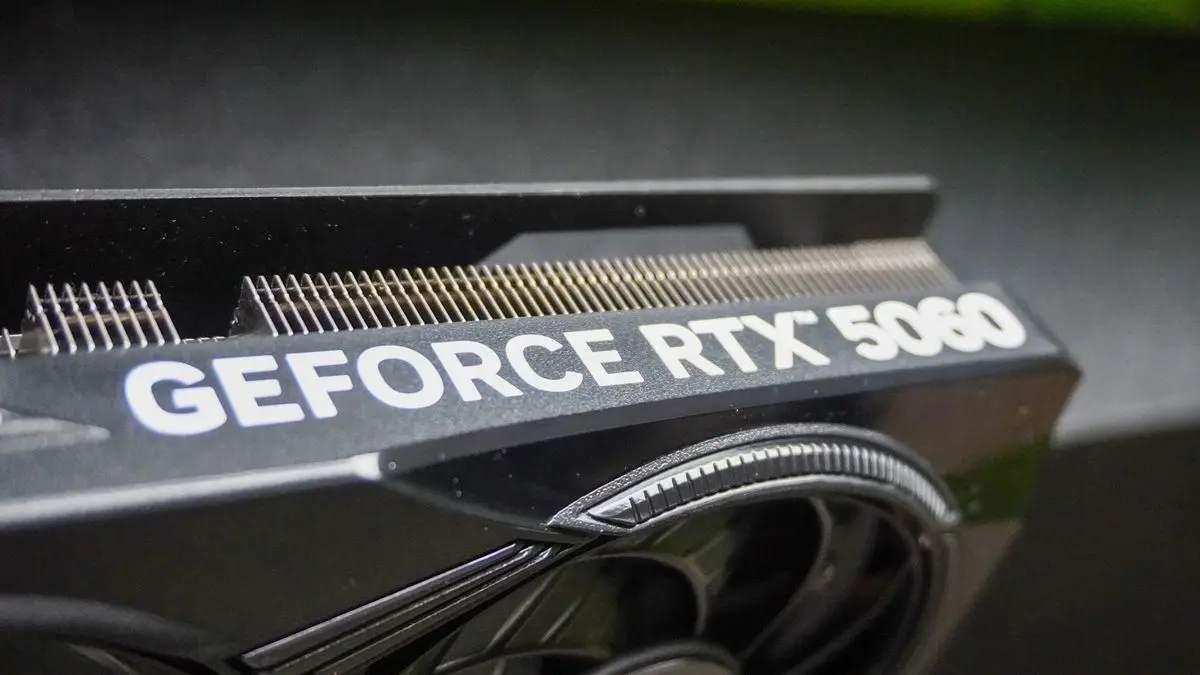

Nvidia's AI Texture Compression: A Potential Game-Changer for 8GB GPUs

2 Sources

[1]

Nvidia wants to make 8GB GPUs great again with AI texture compression -- but I'm not convinced

If you're annoyed at getting just 8GB of video memory (VRAM) on your Nvidia RTX 5060 Ti, RTX 5060 or RTX 5050 GPU, there may be a fix coming. And just like a lot of Team Green's work, it's all about AI. In 2025, when plenty of games require more than 8GB from the jump, that's simply not enough (and PC gamers are letting Nvidia and AMD know with their wallets). Which is why Nvidia is turning to neural trickery -- its bread and butter, with the likes of DLSS 4 and multi-frame gen.

You may already know of Neural Texture Compression (or NTC), which does exactly what it says on the tin: it takes detailed in-game textures and compresses them for more efficient loading and better frame rates. As Wccftech reports, NTC has seemingly taken another giant step forward by taking advantage of Microsoft's new Cooperative Vectors in DirectX Raytracing 1.2, with one test showing an up-to-90% reduction in VRAM consumption for textures. To someone who always wants to make sure people get the best PC gaming bang for their buck, this sounds amazing. But I'm a little wary, for three key reasons.

As you can see in tests run by Osvaldo Pinali Doederlein on X (using a prerelease driver), this update, which uses AI to make the texture-loading pipeline more efficient, is significant. Texture size dropped from 79 MB all the way to just 9 MB, cutting VRAM consumption by nearly 90%.

Just like DLSS 4 and the other technologies that extract higher frame rates and better graphical fidelity from RTX 50-series GPUs, NTC requires developers to code it in. And while Nvidia is one of the better companies in terms of game support for its AI magic (over 125 games support DLSS 4 so far), that's still a relatively small number when you think of the many thousands of PC titles that launch every year.

This is not a burn on Doederlein, of course. This testing is great! But it is only one example, and it doesn't take into account the broader challenges a real game faces: a test scene of a mask with several different textures isn't the same as rendering an entire level. So while this near-90% figure is impressive, I expect the average to be much lower once NTC is put to a far bigger challenge. Still, when it comes to 8GB GPUs, every little bit helps!

So yes, on paper, Nvidia's NTC could be the savior of 8GB GPUs, extracting more value from your budget graphics card. But let's address the elephant in the room: graphics cards with this little video memory have been around for years, games in 2025 have proven that it's not enough, and neural texture compression looks to me like a sticking plaster.

I don't want to ignore the benefits here, though, because any chance to make budget tech even better through software and AI is always going to be a big win for me. But with developers' ever-increasing demands (especially with Unreal Engine 5 bringing ever-more demanding visual masterpieces like The Witcher 4 to the fore), how far can AI compression really go?

[2]

Got buyer's remorse with your 8GB graphics card? Nvidia's AI texture compression promises huge benefits for GPUs with stingy amounts of memory

Testing with a demo showed almost a 90% reduction in video RAM footprint for textures, but that won't apply in all scenarios (by any means).

Worried your Nvidia graphics card may be underpowered in terms of the video RAM (VRAM) it has on board? You may be aware that Team Green is working on tech to mitigate such concerns, and it appears to be taking considerable strides forward in that regard.

Wccftech reports that Nvidia's RTX Neural Texture Compression (or NTC) has got a big boost thanks to Microsoft's new Cooperative Vectors in DirectX Raytracing 1.2. Does that sound like a load of techie nonsense? Well, yes, and if you dare to look at the in-depth explanation of what's afoot here, you'll likely be very much lost in a land of confusion - see the post on X from Osvaldo Pinali Doederlein, who tested the feature with a prerelease graphics driver.

So, in short, Nvidia's texture compression trickery, which makes textures smaller so that more of them fit in a modest helping of VRAM, now works much more efficiently thanks to Cooperative Vectors. As Doederlein observes, in the demo he ran as a test, NTC cut the VRAM footprint of textures by almost 90%, which is a massive deal - if, and this is a key caveat, the game supports NTC (developers must code it in, as is the case with Nvidia DLSS, of course).

Given that textures generally occupy half the VRAM in any given game session (or a good deal more), the benefits should translate to a sizable win in games where fetching textures from outside video memory becomes a chore that slows everything down. NTC uses AI (neural networks, hence the name: Neural Texture Compression) to intelligently compress graphics textures - in theory without much noticeable difference from native textures when they're decompressed for display - and, as mentioned, Microsoft's Cooperative Vectors in DXR 1.2 make this process more efficient.
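To make the idea a little more concrete, here is a toy Python sketch of the general approach explored in neural texture compression research. It is not Nvidia's implementation, and the class and parameter names (`ToyNeuralTexture`, `grid_size`, `latent_dim`, `hidden_dim`) are hypothetical. Instead of storing every texel, the sketch keeps a coarse grid of small latent vectors plus the weights of a tiny neural network, and it rebuilds a texel on demand by running the matching latent vector through that network. Each layer is a small matrix-vector multiply. As we understand it, that is the kind of operation DirectX Cooperative Vectors is meant to let shaders run on dedicated hardware. The weights here are random rather than trained, so the decoded values are meaningless; the point is the data flow and the storage count.

```python
import random


def matvec(weights, bias, x):
    """Dense layer: returns W @ x + b using plain Python lists."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(values):
    """Elementwise ReLU activation."""
    return [max(0.0, v) for v in values]


class ToyNeuralTexture:
    """Hypothetical toy codec: a coarse latent grid plus a two-layer MLP decoder.

    Weights are random, not trained, so decoded values are placeholders.
    """

    def __init__(self, grid_size, latent_dim, hidden_dim, channels, seed=0):
        rng = random.Random(seed)

        def rand_matrix(rows, cols):
            return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

        self.grid_size = grid_size
        # One latent vector per grid cell; a real codec would learn these.
        self.latents = [rand_matrix(grid_size, latent_dim) for _ in range(grid_size)]
        self.w1, self.b1 = rand_matrix(hidden_dim, latent_dim), [0.0] * hidden_dim
        self.w2, self.b2 = rand_matrix(channels, hidden_dim), [0.0] * channels

    def decode_texel(self, u, v):
        """Reconstruct one texel at texture coordinates (u, v) in [0, 1]."""
        # Nearest-neighbour lookup of the latent cell covering (u, v).
        row = min(int(v * self.grid_size), self.grid_size - 1)
        col = min(int(u * self.grid_size), self.grid_size - 1)
        hidden = relu(matvec(self.w1, self.b1, self.latents[row][col]))
        return matvec(self.w2, self.b2, hidden)  # one value per channel, e.g. RGBA

    def stored_values(self):
        """Count of numbers kept in memory (latents plus network weights)."""
        latent_count = sum(len(vec) for grid_row in self.latents for vec in grid_row)
        weight_count = sum(len(r) for r in self.w1) + len(self.b1)
        weight_count += sum(len(r) for r in self.w2) + len(self.b2)
        return latent_count + weight_count


tex = ToyNeuralTexture(grid_size=32, latent_dim=8, hidden_dim=16, channels=4)
texel = tex.decode_texel(0.5, 0.25)
print(len(texel), tex.stored_values(), 256 * 256 * 4)  # prints: 4 8404 262144
```

With a 32 x 32 grid of 8-value latents and a 16-unit hidden layer, the toy keeps 8,404 numbers in place of the 262,144 in a raw 256 x 256 RGBA texture. A real codec would train the latents and weights against the original texture (and typically quantize them), and its image quality and decode cost depend on details this sketch leaves out.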
Quite a lot more efficient, in fact, and I suspect Nvidia had an eye on how this kind of technology would progress when Team Green decided to ship some current-gen Blackwell graphics cards with just 8GB of video RAM. That has been a very unpopular decision in some quarters, so perhaps Nvidia is counting - and counting heavily - on NTC to make a major difference in future-proofing some of those GPUs (such as the RTX 5060 Ti 8GB and the vanilla RTX 5060; the RTX 5050 has 8GB too, but that memory configuration is more understandable on a budget graphics card).

That said, we've got to be careful about treating early results from a single demo as a reason to celebrate, as promising as they may seem. The (close to) 90% reduction won't hold in every scenario, you can bank on that, but even more modest savings (to the tune of, say, 50%) would still be great news.

The other point to bear in mind is that NTC could cost some frame rate, at least in certain scenarios. Hopefully, any such frame rate drops (or effects on image quality, for that matter) would be mild, and worth the trade-off of easing the load on VRAM (otherwise, what would be the point?). Also, while I'm making more cautious noises here, textures aren't everything in terms of what's packed into VRAM during a game session, as already noted, so broader concerns about the future of 8GB graphics cards remain.

Still, NTC could be a key piece of the puzzle for Nvidia, and these test results make that look more likely. As you might guess, this tech is for Team Green's graphics cards, and only the more modern ones at that. (While in theory NTC can run on rival GPUs, such as AMD's Radeon boards, realistically Nvidia recommends only its RTX 4000 or 5000 series cards, as they have the necessary oomph and hardware to run it.)
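The caveat that textures aren't everything can be put into numbers. Because NTC shrinks only the texture portion of VRAM use, the overall saving is roughly the texture share multiplied by the texture reduction. The sketch below assumes a hypothetical game that wants 10 GB of VRAM, half of it textures (the rough share cited above). The 10 GB budget and the `vram_after_ntc` helper are illustrative assumptions, not measurements.

```python
def vram_after_ntc(total_gb, texture_share, texture_reduction):
    """Estimate total VRAM use when only the texture portion is compressed.

    texture_share: fraction of total VRAM taken by textures (0 to 1).
    texture_reduction: fractional shrink of texture memory (0.9 means 90% smaller).
    """
    for name, value in (("texture_share", texture_share),
                        ("texture_reduction", texture_reduction)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    texture_gb = total_gb * texture_share
    other_gb = total_gb - texture_gb  # buffers, geometry, etc. are left untouched
    return other_gb + texture_gb * (1.0 - texture_reduction)


CARD_GB = 8.0
DEMAND_GB = 10.0  # hypothetical game budget, not a measured figure
for reduction in (0.9, 0.5):
    after = vram_after_ntc(DEMAND_GB, texture_share=0.5, texture_reduction=reduction)
    verdict = "fits" if after <= CARD_GB else "does not fit"
    print(f"{reduction:.0%} texture saving -> {after:.1f} GB ({verdict} in {CARD_GB:.0f} GB)")
```

With textures at half the budget, a 90% texture saving trims total use by 45% (10 GB down to 5.5 GB), while a 50% saving trims it by 25% (down to 7.5 GB). If textures took a smaller share, the overall benefit would shrink in proportion, which is why the non-texture part of VRAM still matters for 8GB cards.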
So, where does that leave AMD's and Intel's products? Those GPU makers have their own neural compression tech on the boil, and the good news is that they'll also benefit from DirectX Cooperative Vectors (everyone will). Right now, though, Nvidia has the lead in this department, and we're still a fair way from seeing this tech realized. As noted, game developers need to support it in their titles; this isn't a driver-level feature that can be applied across all games. (Well, not unless Nvidia is planning a watered-down version at a later date, a sort of equivalent of what Smooth Motion is to DLSS.) In short, Nvidia has some persuading to do, much as with DLSS, because if developers don't adopt NTC, it's a non-starter. The selling points, however, look seriously compelling.

Nvidia's Neural Texture Compression (NTC) technology promises significant VRAM savings for 8GB GPUs, but its real-world impact and adoption remain uncertain.

Nvidia's Neural Texture Compression: A Potential Solution for 8GB GPUs

Nvidia is making strides in addressing the limitations of 8GB GPUs with its Neural Texture Compression (NTC) technology. As games in 2025 and beyond demand more video memory, Nvidia is turning to AI-driven solutions to extend the viability of graphics cards with less VRAM [1].

The Promise of Neural Texture Compression

Source: Tom's Guide

NTC, which compresses in-game textures for more efficient loading and better frame rates, has reportedly taken a significant leap forward. With NTC now leveraging Microsoft's new Cooperative Vectors in DirectX Raytracing 1.2, an early test showed VRAM consumption for textures falling by nearly 90% [2].

Technical Advancements and Potential Impact

Osvaldo Pinali Doederlein's tests using a prerelease driver showed texture size in a demo scene falling from 79 MB to just 9 MB. A reduction of this scale could breathe new life into 8GB GPUs like the RTX 5060 Ti, RTX 5060, and RTX 5050 [1].
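For anyone checking the arithmetic behind "nearly 90%", a quick Python calculation converts Doederlein's reported 79 MB and 9 MB figures into a percentage saving and a compression ratio. The `percent_reduction` helper is just an illustrative name, not part of any NTC tooling.

```python
def percent_reduction(before_mb, after_mb):
    """Percentage by which a size shrank, e.g. 100 -> 25 gives 75.0."""
    if before_mb <= 0:
        raise ValueError("before_mb must be positive")
    return (before_mb - after_mb) / before_mb * 100.0


before, after = 79, 9  # MB, texture sizes reported for the demo scene
print(f"{percent_reduction(before, after):.1f}% smaller, {before / after:.1f}x ratio")
# prints: 88.6% smaller, 8.8x ratio
```

So "nearly 90%" holds up: the demo's textures occupy roughly a ninth of their original space, though this is one scene rather than a whole game.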

Challenges and Limitations

Despite the promising results, several challenges remain:

- Developer adoption: Like DLSS 4, NTC requires developers to implement the technology in their games [1].
- Real-world performance: The near-90% reduction observed in a controlled test may not translate directly to complex game environments [1].
- Broader applicability: While textures occupy a significant portion of VRAM, they are not the only consideration in a game's memory usage [2].

Industry Implications

Nvidia's focus on NTC could be seen as a strategic move to justify keeping 8GB of VRAM on some of its current-generation GPUs. This technology might play a crucial role in future-proofing these more affordable graphics cards [2].

Competition and Compatibility

While NTC is primarily designed for Nvidia's RTX 4000 and 5000 series GPUs, the underlying DirectX improvements could benefit other manufacturers as well. AMD and Intel are reportedly working on their own neural compression technologies, although Nvidia currently leads in this area [2].

The Road Ahead

Source: TechRadar

The success of NTC will largely depend on its adoption by game developers and its real-world performance across various gaming scenarios. If successful, it could significantly extend the lifespan of 8GB GPUs and potentially influence future GPU design decisions in the industry [2].

As the gaming industry continues to push graphical boundaries with technologies like Unreal Engine 5, the effectiveness of AI-driven compression techniques like NTC will be crucial in determining the longevity of more affordable GPU options in the market [1].

Summarized by Navi
