NVIDIA Unveils Blackwell Ultra GB300: A Leap Forward in AI Accelerator Technology

4 Sources

4 Sources

[1]

NVIDIA GB300 Blackwell Ultra -- Dual-Chip GPU with 20,480 CUDA Cores

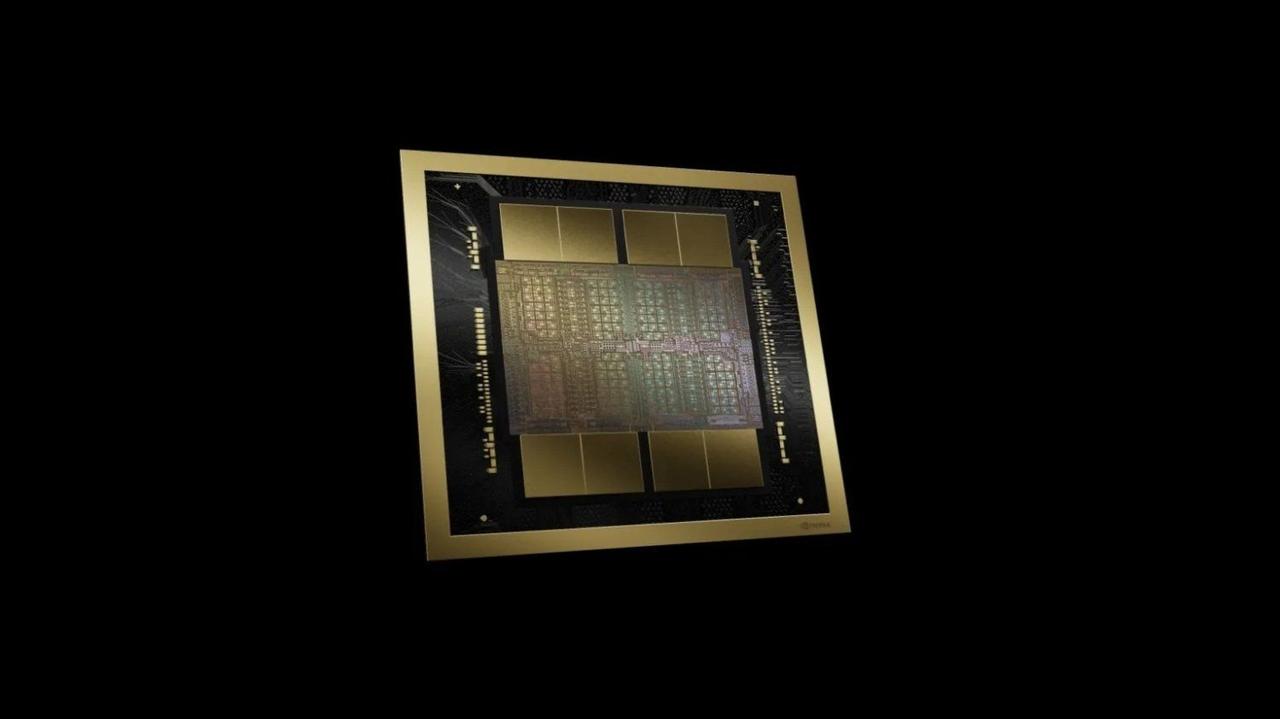

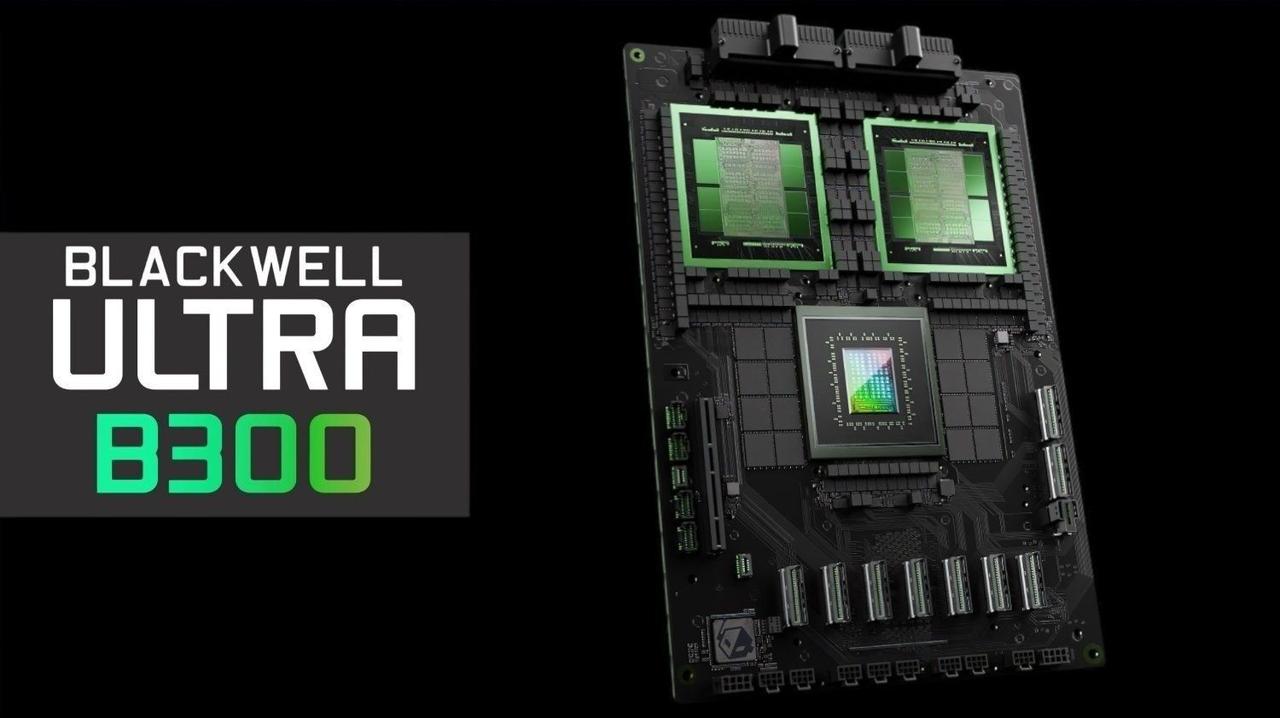

NVIDIA has revealed the GB300 Blackwell Ultra, a massive step forward in its AI accelerator lineup. This chip builds on the already powerful GB200 but increases compute resources, memory size, and communication speed. It is designed to handle the largest AI models and scientific simulations, with more performance headroom than any NVIDIA GPU before it. The GB300 is built using a dual-chip approach. Instead of a single large die, it combines two silicon chips that together pack 208 billion transistors. These are produced on TSMC's 4NP process and linked by NVIDIA's NV-HBI technology, which provides 10 TB/s of bandwidth between them. This connection allows the two chips to function as one unified GPU, simplifying programming and maximizing throughput. Inside, the GPU is structured into 160 streaming multiprocessors. Each multiprocessor carries 128 CUDA cores, adding up to 20,480 cores in total. The architecture also comes with fifth-generation Tensor Cores, which accelerate matrix math for AI training and inference. These support multiple precision modes, such as FP8 and FP6, but also a new NVFP4 format. NVFP4 uses less memory than FP8 while keeping comparable accuracy, which can be crucial when training or deploying very large models. On the memory side, NVIDIA has equipped the GB300 with eight HBM3E stacks. Each stack uses a 12-layer design, and together they provide 288 GB of memory directly on the GPU package. Bandwidth reaches 8 TB/s over an 8192-bit bus, spread across 16 memory channels. This enormous bandwidth helps keep thousands of cores busy and ensures that models can stay in GPU memory without constantly swapping data to external storage. The GPU also has 40 MB of Tensor memory distributed across its multiprocessors, which speeds up frequent AI workloads. The performance boost comes at the cost of power. The GB300's thermal graphics power (TGP) reaches 1400 W, far above most consumer GPUs and even higher than the GB200. This makes cooling and power delivery a serious engineering challenge, but it also shows how far NVIDIA is pushing performance for datacenter and supercomputing customers. Connectivity is another major part of the design. GPU-to-GPU links use NVLink 5, which can deliver 1.8 TB/s of bidirectional bandwidth per accelerator. For CPU connections, NVIDIA relies on NVLink-C2C, a protocol that links directly with Grace CPUs at 900 GB/s and allows them to share a single memory space. In addition, the accelerator now supports PCIe 6.0 x16, doubling throughput over PCIe 5.0 and giving 256 GB/s between the GPU and host systems. These accelerators are designed to scale out in clusters. NVIDIA is offering the GB300 NVL72 rack system, which fits 72 GPUs and 72 Grace Superchip CPUs. Together, this setup provides 20.7 TB of HBM3E memory and 576 TB/s of combined bandwidth, enough for training extremely large AI models or running advanced scientific workloads that need both CPU and GPU performance. One of the more interesting features is the new NVFP4 data format. It reduces memory usage by almost half compared to FP8 while still delivering a similar level of accuracy. With AI models growing to trillions of parameters, memory usage is often a bottleneck. A format like NVFP4 helps fit larger models into available GPU memory, speeding up training and reducing costs. Feature NVIDIA GB200 NVIDIA GB300 Blackwell Ultra Process Node TSMC 4NP TSMC 4NP Architecture Blackwell Blackwell Ultra Transistors ~208 billion (dual-die) 208 billion (dual-die) Streaming Multiprocessors (SMs) 144 SMs 160 SMs CUDA Cores per SM 128 128 Total CUDA Cores 18,432 20,480 Tensor Cores 5th Gen, FP8 / FP6 5th Gen, FP8 / FP6 / NVFP4 Tensor Memory per SM 256 KB 256 KB Total Tensor Memory 36 MB 40 MB HBM3E Memory 192 GB (6 stacks) 288 GB (8 stacks) Memory Interface 6144-bit (12 × 512-bit channels) 8192-bit (16 × 512-bit channels) Memory Bandwidth 6.4 TB/s 8 TB/s Power (TGP) 1200 W 1400 W GPU-to-GPU Interconnect NVLink 5 -- 1.8 TB/s NVLink 5 -- 1.8 TB/s CPU Interconnect NVLink-C2C -- 900 GB/s NVLink-C2C -- 900 GB/s PCIe Interface PCIe 5.0 x16 (128 GB/s) PCIe 6.0 x16 (256 GB/s) Rack System NVL72 (72 GPUs + 72 Grace CPUs) NVL72 (72 GPUs + 72 Grace CPUs) Total Rack Memory 13.8 TB HBM3E 20.7 TB HBM3E Rack Bandwidth 432 TB/s 576 TB/s Source: nvidia

[2]

NVIDIA details Blackwell Ultra GB300: dual-die design, 208B transistors, up to 288GB HBM3E

TL;DR: NVIDIA's Blackwell Ultra GB300 GPU, unveiled at Hot Chips 2025, delivers 50% faster AI performance than its predecessor with 20,480 CUDA cores, 5th Gen Tensor Cores, and up to 288GB HBM3E memory. It supports multi-trillion-parameter models, enhanced compute efficiency, and advanced AI workloads in scalable systems. NVIDIA has quite a lot of things to detail and announce at Hot Chips 2025, with one of them being more details on its new Blackwell Ultra GB300 GPU, the fastest AI chip the company has ever made, and it's 50% faster than GB200. The new entry into the Blackwell AI GPU family before its next-gen Rubin AI chips debut in 2026, the new Blackwell Ultra GB300 features two Reticle-sized Blackwell GPU dies, connecting them through NVIDIA's in-house NV-HBI high-bandwidth interface, making them appear as a single GPU. The Blackwell Ultra GPU is made on the TSMC N4P process node (which is an optimized 5nm node for NVIDIA) with 208 billion transistors in total, beating out the 185 billion transistors in AMD's new flagship Instinct MI355X AI accelerator. The NV-HBI interface on Blackwell Ultra GB300 has 10TB/sec of bandwidth for the two GPU dies, while functioning as a single chip. NVIDIA's new Blackwell Ultra GB300 GPU features 160 SMs in total, each containing 128 CUDA cores for a total of 20,480 CUDA cores, with 5th Gen Tensors Cores with FP8, FP6, NVFP4 precision compute, 256KB of Tensor memory (TMEM) and SFUs. All of the AI goodness happens inside of those 5th Gen Tensor Cores, with NVIDIA injecting huge innovations throughout each generation of Tensor Cores, and the 5th Gen Tensor Cores are no different. Here's how it has been over the years with GPU architectures and Tensor Core generations: * NVIDIA Volta: 8-thread MMA units, FP16 with FP32 accumulation for training. * NVIDIA Ampere: Full warp-wide MMA, BF16, and TensorFloat-32 formats. * NVIDIA Hopper: Warp-group MMA across 128 threads, Transformer Engine with FP8 support. * NVIDIA Blackwell: 2nd Gen Transformer Engine with FP8, FP6, NVFP4 compute, TMEM Memory. NVIDIA also has a huge HBM capacity increased on Blackwell Ultra GB300, with up to 288GB HBM3E per GPU compared to 192GB on GB200. GB300 opens the door to NVIDIA supporting multi-trillion-parameter AI models, with the HBM3E arriving in 8-Hi stack with 16 512-bit memory controller (8192-bit interface) with 8TB/sec of memory bandwidth per GPU. GB300 with 288GB of HBM is a 3.6x increase over the 80GB on H100, and a 50% increase in HBM over the GB200. This allows for: * Complete model residence: 300B+ parameter models without memory offloading. * Extended context lengths: Larger KV cache capacity for transformer models. * Improved compute efficiency: Higher compute-to-memory ratios for diverse workloads. These enhancements aren't just about raw FLOPS. The new Tensor Cores are tightly integrated with 256 KB of Tensor Memory (TMEM) per SM, optimized to keep data close to the compute units. They also support dual-thread-block MMA, where paired SMs cooperate on a single MMA operation, sharing operands and reducing redundant memory traffic. The result is higher sustained throughput, better memory efficiency, and faster large-batch pre-training, reinforcement learning for post-training, and low-batch, high-interactivity inference.

[3]

Nvidia unveils Blackwell Ultra GB300 with 20,480 CUDA cores

The GB300 doubles the memory of the GB200, boosts streaming multiprocessors, and increases power draw to 1,400W for enhanced AI performance. Nvidia has revealed the specifications of the Blackwell Ultra GB300, its forthcoming professional AI accelerator. The GB300 is designed as a replacement for the existing GB200 and features enhancements in core count, memory capacity, and input/output speeds, in addition to an increased power draw. The current landscape of AI hardware development sees numerous companies globally focused on creating advanced solutions for AI training and inference. Nvidia is positioned at the forefront of this sector, offering hardware designed for efficiency and performance in AI processing. The GB200 has been the company's top-performing product. The GB300 is expected to provide a performance increase for early adopters. The Blackwell Ultra GPU, which is integrated into the GB300, incorporates 160 streaming multiprocessors. This is an increase from the 144 streaming multiprocessors found in the GB200. The GB300's design results in a total of 20,480 CUDA cores. The architecture of the GB300 is based on the 4NP TSMC node, representing an advancement over the 4N node used in the GB200 platform. The cores within the GB300 are equipped with 5th-generation Tensor Cores, offering support for FP8, FP6, and NVFP4 formats, according to VideoCardz. These cores also feature increased Tensor memory. This configuration is expected to increase performance in relevant calculations. The GB300's capabilities are designed to address complex computational workloads. Memory specifications for the GB300 include eight 12-Hi HBM3E stacks. This configuration provides a total of 288 gigabytes of high-bandwidth memory. This doubles the memory capacity of the GB200 and allows the platform to manage larger AI models and accelerate the use of their functionalities. The increased memory capacity aims to improve processing capabilities for demanding AI applications. The GB300 uses the PCIe 6 interface, which facilitates data transmission. This interface offers double the performance of the PCIe 5 design, with speeds of up to 256 GB/s. Power requirements for the GB300 have increased with the new platform demanding up to 1,400W of power during operation. This increase in power consumption is associated with the enhanced performance capabilities. The GB300 is currently in mass production and is slated to begin shipping to customers in the near future. However, due to existing export restrictions, the GB300 will not be shipped to China or related territories. A modified, less powerful version, the GB30, may be shipped to China. The decision regarding the GB30 remains unclear given China's existing concerns regarding Nvidia hardware.

[4]

CoreWeave Demonstrates 6X GPU Througput With NVIDIA GB300 NVL72 Vs H100 In DeepSeek R1

The latest NVIDIA Blackwell AI superchip can easily outperform the previous-gen H100 GPU by reducing the tensor parallelism, offering significantly higher throughput. NVIDIA's Blackwell-powered AI superchips can introduce some drastic advantages over the previous-gen GPUs like the H100. The GB300 is already NVIDIA's best-ever offering, delivering great generational uplifts in compute and much higher memory capacity and bandwidth, which are crucial in heavy AI workloads. This is evident from the latest benchmark, conducted by CoreWeave, which found that NVIDIA's latest platform can offer significantly higher throughput by reducing the tensor parallelism. CoreWeave tested both platforms in the DeepSeek R1 reasoning model, which is a pretty complex model, but here the major difference was the starkly different configurations. On one hand, it needed a 16x NVIDIA H100 cluster to run the DeepSeek R1 model, but on the other hand, it only needed 4x GB300 GPUs on the NVIDIA GB300 NVL72 infrastructure to get the job done. Despite using one-quarter of the GPUs, the GB300-based system delivered 6X higher raw throughput per GPU, showcasing the GPU's huge advantage in complex AI workloads compared to the H100. As demonstrated, it is clear that the GB300 has a great advantage over the H100 system as the former allows running the same model in just 4-way tensor parallelism. Due to fewer splits, the inter-GPU communication is improved, and the higher memory capacity and bandwidth also played a crucial role in delivering drastic performance uplifts. With such an architectural leap, the GB300 NVL72 platform looks solid, thanks to the high-bandwidth NVLink and NVSwitch interconnects, which enable the GPUs to exchange data at incredible speeds. For customers, this enables faster token generation and lower latency while offering more efficient scaling of enterprise AI workloads. CoreWeave highlights the extraordinary specifications and features of the NVIDIA GB300 NVL72 rack-scale system, which offers a huge 37 TB memory capacity (GB300 NVL72 supports up to 40 TB) for running large and complex AI models, and blazing-fast interconnects that deliver 130 TB/s of memory bandwidth. All in all, the NVIDIA GB300 isn't just about the raw TFLOPs but also efficiency. The reduction in tensor parallelism enables the GB300 to minimize the GPU communication overhead, which usually bottleneck large-scale AI training and inference. With the GB300, enterprises can now achieve much higher throughput even with fewer GPUs, which won't just reduce the overall costs but will also help them scale efficiently.

Share

Share

Copy Link

NVIDIA introduces the GB300 Blackwell Ultra, a dual-chip GPU with 20,480 CUDA cores, offering significant improvements in AI performance and efficiency over its predecessor.

NVIDIA Introduces Groundbreaking GB300 Blackwell Ultra GPU

NVIDIA has unveiled its latest AI accelerator, the GB300 Blackwell Ultra, marking a significant advancement in GPU technology for artificial intelligence and scientific computing. This new chip builds upon the capabilities of its predecessor, the GB200, offering substantial improvements in compute resources, memory capacity, and communication speed

1

.Architectural Innovations

Source: TweakTown

The GB300 employs a dual-chip approach, combining two silicon chips that together pack an impressive 208 billion transistors. Manufactured using TSMC's 4NP process, these chips are linked by NVIDIA's NV-HBI technology, providing 10 TB/s of bandwidth between them

1

. This design allows the two chips to function as a unified GPU, simplifying programming and maximizing throughput.Enhanced Compute Capabilities

At the heart of the GB300 are 160 streaming multiprocessors, each containing 128 CUDA cores, totaling 20,480 cores. This represents an increase from the 144 streaming multiprocessors and 18,432 CUDA cores found in the GB200

2

. The GPU also features 5th generation Tensor Cores, which accelerate matrix math for AI training and inference, supporting multiple precision modes including FP8, FP6, and the new NVFP4 format1

.Memory and Bandwidth Advancements

Source: Guru3D

NVIDIA has equipped the GB300 with eight HBM3E stacks, providing a total of 288 GB of memory directly on the GPU package. This represents a significant increase from the 192 GB found in the GB200

3

. The memory bandwidth reaches an impressive 8 TB/s over an 8192-bit bus, spread across 16 memory channels1

.Connectivity and Scalability

The GB300 features advanced connectivity options, including NVLink 5 for GPU-to-GPU links, delivering 1.8 TB/s of bidirectional bandwidth per accelerator. For CPU connections, it uses NVLink-C2C, linking directly with Grace CPUs at 900 GB/s. Additionally, the accelerator supports PCIe 6.0 x16, doubling throughput over PCIe 5.0 to 256 GB/s

1

.Related Stories

Performance and Power Considerations

Source: Wccftech

The GB300's enhanced performance comes at the cost of increased power consumption, with a thermal graphics power (TGP) reaching 1400 W, up from 1200 W in the GB200

1

. This presents significant engineering challenges for cooling and power delivery in data center and supercomputing applications.Real-World Performance

CoreWeave, a cloud services provider, has demonstrated the GB300's capabilities in a benchmark using the DeepSeek R1 reasoning model. The test showed that a system with just 4 GB300 GPUs could deliver 6 times higher raw throughput per GPU compared to a 16-GPU cluster of H100s

4

. This significant performance gain is attributed to reduced tensor parallelism and improved inter-GPU communication.Implications for AI and Scientific Computing

The GB300 Blackwell Ultra represents a major step forward in GPU technology for AI and scientific computing. Its increased memory capacity and bandwidth, coupled with advanced Tensor Cores and the new NVFP4 format, enable the handling of larger AI models and more complex scientific simulations

2

. This advancement is particularly crucial as AI models continue to grow in size and complexity, with some reaching trillions of parameters.As NVIDIA continues to push the boundaries of GPU technology, the GB300 Blackwell Ultra sets a new standard for AI accelerators, promising to drive innovation in fields ranging from natural language processing to scientific research and beyond.

References

Summarized by

Navi

[2]

Related Stories

Nvidia Unveils Blackwell Ultra B300: A Leap Forward in AI Computing

19 Mar 2025•Technology

NVIDIA's GB300 'Blackwell Ultra' AI Servers: A Leap Towards Fully Liquid-Cooled AI Clusters

11 Mar 2025•Technology

NVIDIA Unveils GB200 NVL4: A Powerhouse AI Accelerator with Quad Blackwell GPUs and Dual Grace CPUs

19 Nov 2024•Technology

Recent Highlights

1

ByteDance's Seedance 2.0 AI video generator triggers copyright infringement battle with Hollywood

Policy and Regulation

2

Demis Hassabis predicts AGI in 5-8 years, sees new golden era transforming medicine and science

Technology

3

Nvidia and Meta forge massive chip deal as computing power demands reshape AI infrastructure

Technology