SonicSense: Robots Gain Human-Like Perception Through Acoustic Vibrations

4 Sources

[1]

Listening skills bring human-like touch to robots

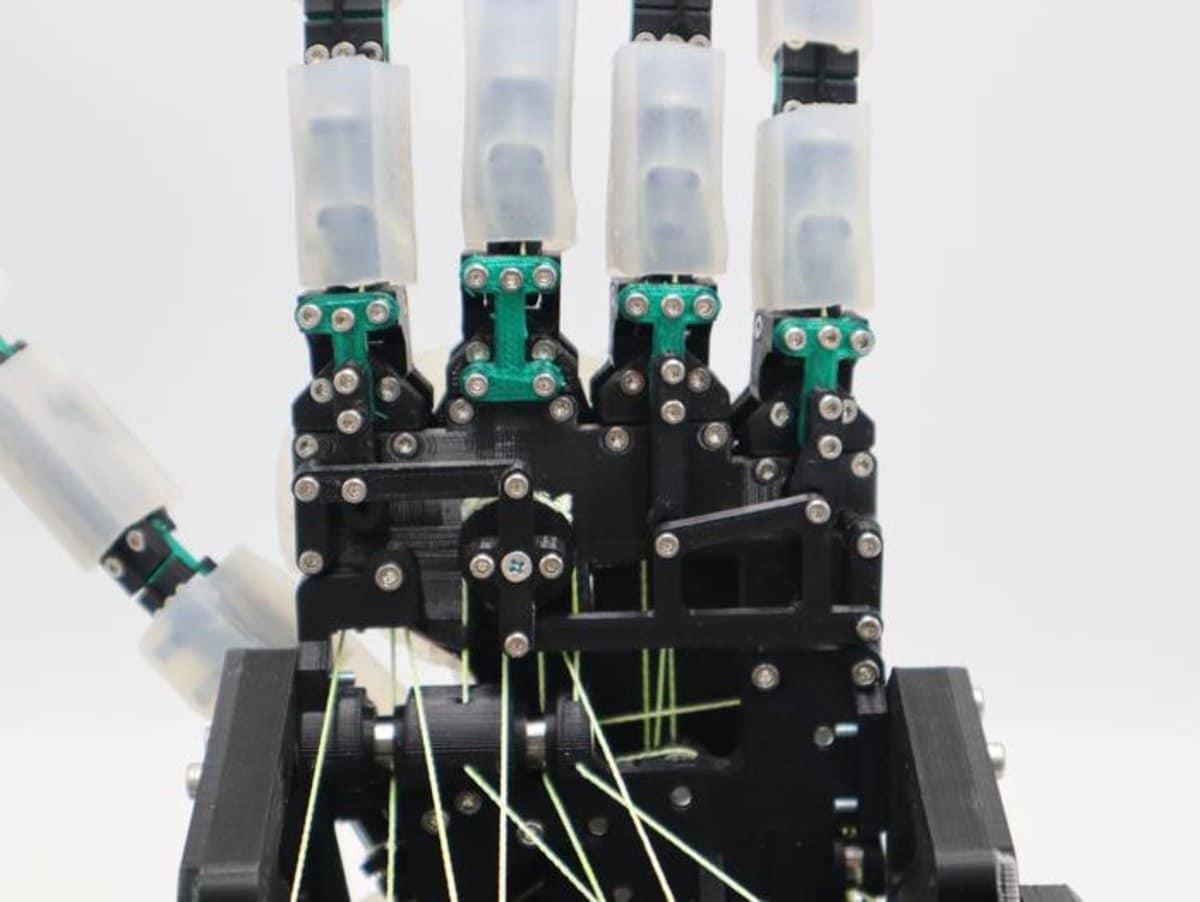

Imagine sitting in a dark movie theater wondering just how much soda is left in your oversized cup. Rather than prying off the cap and looking, you pick up and shake the cup a bit to hear how much ice is inside rattling around, giving you a decent indication of if you'll need to get a free refill. Setting the drink back down, you wonder absent-mindedly if the armrest is made of real wood. After giving it a few taps and hearing a hollow echo however, you decide it must be made from plastic. This ability to interpret the world through acoustic vibrations emanating from an object is something we do without thinking. And it's an ability that researchers are on the cusp of bringing to robots to augment their rapidly growing set of sensing abilities. Set to be published at the Conference on Robot Learning (CoRL 2024) being held Nov. 6-9 in Munich, Germany, new research from Duke University details a system dubbed SonicSense that allows robots to interact with their surroundings in ways previously limited to humans. "Robots today mostly rely on vision to interpret the world," explained Jiaxun Liu, lead author of the paper and a first-year Ph.D. student in the laboratory of Boyuan Chen, professor of mechanical engineering and materials science at Duke. "We wanted to create a solution that could work with complex and diverse objects found on a daily basis, giving robots a much richer ability to 'feel' and understand the world." SonicSense features a robotic hand with four fingers, each equipped with a contact microphone embedded in the fingertip. These sensors detect and record vibrations generated when the robot taps, grasps or shakes an object. And because the microphones are in contact with the object, it allows the robot to tune out ambient noises. 
Based on the interactions and detected signals, SonicSense extracts frequency features and uses its previous knowledge, paired with recent advancements in AI, to figure out what material the object is made out of and its 3D shape. If it's an object the system has never seen before, it might take 20 different interactions for the system to come to a conclusion. But if it's an object already in its database, it can correctly identify it in as little as four. "SonicSense gives robots a new way to hear and feel, much like humans, which can transform how current robots perceive and interact with objects," said Chen, who also has appointments and students from electrical and computer engineering and computer science. "While vision is essential, sound adds layers of information that can reveal things the eye might miss." In the paper and demonstrations, Chen and his laboratory showcase a number of capabilities enabled by SonicSense. By turning or shaking a box filled with dice, it can count the number held within as well as their shape. By doing the same with a bottle of water, it can tell how much liquid is contained inside. And by tapping around the outside of an object, much like how humans explore objects in the dark, it can build a 3D reconstruction of the object's shape and determine what material it's made from. While SonicSense is not the first attempt to use this approach, it goes further and performs better than previous work by using four fingers instead of one, touch-based microphones that tune out ambient noise and advanced AI techniques. This setup allows the system to identify objects composed of more than one material with complex geometries, transparent or reflective surfaces, and materials that are challenging for vision-based systems. "While most datasets are collected in controlled lab settings or with human intervention, we needed our robot to interact with objects independently in an open lab environment," said Liu. 
"It's difficult to replicate that level of complexity in simulations. This gap between controlled and real-world data is critical, and SonicSense bridges that by enabling robots to interact directly with the diverse, messy realities of the physical world." These abilities make SonicSense a robust foundation for training robots to perceive objects in dynamic, unstructured environments. So does its cost; using the same contact microphones that musicians use to record sound from guitars, 3D printing and other commercially available components keeps the construction costs to just over $200. Moving forward, the group is working to enhance the system's ability to interact with multiple objects. By integrating object-tracking algorithms, robots will be able to handle dynamic, cluttered environments -- bringing them closer to human-like adaptability in real-world tasks. Another key development lies in the design of the robot hand itself. "This is only the beginning. In the future, we envision SonicSense being used in more advanced robotic hands with dexterous manipulation skills, allowing robots to perform tasks that require a nuanced sense of touch," Chen said. "We're excited to explore how this technology can be further developed to integrate multiple sensory modalities, such as pressure and temperature, for even more complex interactions." This work was supported by the Army Research laboratory STRONG program (W911NF2320182, W911NF2220113) and DARPA's FoundSci program (HR00112490372) and TIAMAT (HR00112490419). CITATION: "SonicSense: Object Perception from In-Hand Acoustic Vibration," Jiaxun Liu, Boyuan Chen. Conference on Robot Learning, 2024. ArXiv version available at: 2406.17932v2 and on the General Robotics Laboratory website.

[2]

Robots learn to perceive objects using acoustic vibrations

Imagine sitting in a dark movie theater wondering just how much soda is left in your oversized cup. Rather than prying off the cap and looking, you pick up and shake the cup a bit to hear how much ice is inside rattling around, giving you a decent indication of if you'll need to get a free refill. Setting the drink back down, you wonder absent-mindedly if the armrest is made of real wood. After giving it a few taps and hearing a hollow echo, however, you decide it must be made from plastic. This ability to interpret the world through acoustic vibrations emanating from an object is something we do without thinking. And it's an ability that researchers are on the cusp of bringing to robots to augment their rapidly growing set of sensing abilities. Set to be presented at the Conference on Robot Learning (CoRL 2024) being held Nov. 6-9 in Munich, Germany, new research from Duke University details a system dubbed SonicSense that allows robots to interact with their surroundings in ways previously limited to humans. The findings are published on the arXiv preprint server. "Robots today mostly rely on vision to interpret the world," explained Jiaxun Liu, lead author of the paper and a first-year Ph.D. student in the laboratory of Boyuan Chen, professor of mechanical engineering and materials science at Duke. "We wanted to create a solution that could work with complex and diverse objects found on a daily basis, giving robots a much richer ability to 'feel' and understand the world." SonicSense features a robotic hand with four fingers, each equipped with a contact microphone embedded in the fingertip. These sensors detect and record vibrations generated when the robot taps, grasps or shakes an object. And because the microphones are in contact with the object, it allows the robot to tune out ambient noises. 
Based on the interactions and detected signals, SonicSense extracts frequency features and uses its previous knowledge, paired with recent advancements in AI, to figure out what material the object is made out of and its 3D shape. If it's an object the system has never seen before, it might take 20 different interactions for the system to come to a conclusion. But if it's an object already in its database, it can correctly identify it in as little as four. "SonicSense gives robots a new way to hear and feel, much like humans, which can transform how current robots perceive and interact with objects," said Chen, who also has appointments and students from electrical and computer engineering and computer science. "While vision is essential, sound adds layers of information that can reveal things the eye might miss." In the paper and demonstrations, Chen and his laboratory showcase a number of capabilities enabled by SonicSense. By turning or shaking a box filled with dice, it can count the number held within as well as their shape. By doing the same with a bottle of water, it can tell how much liquid is contained inside. And by tapping around the outside of an object, much like how humans explore objects in the dark, it can build a 3D reconstruction of the object's shape and determine what material it's made from. While SonicSense is not the first attempt to use this approach, it goes further and performs better than previous work by using four fingers instead of one, touch-based microphones that tune out ambient noise and advanced AI techniques. This setup allows the system to identify objects composed of more than one material with complex geometries, transparent or reflective surfaces, and materials that are challenging for vision-based systems. "While most datasets are collected in controlled lab settings or with human intervention, we needed our robot to interact with objects independently in an open lab environment," said Liu. 
"It's difficult to replicate that level of complexity in simulations. This gap between controlled and real-world data is critical, and SonicSense bridges that by enabling robots to interact directly with the diverse, messy realities of the physical world." These abilities make SonicSense a robust foundation for training robots to perceive objects in dynamic, unstructured environments. So does its cost; using the same contact microphones that musicians use to record sound from guitars, 3D printing and other commercially available components keeps the construction costs at just over $200. Moving forward, the group is working to enhance the system's ability to interact with multiple objects. By integrating object-tracking algorithms, robots will be able to handle dynamic, cluttered environments -- bringing them closer to human-like adaptability in real-world tasks. Another key development lies in the design of the robot hand itself. "This is only the beginning. In the future, we envision SonicSense being used in more advanced robotic hands with dexterous manipulation skills, allowing robots to perform tasks that require a nuanced sense of touch," Chen said. "We're excited to explore how this technology can be further developed to integrate multiple sensory modalities, such as pressure and temperature, for even more complex interactions."

[3]

Robots Gain Ability to "Hear" Objects Through Vibration - Neuroscience News

Summary: New research introduces SonicSense, a system that gives robots the ability to interpret objects through vibrations. Equipped with microphones in their fingertips, robots can tap, grasp, or shake objects to detect sound and determine material type, shape, and contents. Using AI, SonicSense allows robots to identify new objects and perceive environments with greater depth and accuracy, beyond what vision alone can offer. This system marks a significant leap in robotic sensing, allowing robots to interact with the physical world in dynamic, unstructured settings. Imagine sitting in a dark movie theater wondering just how much soda is left in your oversized cup. Rather than prying off the cap and looking, you pick up and shake the cup a bit to hear how much ice is inside rattling around, giving you a decent indication of if you'll need to get a free refill. Setting the drink back down, you wonder absent-mindedly if the armrest is made of real wood. After giving it a few taps and hearing a hollow echo however, you decide it must be made from plastic. This ability to interpret the world through acoustic vibrations emanating from an object is something we do without thinking. And it's an ability that researchers are on the cusp of bringing to robots to augment their rapidly growing set of sensing abilities. Set to be published at the Conference on Robot Learning (CoRL 2024) being held Nov. 6-9 in Munich, Germany, new research from Duke University details a system dubbed SonicSense that allows robots to interact with their surroundings in ways previously limited to humans. "Robots today mostly rely on vision to interpret the world," explained Jiaxun Liu, lead author of the paper and a first-year Ph.D. student in the laboratory of Boyuan Chen, professor of mechanical engineering and materials science at Duke. 
"We wanted to create a solution that could work with complex and diverse objects found on a daily basis, giving robots a much richer ability to 'feel' and understand the world." SonicSense features a robotic hand with four fingers, each equipped with a contact microphone embedded in the fingertip. These sensors detect and record vibrations generated when the robot taps, grasps or shakes an object. And because the microphones are in contact with the object, it allows the robot to tune out ambient noises. Based on the interactions and detected signals, SonicSense extracts frequency features and uses its previous knowledge, paired with recent advancements in AI, to figure out what material the object is made out of and its 3D shape. If it's an object the system has never seen before, it might take 20 different interactions for the system to come to a conclusion. But if it's an object already in its database, it can correctly identify it in as little as four. "SonicSense gives robots a new way to hear and feel, much like humans, which can transform how current robots perceive and interact with objects," said Chen, who also has appointments and students from electrical and computer engineering and computer science. "While vision is essential, sound adds layers of information that can reveal things the eye might miss." In the paper and demonstrations, Chen and his laboratory showcase a number of capabilities enabled by SonicSense. By turning or shaking a box filled with dice, it can count the number held within as well as their shape. By doing the same with a bottle of water, it can tell how much liquid is contained inside. And by tapping around the outside of an object, much like how humans explore objects in the dark, it can build a 3D reconstruction of the object's shape and determine what material it's made from. 
While SonicSense is not the first attempt to use this approach, it goes further and performs better than previous work by using four fingers instead of one, touch-based microphones that tune out ambient noise and advanced AI techniques. This setup allows the system to identify objects composed of more than one material with complex geometries, transparent or reflective surfaces, and materials that are challenging for vision-based systems. "While most datasets are collected in controlled lab settings or with human intervention, we needed our robot to interact with objects independently in an open lab environment," said Liu. "It's difficult to replicate that level of complexity in simulations. This gap between controlled and real-world data is critical, and SonicSense bridges that by enabling robots to interact directly with the diverse, messy realities of the physical world." These abilities make SonicSense a robust foundation for training robots to perceive objects in dynamic, unstructured environments. So does its cost; using the same contact microphones that musicians use to record sound from guitars, 3D printing and other commercially available components keeps the construction costs to just over $200. Moving forward, the group is working to enhance the system's ability to interact with multiple objects. By integrating object-tracking algorithms, robots will be able to handle dynamic, cluttered environments -- bringing them closer to human-like adaptability in real-world tasks. Another key development lies in the design of the robot hand itself. "This is only the beginning. In the future, we envision SonicSense being used in more advanced robotic hands with dexterous manipulation skills, allowing robots to perform tasks that require a nuanced sense of touch," Chen said. "We're excited to explore how this technology can be further developed to integrate multiple sensory modalities, such as pressure and temperature, for even more complex interactions." 
Funding: This work was supported by the Army Research Laboratory STRONG program (W911NF2320182, W911NF2220113) and DARPA's FoundSci program (HR00112490372) and TIAMAT (HR00112490419).

Note: The image is a representation of a robotic hand, not the SonicSense system.

Author: Ken Kingery
Source: Duke University
Contact: Ken Kingery - Duke University
Image: The image is credited to Neuroscience News
Original Research: The findings will be presented at the Conference on Robot Learning

[4]

Robots that sense the world through sound are more human-like - Earth.com

The audience gasps as sound effects vibrate the seats of a darkened theater. They shake the cup to hear the clinking ice to know how much is left. Lost in thought, the moviegoers tap the armrest, contemplating if it's real wood or plastic imitating the real thing. This knack for identifying objects and their makeup through sound is common practice. Striving to augment the sensory abilities of robots, researchers are now replicating this human ability. Next month, at the Conference on Robot Learning (CoRL 2024) in Munich, Germany, experts from Duke University will introduce the world to SonicSense. This system offers robots a perception capability similar to humans. "We introduce SonicSense, a holistic design of hardware and software to enable rich robot object perception through in-hand acoustic vibration sensing," wrote the researchers. "While previous studies have shown promising results with acoustic sensing for object perception, current solutions are constrained to a handful of objects with simple geometries and homogeneous materials, single-finger sensing, and mixing training and testing on the same objects." Study lead author Jiaxun Liu is a first-year PhD student in the laboratory of Boyuan Chen, professor of mechanical engineering and materials science at Duke. "Robots today mostly rely on vision to interpret the world," explained Liu. "We wanted to create a solution that could work with complex and diverse objects found on a daily basis, giving robots a much richer ability to 'feel' and understand the world." SonicSense is a robotic hand fitted with four fingers and each finger has a contact microphone at the tip. When the robot interacts with an object - tapping, holding, or shaking - the sensors capture the vibrations and tune out ambient noise. The SonicSense system identifies objects and determines their shape based on the detected signals and frequency features. 
The system relies on prior knowledge and leverages advancements in artificial intelligence to discern the object's material and three-dimensional shape. Liu and Chen's laboratory demonstrations of SonicSense reveal its impressive capabilities. For instance, it estimates how many dice are inside a box or how much water is contained in a bottle by shaking the objects or tapping their surfaces. While SonicSense isn't the first of its kind, it's a unique setup. Its four fingers, touch-based microphones and advanced AI techniques set it apart from previous attempts on robots. The integration allows SonicSense to identify objects of varying complexity. It is useful with materials that are challenging for vision-based systems and with objects that are composed of multiple materials or have reflective or transparent surfaces. As SonicSense continues to evolve, researchers are focusing on enhancing its interaction with multiple objects. The future includes an upgraded robotic hand with advanced manipulation skills, paving the way for robots to perform tasks that need a subtle sense of touch. An exciting avenue for SonicSense's evolution is its use in varied environments outside the laboratory. Researchers are exploring the potential for SonicSense to function in dynamic and unpredictable environments such as urban landscapes or disaster zones. By fine-tuning its sensory capabilities, SonicSense could enable robots to navigate through debris, assess stability, or identify materials essential for rescue operations. Incorporating external data sources and environmental adaptability algorithms is essential for advancement, allowing SonicSense to broaden the range of scenarios for beneficial robotic intervention. Ethical considerations and societal impacts become paramount as SonicSense moves towards greater integration in autonomous robotic systems. 
Robots discerning and interacting with the world using sound raises questions regarding privacy, ownership of generated data, and potential biases intrinsic to AI systems. As robots play larger roles in healthcare, retail, and domestic spaces, it's critical to ensure transparency in how SonicSense interprets sensory data. The development process must incorporate diverse perspectives, aiming for inclusive technology that respects individual rights and maximizes the societal benefits of SonicSense's capabilities. The ability to bring robots closer to human-like adaptability is a testament to advances in robotics and artificial intelligence. The technology's potential to bridge the gap between contrived lab settings and real-world complexity could revolutionize how people think about robots and their role in the world.

Duke University researchers develop SonicSense, a system that enables robots to perceive objects through acoustic vibrations, mimicking human-like sensory abilities and potentially revolutionizing robotic interaction with the physical world.

Introducing SonicSense: A Breakthrough in Robotic Perception

Researchers at Duke University have developed a groundbreaking system called SonicSense, which enables robots to perceive and interact with objects using acoustic vibrations, much like humans do [1][2]. This innovative technology, set to be presented at the Conference on Robot Learning (CoRL 2024) in Munich, Germany, marks a significant advancement in robotic sensing capabilities.

How SonicSense Works

SonicSense features a robotic hand equipped with four fingers, each containing a contact microphone embedded in the fingertip [1]. These sensors detect and record vibrations generated when the robot interacts with objects through tapping, grasping, or shaking [2]. The system then uses AI algorithms to analyze the frequency features and determine the object's material composition and 3D shape [3].

Key Capabilities and Advantages

SonicSense demonstrates remarkable abilities in object perception:

- Material identification: The system can distinguish between different materials, even in complex, multi-material objects [1].
- Shape reconstruction: By tapping around an object, SonicSense can build a 3D model of its shape [2].
- Content analysis: It can determine the contents of containers, such as counting dice in a box or measuring liquid in a bottle [3].
- Ambient noise filtering: The contact-based microphones allow the system to focus on object-generated vibrations while tuning out background noise [1].
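To make the material-identification idea concrete, here is a minimal sketch of how frequency features from a tap recording might be matched against known materials. It is not the SonicSense pipeline, which the sources describe only as frequency-feature extraction paired with AI techniques. Everything here is an assumption for illustration: the recordings are synthetic decaying sinusoids, the features are just the dominant frequency and spectral centroid from a naive discrete Fourier transform, the matcher is a simple nearest-neighbour lookup, and the names `synthetic_tap`, `frequency_features` and `classify` are hypothetical.

```python
import cmath
import math


def magnitude_spectrum(signal):
    """Naive DFT magnitudes for bins 0..N/2 (adequate for short demo signals)."""
    n = len(signal)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal)))
        for k in range(n // 2 + 1)
    ]


def frequency_features(signal, sample_rate):
    """Return (dominant frequency, spectral centroid), both in Hz."""
    mags = magnitude_spectrum(signal)
    bin_hz = sample_rate / len(signal)
    dominant = max(range(len(mags)), key=mags.__getitem__) * bin_hz
    total = sum(mags)
    centroid = sum(k * bin_hz * m for k, m in enumerate(mags)) / total if total else 0.0
    return dominant, centroid


def synthetic_tap(freq_hz, decay, sample_rate=2000, n=200):
    """Hypothetical stand-in for a contact-microphone recording: a decaying sinusoid."""
    return [
        math.exp(-decay * t / sample_rate) * math.sin(2 * math.pi * freq_hz * t / sample_rate)
        for t in range(n)
    ]


def classify(signal, sample_rate, references):
    """Nearest-neighbour match; references maps a material label to its feature pair."""
    feats = frequency_features(signal, sample_rate)
    return min(references, key=lambda label: math.dist(feats, references[label]))


SAMPLE_RATE = 2000
# Assumed reference taps: a dull, quickly damped one and a bright, ringing one.
references = {
    "plastic": frequency_features(synthetic_tap(150, 40), SAMPLE_RATE),
    "metal": frequency_features(synthetic_tap(600, 5), SAMPLE_RATE),
}
unknown = synthetic_tap(580, 6)  # a new tap that rings much like the metal reference
label = classify(unknown, SAMPLE_RATE, references)
print(label)
```

A real system would work with recorded audio from all four fingertips and a learned model rather than two hand-picked features, but the sketch shows why contact-microphone spectra carry material information: objects that ring at different frequencies and decay at different rates end up far apart in feature space.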

Advancements Over Previous Systems

While not the first attempt at acoustic-based robotic perception, SonicSense offers several improvements:

- Multi-finger sensing: The use of four fingers instead of one provides more comprehensive data [2].
- Advanced AI techniques: Improved algorithms enable better interpretation of complex signals [1].
- Versatility: SonicSense can identify objects with complex geometries, transparent or reflective surfaces, and materials challenging for vision-based systems [3].

Real-World Applications and Future Developments

The SonicSense system has potential applications across various fields:

- Unstructured environments: Its ability to interact with diverse objects makes it suitable for dynamic, real-world settings [4].
- Cost-effective solution: With construction costs just over $200, SonicSense offers an affordable option for advanced robotic perception [1].
- Future enhancements: Researchers aim to improve the system's ability to interact with multiple objects and integrate additional sensory modalities like pressure and temperature [2].

Implications for Robotics and AI

SonicSense represents a significant step towards more human-like robotic perception:

- Enhanced sensory capabilities: By adding sound-based perception to visual systems, robots can gather more comprehensive environmental data [3].
- Improved adaptability: The system's ability to learn and identify new objects brings robots closer to human-like adaptability in real-world tasks [4].
- Potential for complex interactions: Future developments could enable more nuanced object manipulation and environmental understanding [2].

As SonicSense continues to evolve, it promises to bridge the gap between controlled laboratory settings and the complex, messy realities of the physical world, potentially revolutionizing how robots interact with their surroundings [1][4].

Summarized by Navi
