Tech Giants Race to Build AI Data Centers in Space as Earth-Based Infrastructure Hits Limits

5 Sources

[1]

SpaceX CEO Elon Musk says AI compute in space will be the lowest-cost option in 5 years -- but Nvidia's Jensen Huang says it's a 'dream'

In addition to hardware costs, power generation and delivery, along with cooling requirements, will be among the main constraints for massive AI data centers in the coming years. X, xAI, SpaceX, and Tesla CEO Elon Musk argues that over the next four to five years, running large-scale AI systems in orbit could become far more economical than doing the same work on Earth, primarily due to 'free' solar power and relatively easy cooling. Jensen Huang agrees that gigawatt- and terawatt-class AI data centers face these challenges, but says that space data centers are a dream for now.

"My estimate is that the cost of electricity, the cost effectiveness of AI [in] space will be overwhelmingly better than AI on the ground so far, long before you exhaust potential energy sources on Earth," said Musk at the U.S.-Saudi investment forum. "I think even perhaps in the four- or five-year timeframe, the lowest cost way to do AI compute will be with solar-powered AI satellites. I would say not more than five years from now."

Jensen Huang, chief executive of Nvidia, notes that the compute and communication equipment inside today's Nvidia GB300 racks accounts for only a small fraction of their total mass, because nearly the entire structure (roughly 1.95 tons out of 2 tons) is essentially a cooling system.

Musk emphasized that as compute clusters grow, the combined requirements for electrical supply and cooling escalate to the point where terrestrial infrastructure struggles to keep up. He claims that targeting 200 GW to 300 GW of continuous output (Musk says 'per year,' but what he means is continuous power output at any given time) would require massive and costly power plants, as a typical nuclear power plant produces around 1 GW of continuous power. Meanwhile, the U.S. currently generates around 490 GW of continuous power, so devoting the lion's share of it to AI is impossible.
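The arithmetic behind Musk's argument can be checked in a few lines. This is a rough illustration, not Tom's Hardware's or Musk's own calculation. It assumes, as the article does, about 1 GW of continuous output per nuclear plant and about 490 GW of average U.S. generation. It also treats AI demand as a constant load. The helper names are hypothetical.

```python
import math

# Assumptions taken from the article: ~1 GW of continuous output per typical
# nuclear plant, and ~490 GW of average U.S. generation.
NUCLEAR_PLANT_GW = 1.0
US_AVERAGE_GW = 490.0


def plants_needed(load_gw: float, plant_gw: float = NUCLEAR_PLANT_GW) -> int:
    """Number of plants of size plant_gw needed to cover a constant load (hypothetical helper)."""
    return math.ceil(load_gw / plant_gw)


def share_of_us_output(load_gw: float) -> float:
    """Fraction of average U.S. generation that a constant load would consume."""
    return load_gw / US_AVERAGE_GW


if __name__ == "__main__":
    # 200-300 GW is Musk's range; 1,000 GW is the terawatt case he calls impossible.
    for load in (200.0, 300.0, 1_000.0):
        print(
            f"{load:>6.0f} GW -> ~{plants_needed(load)} nuclear plants, "
            f"{share_of_us_output(load):.0%} of average U.S. output"
        )
```

Under these assumptions, 300 GW would be roughly 61% of average U.S. output. A terawatt would be about twice the entire U.S. average, which is the gap Musk is pointing at.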
Anything approaching a terawatt of steady AI-related demand is unattainable within Earth-based grids, according to Musk. "There is no way you are building power plants at that level: if you take it up to say, a [1 TW of continuous power], impossible," said Musk. "You have to do that in space. There is just no way to do a terawatt [of continuous power on] Earth. In space, you have got continuous solar, you actually do not need batteries because it is always sunny in space and the solar panels actually become cheaper because you do not need glass or framing and the cooling is just radiative."

Musk may be right about the difficulty of generating enough power for AI on Earth, and space could be a better fit for massive AI compute deployments. Still, many challenges remain with putting AI clusters into space, which is why Jensen Huang calls it a dream for now. "That's the dream," Huang exclaimed.

On paper, space is a good place both for generating power and for cooling electronics, as temperatures can be as low as -270°C in shadow. But there are many caveats. For example, temperatures can reach +120°C in direct sunlight. In Earth orbit, temperature swings are less extreme:

- -65°C to +125°C in low Earth orbit (LEO)
- -100°C to +120°C in medium Earth orbit (MEO)
- -20°C to +80°C in geostationary orbit (GEO)
- -10°C to +70°C in high Earth orbit (HEO)

LEO and MEO are not suitable for 'flying data centers' due to unstable illumination patterns, substantial thermal cycling, crossings of the radiation belts, and regular eclipses. GEO is more feasible because it is almost always sunny there and radiation is not too severe. There are eclipses each year, but they are short.

Even in GEO, building large AI data centers faces severe obstacles. Megawatt-class GPU clusters would require enormous radiator wings to reject heat through infrared emission alone, because in a vacuum radiation is the only option, as Musk noted.
This translates into tens of thousands of square meters of deployable structures per multi-gigawatt system, far beyond anything flown to date. Launching that mass would demand thousands of Starship-class flights, which would be extremely expensive and is unrealistic within Musk's four-to-five-year window.

Also, high-performance AI accelerators such as Blackwell or Rubin, as well as the accompanying hardware, still cannot survive GEO radiation without heavy shielding or complete rad-hard redesigns. Such redesigns would slash clock speeds and/or require entirely new process technologies optimized for resilience rather than performance. This further reduces the feasibility of AI data centers in GEO.

On top of that, high-bandwidth connectivity with Earth, autonomous servicing, debris avoidance, and robotic maintenance all remain in their infancy relative to the scale of the proposed projects. That is perhaps why Huang calls it all a 'dream' for now.
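The radiators get so large because of the Stefan-Boltzmann law, P = e * sigma * A * (T^4 - T_sink^4). The sketch below is an idealized estimate under several assumptions that do not come from the article:

- a flat panel radiating from both faces at 300 K
- an emissivity of 0.9
- a deep-space sink of about 3 K (roughly the -270°C figure above)
- no absorbed sunlight or Earth infrared, which in practice would make real radiators larger

```python
# Idealized radiator sizing from the Stefan-Boltzmann law.
# Hypothetical assumptions (not from the article): two-sided flat panel,
# emissivity 0.9, radiator at 300 K, deep-space sink at ~3 K,
# and no absorbed sunlight or Earth infrared.
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)


def radiator_area_m2(
    heat_w: float,
    radiator_temp_k: float = 300.0,
    sink_temp_k: float = 3.0,
    emissivity: float = 0.9,
    sides: int = 2,
) -> float:
    """Panel area (m^2) needed to radiate heat_w watts to space."""
    # Net radiated flux per face, in W/m^2.
    flux_per_side = emissivity * STEFAN_BOLTZMANN * (radiator_temp_k**4 - sink_temp_k**4)
    return heat_w / (flux_per_side * sides)


if __name__ == "__main__":
    for label, heat_w in (("1 MW", 1e6), ("1 GW", 1e9)):
        print(f"{label}: ~{radiator_area_m2(heat_w):,.0f} m^2 of two-sided panel")
```

Under these assumptions, one megawatt of waste heat needs roughly 1,200 m² of panel, and the required area grows linearly with power. Running the radiator hotter helps sharply, because the radiated flux scales with the fourth power of temperature.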

[2]

Jeff Bezos's New AI Hardware Startup Isn't Even His Biggest Moonshot

Here on Earth, regulators and citizens alike are realizing that there may be downsides to going all in on the demands of AI data centers and the companies that are building them, and pushback is becoming more prevalent. But in space, no one can hear you object to the massive energy demands and dubious economic "benefits" of these massive infrastructure projects. That's why Jeff Bezos (fresh off of announcing his big AI hardware startup Project Prometheus) and other tech billionaires are reaching for the stars and planning to put data centers in orbit, per the Wall Street Journal.

The idea of the space-based data center has been floating around for some time now. Bezos talked it up at Italian Tech Week last month, where he told an audience, "We will be able to beat the cost of terrestrial data centers in space in the next couple of decades." Google CEO Sundar Pichai announced the company's own space-based data venture, called Project Suncatcher, earlier this month. Nvidia has also gotten in on the action, announcing a plan for an orbital data center. Blue Origin CEO Dave Limp recently said we'll have data centers in space "in our life."

And of course, Elon Musk has made the most ambitious and optimistic pitch on how AI in space might play out. In a recent appearance at the Baron Capital Conference, Musk suggested that Starlink satellites would be able to generate as much as 100 gigawatts of power every year by harnessing solar energy. "We have a plan mapped out to do it," he said. "It gets crazy." There's also never been a friendlier audience to receive that message. Baron Capital backed Musk's $1 trillion pay package at Tesla, and its founder, Ron Baron, has talked up Tesla at every opportunity, including a recent CNBC hit where he said the company could be a $10,000 stock.

The tech execs clamouring to clutter space with their AI data centers have a believer in Phil Metzger, a research professor at the University of Central Florida.
As WSJ points out, Metzger recently voiced his support for the data center space race, writing on X, "I originally expected it would be 30-50 years before it would be cheaper in space, but I ran quantitative numbers twice and both times they predicted only 10 to 11 years."

There are a couple of intuitive reasons why aiming for the stars makes sense for data centers. Orbital data centers could save us from selling off all our precious terrestrial real estate to big, mostly empty boxes of whirring fans and information-crunching chips. And they would be closer to the sun to capitalize on solar power capabilities.

But actually achieving this goal isn't as easy as just firing some servers into orbit. Data centers generate lots of heat and need to be cooled, and simply letting that heat dissipate in space is inefficient and possibly insufficient. Assembling the data centers in space is possible, but maintaining them could be challenging, and any failure is going to be harder to fix than it would be on Earth.

Then there's the fact that we're already dealing with an increasingly crowded orbital area. A recent study found that satellites in orbit are performing collision-avoidance maneuvers seven times as often as they were just five years ago. Those precautions will become increasingly necessary the more we send into orbit.

We do have another option: pump the brakes on the AI buildout before we overcommit so much that we litter the planet and space with technology that might never get utilized in any meaningful way. Unfortunately, it seems like that might be the even bigger moonshot.
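The "seven times as often as five years ago" figure from the collision-avoidance study can be turned into an implied yearly growth rate. This sketch assumes the growth was steady and compounding over the five-year window, which the study may not claim. The function name is hypothetical.

```python
# Implied annual growth in collision-avoidance maneuvers.
# Assumption (not from the study): growth was steady and compounding
# over the five-year window.


def implied_annual_growth(total_multiple: float, years: float) -> float:
    """Constant yearly growth rate that compounds to total_multiple over the given years."""
    return total_multiple ** (1.0 / years) - 1.0


if __name__ == "__main__":
    rate = implied_annual_growth(7.0, 5.0)
    print(f"~{rate:.0%} more maneuvers per year")
```

Under that assumption, a sevenfold rise over five years corresponds to roughly 48% more maneuvers each year.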

[3]

Why Elon Musk, Google and Amazon Want to Make Space AI's Next Frontier

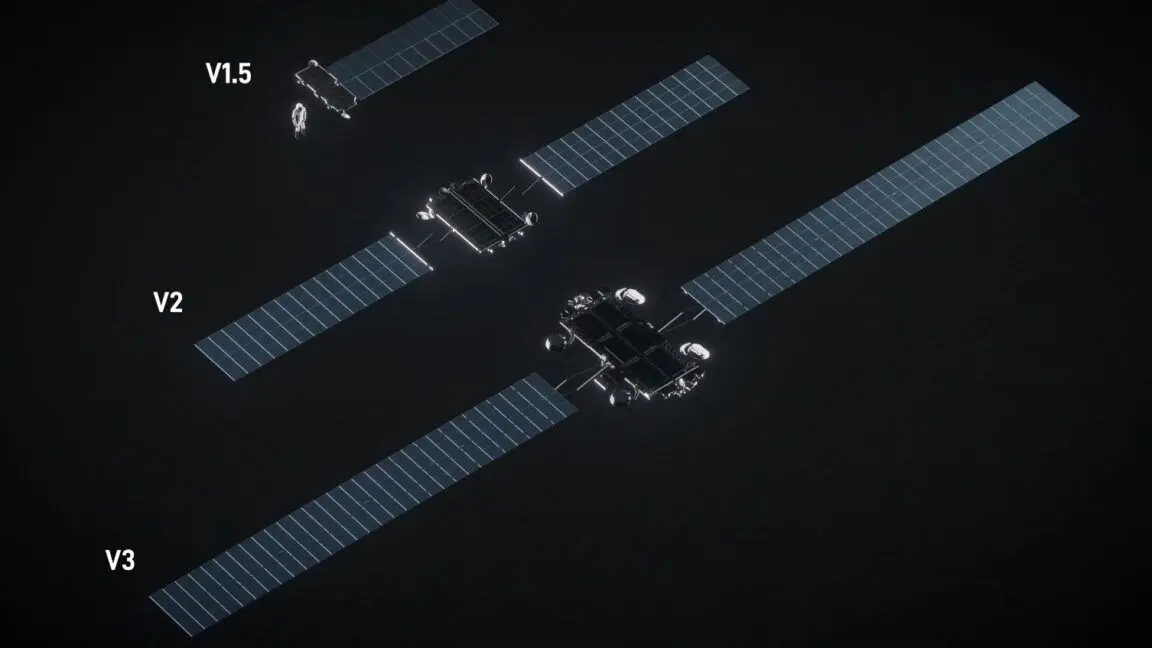

Jeff Bezos believes space data centres could arrive in 10-20 years Artificial intelligence (AI) processing could soon move to space. At least two companies have initiated projects focused on building out AI data centres in space, while other leaders in the space have discussed the need to do so. Google's Project Suncatcher is currently at the conceptual stage, while Nvidia's Starcloud is currently developing a satellite that can harness the power of the Sun. But why are AI players suddenly focused on taking their data centres to space, and what are the advantages? AI Processing in Future Could Occur in Outer Space According to The Wall Street Journal, some of the biggest companies working on AI are considering moving their data centres to outer space. The group of tech companies that are currently either involved in active research or have discussed the move include Amazon, Google, Nvidia, and Elon Musk's SpaceX. The reason? Cost of energy. Running data centres is an expensive venture. These establishments run on specialised GPUs that constantly process data from a large number of users and enterprises. This requires a massive amount of energy to run these systems 24x7. The larger the user base, the larger the energy demand, and the higher the energy costs these companies have to pay. Second, AI servers run hot. To prevent performance drops or damage, data centres use advanced cooling systems such as liquid cooling, immersion cooling or highly-engineered air-cooling setups. Providing resources for these systems and running them is another cost sink. These AI companies have figured out a cheaper way of reducing the cost of energy and maintenance to zero: harnessing the Sun's rays to power the data centre. Last week, Google CEO Sundar Pichai announced Project Suncatcher, which is researching the way to build a compact constellation of solar-powered satellites that carry Google TPUs and are connected by free-space optical links. 
Pichai said that if this plan works out, it will allow the company to scale exponentially and minimise the impact on terrestrial resources. Nvidia is a step ahead. One of the startups in the Nvidia Inception programme, Starcloud, is building a satellite that orbits the Earth while consuming solar energy to power AI-led processing. It plans to launch its first satellite, Starcloud-1, with H100 GPUs, which is expected to offer 100 times more GPU compute power than any previous space operation.

According to Reuters, Amazon founder Jeff Bezos also recently discussed the prospect of space-based data processing for AI-led compute. He predicts that such clusters will be commonplace in the next 10-20 years and will significantly outperform Earth-based data centres.

In a post on X, Musk also displayed eagerness to start a similar venture. Responding to a post about Nvidia's project, he said, "Simply scaling up Starlink V3 satellites, which have high-speed laser links, would work. SpaceX will be doing this." With so many major tech corporations eyeing outer space as the next frontier of AI expansion, questions are also arising about satellite crowding and space debris.

[4]

Elon Musk Says Starship Can Deliver 300 GW Solar AI Satellites Per Year, But There's One Major 'Piece Of The Puzzle' That Stands In Its Way - Amazon.com (NASDAQ:AMZN), Salesforce (NYSE:CRM)

SpaceX CEO Elon Musk has claimed that the Starship rocket can deliver 300 gigawatts of solar-powered AI satellites to orbit per year, amid growing talk of space-based data centers.

Tonnage To Orbit Solved By Starship, Says Elon Musk

Salesforce Inc. (NYSE:CRM) CEO Marc Benioff took to the social media platform X on Wednesday, sharing a video of Musk talking about the cost-effectiveness of data centers in orbit versus on the ground. Responding to Benioff, Musk elaborated on his claims. "Starship should be able to deliver around 300 GW per year of solar-powered AI satellites to orbit, maybe 500 GW," the billionaire said.

He also added that average electricity consumption in the U.S. was "500 GW," though he did not specify the time frame for the figure. Data from the Federal Energy Regulatory Commission show that the U.S. generated over 4,151 terawatt-hours of electricity last year. Divided by the number of hours in a year (8,760), that gives approximately 473 GW.

"At 300 GW/year, AI in space would exceed the entire US economy just in intelligence processing every 2 years," Musk said, adding that the Starship rocket would solve "tonnage to orbit." "Chip production is therefore the major piece of the puzzle to be solved," Musk said, adding that the "Tesla Terafab is needed" to meet the demand. The TeraFab is a proposed foundry by Tesla Inc. (NASDAQ:TSLA), reportedly in development with Intel Corp. (NASDAQ:INTC). The automaker also pulled the plug on its Dojo Supercomputer team earlier this year.

Jeff Bezos, Alphabet Inc. Tout Orbital Data Centers

Musk's comments come at a time when talk of space-based data centers has been gathering steam. Last month, Blue Origin founder Jeff Bezos touted the idea of gigawatt-scale data centers in space, sharing that the data centers could prove to be more cost-effective.
Bezos is also reportedly backing a new startup, dubbed Project Prometheus, which focuses on AI applications in the automotive, aerospace and scientific research sectors. That drew amusement from Musk, who called Bezos a "copycat," as another of the Amazon.com Inc. (NASDAQ:AMZN) founder's enterprises could become a rival to a Musk-backed company. Elsewhere, Alphabet Inc. (NASDAQ:GOOGL) (NASDAQ:GOOG) CEO Sundar Pichai also outlined Project Suncatcher, which aims to launch a low Earth orbit data center powered directly by solar energy in orbit.
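The FERC conversion and Musk's "every 2 years" claim above are both simple arithmetic. This sketch assumes an 8,760-hour year, as the article does. It reads Musk's claim as cumulative orbital capacity overtaking roughly 500 GW of average U.S. consumption. That reading is an interpretation, not his stated method. It also assumes capacity is added at a constant 300 GW per year with no satellite retirements. The function names are hypothetical.

```python
import math

HOURS_PER_YEAR = 8_760  # non-leap year, as used in the article


def average_power_gw(annual_energy_twh: float) -> float:
    """Convert annual energy (TWh) into average continuous power (GW)."""
    return annual_energy_twh * 1_000 / HOURS_PER_YEAR


def years_to_exceed(target_gw: float, added_gw_per_year: float) -> int:
    """Whole years of constant deployment until cumulative capacity strictly exceeds target_gw.

    Hypothetical interpretation: no retirements, constant yearly additions.
    """
    return math.floor(target_gw / added_gw_per_year) + 1


if __name__ == "__main__":
    us_gw = average_power_gw(4_151)  # FERC figure quoted by Benzinga
    print(f"U.S. average power: ~{us_gw:.1f} GW")
    print(f"Years at 300 GW/yr to pass 500 GW: {years_to_exceed(500, 300)}")
```

Running it reproduces the article's figure of about 473.9 GW. It also shows that, on this reading, two years of 300 GW launches (600 GW cumulative) would pass Musk's 500 GW U.S. figure.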

[5]

Elon Musk Says 'You Must Have Solar-Powered AI Satellites In Deep Space' -- Adds It's 'Handy' To Have SpaceX - Alphabet (NASDAQ:GOOG), Alphabet (NASDAQ:GOOGL)

SpaceX CEO Elon Musk said it's good to have his space exploration company, SpaceX, while talking about AI data centers in space.

"Space Is Overwhelmingly What Matters"

Speaking at the U.S.-Saudi Investment Forum on Wednesday, Musk was asked to share his thoughts on AI in space. "You must have solar-powered AI satellites in deep space" to use even a fraction of the sun's energy and make meaningful progress as a civilization in space, Musk said. "Once you think in terms of the Kardashev scale 2 civilization...Space is overwhelmingly what matters," Musk said, outlining the importance of harnessing the sun's energy. "If you want to have something that is, say, a million times more energy than Earth could possibly produce, you must go into space," the billionaire said, adding that it was "handy" to have a space company like SpaceX.

AI Satellites In Space

"Cost effectiveness of AI in space will be overwhelmingly better than AI on the ground," Musk said. He added that the lowest cost way to do AI compute in the next four to five years would be via "solar-powered AI satellites" in space.

Nvidia Corp. (NASDAQ:NVDA) CEO Jensen Huang, who was present with Musk at the event, shared that each rack of the supercomputers currently being built weighs over 2 tons, of which "1.95 of it is probably for cooling." Musk agreed with Huang, adding that space would become very "compelling" for cooling purposes. He also added that AI's power requirements would be "two-thirds" of the U.S.'s total energy consumption. "You actually don't need batteries cause it's always sunny in space," he said.

Jeff Bezos, Sundar Pichai Share Musk's Ambitions

Blue Origin founder Jeff Bezos had earlier touted the idea of gigawatt-scale data centers in space, sharing that proposed data centers in space could prove to be more cost-effective than their on-ground counterparts.
Bezos is also reportedly backing a new startup, Project Prometheus, which focuses on AI applications in the automotive, aerospace and scientific research sectors. Alphabet Inc. (NASDAQ:GOOGL) (NASDAQ:GOOG) CEO Sundar Pichai also outlined Project Suncatcher, which aims to launch a low Earth orbit data center powered directly by the sun. Musk hailed the idea.

Elon Musk's Mars Ambitions, SpaceX's Possible IPO

Musk has previously shared his ambitions of colonizing Mars, with SpaceX recently sharing an expedited timeline for the Starship rocket's lunar and Mars missions. Musk also shared that the Starship rocket can carry up to 100 people on board. He also hinted at the possibility of SpaceX going public sometime in the future. "I want to try to figure out some way for Tesla shareholders to participate in SpaceX," he said, adding that he wanted his supporters to be able to own SpaceX stock.

Major tech companies including SpaceX, Google, Amazon, and Nvidia are pursuing ambitious plans to deploy AI data centers in space, citing cost advantages from solar power and cooling. However, significant technical and logistical challenges remain.

The Space Data Center Vision

Major technology companies are setting their sights on space as the next frontier for artificial intelligence infrastructure. Elon Musk, CEO of SpaceX, Tesla, and xAI, has made bold predictions that AI compute in space will become the "lowest-cost option" within four to five years [1]. This ambitious timeline has captured the attention of industry leaders, though not without skepticism from some quarters.

Source: Tom's Hardware

The push toward orbital data centers represents a response to mounting constraints facing terrestrial AI infrastructure. As Musk explained at the U.S.-Saudi investment forum, the combined requirements for electrical supply and cooling are escalating to unprecedented levels [5]. He estimates that sustaining 200-300 GW of continuous power output would require massive and costly power plants, noting that a typical nuclear power plant produces around 1 GW of continuous power output.

Source: Benzinga

Industry Players Join the Race

The space-based AI initiative extends beyond Musk's companies. Google CEO Sundar Pichai recently announced Project Suncatcher, a conceptual venture aimed at building "a compact constellation of solar-powered satellites that carry Google TPUs and are connected by free-space optical links" [3]. If successful, this project could allow Google to scale exponentially while minimizing the impact on terrestrial resources.

Amazon founder Jeff Bezos has also entered the conversation, predicting that space-based data processing clusters will become commonplace within 10-20 years [2]. Bezos recently launched Project Prometheus, a new AI hardware startup focusing on applications in automotive, aerospace, and scientific research sectors. At Italian Tech Week, he told audiences that "we will be able to beat the cost of terrestrial data centers in space in the next couple of decades."

Source: Gizmodo

Nvidia has taken concrete steps through its Inception program, supporting Starcloud, a startup building satellites that orbit Earth while consuming solar energy to power AI processing [3]. Starcloud plans to launch Starcloud-1 with H100 GPUs, expected to offer 100 times the GPU compute power of any previous space operation.

Technical Advantages and Challenges

The appeal of space-based data centers stems from several theoretical advantages. As Musk emphasized, space offers "continuous solar" power without the need for batteries since "it's always sunny in space" [1]. Solar panels become cheaper in space because they don't require glass or framing, and cooling can be achieved through radiative emission.

However, Nvidia CEO Jensen Huang, while acknowledging the challenges of gigawatt-class terrestrial data centers, remains skeptical about the near-term feasibility of space-based alternatives. "That's the dream," Huang stated, highlighting the significant technical obstacles that remain [1]. He noted that current Nvidia GB300 racks weigh approximately 2 tons, of which roughly 1.95 tons is essentially cooling hardware.

The technical challenges are formidable. Megawatt-class GPU clusters would require enormous radiator wings spanning tens of thousands of square meters to reject heat through infrared emission alone [1]. High-performance AI accelerators like Blackwell or Rubin cannot currently survive geostationary orbit radiation without heavy shielding or complete radiation-hardened redesigns, which would significantly reduce performance.

Manufacturing and Launch Constraints

Musk has claimed that Starship rockets could deliver "around 300 GW per year of solar-powered AI satellites to orbit, maybe 500 GW" [4]. However, he acknowledged that "chip production is therefore the major piece of the puzzle to be solved," suggesting that Tesla's proposed TeraFab foundry would be needed to meet demand.

The scale of required launches presents another obstacle. Deploying multi-gigawatt systems would demand thousands of Starship-class flights, which appears unrealistic within Musk's four-to-five-year timeframe and would be extremely expensive [1].

Growing Concerns About Space Congestion

The rush toward space-based infrastructure raises concerns about orbital congestion and space debris. A recent study found that satellites in orbit are performing collision-avoidance maneuvers seven times more frequently than five years ago [2]. Additional challenges include high-bandwidth connectivity with Earth, autonomous servicing, debris avoidance, and robotic maintenance, all of which remain underdeveloped for the proposed scale of operations.

Summarized by Navi
