US National Labs Launch Massive AI Supercomputing Initiative to Maintain Global Technological Leadership

2 Sources

[1]

The exascale offensive: America's race to rule AI HPC

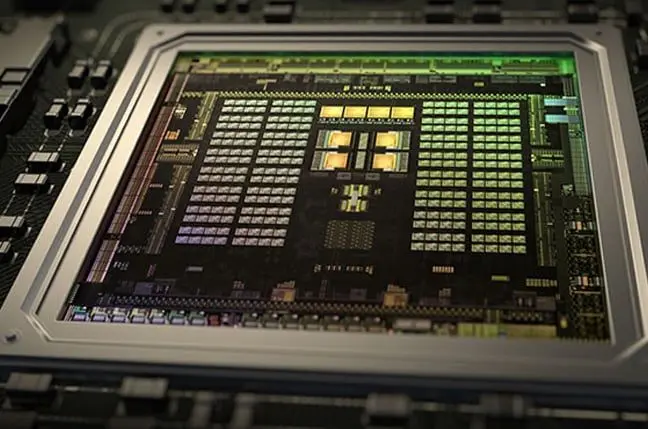

From nuclear weapons testing to climate modeling, nine new machines will give the US unprecedented computing firepower.

A silent arms race is accelerating in the world's most advanced laboratories. While headlines focus on chatbots and consumer AI, the United States is orchestrating something far more consequential: a massive expansion of supercomputing power that may reshape the future of science, security, and technological supremacy.

The stakes couldn't be higher. Across three fortress-like national laboratories, a new generation of machines is rising - systems so powerful they dwarf anything that came before. These aren't just faster computers. They are weapons in a global war in which leadership in artificial intelligence is expected to determine which nations shape the 21st century.

The Department of Energy's audacious plan will deploy nine cutting-edge supercomputers across the Argonne, Oak Ridge, and Los Alamos National Laboratories through unprecedented public-private partnerships. The scale is staggering: systems bristling with hundreds of thousands of next-generation processors, capable of quintillions of calculations per second, purpose-built to unlock AI applications - and to ensure America's rivals don't get there first.

At Argonne, two flagship systems named Solstice and Equinox will anchor what may become the world's most formidable AI computing infrastructure. Solstice alone will harness 100,000 Nvidia Blackwell GPUs, making it the largest AI supercomputer in the DOE's network - a silicon leviathan designed to push the boundaries of what's computationally possible. Argonne will also host three smaller systems aimed at specialized tasks: Minerva and Tara will focus on AI-based predictive modeling, while Janus is intended to support AI workforce development.
Together, these five systems at Argonne will form a multi-tier computing ecosystem serving applications from materials discovery and climate modeling to AI-driven experimental design.

Oak Ridge, home to the Frontier supercomputer, will receive two AI-accelerated machines built with AMD and HPE technology. The first is Lux, an AI cluster powered by AMD Instinct MI355X GPUs and EPYC CPUs, scheduled for deployment in early 2026. It will provide a secure, open AI software stack for urgent research priorities ranging from fusion energy simulations and fission reactor materials to quantum science. The second system, Discovery, is slated for 2028 and will be based on the HPE Cray Supercomputing GX5000 with next-generation AMD hardware: EPYC Venice processors and Instinct MI430X GPUs. Discovery is expected to significantly outperform Frontier (currently the world's second-fastest system), with performance well beyond one exaFLOPS.

Finally, Los Alamos will get two supercomputers focused on national security science, built in partnership with HPE and Nvidia. The purpose-built AI systems are named Mission and Vision. Mission will be dedicated to nuclear security modeling and simulation for stockpile stewardship, explicitly intended to assess and improve the reliability of nuclear weapons without live testing. Vision, by contrast, will support a broad range of open science projects in materials science, energy modeling, and biomedical research.

Given how fast all this is moving, an obvious question is why the US is ramping up supercomputing power now. A clear driver is the explosive growth of AI and the need for research infrastructure to support it. AI has been a national priority under both the Trump and Biden administrations, and these supercomputers reflect that focus. The surge aligns directly with Washington's AI Action Plan and its renewed emphasis on "AI-enabled science."
Beyond chasing speed, the US is positioning supercomputers as the backbone of national AI infrastructure, necessary for climate modeling, materials discovery, healthcare simulation, and defense. Modern science generates enormous datasets, from particle accelerators to genomic research, and AI algorithms become far more potent when paired with faster supercomputers. The DOE's Office of Science notes that AI is an ideal tool for extracting insights from big data, and that it becomes more useful as the speed and computational power of today's supercomputers grow.

New machines like Oak Ridge's Discovery and Lux are designed to leverage AI for science, expanding America's leadership in AI-powered scientific computing. These systems integrate traditional simulation with machine learning, allowing researchers to train frontier AI models for open science and analyze data at unprecedented speeds. The result is a step change in capability: complex problems, from climate modeling to biomedical research, can be tackled with AI-enhanced simulations, shortening the cycle from hypothesis to discovery. This directly supports the AI Action Plan's call to invest in AI-enabled science.

There is also a sense of urgency arising from international competition. US policymakers view leadership in AI and supercomputing as a strategic asset for economic competitiveness, scientific leadership, and national security, and the Trump administration has been vocal about winning the AI race and not ceding ground to rival nations.

Other major powers, meanwhile, are rapidly expanding their own HPC infrastructure. China, for instance, has been a formidable player in supercomputing for more than a decade. By 2020-21, China had reportedly built at least two exascale-class supercomputers, usually identified as an upgraded Sunway system and Tianhe-3. These machines are said to have reached exascale performance before any US system did, although neither was publicly benchmarked.
China has stopped submitting its top supercomputers to the international TOP500 list, so their true capabilities are somewhat opaque. US officials and experts believe this is partly due to trade tensions and sanctions: published details could expose China's systems to US export controls or give away strategic information. Regardless, China is widely believed to be at technological parity in HPC and possibly even ahead in some respects. This competitive pressure is a primary rationale for sustained US investment in HPC. The US response has been twofold: out-compute China by fielding superior machines (hence the drive for exascale and beyond), and slow China's progress through export controls on advanced semiconductors.

Europe, meanwhile, has organized a collective effort to boost its HPC capabilities through the EuroHPC Joint Undertaking. In September, it inaugurated its first exascale supercomputer, Jupiter in Germany, which received roughly €500 million of joint investment and runs on Nvidia's Grace Hopper platform.

By commissioning nine supercomputers essentially at once, the US is trying not only to maintain its lead but to widen it. DOE machines currently hold the top three spots in the TOP500 rankings. The forthcoming systems - Solstice, Equinox, Discovery, Lux, and Vision - are intended to extend that dominance in both traditional HPC and AI-specific computing for years to come.

The global HPC landscape in 2025 is one of rapid advancement and one-upmanship. China likely has multiple exascale systems but keeps them under wraps, while the US has publicly claimed the fastest benchmarks and is now pivoting to AI-centric upgrades. By infusing its new supercomputers with AI capabilities and deploying them more quickly through partnerships, the US aims to set the pace of innovation. The new supercomputers are significant not just for their number or their geopolitical context, but also for the technologies they introduce.
The arrival of next-generation hardware promises a dramatic performance boost. Both Nvidia and AMD are rolling out new chips in 2025-26 that promise order-of-magnitude gains in AI and simulation capacity, and the DOE is seizing the moment to incorporate them into national lab systems. We are witnessing the rise of a new generation of supercomputers that go beyond traditional CPUs and GPUs, incorporating specialized hardware and novel architectures optimized for AI.

One headline innovation is the Nvidia Vera Rubin platform, which will debut in the Los Alamos machines and may later be deployed at other labs. The platform pairs a CPU (Nvidia Vera, the successor to its Grace CPU) with a GPU (Nvidia Rubin), continuing the company's push to design its own CPUs for HPC alongside its GPUs. Integrated with Quantum-2/X800 InfiniBand networks at massive scale, Vera Rubin systems are expected to handle mixed workloads far more efficiently. For example, they will use lower numerical precision where possible to reach more than 2,000 exaFLOPS of AI throughput, while retaining the high precision that physics requires in other parts of the calculation.

On the AMD side, Oak Ridge's Discovery offers a peek at AMD's HPC roadmap. It will use AMD's EPYC Venice processors and Instinct MI430X GPUs, which are not yet on the market and presumably two generations beyond today's hardware. AMD has been pursuing heterogeneous computing as well: its Instinct MI300A already combines CPU and GPU cores in a single package, and the future MI400 series might push this further.

The timing of America's supercomputing push is no coincidence. It directly reflects the imperatives laid out in the country's AI strategy. From AI-enabled science breakthroughs to national security advantages, and from infrastructure building to workforce development, the new DOE supercomputers are accelerators for each pillar of the US AI Action Plan.
As HPC networks grow more intelligent and more powerful, we may look back on this moment as when the era of exascale truly took off into the era of AI-driven exa-intelligence. Assuming the bubble doesn't burst. ®

[2]

To meld AI with supercomputers, US national labs are picking up the pace

America's top scientific labs are now teaming up with tech giants like Nvidia and Oracle. These partnerships aim to speed up the arrival of powerful supercomputers for AI research, machines that will help develop drugs, batteries, and power plants faster. The government is shifting to a more businesslike strategy to keep pace with global AI advancements.

For years, Rick Stevens, a computer scientist at Argonne National Laboratory, pushed the notion of transforming scientific computing with artificial intelligence. But even as Stevens worked toward that goal, government labs such as Argonne (created in 1946 and sponsored by the Department of Energy) often took five years or more to develop powerful supercomputers that could be used for AI research. Stevens watched as companies such as Amazon, Microsoft and Elon Musk's xAI made faster gains by installing large AI systems in a matter of months.

Last month, Stevens welcomed a major change. The Energy Department began cutting deals with tech giants to hasten how quickly national labs can land bigger machines. At Argonne, outside Chicago, AI chip giant Nvidia and cloud provider Oracle agreed to deliver the lab's first two dedicated AI systems: a modest-size machine in 2026 and a larger system later. In a shift, the tech companies, rather than the government, are expected to pay at least some of the costs of building and operating the hardware. And other companies are expected to share use of the hardware.

"It's a much more businesslike strategy," said Stevens, an Argonne associate laboratory director and computer science professor at the University of Chicago. "It's much more of a Silicon Valley kind of strategy."

The AI boom is shaking up national laboratories that have led some of the most cutting-edge scientific research, increasingly pushing them to emulate the behavior of the tech giants.
That's because AI has added new urgency to the world of high-performance computing, promising to stunningly speed up tasks such as developing drugs, new batteries and power plants. Many of the labs now want dedicated AI hardware more quickly. That has led to deals with tech companies, including chipmakers Nvidia and Advanced Micro Devices. Oak Ridge National Laboratory, founded in 1943 and a leader in developing nuclear technology, recently said it expected an AI system called Lux to be installed in just six months in a project driven by AMD.

The moves dovetail with the Trump administration's efforts to bolster the United States in an AI race against China, in part by cutting red tape. "If we move at the old speed of government, we're going to get left behind," the energy secretary, Chris Wright, said at a briefing last month on the Oak Ridge announcements. "We're going to have dozens of partnerships with companies to build facilities at commercial speed."

The strategy emerged from talks between Wright and the chiefs of many major chip and AI companies that started in the spring, according to lab officials and company executives. It is just one facet of a flurry of activity in supercomputers, which was the subject of a major conference last week in St. Louis.

Nvidia helped galvanize the action last month when Jensen Huang, its CEO, unveiled plans for seven Department of Energy supercomputers. A day earlier, Oak Ridge announced two AMD-powered machines. Supercomputer veterans said they could not recall announcements of nine major systems in one week. "I don't think that's ever happened before," said Trish Damkroger, a senior vice president at Hewlett Packard Enterprise, which is building five of the new systems.

Supercomputers are room-size machines that have historically been used to create simulations of complex processes, like explosions or the movement of air past aircraft wings.
They use thousands of processor chips and high-speed networks, with the silicon components working together like one vast electronic brain. The machines have many similarities with the hardware in AI data centers, the computing hubs that power the development of the technology. But scientific chores typically demand high-precision calculations, processing what are known as 64-bit chunks of data at a time. Many AI chores require simpler math, so the latest systems use chunks of data as small as 4 bits to do many more calculations at once. Nvidia estimates that the large system being constructed for Argonne will handle more operations per second than the 500 largest conventional supercomputers combined. Such speeds can slash months off tasks like training large language models, the systems that underpin many AI products.

The potential benefits have spurred private investments that dwarf those of the government. Microsoft, for example, plans to spend $7 billion on a single AI computing complex in Wisconsin. The speediest supercomputer at Lawrence Livermore National Laboratory, by contrast, cost about $600 million.

Those differences have pushed national labs to seek specialized AI systems and change their buying practices, which often involved waiting for future chips to be developed and following procedures such as requests for proposals, or RFPs. "The old way we bought things is not the way we should do it going forward," said Gary Grider, the high-performance computing division leader at Los Alamos National Laboratory, which is expected to receive two new supercomputers.

This past spring, the Energy Department began brainstorming how to speed up. It offered to provide space and electrical power for potential AI data centers at 16 national labs. Wright also invited tech leaders to come up with proposals.
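The 64-bit versus low-bit trade-off described above can be illustrated with a short Python sketch. It is not how the labs or vendors measure anything: it simply stores one number in the 64-, 32- and 16-bit IEEE floating-point formats that the standard-library `struct` module supports, then reports bytes per value, how many values fit in a fixed 1 KiB buffer, and the rounding error introduced. The helper names `round_trip` and `precision_table` are hypothetical, and 4-bit AI formats are left out because the standard library has no such type.

```python
import struct

# Standard-library struct format codes for IEEE 754 binary floats.
# 4-bit AI formats (e.g. FP4) have no stdlib equivalent, so they are omitted.
FORMATS = [("64-bit", "d"), ("32-bit", "f"), ("16-bit", "e")]


def round_trip(value: float, fmt: str) -> float:
    """Store `value` in the given float format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def precision_table(value: float, buffer_bytes: int = 1024):
    """Return (label, bytes per value, values per buffer, absolute error) rows."""
    rows = []
    for label, fmt in FORMATS:
        size = struct.calcsize(fmt)
        error = abs(round_trip(value, fmt) - value)
        rows.append((label, size, buffer_bytes // size, error))
    return rows


if __name__ == "__main__":
    for label, size, per_buffer, error in precision_table(1 / 3):
        print(f"{label}: {size} bytes/value, {per_buffer} values per KiB, error {error:.1e}")
```

Each halving of the width doubles how many values fit in the same memory and bandwidth, while the rounding error grows sharply. That is why AI workloads that tolerate coarse arithmetic can exploit narrow formats, and physics simulations generally cannot.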
One who responded was Lisa Su, the CEO of AMD, which offered to build a machine in just several months at Oak Ridge if the lab provided a location and maintained the system, Wright said at the briefing last month. He recalled Su saying: "'I'm going to pay for it. I'm going to build it, and then we're going to split the use of it.'" Su said there were multiple meetings with Wright but did not discuss financial arrangements.

Oak Ridge, historically home to some of the largest scientific machines, said in October that it would add to that line in three years with a machine called Discovery. It expects the additional Lux system to help train specialized AI models and accelerate research in nuclear fusion and the discovery of new materials, said Gina Tourassi, an associate laboratory director.

Stevens has similar hopes at Argonne, where five new supercomputers were announced last month. Nvidia and Oracle first plan to deliver an AI system called Equinox, which has 10,000 of Nvidia's coveted Blackwell processors. They later expect to deliver Solstice, powered by 100,000 of those chips.

Besides reaching researchers, Stevens said, he expects the machines to attract companies pushing the frontiers of AI. "Instead of thinking of the government or DOE as the only customer, think of us as at the center of a consortium," Stevens said. And instead of buying the new hardware outright, the lab can essentially pay for use of the systems as needed, he said.

Many questions remain about the changing approach, including the potential impact on taxpayers and whether Congress will have input. "No matter how good these systems are for American innovation and leadership, it is unusual to have the funding methods and RFP process to be obscured," said Addison Snell, CEO of Intersect360 Research, a firm that tracks the supercomputer industry.
Most questions about the new purchasing approach should be answered after negotiations among the parties are completed, said Ian Buck, vice president and general manager of Nvidia's accelerated computing business. But he added that Nvidia would not be paying for all the chips going to Argonne. "It's not a donation," Buck said. This article originally appeared in The New York Times.


The US Department of Energy is deploying nine cutting-edge AI supercomputers across three national laboratories through unprecedented public-private partnerships, marking a strategic shift to accelerate scientific computing capabilities and maintain America's competitive edge in the global AI race.

Unprecedented Supercomputing Expansion

The United States is embarking on the largest expansion of AI-focused supercomputing infrastructure in its history, with the Department of Energy announcing plans to deploy nine cutting-edge systems across three premier national laboratories. The initiative represents a fundamental shift in how America approaches high-performance computing, moving from traditional government procurement to rapid, Silicon Valley-style partnerships with technology giants [1].

The scale of this undertaking is unprecedented in the supercomputing world. Industry veterans said they could not recall announcements of nine major systems in a single week, underscoring the urgency behind the initiative. The systems will be distributed across Argonne National Laboratory outside Chicago, Oak Ridge National Laboratory in Tennessee, and Los Alamos National Laboratory in New Mexico [2].

Flagship Systems and Technical Specifications

At Argonne National Laboratory, two flagship systems named Solstice and Equinox will anchor what may become the world's most formidable AI computing infrastructure. Solstice alone will harness 100,000 Nvidia Blackwell GPUs, making it the largest AI supercomputer in the DOE's network. The laboratory will also house three smaller specialized systems: Minerva and Tara for AI-based predictive modeling, and Janus for AI workforce development [1].

Oak Ridge National Laboratory, home to the current Frontier supercomputer, will receive two AI-accelerated machines built with AMD and HPE technology. The first system, Lux, will be powered by AMD Instinct MI355X GPUs and EPYC CPUs and is scheduled for deployment in early 2026. The second system, Discovery, based on the HPE Cray Supercomputing GX5000 and slated for 2028, will use next-generation AMD hardware including EPYC Venice processors and Instinct MI430X GPUs, with performance expected to significantly exceed one exaFLOPS [1].

Los Alamos National Laboratory will receive two supercomputers focused on national security science through partnerships with HPE and Nvidia. The systems, named Mission and Vision, will serve distinct purposes: Mission will be dedicated to nuclear stockpile stewardship, assessing the reliability of nuclear weapons without live testing, while Vision will support broader open science projects in materials science, energy modeling, and biomedical research [1].

Revolutionary Partnership Model

The initiative represents a dramatic departure from traditional government procurement practices. Instead of the development cycles of five years or more that have characterized national laboratory supercomputer projects, these new systems are being deployed through public-private partnerships in which technology companies share costs and accelerate timelines. Rick Stevens, an associate laboratory director at Argonne, described this as "a much more businesslike strategy" and "much more of a Silicon Valley kind of strategy" [2].

This shift emerged from discussions between Energy Secretary Chris Wright and the chiefs of major chip and AI companies that began in the spring. The approach allows for rapid deployment, with Oak Ridge's Lux system expected to be installed in just six months rather than over multiple years. As Wright put it, "If we move at the old speed of government, we're going to get left behind" [2].

References

[1] The exascale offensive: America's race to rule AI HPC

[2] To meld AI with supercomputers, US national labs are picking up the pace (originally published in The New York Times)

Summarized by Navi
