AI Supercomputers: Exponential Growth Raises Concerns Over Cost and Power Consumption

3 Sources

[1]

Within six years, building the leading AI data center may cost $200B | TechCrunch

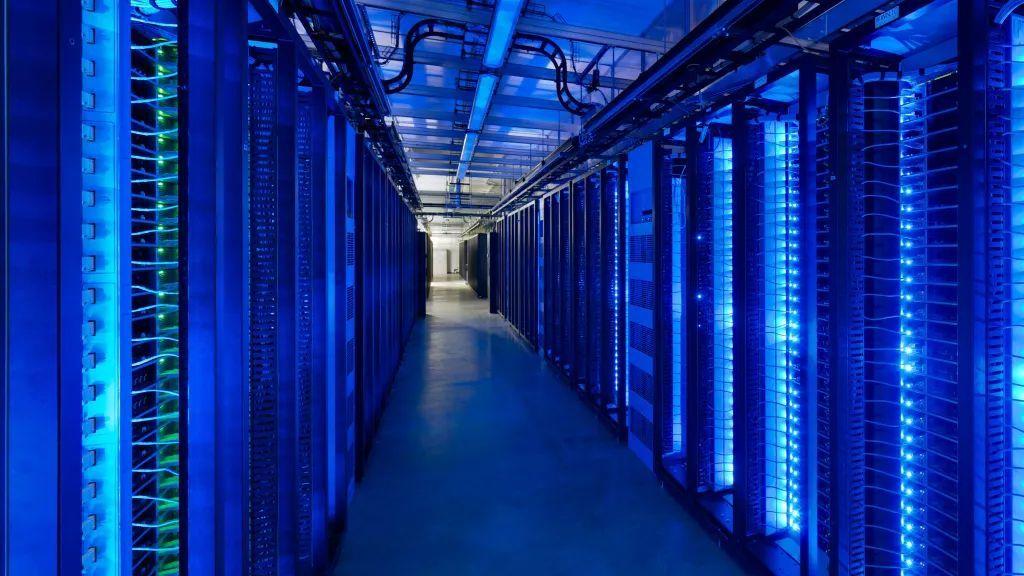

Data centers to train and run AI may soon contain millions of chips, cost hundreds of billions of dollars, and require power equivalent to a large city's electricity grid, if the current trends hold. That's according to a new study from researchers at Georgetown, Epoch AI, and Rand, which looked at the growth trajectory of AI data centers around the world from 2019 to this year. The co-authors compiled and analyzed a data set of over 500 AI data center projects and found that, while the computational performance of data centers is more than doubling annually, so are the power requirements and capital expenditures.

The findings illustrate the challenge in building the necessary infrastructure to support the development of AI technologies in the coming decade. OpenAI, which recently said that roughly 10% of the world's population is using its ChatGPT platform, has a partnership with Softbank and others to raise up to $500 billion to establish a network of AI data centers in the U.S. (and possibly elsewhere). Other tech giants, including Microsoft, Google, and AWS, have collectively pledged to spend hundreds of millions of dollars this year alone expanding their data center footprints.

According to the Georgetown, Epoch, and Rand study, the hardware costs for AI data centers like xAI's Colossus, which has a price tag of around $7 billion, increased 1.9x each year between 2019 and 2025, while power needs climbed 2x annually over the same period. (Colossus draws an estimated 300 megawatts of power, as much as 250,000 households.)

The study also found that data centers have become much more energy efficient in the last five years, with one key metric -- computational performance per watt -- increasing 1.34x each year from 2019 to 2025. Yet these improvements won't be enough to make up for growing power needs. By June 2030, the leading AI data center may have 2 million AI chips, cost $200 billion, and require 9 GW of power -- roughly the output of 9 nuclear reactors.

It's not a new revelation that AI data center electricity demands are on pace to greatly strain the power grid. Data center energy intake is forecast to grow 20% by 2030, according to a recent Wells Fargo analysis. That could push renewable sources of power, which are dependent on variable weather, to their limits -- spurring a ramp-up in non-renewable, environmentally damaging electricity sources like fossil fuels.

AI data centers also pose other environmental threats, such as high water consumption, and take up valuable real estate, as well as erode state tax bases. A study by Good Jobs First, a Washington, D.C.-based nonprofit, estimates that at least 10 states lose over $100 million per year in tax revenue to data centers, the result of overly generous incentives.

It's possible that these projections may not come to pass, of course, or that the time scales are off-kilter. Some hyperscalers, like AWS and Microsoft, have pulled back on data center projects in the last several weeks. In a note to investors in mid-April, analysts at Cowen observed that there's been a "cooling" in the data center market in early 2025, signaling the industry's fear of unsustainable expansion.

[2]

AI supercomputers may cost $200B, use 9 nuclear reactors' power

The next generation of AI supercomputers is shaping up to be massive in size, cost, and power demand. A new study by researchers from Georgetown, Epoch AI, and Rand reveals just how immense the infrastructure required to train and run AI systems may become by the end of the decade. Between 2019 and 2025, leading AI data centers' hardware costs and power consumption have doubled annually. And if this exponential growth continues, we may see a single AI supercomputer in 2030 housing two million chips, costing $200 billion, and consuming 9 gigawatts of power, roughly what nine nuclear reactors produce.

[3]

AI Supercomputers May Run Into Power Constraints by 2030 | PYMNTS.com

While companies building artificial intelligence (AI)-powered supercomputers are likely to be able to get the chips and capital they need, they may run into power constraints by 2030, according to research institute Epoch AI.

"If the observed trends continue, the leading AI supercomputer in June 2030 will need 2 million AI chips, cost $200 billion and require 9GW of power," the company said in a paper posted Wednesday (April 23). "Historical AI chip production growth and major capital commitments like the $500 billion Project Stargate suggest the first two requirements can likely be met. However, 9GW of power is the equivalent of nine nuclear reactors, a scale beyond any existing industrial facilities."

To overcome this challenge, companies may shift to decentralized training approaches that would allow them to distribute their training across AI supercomputers in several locations, according to the paper.

Epoch AI said this in a paper in which it reported that since 2019, AI supercomputers' computation performance grew 2.5 times per year, while their power requirements and hardware costs doubled each year. The computational performance of the AI supercomputers has been driven by the use of more and better chips, the report said. Since 2019, chip quantity has increased 1.6 times per year and chip performance has increased 1.6 times per year.

"While systems with more than 10,000 chips were rare in 2019, several companies deployed AI supercomputers more than 10 times that size in 2024, such as xAI's Colossus with 200,000 AI chips," the paper said.

Epoch AI said in the paper that it found that the share of AI supercomputers' computing power owned by companies rather than the public sector rose from 40% in 2019 to 80% in 2025. The company also found that 75% of AI supercomputers' computing power is hosted in the United States, while the second-largest share, 15%, is hosted in China.

xAI launched its Colossus 100k H100 training cluster in September, with owner Elon Musk saying at the time that Colossus would double in size to 200k (50k H200s) within months.

A new study reveals the staggering growth of AI data centers, projecting that by 2030, leading AI supercomputers may cost $200 billion and consume as much power as nine nuclear reactors, raising concerns about sustainability and infrastructure challenges.

Exponential Growth in AI Supercomputing

A study by researchers from Georgetown, Epoch AI, and Rand has mapped the growth trajectory of AI data centers worldwide. The research, which analyzed over 500 AI data center projects from 2019 to 2025, finds that the computational performance of these facilities is more than doubling annually [1].

Key findings show that hardware costs for AI data centers have increased 1.9x each year, while power requirements have climbed 2x annually during the same period. This rapid expansion is exemplified by projects like xAI's Colossus, which has a price tag of around $7 billion and draws an estimated 300 megawatts of power, as much as 250,000 households [1].

Projected Costs and Power Demands

If current trends persist, the study projects that by June 2030, the leading AI data center may require:

- 2 million AI chips

- $200 billion in costs

- 9 gigawatts of power (equivalent to the output of 9 nuclear reactors)
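The three figures above are roughly what simple compound growth gives when applied to a present-day system. The sketch below is a back-of-the-envelope check, not the study's method: it assumes xAI's Colossus (about 200,000 chips, $7 billion, 300 megawatts) as a 2025 baseline, a five-year horizon, and the annual growth rates reported in the sources (1.6x for chip count, 1.9x for hardware cost, 2x for power). The function name `extrapolate` and the dictionary keys are illustrative only.

```python
# Back-of-the-envelope compound-growth extrapolation.
# Assumptions (not taken from the study's methodology): Colossus is used
# as a 2025 baseline and the horizon is five years, to roughly June 2030.


def extrapolate(baseline: float, annual_growth: float, years: float) -> float:
    """Return baseline * annual_growth ** years (compound growth)."""
    return baseline * annual_growth ** years


YEARS = 5  # assumed horizon

projections = {
    # Chip quantity grew about 1.6x per year per the cited sources.
    "chips": extrapolate(200_000, 1.6, YEARS),
    # Hardware cost grew about 1.9x per year; baseline in billions of USD.
    "cost_usd_billions": extrapolate(7, 1.9, YEARS),
    # Power grew about 2x per year; baseline 300 MW expressed in GW.
    "power_gw": extrapolate(0.3, 2.0, YEARS),
}

for name, value in projections.items():
    print(f"{name}: {value:,.1f}")
```

Under these assumptions the result is about 2.1 million chips, roughly $173 billion, and 9.6 GW, the same order of magnitude as the study's projections. The gap is expected, since the study extrapolates a trend fitted across many systems rather than a single baseline.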

These projections highlight the immense scale of infrastructure needed to support AI technologies in the coming decade [2].

Industry Response and Investments

Tech giants are already making significant moves in response to these trends. OpenAI, in partnership with SoftBank and others, is reportedly working to raise up to $500 billion to establish a network of AI data centers in the U.S. and potentially other locations. Similarly, Microsoft, Google, and AWS have collectively pledged to spend hundreds of millions of dollars this year alone to expand their data center footprints [1].

Energy Efficiency and Environmental Concerns

Energy efficiency has improved, with computational performance per watt increasing 1.34x each year from 2019 to 2025, but these gains will not be enough to offset power needs that are doubling annually. A recent Wells Fargo analysis forecasts 20% growth in data center energy intake by 2030, which could push renewable power sources to their limits and spur greater reliance on fossil fuels [1].

Geographic Distribution and Ownership

Epoch AI's research indicates a significant shift in the ownership and location of AI computing power. The share owned by private companies has risen from 40% in 2019 to 80% in 2025. Geographically, 75% of AI supercomputers' computing power is hosted in the United States, followed by China with a 15% share [3].

Challenges and Potential Solutions

The researchers expect chips and capital to remain available, but power may become the binding constraint by 2030. To address this, companies may shift to decentralized training approaches that distribute training across AI supercomputers in several locations [3].

Market Dynamics and Future Outlook

These projections may not come to pass, or the time scales may be off. Some hyperscalers, including AWS and Microsoft, have pulled back on data center projects, and analysts at Cowen observed a "cooling" in the data center market in early 2025, signaling industry concern about unsustainable expansion [1].

As the AI industry continues to evolve rapidly, balancing technological advancement with environmental sustainability and infrastructure capabilities remains a critical challenge for the coming years.

Summarized by Navi
