Biological Computers: A Slow but Energy-Efficient Alternative to Traditional Computing

2 Sources

[1]

Biological computers could use far less energy than current technology by working more slowly

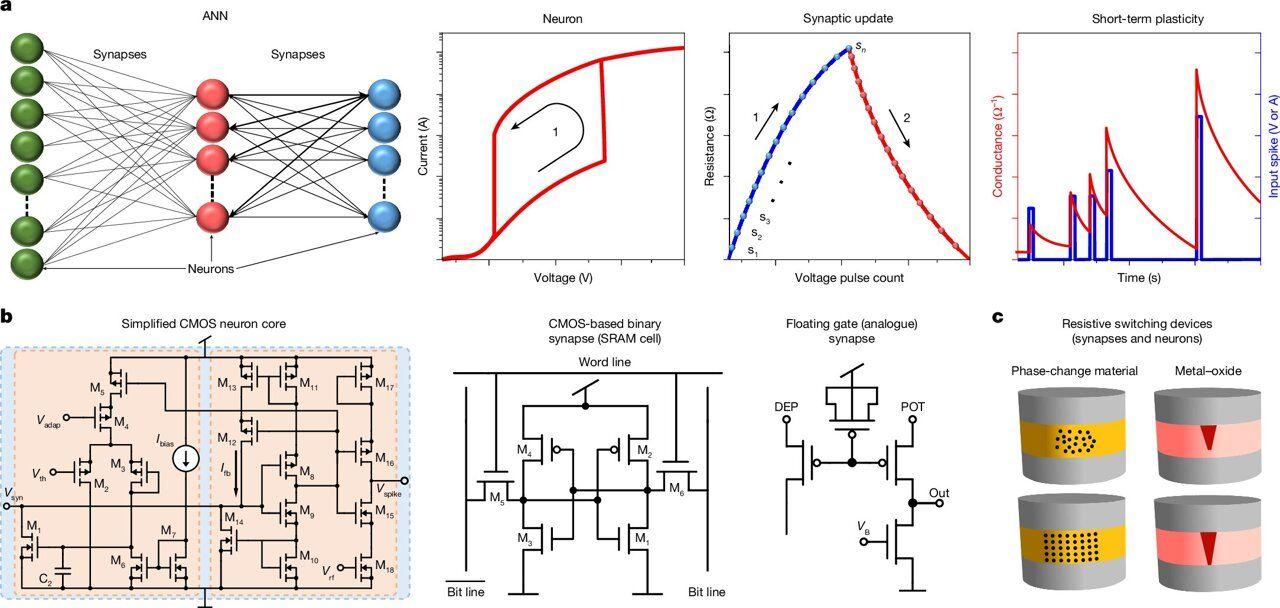

Modern computers are a triumph of technology. A single computer chip contains billions of nanometer-scaled transistors that operate extremely reliably and at a rate of millions of operations per second. However, this high speed and reliability come at the cost of significant energy consumption: data centers and household IT appliances like computers and smartphones account for around 3% of global electricity demand, and the use of AI is likely to drive even more consumption. But what if we could redesign the way computers work so that they could perform computation tasks as quickly as today while using far less energy? Here, nature may offer us some potential solutions.

In 1961, the IBM scientist Rolf Landauer addressed the question of whether we need to spend so much energy on computing tasks. He came up with the Landauer limit, which states that a single computational task -- for example setting a bit, the smallest unit of computer information, to have a value of zero or one -- must expend at least about 10⁻²¹ joules (J) of energy. This is a very small amount, notwithstanding the many billions of tasks that computers perform. If we could operate computers at such levels, the amount of electricity used in computation and managing waste heat with cooling systems would be of no concern.

However, there is a catch. A bit operation can approach the Landauer limit only if it is carried out infinitely slowly. Computation in any finite time period is predicted to cost an additional amount that is proportional to the rate at which computations are performed. In other words, the faster the computation, the more energy is likely to be used. More recently, this has been demonstrated by experiments set up to simulate computational processes: the energy dissipation begins to increase measurably when you carry out more than about one operation per second.
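
To make the orders of magnitude concrete, the following is a minimal sketch rather than anything taken from the research itself. It evaluates the standard expression for the Landauer limit, k_B · T · ln 2, at an assumed room temperature of 300 K, and then adds an excess cost proportional to the operation rate, as the text describes. The function names, the 300 K temperature, and the way the proportionality coefficient is calibrated (so that a billion operations per second costs roughly 10⁻¹¹ J per operation) are all illustrative assumptions.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # Boltzmann constant (exact SI value)


def landauer_limit(temperature_k: float = 300.0) -> float:
    """Minimum energy in joules to set or erase one bit at this temperature."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)


def energy_per_operation(rate_hz: float, excess_coeff: float,
                         temperature_k: float = 300.0) -> float:
    """Toy model: Landauer limit plus an excess cost proportional to the rate."""
    return landauer_limit(temperature_k) + excess_coeff * rate_hz


limit = landauer_limit()

# Assumption: calibrate the coefficient so that 1e9 operations per second
# costs about 1e-11 J per operation. This is a toy calibration, not a
# measured device parameter.
FAST_RATE_HZ = 1e9
FAST_ENERGY_J = 1e-11
excess_coeff = (FAST_ENERGY_J - limit) / FAST_RATE_HZ

fast = energy_per_operation(FAST_RATE_HZ, excess_coeff)
slow = energy_per_operation(1.0, excess_coeff)  # one operation per second

print(f"Landauer limit at 300 K: {limit:.2e} J")
print(f"Fast (1e9 ops/s): {fast:.2e} J per operation, {fast / limit:.1e} x the limit")
print(f"Slow (1 op/s):    {slow:.2e} J per operation, {slow / limit:.1f} x the limit")
```

Under these assumptions, the slow operation stays within an order of magnitude of the limit while the fast one sits roughly nine to ten orders of magnitude above it. A real processor's energy use has many contributions that this linear model ignores.
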
Processors that operate at a clock speed of a billion cycles per second, which is typical in today's semiconductors, use about 10⁻¹¹ J per bit -- about ten billion times more than the Landauer limit. A solution may be to design computers in a fundamentally different way. The reason that traditional computers work at a very fast rate is that they operate serially, one operation at a time. If instead one could use a very large number of "computers" working in parallel, then each could work much more slowly. For example, one could replace a "hare" processor that performs a billion operations in one second with a billion "tortoise" processors, each taking a full second to do its task, at a far lower energy cost per operation. A 2023 paper that I co-authored showed that a computer could then operate near the Landauer limit, using orders of magnitude less energy than today's computers.

Tortoise power

Is it even possible to have billions of independent "computers" working in parallel? Parallel processing on a smaller scale is already common today, for example when around 10,000 graphics processing units (GPUs) run at the same time to train artificial intelligence models. However, this is not done to reduce speed and increase energy efficiency, but rather out of necessity. The limits of heat management make it impossible to further increase the computation power of a single processor, so processors are used in parallel.

An alternative computing system that is much closer to what would be required to approach the Landauer limit is known as network-based biocomputation. It makes use of biological motor proteins, which are tiny machines that help perform mechanical tasks inside cells. This system involves encoding a computational task into a nanofabricated maze of channels with carefully designed intersections, which are typically made of polymer patterns deposited on silicon wafers.
All the possible paths through the maze are explored in parallel by a very large number of long thread-like molecules called biofilaments, which are powered by the motor proteins. Each filament is just a few nanometers in diameter and about a micrometer (1,000 nanometers) long. Each acts as an individual "computer," encoding information in its spatial position in the maze. This architecture is particularly suitable for solving so-called combinatorial problems. These are problems with many possible solutions, such as scheduling tasks, which are computationally very demanding for serial computers.

Experiments confirm that such a biocomputer requires between 1,000 and 10,000 times less energy per computation than an electronic processor. This is possible because biological motor proteins have themselves evolved to use no more energy than needed to perform their task at the required rate. This is typically a few hundred steps per second, a million times slower than transistors.

At present, only small biological computers have been built by researchers to prove the concept. To be competitive with electronic computers in terms of speed and computation, and explore very large numbers of possible solutions in parallel, network-based biocomputation needs to be scaled up. A detailed analysis shows that this should be possible with current semiconductor technology, and could profit from another great advantage of biomolecules over electrons, namely their ability to carry individual information, for example in the form of a DNA tag. There are nevertheless numerous obstacles to scaling these machines, including learning how to precisely control each of the biofilaments, reducing their error rates, and integrating them with current technology. If these kinds of challenges can be overcome in the next few years, the resulting processors could solve certain types of challenging computational problems with a massively reduced energy cost.

Neuromorphic computing

Alternatively, it is an interesting exercise to compare the energy use in the human brain. The brain is often hailed as being very energy-efficient, using just a few watts -- far less than AI models -- for operations like breathing or thinking. Yet it doesn't seem to be the basic physical elements of the brain that save energy. The firing of a synapse, which may be compared to a single computational step, actually uses about the same amount of energy as a transistor requires per bit.

However, the architecture of the brain is very highly interconnected and works fundamentally differently from both electronic processors and network-based biocomputers. So-called neuromorphic computing attempts to emulate this aspect of brain operations, but using novel types of computer hardware as opposed to biocomputing. It would be very interesting to compare neuromorphic architectures to the Landauer limit to see whether the same kinds of insights from biocomputing could transfer to them in the future. If so, they too could hold the key to a huge leap forward in computer energy efficiency in the years ahead.

[2]

Biological computers could use far less energy than current technology - by working more slowly

Lund University provides funding as a member of The Conversation UK. Modern computers are a triumph of technology. A single computer chip contains billions of nanometre-scaled transistors that operate extremely reliably and at a rate of millions of operations per second. However, this high speed and reliability comes at the cost of significant energy consumption: data centres and household IT appliances like computers and smartphones account for around 3% of global electricity demand, and the use of AI is likely to drive even more consumption. But what if we could redesign the way computers work so that they could perform computation tasks as quickly as today while using far less energy? Here, nature may offer us some potential solutions. The IBM scientist Rolf Landauer addressed the question of whether we need to spend so much energy on computing tasks in 1961. He came up with the Landauer limit, which states that a single computational task - for example setting a bit, the smallest unit of computer information, to have a value of zero or one - must expend about 10⁻²¹ joules (J) of energy. This is a very small amount, notwithstanding the many billions of tasks that computers perform. If we could operate computers at such levels, the amount of electricity used in computation and managing waste heat with cooling systems would be of no concern. However, there is a catch. To perform a bit operation near the Landauer limit, it needs to be carried out infinitely slowly. Computation in any finite time period is predicted to cost an additional amount that is proportional to the rate at which computations are performed. In other words, the faster the computation, the more energy is likely used. More recently this has been demonstrated by experiments set up to simulate computational processes: the energy dissipation begins to increase measurably when you carry out more than about one operation per second. 
Processors that operate at a clock speed of a billion cycles per second, which is typical in today's semiconductors, use about 10⁻¹¹ J per bit - about ten billion times more than the Landauer limit. A solution may be to design computers in a fundamentally different way. The reason that traditional computers work at a very fast rate is that they operate serially, one operation at a time. If instead one could use a very large number of "computers" working in parallel, then each could work much slower. For example, one could replace a "hare" processor that performs a billion operations in one second by a billion "tortoise" processors, each taking a full second to do their task, at a far lower energy cost per operation. A 2023 paper that I co-authored showed that a computer could then operate near the Landauer limit, using orders of magnitude less energy than today's computers.

Tortoise power

Is it even possible to have billions of independent "computers" working in parallel? Parallel processing on a smaller scale is commonly used already today, for example when around 10,000 graphics processing units or GPUs run at the same time for training artificial intelligence models. However, this is not done to reduce speed and increase energy efficiency, but rather out of necessity. The limits of heat management make it impossible to further increase the computation power of a single processor, so processors are used in parallel.

An alternative computing system that is much closer to what would be required to approach the Landauer limit is known as network-based biocomputation. It makes use of biological motor proteins, which are tiny machines that help perform mechanical tasks inside cells. This system involves encoding a computational task into a nanofabricated maze of channels with carefully designed intersections, which are typically made of polymer patterns deposited on silicon wafers.
All the possible paths through the maze are explored in parallel by a very large number of long thread-like molecules called biofilaments, which are powered by the motor proteins. Each filament is just a few nanometres in diameter and about a micrometre long (1,000 nanometres). They each act as an individual "computer", encoding information by its spatial position in the maze. This architecture is particularly suitable for solving so-called combinatorial problems. These are problems with many possible solutions, such as scheduling tasks, which are computationally very demanding for serial computers. Experiments confirm that such a biocomputer requires between 1,000 and 10,000 times less energy per computation than an electronic processor. This is possible because biological motor proteins are themselves evolved to use no more energy than needed to perform their task at the required rate. This is typically a few hundred steps per second, a million times slower than transistors. At present, only small biological computers have been built by researchers to prove the concept. To be competitive with electronic computers in terms of speed and computation, and explore very large numbers of possible solutions in parallel, network-based biocomputation needs to be scaled up. A detailed analysis shows that this should be possible with current semiconductor technology, and could profit from another great advantage of biomolecules over electrons, namely their ability to carry individual information, for example in the form of a DNA tag. There are nevertheless numerous obstacles to scaling these machines, including learning how to precisely control each of the biofilaments, reducing their error rates, and integrating them with current technology. If these kinds of challenges can be overcome in the next few years, the resulting processors could solve certain types of challenging computational problems with a massively reduced energy cost. 

Neuromorphic computing

Alternatively, it is an interesting exercise to compare the energy use in the human brain. The brain is often hailed as being very energy efficient, using just a few watts - far less than AI models - for operations like breathing or thinking. Yet it doesn't seem to be the basic physical elements of the brain that save energy. The firing of a synapse, which may be compared to a single computational step, actually uses about the same amount of energy as a transistor requires per bit.

However, the architecture of the brain is very highly interconnected and works fundamentally differently from both electronic processors and network-based biocomputers. So-called neuromorphic computing attempts to emulate this aspect of brain operations, but using novel types of computer hardware as opposed to biocomputing. It would be very interesting to compare neuromorphic architectures to the Landauer limit to see whether the same kinds of insights from biocomputing could be transferable to them in future. If so, they too could hold the key to a huge leap forward in computer energy efficiency in the years ahead.

Researchers explore the potential of biological computers that could significantly reduce energy consumption in computing by operating at slower speeds, inspired by nature's efficiency.

The Energy Dilemma in Modern Computing

Modern computers, while marvels of technology, come with a significant energy cost. Data centers and household IT devices account for approximately 3% of global electricity demand, with AI usage potentially driving this figure even higher [1][2]. This energy consumption has prompted researchers to explore alternative computing methods that could maintain computational power while drastically reducing energy use.

The Landauer Limit and the Speed-Energy Trade-off

In 1961, IBM scientist Rolf Landauer introduced the concept of the Landauer limit, which states that a single computational task must expend at least about 10^-21 joules (J) of energy [1]. However, this minimal energy expenditure is only achievable when operations are performed infinitely slowly. Current processors, operating at around a billion cycles per second, use about 10^-11 J per bit, ten billion times more than the Landauer limit [1][2].

The "Tortoise" Approach to Computing

Researchers are now considering a fundamentally different approach to computer design. Instead of relying on fast, serial processing, they propose using a vast number of slower "computers" working in parallel. This concept, likened to replacing a single "hare" processor with billions of "tortoise" processors, could potentially allow computers to operate near the Landauer limit, using significantly less energy [1][2].

Network-Based Biocomputation: Nature's Solution

An innovative approach called network-based biocomputation is being explored as a potential solution. This system utilizes biological motor proteins and biofilaments to perform computations [1][2]. Key features of this approach include:

- Nanofabricated mazes: Computational tasks are encoded into a maze of channels with carefully designed intersections.

- Parallel processing: Large numbers of biofilaments explore all possible paths simultaneously.

- Energy efficiency: Experiments show biocomputers require 1,000 to 10,000 times less energy per computation than electronic processors [1][2].
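
As a rough illustration of how a maze can encode a combinatorial problem, the sketch below uses subset sum as a stand-in; the summary above does not name a specific problem, so this choice, the example numbers, and both function names are assumptions. Each number is modelled as one junction where a filament either goes straight on (skipping the number) or steps sideways by that amount (including it), so the exit position equals the sum of the numbers included. The loops here run serially and only imitate what the filaments would do in parallel.

```python
import random
from collections import Counter
from itertools import product


def explore_all_paths(numbers):
    """Return every exit position reachable through the hypothetical maze."""
    exits = set()
    # One True/False choice per junction: True means "include this number".
    for choices in product((False, True), repeat=len(numbers)):
        position = sum(n for n, take in zip(numbers, choices) if take)
        exits.add(position)
    return exits


def simulate_filaments(numbers, n_filaments, seed=0):
    """Send filaments through the maze, each choosing randomly at junctions."""
    rng = random.Random(seed)
    exit_counts = Counter()
    for _ in range(n_filaments):
        position = 0
        for n in numbers:
            if rng.random() < 0.5:  # assumed 50/50 choice at each junction
                position += n
        exit_counts[position] += 1
    return exit_counts


numbers = [2, 5, 9]  # hypothetical problem instance
reachable = explore_all_paths(numbers)
print(sorted(reachable))  # [0, 2, 5, 7, 9, 11, 14, 16]

counts = simulate_filaments(numbers, n_filaments=1000)
for position in sorted(counts):
    print(f"exit {position}: {counts[position]} filaments")
```

Reading which exits are populated answers the question "which totals can be formed from these numbers?". In this toy version, a target sum is solvable exactly when its exit appears in `reachable`.
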

Advantages and Challenges of Biological Computing

Biological computers offer several advantages:

- Energy efficiency: They operate closer to the Landauer limit.

- Parallel processing: Ideal for solving combinatorial problems.

- Information carrying: Biomolecules can carry individual information, such as DNA tags [1][2].

However, scaling up these systems faces challenges:

- Precise control of biofilaments

- Reducing error rates

- Integration with current technology [1][2]


Future Prospects and Implications

While only small-scale biological computers have been built so far, researchers believe scaling up is possible with current semiconductor technology. If successful, these processors could solve certain types of challenging computational problems with significantly reduced energy costs [1][2]. This development could have far-reaching implications for the tech industry, potentially revolutionizing data center operations and reducing the carbon footprint of computing.

Summarized by Navi
