China Implements Strict AI Content Labeling Law on Social Media Platforms

5 Sources

[1]

Chinese social media firms comply with strict AI labelling law, making it clear to users and bots what's real and what's not

It's part of a broader push by the Cyberspace Administration of China to have greater oversight over the AI industry and the content it produces. Chinese social media companies have begun requiring users to classify AI-generated content that is uploaded to their services in order to comply with new government legislation. By law, the sites and services now need to apply a watermark or explicit indicator of AI content for users, as well as include metadata for web crawling algorithms to make it clear what was generated by a human and what was not, according to SCMP. Countries and companies the world over have been grappling with how to deal with AI-generated content since the explosive growth of popular AI tools like ChatGPT, Midjourney, and Dall-E. After drafting the new law in March, China has now implemented it, taking the lead in increasing oversight and curtailing rampant use with its new labeling law making social media companies more responsible for the content on their platforms. Chinese officials claim the law is designed to help combat AI misinformation and fraud, and is applicable to all the major social media firms. That includes Tencent Holdings' WeChat - a Chinese WhatsApp equivalent - which has over 1.4 billion users, and Bytedance's TikTok alternative, Douyin, which has around a billion users of its own. Social media platform Weibo, with its 500 million-plus monthly active users, is also impacted, as is social media and ecommerce platform RedNote. Each of them posted a message in recent days highlighting to users that anyone submitting AI-generated content will be required under law to label it as such. They also include options for users to flag AI-generated content that is not correctly labelled, and reserve the right to delete anything that is uploaded without appropriate labeling. 
The Cyberspace Administration of China (CAC) has also announced undisclosed "penalties" for those found to be using AI to disseminate misinformation or manipulate public opinion, with particular scrutiny said to be placed on paid online commentators. Although China is the first major country to implement an AI content labeling system through legislation, similar conventions are being considered elsewhere, too. Just last week, the Internet Engineering Task Force proposed a new AI header field which would use metadata to disclose if content was AI-generated or not. That wouldn't necessarily make it easier for humans to tell the difference, but it would give algorithms a heads up that what they're viewing may not be human-crafted. Google's new Pixel 10 phones also implement C2PA credentials for the camera, which can help users to know if an image was edited with AI or not. Although there are already numerous reports of users circumventing these safeguards, they are becoming more common. With China now implementing stricter AI controls, it's perhaps not long until we see something similar in Western countries.

[2]

Chinese social media platforms roll out labels for AI-generated material

Major social media platforms in China have started rolling out labels for AI-generated content to comply with a law that took effect on Monday. Users of the likes of WeChat, Douyin, Weibo and RedNote (aka Xiaohongshu) are now seeing such labels on posts. These denote the use of generative AI in text, images, audio, video and other types of material, according to the South China Morning Post. Identifiers such as watermarks have to be included in metadata too. WeChat has told users they must proactively apply labels to their AI-generated content. They're also prohibited from removing, tampering with or hiding any AI labels that WeChat applies itself, or from using "AI to produce or spread false information, infringing content or any illegal activities." ByteDance's Douyin -- the Chinese version of TikTok -- similarly urged users to apply a label to every post of theirs that includes AI-generated material while noting it's able to use metadata to detect where a piece of content came from. Weibo, meanwhile, has added an option for users to report "unlabelled AI content" when they see something that should have such a label. Four agencies drafted the law, including the main internet regulator, the Cyberspace Administration of China (CAC). The Ministry of Industry and Information Technology, the Ministry of Public Security and the National Radio and Television Administration also helped put together the legislation, which is being enforced to help oversee the tidal wave of genAI content. In April, the CAC launched a three-month campaign to regulate AI apps and services. Mandatory labels for AI content could help folks better understand when they're seeing AI slop and/or misinformation instead of something authentic. Some US companies that provide genAI tools offer similar labels and are starting to bake such identifiers into hardware. Google's Pixel 10 phones are the first phones that implement C2PA (Coalition for Content Provenance and Authenticity) content credentials.

[3]

All AI-generated online content must now be labelled under Chinese law

Yesterday saw the official roll-out of China's new law designed to tackle online misinformation and fraud by mandating social media players to label all AI-generated content. China's major social media platforms have all rushed to comply with a new law, whose roll-out began yesterday (Monday), that requires all AI-generated content online to be labelled, implicitly or explicitly, whether it be text, images, video or audio. The law, which was announced back in March by China's watchdog, the Cyberspace Administration of China (CAC), requires explicit markings on all AI-generated content, and implicit markings such as watermarks on images and videos to be included in the file's metadata. The Chinese government has said the law was designed to protect users online from misinformation, online fraud and copyright infringement. According to the South China Morning Post (SCMP), it reflects a growing scrutiny from Beijing of all things AI. It follows the recently launched Qinglang ('clear and bright') campaign, a CAC initiative which aims to clean up the online world in China and has a major focus on AI. Tencent's WeChat, the huge WhatsApp-style messaging platform, and ByteDance's Douyin - the Chinese equivalent of TikTok - have both rushed to implement new features in order to comply with the new law, posting messages to inform their users of the new rules and features, as did Weibo and RedNote. Mandatory labels would certainly go some way to helping online users identify so-called AI 'slop'. It may also be a sign that western countries are starting to wake up to the need for such identifiers that some US companies, like Google, are beginning to build such labelling into their tech. The recently launched Google Pixel 10 phones, for example, implement C2PA (Coalition for Content Provenance and Authenticity) credentials in the camera application. 
According to Engadget, the feature will roll out to all phones running Google's photo app in coming weeks, and will eventually be baked into Google Search. For now, China has stolen a march on other countries with its wide-ranging AI labelling law. Is it just a matter of time before other countries follow suit?

[4]

China's top social media platforms take steps to comply with new AI content labeling rules - SiliconANGLE

China's top social media platforms, including ByteDance Ltd.'s TikTok clone Douyin and Tencent Holdings' WeChat, rolled out new features today to try and comply with a new law that mandates all artificial intelligence content is clearly labeled as such. The new content labeling rules mandate that all AI-generated content posted on social media is tagged with explicit markings visible to users. It applies to AI-generated text, images, videos and audio, and also requires that implicit identifiers, such as digital watermarks, are embedded in the content's metadata. The law, which was first announced in March by the Cyberspace Administration of China, reflects Beijing's increased scrutiny of AI at a time when concerns are rising about misinformation, online fraud and copyright infringement. According to a report in the South China Morning Post, the law comes amid a broader push by Chinese authorities to increase oversight of AI, as illustrated by the CAC's 2025 Qinglang campaign, which aims to clean up the Chinese-language internet. WeChat, one of the most popular messaging platforms in China, which boasts more than 1.4 billion monthly active users globally, has said that all creators using its platform must proactively declare any AI-generated content they publish. It's also reminding users to "exercise their own judgement" for any content that has not been flagged as AI-generated. In a post on Monday, WeChat said it "strictly prohibits" any attempts to delete, tamper with, forge or conceal AI labels added by its own automated tools, which are designed to pick up any AI-generated content that's not flagged by users who upload it. It also warned users against using AI to spread false information or for any other "illegal activities." 
Meanwhile Douyin, which has around 766 million monthly active users, said in a post on Monday that it's encouraging users to add clear labels to every AI-generated video they upload to its platform. It will also attempt to flag AI-generated content that isn't flagged by users by checking its source via its metadata. Several other popular social media platforms made similar announcements. For instance, the microblogging site Weibo, often known as China's Twitter, said on Friday it's adding tools for users to tag their own content, as well as a button for users to report "unlabeled AI content" posted by others. RedNote, the e-commerce-based social media platform, issued its own statement on Friday, saying that it reserves the right to add explicit and implicit identifiers to any unidentified AI-generated content it detects on its platform. Many of China's best known AI tools are also moving to comply with the new law. For instance, Tencent's AI chatbot Yuanbao said on Sunday it has created a new labeling system for any content it generates on behalf of users, adding explicit and implicit tags to text, videos and images. In its statement, it also advised users that they should not attempt to remove the labels it automatically adds to the content it creates. When the CAC announced the law earlier this year, it said its main objectives were to implement robust AI content monitoring, enforce mandatory labeling and apply penalties to anyone who disseminates misinformation through AI or uses the technology to manipulate public opinion. It also pledged to crack down on deceptive marketing that uses AI, and strengthen online protections for underage users. The European Union is set to implement its own AI content labeling requirements in August 2026, as part of the EU AI Act, which mandates that any content "significantly generated" by AI must be labeled to ensure transparency. The U.S. has not yet mandated AI content labels, but a number of social media platforms, such as Meta Platforms Inc., are implementing their own policies regarding the tagging of AI-generated media.

[5]

AI-Generated Content Gets Labels in China, But Will It Work?

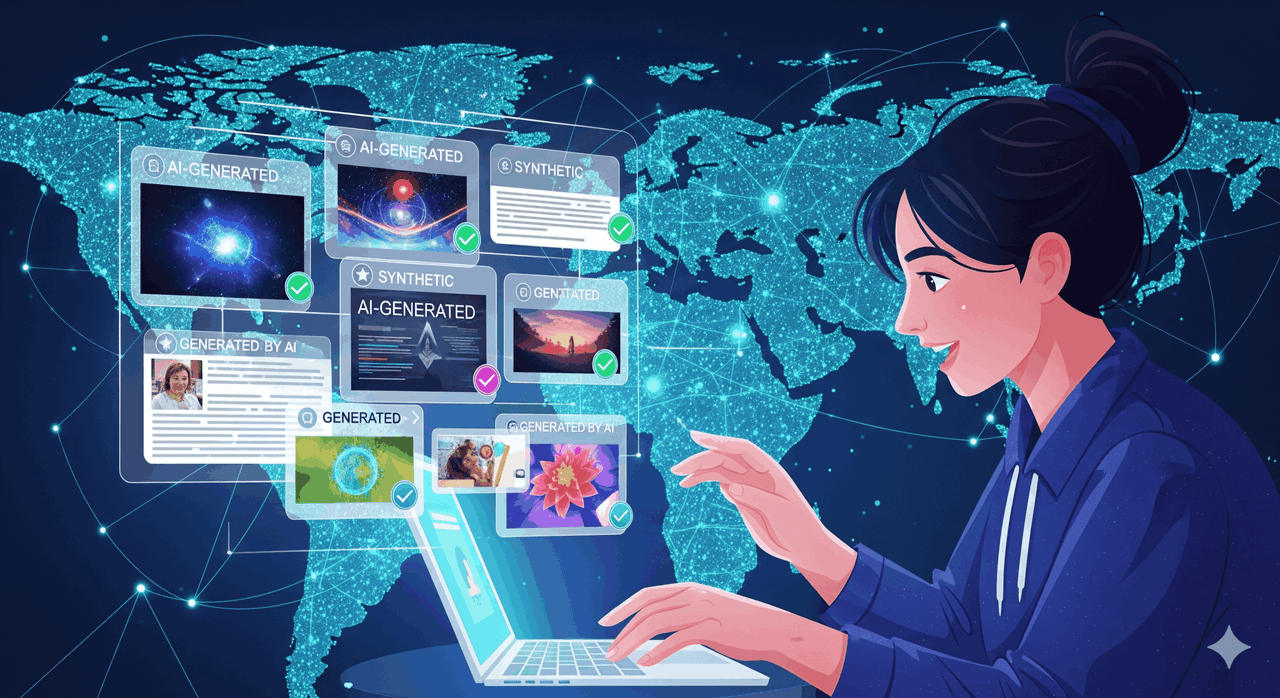

Chinese social media platforms like WeChat, Douyin, and Weibo now feature labels for AI-generated content to comply with the country's new social media law, according to a report by the South China Morning Post. The Chinese internet regulator, the Cyberspace Administration of China (CAC), released the law along with a series of measures to identify AI-generated content on digital platforms in March this year. The law requires AI-generated content to have both explicit and implicit labels. While explicit content labels must be easily visible, implicit ones must be embedded in the content's metadata. The law requires AI companies to ensure that content users generate via their services includes explicit identifiers. AI companies must also add implicit identifiers to the metadata of AI-generated content. Whenever a user uploads such content to a social media platform, the platform must verify this metadata and add prominent warning labels. If a user informs the platform that a piece of content is AI-generated, the platform must add warnings to it, even if the content does not have implicit identifiers. If neither the metadata nor the user indicates that the content is AI-generated but the platform suspects it, the platform must still add prominent warning labels to signal that the content is suspected of being AI-generated. China's measures are not the first time someone has suggested content labelling as a way of curbing AI-generated misinformation. Many tech companies proposed content labels as a solution to election-related deepfakes and misinformation in 2023 and 2024 in the run-up to the Indian and US elections. Digital platforms like Meta's Threads and Instagram also label content with an AI info tag. However, just because the Chinese government and many tech companies believe this is an effective measure to curb misinformation does not necessarily mean it is effective. A sophisticated bad actor could easily break the watermark or label. 
This information could then circulate without the watermark, making people believe it is real. Similarly, including identifiers in metadata may be ineffective in curbing misinformation and deepfakes because someone spreading such information could simply take a screenshot to remove these identifiers. They could then post the screenshot on encrypted platforms, making the misinformation harder to track and counter. Another practical challenge with watermarking or labelling is that it applies universally to any sort of AI-enabled adjustment to content. For example, if you take a picture and use an AI filter to remove acne, an AI label would categorize that at the same level as AI-generated non-consensual intimate imagery of a celebrity. This creates a problematic dynamic where people may unfairly dismiss legitimate AI-assisted content, while AI-generated or fake content that bypasses labelling requirements may gain unwarranted credibility. On the flip side, as the volume of AI-generated content grows, users could also develop label fatigue, similar to the consent fatigue people have reported with data privacy regulations. This fatigue could desensitize users to warnings, leading them to ignore labels even when they signal genuinely concerning content. Consider this scenario: a journalist working in a country under an oppressive regime wants to report on government corruption. To protect themselves and their sources, they use an AI-generated voice and visuals to convey the news. A regime that mandates implicit identifiers within an image's metadata could potentially reveal device identifiers, account information, or even the journalist's location. Metadata-level content identifiers could therefore undermine anonymity online and put many vulnerable groups, such as whistleblowers, journalists, and LGBTQ+ individuals in hostile conditions, at risk. As a result, people may self-censor rather than risk exposure. 
This highlights the fundamental issue with implicit identifiers: while these labels are designed to protect people from misinformation, they can also create a surveillance infrastructure that may be weaponized against the very groups that need protection most.
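The three platform-side cases reported in [5] (metadata identifier present, user declaration, platform suspicion) can be read as a simple decision rule. The following is only an illustrative sketch of that reading, not any platform's actual implementation: the `Upload` fields, the `Label` values and the `decide_label` function are hypothetical names introduced here, and real systems would need actual metadata parsing and detection models behind each flag.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Label(Enum):
    # Hypothetical label names; the law's exact wording is not quoted in the source.
    AI_GENERATED = "AI-generated"
    SUSPECTED_AI = "suspected AI-generated"


@dataclass
class Upload:
    has_implicit_identifier: bool  # metadata carries an AI identifier
    user_declared_ai: bool         # uploader told the platform the content is AI-generated
    platform_suspects_ai: bool     # platform's own checks raised suspicion


def decide_label(upload: Upload) -> Optional[Label]:
    """Return the visible label to attach, following the three cases in [5]."""
    # Cases 1 and 2: the metadata or the user indicates AI generation.
    if upload.has_implicit_identifier or upload.user_declared_ai:
        return Label.AI_GENERATED
    # Case 3: no indication from metadata or user, but the platform suspects it.
    if upload.platform_suspects_ai:
        return Label.SUSPECTED_AI
    # Otherwise no label is attached.
    return None
```

Under this reading, a user declaration alone is enough to trigger a label even when the file carries no implicit identifier, which matches the second case described in [5].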

China has rolled out a new law requiring social media platforms to label AI-generated content, aiming to combat misinformation and fraud. Major platforms like WeChat and Douyin have quickly complied, implementing new features to identify AI-created text, images, audio, and video.

China's New AI Content Labeling Law

In a significant move to regulate artificial intelligence (AI) content, China has implemented a strict new law requiring social media platforms to label AI-generated material. The law, which took effect on Monday, aims to combat misinformation, online fraud, and copyright infringement by mandating clear identification of AI-created content [1].

Source: Tom's Hardware

Key Requirements of the Law

The legislation, drafted by the Cyberspace Administration of China (CAC) and other agencies, requires:

- Explicit markings on all AI-generated content, including text, images, video, and audio.

- Implicit identifiers such as watermarks embedded in the content's metadata.

- Social media platforms to provide options for users to report unlabeled AI content.

- Penalties for using AI to disseminate misinformation or manipulate public opinion [2].

Major Platforms' Compliance

China's leading social media platforms have swiftly adapted to comply with the new regulations:

- WeChat: With over 1.4 billion users, WeChat now requires users to proactively label AI-generated content and prohibits tampering with AI labels [3].

Source: Silicon Republic

- Douyin: The Chinese version of TikTok, with around 766 million monthly active users, has implemented tools for users to label AI-generated videos and uses metadata to detect content sources [4].

- Weibo: The microblogging platform has added options for users to tag their own content and report unlabeled AI material [4].

- RedNote: The e-commerce-based social media platform reserves the right to add identifiers to any unidentified AI-generated content it detects [4].

Global Context and Implications

China's implementation of this law puts it at the forefront of AI content regulation. While other countries are considering similar measures, China's approach is the most comprehensive to date:

- The European Union plans to implement AI content labeling requirements in August 2026 as part of the EU AI Act [4].

- Some U.S. companies, like Google, are beginning to incorporate AI content identifiers into their products [2].

Challenges and Criticisms

Source: MediaNama

Despite the law's intentions, experts have raised concerns about its effectiveness and potential drawbacks:

- Sophisticated actors could potentially break watermarks or remove metadata, circumventing the labeling system [5].

- The universal application of labels to all AI-assisted content may lead to unfair dismissal of legitimate AI-enhanced material [5].

- Users might develop "label fatigue," becoming desensitized to warnings and ignoring important signals [5].

- The labeling system could potentially compromise anonymity, putting vulnerable groups like journalists and whistleblowers at risk [5].

As the world grapples with the rapid advancement of AI technology, China's bold move sets a precedent for content regulation. The effectiveness and impact of this law will likely be closely watched by policymakers and tech companies worldwide as they seek to address the challenges posed by AI-generated content.

Summarized by Navi