Huawei's CloudMatrix 384: A Powerful Challenger to Nvidia's AI Dominance

7 Sources

[1]

Huawei's rack-scale iron vs Nvidia H20, Blackwell: analysis

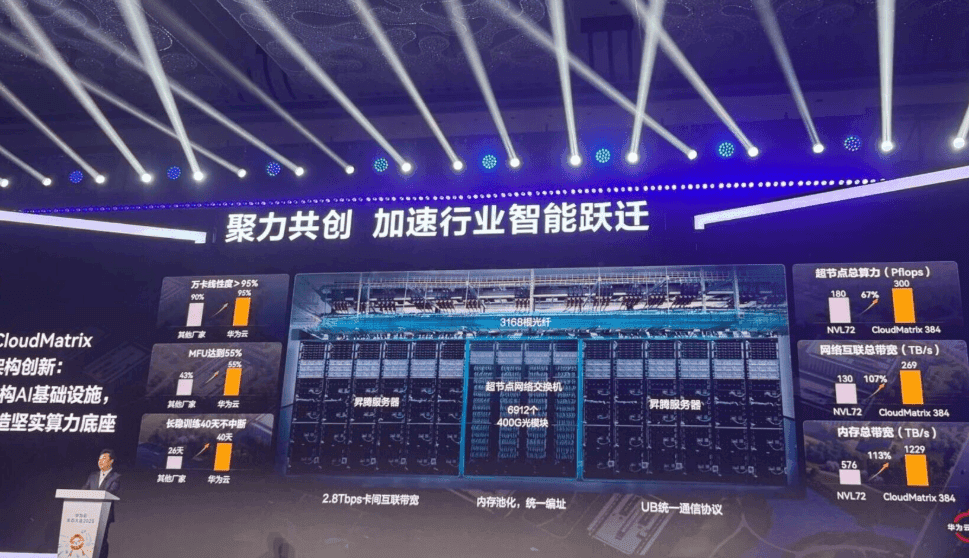

Chinese IT giant's CloudMatrix 384 promises GB200-beating perf, if you ignore power and the price tag

Analysis: Nvidia has the green light to resume shipments of its H20 GPUs to China, but while the chip may be plentiful, bit barn operators in the region now have far more capable alternatives at their disposal. Among the most promising are Huawei's CloudMatrix 384 rack systems, which it teased at the World Artificial Intelligence Conference (WAIC) in Shanghai this week. The system is powered by the Chinese IT goliath's latest Ascend neural processing unit (NPU), the P910C. Assuming you can get your hands on one, the chip promises more than twice the floating point performance of the H20 and more, albeit slower, memory to boot. However, with its CloudMatrix systems, Huawei is clearly aiming a lot higher than Nvidia's sanctions-compliant silicon. Compared to Nvidia's Blackwell-based GB200 NVL72 rack systems, Huawei's biggest iron boasts about 60 percent higher dense 16-bit floating point performance, roughly twice the memory bandwidth, and just over 3.5x the HBM. How does a company effectively blacklisted from Western chip tech accomplish that? Simple: the CloudMatrix 384 is enormous, packing more than 5x the accelerators and taking up 16x the floor space of Nvidia's NVL72. At the heart of the CloudMatrix 384 is Huawei's Ascend P910C NPU. Each of these accelerators comes equipped with a pair of compute dies stitched together using a high-speed chip-to-chip interconnect capable of shuttling data around at 540GB/s, or 270GB/s in each direction. Combined, these dies are capable of churning out 752 teraFLOPS of dense FP16/BF16 performance. Feeding all that compute are eight stacks of high-bandwidth memory totaling 128GB, which supply 1.6TB/s of memory bandwidth to each of the compute dies for a total of 3.2TB/s. If you've been keeping track of AI chip development, you'll know that's not exactly what you'd call competitive in 2025.
For comparison, Nvidia's nearly two-year-old H200 boasts about 83 teraFLOPS higher floating point performance at FP16, 13GB more HBM, and 1.6TB/s more memory bandwidth. Since you can't exactly buy an H200 in China - at least not legally - the better comparison would be to the H20, which Nvidia is set to resume shipping any day now. While the H20 still holds a narrow advantage in memory bandwidth, the Ascend P910C has more HBM (128GB vs 96GB) and more than twice the floating-point performance. The P910C may not support FP8, but Huawei argues that INT8 is nearly as good, at least so far as inference is concerned. Individually, the P910C presents a compelling alternative to Nvidia's China-spec accelerators, even if it's no match for the GPU giant's latest batch of Blackwell chips. Most cutting-edge large language models aren't being trained or run on a single chip, however. There's simply not enough compute, memory, or bandwidth to make that work. Because of this, the chip's individual performance is less important than how efficiently you can scale it up and out. And that's exactly what Huawei has designed its latest NPUs to do. Huawei's Ascend P910C features an NVLink-like scale-up interconnect, called the unified bus (UB), which allows Huawei to stitch multiple accelerators together into one great big one, just like Nvidia is doing with its HGX and NVL72 servers and rack systems. Each P910C accelerator features 14 28GB/s UB links (seven per compute die), which connect to seven UB switch ASICs baked into each node to form a fully non-blocking all-to-all mesh with eight NPUs and four Kunpeng CPUs per node. Unlike Nvidia's H20 or B200 boxes, Huawei's UB switches have a bunch of spare ports which connect up to a second tier of UB spine switches. This is what allows Huawei to scale from eight NPUs per box to 32 a rack or 384 per "supernode" -- hence the name CloudMatrix 384.
From a rack-to-rack standpoint, Nvidia's GB200 NVL72 systems are upwards of 7.5x faster at FP16/BF16, offer 5.6x the memory bandwidth and 3.4x the memory capacity. However, Nvidia only supports a compute domain with up to 72 GPUs, which is less than one-fifth as many as Huawei. That's how the Chinese IT giant can claim greater system level performance than its Western rival on paper. As you might expect, with just 32 NPUs per rack, the full CloudMatrix 384 is a lot bigger than Nvidia's NVL72. Huawei's biggest AI iron spans 16 racks with 12 for compute and four for networking. We'll note that technically, Nvidia's NVLink switch tech can support scale-up networks with up to 576 GPUs but we've yet to see such a system in the wild. For deployments requiring more than 384 NPUs, Huawei's CloudMatrix also sports 400Gbps of scale-out networking per accelerator. This, the company claims, allows for training clusters with up to 165,000 NPUs. At least for inference, these large-scale compute fabrics present a couple of advantages, particularly when it comes to the flurry of massive mixture of experts (MoE) models coming out of China these days. More chips mean operators can better leverage techniques like tensor, data, and/or expert parallelism to boost inference throughput and drive down the overall cost per token. In the case of the CloudMatrix 384, a mixture-of-experts model like DeepSeek R1 could be configured so that each NPU die hosts a single expert, Huawei explained in a paper published last month. To enable this, Huawei has developed an LLM inference serving platform called CloudMatrix-Infer, which disaggregates prefill, decode, and caching. "Unlike existing KV cache-centric architectures, this design enables high-bandwidth, uniform access to cached data via the UB network, thus reducing data locality constraints, simplifying task scheduling, and improving cache efficiency," the researchers wrote.
If any of that sounds familiar, that's because Nvidia announced a similar system for its GPUs back at GTC called Dynamo, which we took a deep look at back in March. Testing on DeepSeek-R1, Huawei showed CloudMatrix-Infer dramatically increased performance, with a single NPU processing 6,688 input tokens a second while generating tokens at a rate of 1,943 tokens a second. That might sound incredible, but it's worth pointing out that aggregate throughput was at a batch size of 96. Individual performance was closer to 50ms per output token or 20 tokens a second. Pushing individual performance to around 66 tokens a second, something that's likely to make a noticeable difference for thinking models like R1, cuts the NPU's overall throughput to 538 tokens per second at a batch size of eight. Under ideal conditions, Huawei says it was able to achieve a prompt-processing efficiency of 4.5 tokens/sec per teraFLOPS, putting it just ahead of Nvidia's H800 at 3.96 tokens/sec per teraFLOPS. Huawei demonstrated similar performance during the decode phase, where the rack system eked out a roughly 10 percent lead over Nvidia's H800. As usual, take these vendor claims with a grain of salt. Inference performance is heavily dependent on your workload. While token/sec per teraFLOPS may offer some insights into the overall efficiency of the system, practically the more important metric is how expensive the tokens generated by the system are. This is usually measured in tokens per dollar per watt. So while the CloudMatrix 384's sheer scale allows it to compete with and even exceed the performance of Nvidia's much more powerful Blackwell systems, that doesn't matter much if it's more expensive to deploy and operate. Official power ratings for Huawei's CloudMatrix systems are hard to pin down, but SemiAnalysis has speculated that the complete system is likely pulling somewhere in the neighborhood of 600 kilowatts all in. That's compared to the roughly 120kW of the GB200 NVL72. 
Assuming those estimates are accurate, that'd not only make Nvidia's NVL72 several times more compute-dense, but more than 3x more power-efficient at 1,500 gigaFLOPS per watt versus Huawei's 460 gigaFLOPS per watt. Access to cheap power may be a major bottleneck in the West, but it is not necessarily such a big deal in China. Over the past few years, Beijing has invested aggressively in its national grid systems, building out large numbers of solar farms and nuclear reactors to offset its reliance on coal-fired power plants. The bigger issue may be infrastructure cost. Huawei's CloudMatrix 384 will reportedly retail for somewhere in the neighborhood of $8.2 million. Nvidia's NVL72 rack systems are estimated to cost around $3.5 million apiece. But if you happen to be a Chinese model dev, Nvidia's NVL racks aren't even a consideration. Thanks to Uncle Sam's export controls on AI accelerators, Huawei doesn't have much if any competition in the rack-scale arena, and its only major bottleneck may be just how many P910Cs China's foundry champion SMIC can pump out. Lawmakers in the US remain convinced that SMIC lacks the ability to manufacture chips of this complexity in high volumes. Then again, not too many years ago, industry experts believed SMIC lacked the tech necessary to manufacture 7nm and smaller process nodes, which turned out not to be the case. It remains to be seen in what volumes Huawei will be able to churn out CloudMatrix systems, but in the meantime, Nvidia CEO Jensen Huang would be happy to pack Chinese datacenters with as many H20s as they can handle. Nvidia has reportedly ordered another 300,000 H20 chips from TSMC to meet strong demand from Chinese customers. The Register reached out to Huawei for comment, but hadn't heard back at the time of publication. ®

[2]

Huawei shows off AI computing system to rival Nvidia's top product

SHANGHAI, July 26 (Reuters) - China's Huawei Technologies showed off an AI computing system on Saturday that one industry expert has said rivals Nvidia's most advanced offering, as the Chinese technology giant seeks to capture market share in the country's growing artificial intelligence sector. The CloudMatrix 384 system made its first public debut at the World Artificial Intelligence Conference (WAIC), a three-day event in Shanghai where companies showcase their latest AI innovations, drawing a large crowd to the company's booth. The system has drawn close attention from the global AI community since Huawei (HWT.UL) first announced it in April. Industry analysts view it as a direct competitor to Nvidia's (NVDA.O) GB200 NVL72, the U.S. chipmaker's most advanced system-level product currently available in the market. Dylan Patel, founder of semiconductor research group SemiAnalysis, said in an April article that Huawei now had AI system capabilities that could beat Nvidia. Huawei staff at its WAIC booth declined to comment when asked to introduce the CloudMatrix 384 system. A spokesperson for Huawei did not respond to questions. Huawei has become widely regarded as China's most promising domestic supplier of chips essential for AI development, even though the company faces U.S. export restrictions. Nvidia CEO Jensen Huang told Bloomberg in May that Huawei had been "moving quite fast" and named the CloudMatrix as an example. The CloudMatrix 384 incorporates 384 of Huawei's latest 910C chips and, on some metrics, outperforms Nvidia's GB200 NVL72, which uses 72 B200 chips, according to SemiAnalysis. The performance stems from Huawei's system design capabilities, which compensate for weaker individual chip performance through the use of more chips and system-level innovations, SemiAnalysis said.
Huawei says the system uses "supernode" architecture that allows the chips to interconnect at super-high speeds and in June, Huawei Cloud CEO Zhang Pingan said the CloudMatrix 384 system was operational on Huawei's cloud platform. Reporting by Brenda Goh and Liam Mo; Editing by Hugh Lawson

[3]

Huawei's CloudMatrix 384 could outpace Nvidia in the AI race, study suggests

Editor's take: The Trump administration attempted to curb China's ability to advance its computing capabilities through tariffs and other trade restrictions. However, this unprecedented trade war may have instead pushed Beijing to accelerate its efforts in developing powerful new AI systems. As newly appointed US tech czar David Sacks predicted just a month ago, Trump's tariffs appear to be backfiring in spectacular fashion. Chinese tech giant Huawei is reportedly developing a powerful AI system that can already compete with Nvidia's most advanced infrastructure in the race for the world's fastest AI platforms. Huawei publicly unveiled its new system, the CloudMatrix 384, at the recent World Artificial Intelligence Conference in Shanghai. The three-day event was packed with companies showcasing their latest AI innovations, and according to Reuters, Huawei's booth was among the most crowded and talked-about at the show. Many attendees were eager to learn more about the CloudMatrix 384, but Huawei officials declined to provide further details. While the system may have been on display primarily to generate buzz, enough information has emerged to give us a clearer sense of where Huawei - and China's broader AI industry - might be heading. According to a recent study by SemiAnalysis, Huawei's CloudMatrix 384 is the company's answer to Nvidia's GB200 NVL72 - a flagship AI supersystem designed to run trillion-parameter models in real time. Nvidia's solution includes 36 Grace CPUs and 72 Blackwell GPUs, working together in a unified rack to function as a single massive GPU that dramatically accelerates large language model inference. While Huawei lags behind Nvidia and other Western firms in advanced silicon design, its engineers chose to compensate with scale and innovation rather than raw speed. The CloudMatrix 384 incorporates 384 Ascend 910C chips, interconnected in an all-to-all topology to maximize performance. 
The Ascend 910C, designed by Huawei's own fabless semiconductor arm HiSilicon, effectively combines two Ascend 910B processors to deliver performance on par with Nvidia's H100 GPU. A fully configured CloudMatrix 384 can reach 300 petaFLOPs of dense BF16 compute, nearly double the compute capacity of Nvidia's GB200 NVL72. It also boasts 3.6× greater total memory capacity and 2.1× more memory bandwidth. SemiAnalysis noted in April that with further yield improvements, Huawei may soon outpace Nvidia - and by extension, the United States - in the AI race. The major caveat? Power consumption. The CloudMatrix 384 requires more than four times the energy needed to run a GB200 NVL72 at full capacity. However, unlike many Western countries, China has been aggressively expanding its power generation infrastructure using coal, solar, hydro, wind, and other sources.

[4]

Huawei launches CloudMatrix 384 server as an alternative to Nvidia's AI infrastructure stack - SiliconANGLE

China's Huawei Technologies Co. Ltd. threw down the gauntlet to Nvidia Corp. Saturday when it revealed its most powerful artificial intelligence server system to date. The company unveiled the CloudMatrix 384 system at the World Artificial Intelligence Conference in Shanghai, where dozens of local companies showed off their latest AI hardware. Reuters reported that Huawei is positioning the new CloudMatrix system as a direct rival to Nvidia's premium server product, the GB200 NVL72, which is subject to export controls and cannot be sold in China. CloudMatrix 384 is powered by 384 of Huawei's most sophisticated AI chips, the Ascend 910C neural processing unit. That compares to just 72 B200 GPUs found in Nvidia's GB200 NVL72 system. The design of the CloudMatrix system illustrates Huawei's strategy for taking on Nvidia in the AI industry. While Nvidia's GPUs are more powerful on an individual level, Huawei believes it can overcome this deficiency by stacking more of its chips into a single cluster, allowing them to work together to overcome their lower performance. Huawei calls the idea a "supernode" chip architecture, and says it has created high-speed network interconnection technology to enable the chips to communicate with each other. Huawei makes some stunning claims, saying that the CloudMatrix 384 delivers around 300 petaflops of compute performance, surpassing the 180 petaflops limit of Nvidia's GB200 NVL72 system. It also beats Nvidia's product in terms of memory and bandwidth, although the trade-off is that it uses significantly more energy to do so. According to Huawei, the CloudMatrix 384 draws around 559 kilowatts, which means it's almost four times as power-hungry as Nvidia's system.
Nonetheless, it's clear that Huawei has decided that sheer computing power is what counts most of all, and with the launch of the CloudMatrix 384, it can now offer an alternative to Chinese firms that have been blocked from accessing Nvidia's most advanced GPUs due to U.S. sanctions. Huawei first revealed the CloudMatrix 384 system in a low-key announcement in April, but it attracted little fanfare then as it wasn't yet available to customers. However, some analysts did take notice of it, with Dylan Patel from SemiAnalysis saying in a blog post that Huawei "now has AI system capabilities that could beat Nvidia". Praising Huawei's networked clustering capabilities, Dylan said the CloudMatrix 384 surpasses the performance of Nvidia's flagship server rack on a number of key metrics, and said it will become the obvious choice of infrastructure for Chinese AI companies. Nvidia Chief Executive Jensen Huang said something similar in May, telling Bloomberg that Huawei has been "moving quite fast", and highlighting the CloudMatrix 384 system as an example of that. Around the same time as the CloudMatrix 384 was announced, Huawei also unveiled an even more advanced processor called the Ascend 920, although that model has yet to go on sale. Huang's comments came just one month after U.S. President Donald Trump ramped up export controls on Nvidia's chip exports, banning the company from shipping its H20 GPUs to China - a lower powered version of the H200 GPU that had been specifically designed to meet earlier prohibitions on exports to China. Trump's decision effectively ended all Nvidia chip sales to China, but in a stunning turnaround, the White House reversed its course on July 15, saying it would grant special licenses to Chinese firms that want to import the H20, reopening the market for Nvidia. 
At the time, the White House's AI Czar David Sacks said the U-turn was justified, because it will help Nvidia to prevent Huawei from cornering the domestic market and generating more revenue that would be thrown at advanced chip research. However, that narrative does not tell the full story, for Commerce Secretary Howard Lutnick told Reuters that the decision to grant export licenses was made in connection with a broader trade deal that enables the U.S. to import vital rare earth minerals from China. In addition, there are question marks about the effectiveness of the U.S. sanctions on China. Last week, Nvidia told CNBC that building data centers with smuggled chips would be a "losing proposition" from a technical and financial perspective, in response to reports that Chinese AI companies are acquiring them from the black market. Earlier, a Financial Times report revealed that over $1 billion worth of advanced Nvidia chips had been smuggled into China via unofficial channels since May, including the B200 GPUs.

[5]

Huawei shows off AI computing system to rival Nvidia's top product - The Economic Times

China's Huawei Technologies showed off an AI computing system on Saturday that one industry expert has said rivals Nvidia's most advanced offering, as the Chinese technology giant seeks to capture market share in the country's growing artificial intelligence sector. The CloudMatrix 384 system made its first public debut at the World Artificial Intelligence Conference (WAIC), a three-day event in Shanghai where companies showcase their latest AI innovations, drawing a large crowd to the company's booth. The system has drawn close attention from the global AI community since Huawei first announced it in April. Industry analysts view it as a direct competitor to Nvidia's GB200 NVL72, the U.S. chipmaker's most advanced system-level product currently available in the market. Dylan Patel, founder of semiconductor research group SemiAnalysis, said in an April article that Huawei now had AI system capabilities that could beat Nvidia. Huawei staff at its WAIC booth declined to comment when asked to introduce the CloudMatrix 384 system. A spokesperson for Huawei did not respond to questions. Huawei has become widely regarded as China's most promising domestic supplier of chips essential for AI development, even though the company faces U.S. export restrictions. Nvidia CEO Jensen Huang told Bloomberg in May that Huawei had been "moving quite fast" and named the CloudMatrix as an example. The CloudMatrix 384 incorporates 384 of Huawei's latest 910C chips and, on some metrics, outperforms Nvidia's GB200 NVL72, which uses 72 B200 chips, according to SemiAnalysis.
The performance stems from Huawei's system design capabilities, which compensate for weaker individual chip performance through the use of more chips and system-level innovations, SemiAnalysis said. Huawei says the system uses "supernode" architecture that allows the chips to interconnect at super-high speeds and in June, Huawei Cloud CEO Zhang Pingan said the CloudMatrix 384 system was operational on Huawei's cloud platform.

[6]

Huawei Showcases Its NVIDIA GB200-Equivalent CloudMatrix AI Cluster for the First Time to The Public, Flaunting China's Cutting-Edge AI Hardware Breakthrough

Huawei has been making massive strides in the AI segment, and the company has now showcased its CloudMatrix rack-scale cluster in public for the first time. Among Chinese AI firms competing against NVIDIA's options, Huawei is undoubtedly at the forefront with its hardware, not just in terms of performance but also availability. The company has been best known for its AI accelerators in the "Ascend" lineup; however, Huawei recently expanded to rack-scale solutions, and they have made a massive impact. The Chinese firm managed to compete with NVIDIA's top-end offering, to the point where even Jensen started to worry about Huawei's advancements. Although the CloudMatrix 384 has been discussed internally, Huawei had not publicly showcased it until today. At the WAIC conference held in the Shanghai World Expo Center (via MyDrivers), Huawei showcased the CloudMatrix cluster for the first time, naming it the Atlas 900 A3 Superpod. In terms of the specifications, the CloudMatrix 384 (CM384) AI cluster features 384 Ascend 910C chips connected in an "all-to-all topology" configuration. Interestingly, Huawei has managed to cover the architectural flaws by housing five times as many chips as NVIDIA's GB200 NVL72. A CloudMatrix cluster is said to deliver 300 PetaFLOPS of BF16 computing, almost two times higher than GB200 NVL72. However, the drawback here is the power the CloudMatrix 384 is expected to consume, which is said to be 3.9x the power of a GB200 NVL72, resulting in somewhat "awful" perf/watt figures across AI workloads. The price of a single CloudMatrix 384 AI cluster is claimed to be $8 million, which is almost three times higher than NVIDIA's GB200 NVL72 configuration, so the key motive behind Huawei's product is not to provide cost-effective performance, but rather a product that is made with in-house resources and is capable enough to compete with Western alternatives.
This particular solution is verified by NVIDIA CEO Jensen himself, who claims that Huawei has managed to compete with Grace Blackwell systems.

[7]

Huawei shows off AI computing system to rival Nvidia's top product

SHANGHAI (Reuters) - China's Huawei Technologies showed off an AI computing system on Saturday that one industry expert has said rivals Nvidia's most advanced offering, as the Chinese technology giant seeks to capture market share in the country's growing artificial intelligence sector. The CloudMatrix 384 system made its first public debut at the World Artificial Intelligence Conference (WAIC), a three-day event in Shanghai where companies showcase their latest AI innovations, drawing a large crowd to the company's booth. The system has drawn close attention from the global AI community since Huawei first announced it in April. Industry analysts view it as a direct competitor to Nvidia's GB200 NVL72, the U.S. chipmaker's most advanced system-level product currently available in the market. Dylan Patel, founder of semiconductor research group SemiAnalysis, said in an April article that Huawei now had AI system capabilities that could beat Nvidia. Huawei staff at its WAIC booth declined to comment when asked to introduce the CloudMatrix 384 system. A spokesperson for Huawei did not respond to questions. Huawei has become widely regarded as China's most promising domestic supplier of chips essential for AI development, even though the company faces U.S. export restrictions. Nvidia CEO Jensen Huang told Bloomberg in May that Huawei had been "moving quite fast" and named the CloudMatrix as an example. The CloudMatrix 384 incorporates 384 of Huawei's latest 910C chips and, on some metrics, outperforms Nvidia's GB200 NVL72, which uses 72 B200 chips, according to SemiAnalysis. The performance stems from Huawei's system design capabilities, which compensate for weaker individual chip performance through the use of more chips and system-level innovations, SemiAnalysis said.
Huawei says the system uses "supernode" architecture that allows the chips to interconnect at super-high speeds and in June, Huawei Cloud CEO Zhang Pingan said the CloudMatrix 384 system was operational on Huawei's cloud platform. (Reporting by Brenda Goh and Liam Mo; Editing by Hugh Lawson)

Huawei unveils its CloudMatrix 384 AI computing system, potentially outperforming Nvidia's top offerings and reshaping the global AI hardware landscape.

Huawei's CloudMatrix 384: A New Contender in AI Computing

Huawei Technologies has unveiled its latest AI computing system, the CloudMatrix 384, at the World Artificial Intelligence Conference (WAIC) in Shanghai. This powerful system is positioned as a direct competitor to Nvidia's most advanced offering, the GB200 NVL72, and represents a significant leap in China's AI hardware capabilities [1][2].

System Specifications and Performance

The CloudMatrix 384 is powered by 384 of Huawei's Ascend 910C neural processing units (NPUs), each featuring two compute dies interconnected with high-speed chip-to-chip technology [1]. Key specifications include:

- 752 teraFLOPS of dense FP16/BF16 performance per NPU

- 128GB of high-bandwidth memory per NPU

- 3.2TB/s of memory bandwidth per NPU

- Total system performance of approximately 300 petaFLOPS [4]
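As a rough cross-check of how the per-NPU figures relate to the system total, the sketch below scales them linearly across 384 NPUs. It assumes perfect linear scaling with no interconnect overhead, which is an illustrative simplification and not a claim from the sources; the 180 petaFLOPS figure for the GB200 NVL72 is the one cited by source [4], and all variable and function names are illustrative.

```python
# Per-NPU figures quoted above for the Ascend 910C.
NPUS = 384
FP16_TFLOPS_PER_NPU = 752   # dense FP16/BF16 teraFLOPS
HBM_GB_PER_NPU = 128        # gigabytes of HBM
HBM_TBPS_PER_NPU = 3.2      # terabytes per second of memory bandwidth

# Dense 16-bit figure for the GB200 NVL72 as cited by the sources (petaFLOPS).
NVL72_PFLOPS = 180


def system_totals(npus=NPUS):
    """Scale per-NPU specs linearly to a full system (assumes no scaling losses)."""
    return {
        "pflops": npus * FP16_TFLOPS_PER_NPU / 1000,   # teraFLOPS -> petaFLOPS
        "hbm_tb": npus * HBM_GB_PER_NPU / 1000,        # GB -> TB (decimal)
        "bandwidth_tbps": npus * HBM_TBPS_PER_NPU,
    }


totals = system_totals()
compute_ratio = totals["pflops"] / NVL72_PFLOPS

print(f"Aggregate compute:   {totals['pflops']:.1f} petaFLOPS")
print(f"Aggregate HBM:       {totals['hbm_tb']:.1f} TB")
print(f"Aggregate bandwidth: {totals['bandwidth_tbps']:.1f} TB/s")
print(f"Compute vs NVL72:    {compute_ratio:.2f}x")
```

Under these assumptions the aggregate comes to roughly 289 petaFLOPS, in line with the "approximately 300" figure, and about 1.6 times the NVL72 number.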

Compared to Nvidia's GB200 NVL72, the CloudMatrix 384 boasts about 60% higher dense 16-bit floating point performance, roughly twice the memory bandwidth, and over three times the high-bandwidth memory capacity [1][3].

Architectural Innovations

Huawei has implemented several architectural innovations to achieve this performance:

- "Supernode" architecture allowing high-speed chip interconnection [2][5]

- Unified Bus (UB) interconnect with 14 links of 28GB/s each per NPU [1]

- Two-tier switching system enabling scaling up to 384 NPUs per "supernode" [1]

- 400Gbps scale-out networking per accelerator for larger deployments [1]
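The scaling hierarchy behind these points can be tallied with simple arithmetic. The sketch below uses the node and rack counts reported in source [1] (eight NPUs and four Kunpeng CPUs per node, 32 NPUs per rack, 12 compute racks plus four networking racks); the variable names are illustrative, and the bandwidth figure is just the sum of link rates, not a measured throughput.

```python
# Topology figures as reported in source [1].
NPUS_PER_NODE = 8
CPUS_PER_NODE = 4
NPUS_PER_RACK = 32
COMPUTE_RACKS = 12
NETWORK_RACKS = 4

UB_LINKS_PER_NPU = 14
UB_GB_PER_S_PER_LINK = 28        # gigabytes per second per UB link
SCALE_OUT_GBIT_PER_S = 400       # gigabits per second per accelerator

nodes_per_rack = NPUS_PER_RACK // NPUS_PER_NODE
total_nodes = nodes_per_rack * COMPUTE_RACKS
total_npus = total_nodes * NPUS_PER_NODE
total_cpus = total_nodes * CPUS_PER_NODE
total_racks = COMPUTE_RACKS + NETWORK_RACKS

# Sum of link rates per NPU; real-world throughput will be lower.
ub_gb_per_s = UB_LINKS_PER_NPU * UB_GB_PER_S_PER_LINK
# Convert the scale-out rate from gigabits to gigabytes per second.
scale_out_gb_per_s = SCALE_OUT_GBIT_PER_S / 8

print(f"{total_nodes} nodes, {total_npus} NPUs, {total_cpus} CPUs in {total_racks} racks")
print(f"Scale-up (UB) per NPU: {ub_gb_per_s} GB/s")
print(f"Scale-out per NPU:     {scale_out_gb_per_s:.0f} GB/s")
```

The gap between the two per-NPU rates (392GB/s versus 50GB/s) illustrates why the 384-NPU scale-up domain matters more for tightly coupled workloads than the scale-out network does.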

Comparison with Nvidia

While Nvidia's individual chips are more powerful, Huawei compensates by using more chips and implementing system-level innovations [2][4]. The CloudMatrix 384 outperforms Nvidia's GB200 NVL72 in several metrics:

- Higher total compute performance (300 petaFLOPS vs 180 petaFLOPS)

- Greater memory capacity (3.6x more)

- Higher memory bandwidth (2.1x more) [4]

However, this comes at the cost of significantly higher power consumption, with the CloudMatrix 384 drawing about four times as much power as Nvidia's system [3][4].

Market Implications

Huawei's advancement in AI computing capabilities has significant implications for the global AI hardware market:

- Providing an alternative for Chinese firms blocked from accessing Nvidia's most advanced GPUs due to U.S. sanctions [4]

- Potentially reshaping the competitive landscape in AI infrastructure [2][3]

- Accelerating China's efforts in developing powerful AI systems [3]

Industry Reactions

The AI community has shown great interest in the CloudMatrix 384. Dylan Patel, founder of SemiAnalysis, stated that Huawei "now has AI system capabilities that could beat Nvidia" [2][5]. Even Nvidia CEO Jensen Huang acknowledged Huawei's rapid progress, specifically mentioning the CloudMatrix as an example [2][5].

Challenges and Future Prospects

Despite its impressive performance, the CloudMatrix 384 faces challenges:

- High power consumption may limit its adoption in some scenarios [3][4]

- Ongoing U.S. export restrictions could impact Huawei's access to certain technologies [2][5]
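To put the power and cost caveats in numbers, the sketch below computes performance per watt and price per petaFLOPS from the estimates quoted in source [1]: roughly 600kW and $8.2 million for the CloudMatrix 384, and roughly 120kW and $3.5 million for the GB200 NVL72. These inputs are third-party estimates rather than official figures, the Huawei compute total is the linear 384 x 752 teraFLOPS scaling rather than a measured value, and the function and variable names are illustrative.

```python
def gflops_per_watt(pflops, kilowatts):
    """Convert system petaFLOPS and kilowatts into gigaFLOPS per watt."""
    return (pflops * 1e6) / (kilowatts * 1e3)


def dollars_per_pflops(price_usd, pflops):
    """Estimated purchase price per petaFLOPS of dense 16-bit compute."""
    return price_usd / pflops


# Estimates quoted in source [1]; none of these are official vendor ratings.
CM384 = {"pflops": 384 * 752 / 1000, "kw": 600, "price": 8.2e6}
NVL72 = {"pflops": 180, "kw": 120, "price": 3.5e6}

cm384_eff = gflops_per_watt(CM384["pflops"], CM384["kw"])
nvl72_eff = gflops_per_watt(NVL72["pflops"], NVL72["kw"])
efficiency_ratio = nvl72_eff / cm384_eff

cm384_cost = dollars_per_pflops(CM384["price"], CM384["pflops"])
nvl72_cost = dollars_per_pflops(NVL72["price"], NVL72["pflops"])

print(f"CloudMatrix 384: {cm384_eff:.0f} GFLOPS/W, ${cm384_cost:,.0f} per petaFLOPS")
print(f"GB200 NVL72:     {nvl72_eff:.0f} GFLOPS/W, ${nvl72_cost:,.0f} per petaFLOPS")
print(f"NVL72 efficiency advantage: {efficiency_ratio:.1f}x")
```

With these inputs the NVL72 comes out a little over three times as power-efficient, consistent with the "more than 3x" claim in source [1]. The exact Huawei figure shifts with the assumed power draw and compute total, so it will not match every published number.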

Nevertheless, with the CloudMatrix 384 now operational on Huawei's cloud platform, the company is poised to play a significant role in the evolving AI hardware landscape [5].

As the AI race intensifies, Huawei's CloudMatrix 384 represents a bold step forward in China's quest for technological self-sufficiency and global AI leadership.

Summarized by Navi
