Microsoft Study Reveals Humans Struggle to Distinguish AI-Generated Images from Real Photos

5 Sources

[1]

Think you can tell a fake image from a real one? Microsoft's quiz will test you

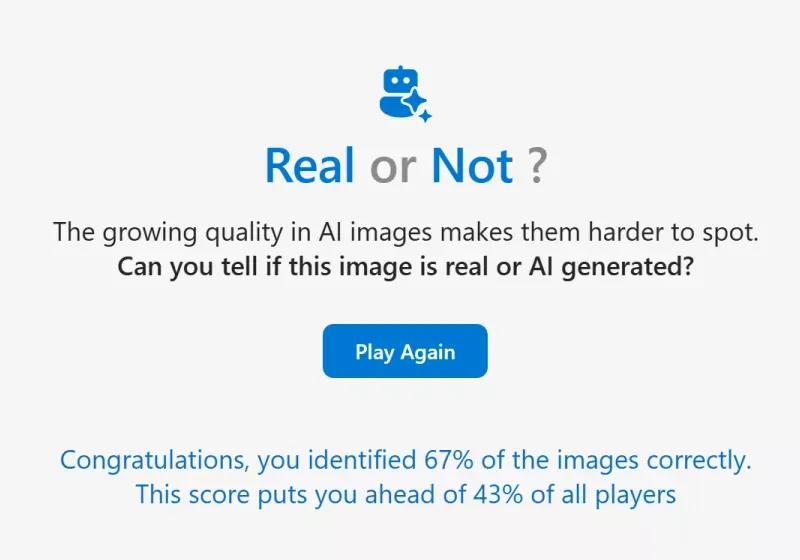

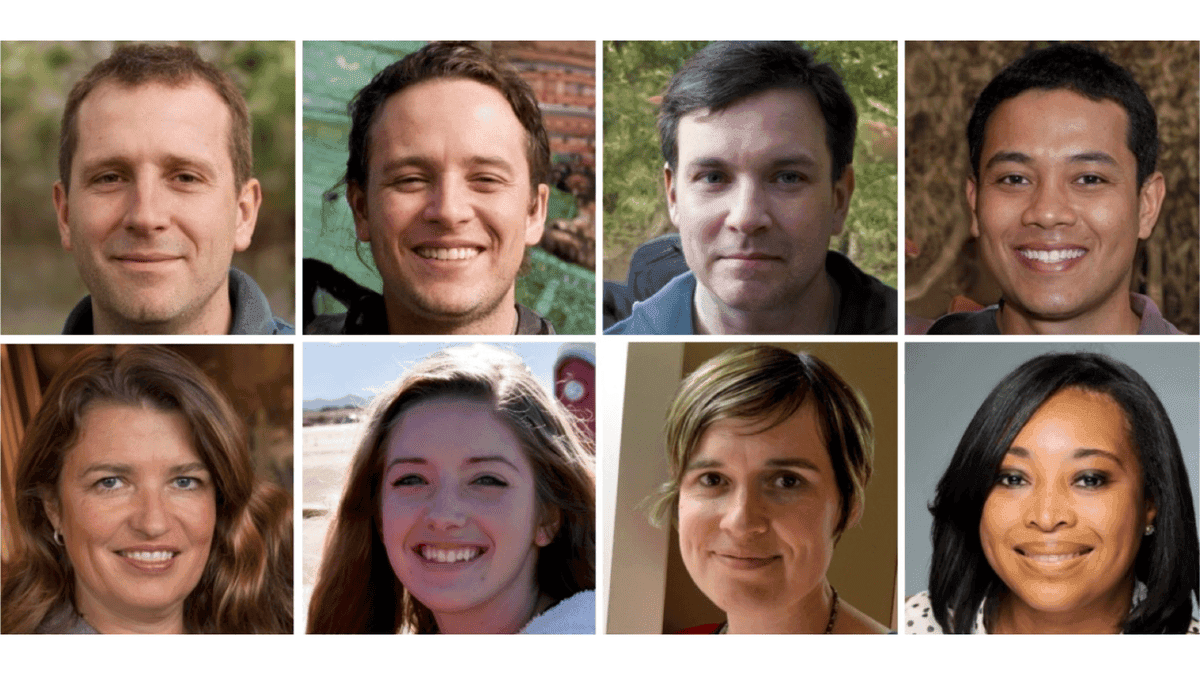

Through the looking glass: When AI image generators first emerged, misinformation immediately became a major concern. Although repeated exposure to AI-generated imagery can build some resistance, a recent Microsoft study suggests that certain types of real and fake images can still deceive almost anyone. The study found that humans can accurately distinguish real photos from AI-generated ones about 63% of the time. In contrast, Microsoft's in-development AI detection tool reportedly achieves a 95% success rate. To explore this further, Microsoft created an online quiz (realornotquiz.com) featuring 15 randomly selected images from stock photo libraries and various AI models. The study analyzed 287,000 images viewed by 12,500 participants from around the world. Participants were most successful at identifying AI-generated images of people, with a 65% accuracy rate. However, the most convincing fake images were GAN deepfakes that showed only facial profiles or used inpainting to insert AI-generated elements into real photos. Despite being one of the oldest forms of AI-generated imagery, GAN deepfakes (Generative Adversarial Networks) still fooled about 55% of viewers. This is partly because they contain fewer of the details that image generators typically struggle to replicate. Ironically, their resemblance to low-quality photographs often makes them more believable. Researchers believe that the increasing popularity of image generators has made viewers more familiar with the overly smooth aesthetic these tools often produce. Prompting the AI to mimic authentic photography can help reduce this effect. Some users found that including generic image file names in prompts produced more realistic results. Even so, most of these images still resemble polished, studio-quality photos, which can seem out of place in casual or candid contexts. 
In contrast, a few examples from Microsoft's study show that Flux Pro can replicate amateur photography, producing images that look like they were taken with a typical smartphone camera. Participants were slightly less successful at identifying AI-generated images of natural or urban landscapes that did not include people. For instance, the two fake images with the lowest identification rates (21% and 23%) were generated using prompts that incorporated real photographs to guide the composition. The most convincing AI images also maintained levels of noise, brightness, and entropy similar to those found in real photos. Surprisingly, the three images with the lowest identification rates overall (12%, 14%, and 18%) were actually real photographs that participants mistakenly identified as fake. All three showed the US military in unusual settings with uncommon lighting, colors, and shutter speeds. Microsoft notes that understanding which prompts are most likely to fool viewers could make future misinformation even more persuasive. The company highlights the study as a reminder of the importance of clear labeling for AI-generated images.

[2]

Humans are really bad at detecting fake AI images, study shows

If you're constantly fooled by AI-generated images, you aren't alone. Turns out, most people are. According to a new research paper by Microsoft's AI for Good Lab, humans are surprisingly (or maybe not-so-surprisingly) bad at detecting and recognizing AI-generated images. The study collected data from an online "Real or Not Quiz" game, involving over 12,500 global participants who analyzed approximately 287,000 total images (a randomized mixture of real and AI-generated) to determine which ones were real and which ones were fake. The results showed that participants had an overall success rate around 62 percent -- only slightly better than flipping a coin. The study also showed that it's easier to identify fake images of faces than landscapes, but even then the difference is only by a few percent. In light of this study, Microsoft is advocating for clearer labeling of AI-generated images, but critics point out that it's easy to get around this by cropping the images in question.

[3]

A Little Over 50% of People Can Recognize AI Images from Real Photos

Just yesterday, PetaPixel reported that AI-generated models have started appearing in Vogue magazine and now a new Microsoft study suggests it is unlikely that readers realized that the photorealistic images were synthetic. A recent study from Microsoft's AI for Good Lab highlights the challenges people face in recognizing AI-generated images. According to the research, individuals' ability to detect these images was "only slightly higher than flipping a coin." Participants in an online quiz titled 'Real or Not,' developed by Microsoft and used as the foundation for the study, correctly identified images only 62% of the time. "Generative AI is evolving fast and new or updated generators are unveiled frequently, showing even more realistic output," the study's authors write. "It is fair to assume that our results likely overestimate nowadays people's ability to distinguish AI-generated images from real ones." The study involved over 12,500 participants who evaluated around 287,000 images, a mix of real and AI-generated photos. The results indicate that people are marginally better at identifying human faces (65% success rate) than they are at distinguishing real landscapes (59% success rate). The researchers attributed this difference to humans' innate ability to recognize faces, supported by other research such as a study from the University of Surrey, which notes that our brains are "drawn to and spot faces everywhere." Interestingly, participants performed similarly when identifying all images (62%) and when asked to focus exclusively on AI-generated images (63%). Images produced by various leading generative models were included in the quiz, with those made using Generative Adversarial Networks (GANs) yielding the highest error rate -- participants failed to correctly identify them 55% of the time. However, the study clarified that the realism of AI output isn't solely dictated by the model type. 
"We should not assume that a model architecture is responsible for the aesthetic of its output, the training data is," the researchers write. "The model architecture only determines how successful a model is at mimicking a training set." Some of the most difficult images to judge were real photos that contained visual elements that appeared unnatural, such as unusual lighting. Although these characteristics may seem artificial at first glance, they were the result of authentic conditions -- not digital manipulation. The research team is attempting to develop its own AI detection tool, which reportedly achieves over 95% accuracy in identifying both real and synthetic images. However, such tools have failed to materialize in the past.

[4]

Microsoft study suggests folks can tell the difference between real and AI-generated images only about 62% of the time -- but can you do any better?

Unless you take curation of your social feeds very seriously, the tidal wave of AI slop images and videos has felt nigh inescapable. More worrying still is how often this crashing tide serves to highlight which of our loved ones struggle to discern AI-generated content from all the slop made by human hands. Even worse still, one can often find oneself fooled by an image spat out by a generative model. Sound familiar? Turns out it's not just you. According to a new study out of Microsoft's AI for Good research lab, folks can accurately discern between real and AI-generated images only 62% of the time. I suppose that's still better than simply flipping a coin, but that's still pretty bleak. This figure is based on "287,000 image evaluations" from 12,500 participants tasked with labelling a selection of images. Participants assessed approximately 22 pictures each, selected from a sizeable bank that included both a "collection of 350 copyright free 'real' images" plus "700 diffusion-based images using DallE-3, Stable diffusion-3, Stable diffusion XL, Stable diffusion XL inpaintings, Amazon Titan v1 and Midjourney v6." If you fancy a humbling experience, you can give the same 'real or not real' quiz a go yourself. The Microsoft research lab then exposed their own in-development AI detector to the same bank of images, sharing that this tool was able to accurately identify artificially generated images at least 95% of the time. I understand that Microsoft is a massive, many-limbed company with interests all over the place, but it does feel weird for their research lab to highlight a problem that other parts of the company have arguably fuelled -- let alone for some part of Microsoft to be acting like, 'but don't worry, we'll soon have a tool for that.'
To their credit, the research team does also call for "more transparency when it comes to generative AI," arguing that additional measures like "content credentials and watermarks are necessary to inform the public about the nature of the media they consume." However, it's easy enough to crop around a watermark, and I've no doubt those sufficiently motivated to do so will find a way around the similarly proposed measures of digital signatures and content credentials. A multi-pronged approach to AI-detection definitely doesn't hurt -- especially as it's clear we need to graduate beyond simply looking at a suspicious post and going 'it's giving AI vibes.' But while we're developing such an AI-detection tool kit, maybe we can also address AI's bottomless appetite for power, as well as its resulting greenhouse gas emissions and impact on local communities. Just a thought!

[5]

Can you tell the difference between a real photo and an AI generated image?

With AI image generation easily accessible and popular models from Dall-E, Stable Diffusion, and Midjourney delivering realistic results, you're probably not surprised that some people are unable to tell the difference between a real photo and an AI-generated image. A new study from Microsoft, which included 287,000 "image evaluations" with over 12,000 participants, shows that people can only accurately tell the difference between real and AI-generated images 62% of the time. An alarming figure, and one that showcases how far AI image generation has come, and the need for AI-detection tools so people are aware that what they're looking at is, well, "fake." If you're thinking that you'd be in the top percentile that can tell an AI photo from the real thing, you can take the quiz used in the research paper to find out. Simply head here and take the 'Real or Not?' quiz to see how you fare. Prepare to be humbled, as it can be a lot harder than it looks. "Participants were most accurate with human portraits but struggled significantly with natural and urban landscapes," Microsoft explains, highlighting that realistic humans are still an area where AI can improve. This quiz and research paper only cover AI-generated images that are meant to look like photographs, and do not cover artwork or other digital media that mimics the style of human artists. That's another area where it's easy to get "fooled" into thinking that what you're looking at is a genuine hand-drawn or digitally painted piece of art versus something generated by a powerful and complex AI model. Either way, it's another reminder that robust tools and checks are needed to ensure that AI-generated content can always be verified as such. "These results highlight the inherent challenge humans face in distinguishing AI-generated visual content, particularly images without obvious artifacts or stylistic cues," Microsoft continues.
"This study stresses the need for transparency tools, such as watermarks and robust AI detection tools, to mitigate the risks of misinformation arising from AI-generated content." And just in case you're wondering, yes, all images in this article were AI-generated - using Midjourney v7.

A recent Microsoft study shows that people can only accurately identify AI-generated images 62% of the time, highlighting the growing challenge of distinguishing synthetic media from real photographs.

Microsoft's Revealing Study on AI Image Detection

A recent study conducted by Microsoft's AI for Good Lab has shed light on the growing challenge of distinguishing AI-generated images from real photographs. The research, which involved over 12,500 participants evaluating approximately 287,000 images, found that humans can accurately identify AI-generated images only about 62% of the time – just slightly better than random chance [1].
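The headline figures can be sanity-checked with a few lines of arithmetic. The sketch below is illustrative only: it uses the rounded numbers quoted in the coverage (287,000 evaluations, 12,500 participants, 62% accuracy), and the function names are hypothetical. The z-score uses a normal approximation that treats every evaluation as independent, which is an assumption and not something the study states; evaluations from the same participant are likely correlated, so the true uncertainty is larger.

```python
import math

# Rounded figures as quoted in the coverage, not exact values from the paper.
TOTAL_EVALUATIONS = 287_000
PARTICIPANTS = 12_500
OBSERVED_ACCURACY = 0.62
CHANCE = 0.5  # accuracy expected from guessing on a real-vs-fake question


def evaluations_per_participant(total: int, participants: int) -> float:
    """Average number of images each participant judged."""
    return total / participants


def z_score_vs_chance(accuracy: float, n: int, chance: float = CHANCE) -> float:
    """Normal-approximation z-score for an observed accuracy against chance.

    Assumes n independent evaluations (a simplifying assumption).
    """
    standard_error = math.sqrt(chance * (1 - chance) / n)
    return (accuracy - chance) / standard_error


if __name__ == "__main__":
    per_person = evaluations_per_participant(TOTAL_EVALUATIONS, PARTICIPANTS)
    z = z_score_vs_chance(OBSERVED_ACCURACY, TOTAL_EVALUATIONS)
    print(f"Average evaluations per participant: {per_person:.2f}")
    print(f"Margin over chance: {OBSERVED_ACCURACY - CHANCE:.0%}")
    print(f"z-score vs. chance (independence assumed): {z:.1f}")
```

Under these assumptions, "slightly better than random chance" describes the size of the margin (12 percentage points), not its statistical reliability: with this many evaluations the gap is very unlikely to be noise, even though it is small in practical terms.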

Source: PetaPixel

Key Findings and Implications

The study revealed several interesting patterns in human perception of AI-generated imagery:

- Facial Recognition: Participants were most successful at identifying AI-generated images of people, with a 65% accuracy rate [1].
- Landscape Challenges: Identifying AI-generated landscapes proved more difficult, with participants achieving only a 59% success rate [3].
- GAN Deepfakes: Despite being an older technology, GAN (Generative Adversarial Network) deepfakes still fooled about 55% of viewers [1].
- Deceptive Real Photos: Surprisingly, some of the most challenging images to identify were actually real photographs with unusual lighting or settings, which participants mistakenly labeled as fake [1].

Technological Advancements and Challenges

The study highlights the rapid evolution of AI image generation technology. Microsoft researchers noted that their results likely overestimate people's current ability to distinguish AI-generated images, as the technology continues to improve [3]. To address this challenge, Microsoft is developing an AI detection tool that reportedly achieves over 95% accuracy in identifying both real and synthetic images [3]. However, the effectiveness of such tools remains to be seen, as previous attempts have faced limitations [4].

Source: TechSpot

Implications for Media and Society

The study's findings raise important questions about the potential for misinformation and the need for transparency in AI-generated content:

- Content Labeling: Microsoft advocates for clearer labeling of AI-generated images to help users distinguish between real and synthetic content [2].
- Watermarking and Digital Signatures: Researchers suggest implementing watermarks, digital signatures, and content credentials to inform the public about the nature of the media they consume [4].
- Public Awareness: The study underscores the importance of educating the public about AI-generated content and developing critical media literacy skills [5].

As AI image generation technology continues to advance, the ability to distinguish between real and synthetic content becomes increasingly crucial. This study serves as a wake-up call for both technology companies and the general public to address the challenges posed by AI-generated imagery in our increasingly digital world.

Summarized by Navi
