Nvidia's Vera CPU enters data center battle as standalone chip to challenge Intel and AMD

3 Sources

[1]

Nvidia's Jensen Huang says the standalone Vera CPU will challenge AMD and Intel in the data center

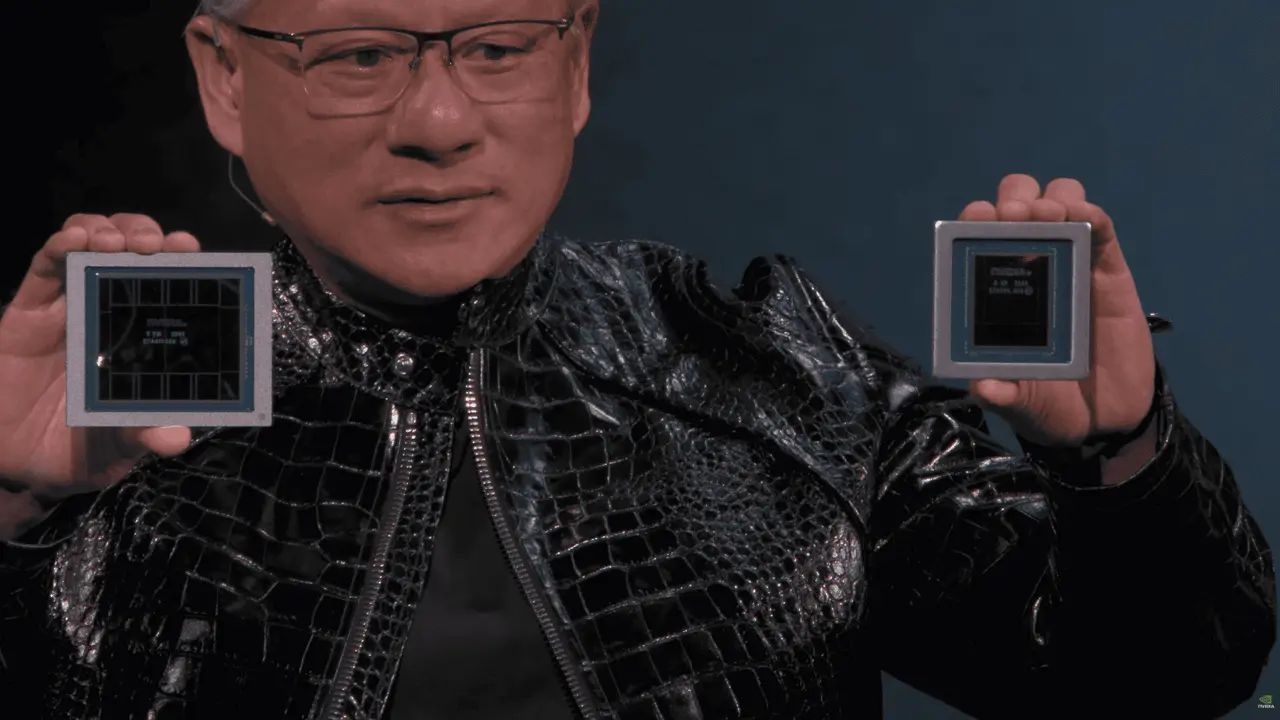

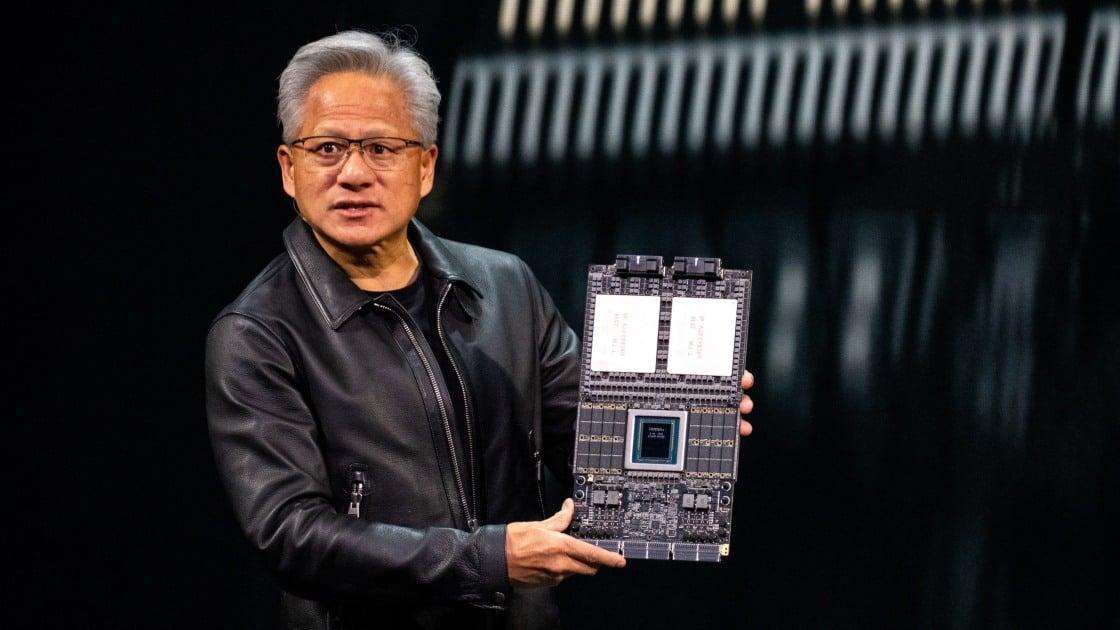

Nvidia CEO Jensen Huang has announced that the company's Vera CPU will debut as a standalone infrastructure product, not merely as a supporting element for its GPUs. For the first time, Nvidia will sell a processor capable of powering full computing stacks on its own, signaling an intentional move into the heart of the data center market long dominated by AMD and Intel. Huang described Vera as "revolutionary," hinting that early partners such as CoreWeave are already preparing deployments, even before official design wins have been announced.

This decision represents far more than a new product launch; it is the culmination of Nvidia's push to become a one-stop silicon provider for AI and high-performance computing. The company's strategy is clear: rather than relying on GPUs to accelerate other firms' CPUs, Nvidia now wants its own architecture to run every stage of computation, from number-crunching cores to AI inference pipelines. The shift expands its influence across cloud and enterprise infrastructure and aims to position Nvidia as a full-system supplier rather than a single-component vendor.

Technically, Vera arrives well-equipped for that ambition. The processor is built around 88 custom Armv9.2 Olympus cores, each capable of running two threads through Spatial Multithreading. This design gives Vera an effective footprint of 88 cores and 176 threads, unlocking parallel performance traditionally reserved for x86-based rivals. Nvidia's choice to base the CPU on Arm architecture allows more flexibility in performance scaling and power optimization, both critical advantages in data center efficiency.

The chip integrates 1.5 terabytes of LPDDR5X memory and delivers 1.2 terabytes per second of bandwidth, an unusually high ratio for a general-purpose CPU. That balance makes Vera especially suited for memory-intensive workloads such as AI model preprocessing, data analytics, and simulation. Still, it remains unclear whether Nvidia will support conventional DDR5 RDIMM modules or rely solely on the LPDDR5X configuration typical of its system-on-module designs.

A defining component of Vera's architecture is its second-generation Scalable Coherency Fabric, a high-speed interconnect that links all 88 cores across a single monolithic die. The fabric enables 3.4 terabytes per second of bisection bandwidth, allowing efficient data exchange between cores with minimal latency. This engineering choice sidesteps the synchronization delays often encountered in chiplet-based CPUs such as AMD's EPYC.

Vera's fabric doesn't act alone. It interfaces directly with Nvidia's NVLink Chip-to-Chip technology, now in its second generation, providing up to 1.8 terabytes per second of coherent bandwidth to external components such as the upcoming Rubin GPU. The symmetry between Vera and Rubin enables them to share memory models and data, creating a unified CPU-GPU environment within the same compute framework.

Vera's cores support FP8 arithmetic and include six 128-bit SVE2 vector units for faster data and AI processing. These capabilities allow Vera to handle certain AI and floating-point operations directly on its CPU cores, reducing the need to offload everything to a GPU. In doing so, Nvidia shifts AI-inference efficiency closer to the CPU, an advantage that could reduce energy overhead and latency in diverse enterprise applications.

For Nvidia, the Vera CPU marks both a technological milestone and a strategic turning point. It transforms the company from the world's leading GPU supplier into a direct competitor in the general-purpose computing market. AMD's EPYC and Intel's Xeon families will soon face a new kind of challenger, one leveraging the same GPU-CPU integration that has fueled Nvidia's rise in AI acceleration.
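The headline figures quoted above can be sanity-checked with a few lines of arithmetic. The sketch below is illustrative only: the constants are the numbers reported in the article, the function name `vera_ratios` is hypothetical, and the derived per-core and ratio values are simple divisions assuming decimal units (1 TB = 1000 GB), not figures published by Nvidia.

```python
# Figures as quoted in the article. Everything derived from them below is
# plain arithmetic under a decimal-unit assumption (1 TB = 1000 GB),
# not a vendor-published specification.
CORES = 88
THREADS_PER_CORE = 2          # two threads per core via Spatial Multithreading
MEMORY_TB = 1.5               # LPDDR5X capacity
MEMORY_BW_TBPS = 1.2          # memory bandwidth
NVLINK_C2C_BW_TBPS = 1.8      # coherent CPU-GPU link bandwidth


def vera_ratios():
    """Return a dict of simple ratios derived from the quoted figures."""
    return {
        "threads": CORES * THREADS_PER_CORE,
        "memory_gb_per_core": MEMORY_TB * 1000 / CORES,
        "memory_bw_gbps_per_core": MEMORY_BW_TBPS * 1000 / CORES,
        # Idealized lower bound on the time to read all memory once.
        "seconds_to_stream_all_memory": MEMORY_TB / MEMORY_BW_TBPS,
        "nvlink_to_memory_bw_ratio": NVLINK_C2C_BW_TBPS / MEMORY_BW_TBPS,
    }


if __name__ == "__main__":
    for name, value in vera_ratios().items():
        print(f"{name}: {value:.2f}")
```

Under these assumptions, the thread count matches the 176 quoted in the article, each core has roughly 17 GB of memory and about 13.6 GB/s of bandwidth available to it, and a full pass over memory takes at least 1.25 seconds. Real workloads will see lower effective bandwidth than this idealized bound.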

[2]

NVIDIA's "Dearest" Neocloud, CoreWeave, to Get Early Access to Next-Gen Vera CPUs in a New Deal as Jensen Hints at a Push to Dominate the CPU Market

NVIDIA's CEO Jensen Huang has doubled down on the company's commitments to CoreWeave, as the neocloud will be the first to leverage Vera CPUs as a standalone offering.

CoreWeave Will Offer Vera CPUs as "Standalone" Option, Indicating NVIDIA Is All-In Towards Agentic AI

It appears that NVIDIA is back in the capital markets after scoring huge deals with the likes of Groq and Intel, and this time, the company has committed an additional $2 billion to the neocloud CoreWeave, saying the investment will complement their "long-standing" relationship. According to the company's official blog post, NVIDIA will purchase Class A common stock at $87.20 per share, helping CoreWeave achieve its ambition to build 5 gigawatts of AI factories by 2030. While talking with Bloomberg's Ed Ludlow, NVIDIA's CEO revealed that CoreWeave will be the first to have access to Vera CPUs under the new deal.

"For the very first time, we're going to be offering Vera CPUs. Vera is such an incredible CPU. We're going to offer Vera CPUs as a standalone part of the infrastructure. And so not only, not only can you run your computing stack on NVIDIA GPUs, you can now also run your computing stack, wherever their CPU workload, run on Nvidia CPUs... Vera is completely revolutionary...CoreWeave is going to have to race if CoreWeave's going to be the first to stand up Vera CPUs. We haven't announced any of our CPU design wins, but there are going to be many."

- NVIDIA's Jensen Huang via Ed Ludlow

Two narratives emerge from this deal. The first is that NVIDIA has recognized that server CPUs are becoming another major bottleneck in the AI supply chain, and that, with agentic AI applications ramping up, a viable platform is more necessary than ever. The second is that, by selling Vera CPUs as a "standalone" offering, NVIDIA gives customers who want high-end CPU capabilities a much cheaper option than buying the entire rack-scale solution.

Vera CPUs are set to be among the most capable CPU offerings from NVIDIA, offering a massive bump over Grace Blackwell. You are looking at next-gen custom ARM architecture codenamed Olympus, which packs 88 cores, 176 threads (with NVIDIA Spatial Multi-Threading), a 1.8 TB/s NVLink-C2C coherent memory interconnect, 1.5 TB of system memory (3x Grace), 1.2 TB/s of memory bandwidth with SOCAMM LPDDR5X, and rack-scale confidential compute.

This direction clearly indicates that when it comes to the server CPU ecosystem, NVIDIA is looking to go "heavy". Interestingly, when Jensen mentions "CPU design wins", he might also be pointing towards the upcoming ARM-based N1/N1X SoCs, which are consumer offerings dedicated to AI PC workloads.

[3]

Nvidia strengthens its partnership with CoreWeave and unveils Vera, its first standalone CPU

Nvidia has announced a new $2bn strategic investment in CoreWeave, a specialist in cloud computing dedicated to artificial intelligence, reinforcing a partnership already backed by more than $6bn in services purchased through 2032. Following the announcement, CoreWeave shares climbed nearly 10% ahead of the Wall Street open.

The shared goal is to deploy more than 5 gigawatts of computing capacity by 2030, amid massive demand for AI infrastructure. CoreWeave, valued at $47bn since its 2025 stock market debut, aims to build computing power equivalent to five nuclear reactors, supported by Nvidia capital intended to finance the group's energy and real-estate expansion. Under the agreement, Nvidia bought Class A common shares at $87.20 apiece and is entrusting CoreWeave with the priority rollout of its latest technologies, including Vera, its first standalone central processing unit.

The new chip marks a strategic step: Vera moves into direct competition with CPUs from Intel and AMD, as well as custom components from Amazon and other cloud giants. Until now, Nvidia offered its CPUs only as part of integrated systems. With Vera, the group aims to extend its reach beyond GPUs, reinforcing its ambition to dominate the entire AI-related computing stack.

This technological push comes alongside questions about the circularity of investments between Nvidia and its sector partners, including OpenAI, Anthropic and xAI. CEO Jensen Huang rejects those criticisms, saying the stakes reflect confidence in "generational" companies. Nvidia was already CoreWeave's fourth-largest shareholder before this deal, with a 6% stake. CoreWeave, still loss-making despite deals with OpenAI and Meta, is seeking to reduce its reliance on Microsoft, which accounted for two-thirds of its revenue at the end of 2025.

In a fast-growing market, Nvidia now forecasts $500bn in cumulative revenue from data-center chips by the end of 2026, underscoring a growth trajectory still driven by demand the company describes as "unlimited".


Nvidia CEO Jensen Huang announced the company will sell its Vera CPU as a standalone infrastructure product for the first time, directly competing with AMD and Intel in the data center market. CoreWeave will be the first to deploy Vera CPUs following a $2 billion strategic investment from Nvidia, marking a major shift in the company's strategy from GPU-only supplier to full-system silicon provider.

Nvidia Transforms Into Direct CPU Competitor

Nvidia CEO Jensen Huang has announced that the company's Vera CPU will debut as a standalone infrastructure product, marking the first time Nvidia will sell a processor capable of powering full computing stacks independently of its GPUs [1]. This strategic move positions Nvidia in direct competition with Intel and AMD in the data center market, transforming the company from the world's leading GPU supplier into a comprehensive silicon provider for AI and high-performance computing workloads [1].

The announcement came alongside a $2 billion strategic investment in CoreWeave, a neocloud provider specializing in cloud computing for artificial intelligence [2][3]. CoreWeave will be the first to deploy Vera CPUs, with Huang describing the processor as "revolutionary" during an interview with Bloomberg's Ed Ludlow [2].

CoreWeave Gets Priority Access Through $2 Billion Deal

Nvidia purchased CoreWeave Class A common stock at $87.20 per share, complementing a partnership already backed by more than $6 billion in services purchased through 2032 [3]. The shared goal is to deploy more than 5 gigawatts of AI infrastructure by 2030, amid massive demand for AI computing capacity [3]. CoreWeave shares climbed nearly 10% following the announcement, reflecting investor confidence in the partnership [3].

Jensen Huang emphasized that offering Vera CPUs as a standalone option provides customers with flexibility for AI workloads, stating: "Not only can you run your computing stack on Nvidia GPUs, you can now also run your computing stack, wherever their CPU workload, run on Nvidia CPUs" [2]. This approach addresses a critical bottleneck in the AI supply chain, particularly as agentic AI applications ramp up [2].

Technical Architecture Built on Custom ARM-Based Processors

The Vera CPU is built around 88 custom Armv9.2 Olympus cores, each capable of running two threads through Spatial Multithreading, delivering an effective footprint of 88 cores and 176 threads [1]. Wccftech describes this as a massive bump over the previous Grace Blackwell generation [2]. The choice of ARM architecture allows more flexibility in performance scaling and power optimization, critical advantages in data center efficiency [1].

The chip integrates 1.5 terabytes of LPDDR5X memory, three times that of Grace, and delivers 1.2 terabytes per second of memory bandwidth, an unusually high ratio for a general-purpose CPU [1][2]. This balance makes Vera especially suited for memory-intensive workloads such as AI model preprocessing, data analytics, and simulation [1].

Advanced Interconnect Enables CPU-GPU Integration

A defining component of Vera's architecture is its second-generation Scalable Coherency Fabric, a high-speed interconnect that links all 88 cores across a single monolithic die [1]. The fabric enables 3.4 terabytes per second of bisection bandwidth, allowing efficient data exchange between cores with minimal latency. This engineering choice sidesteps synchronization delays often encountered in chiplet-based CPUs such as AMD's EPYC [1].

The fabric interfaces directly with Nvidia's NVLink Chip-to-Chip technology, now in its second generation, providing up to 1.8 terabytes per second of coherent bandwidth to external components such as the upcoming Rubin GPU [1][2]. This symmetry enables Vera and Rubin to share memory models and data, creating a unified CPU-GPU environment within the same compute framework [1].

AI Inference Capabilities Challenge Traditional CPU Design

Vera's cores use FP8 arithmetic and six 128-bit SVE2 vector units for faster data and AI processing [1]. These capabilities allow Vera to handle certain AI inference and floating-point operations directly on its CPU cores, reducing the need to offload everything to a GPU [1]. This shift brings AI-inference efficiency closer to the CPU, potentially reducing energy overhead and latency in diverse enterprise applications [1].

By offering Vera as a standalone option, Nvidia provides customers a more cost-effective alternative compared to purchasing entire rack-scale solutions [2]. The processor also features rack-scale confidential compute capabilities, addressing security concerns in multi-tenant cloud environments [2].

Market Implications and Future Design Wins

Nvidia's move into competition with Intel and AMD represents both a technological milestone and a strategic turning point [1]. Until now, Nvidia offered its CPUs only as part of integrated systems, but Vera extends the company's reach beyond GPUs [3]. AMD's EPYC and Intel's Xeon families now face a challenger leveraging the same GPU-CPU integration that has fueled Nvidia's rise in AI acceleration [1].

Huang hinted that while no CPU design wins have been officially announced, "there are going to be many" [2]. Wccftech speculates that this may also point toward upcoming ARM-based N1/N1X SoCs dedicated to AI PC workloads [2]. Nvidia forecasts $500 billion in cumulative revenue from data-center chips by the end of 2026, underscoring a growth trajectory driven by what the company describes as "unlimited" demand [3].

Summarized by Navi
