NVIDIA Predicts AI-Accelerated Future for Scientific Computing as GPU Supercomputers Dominate Top500

2 Sources


[1]

The future of supercomputing will be AI accelerated

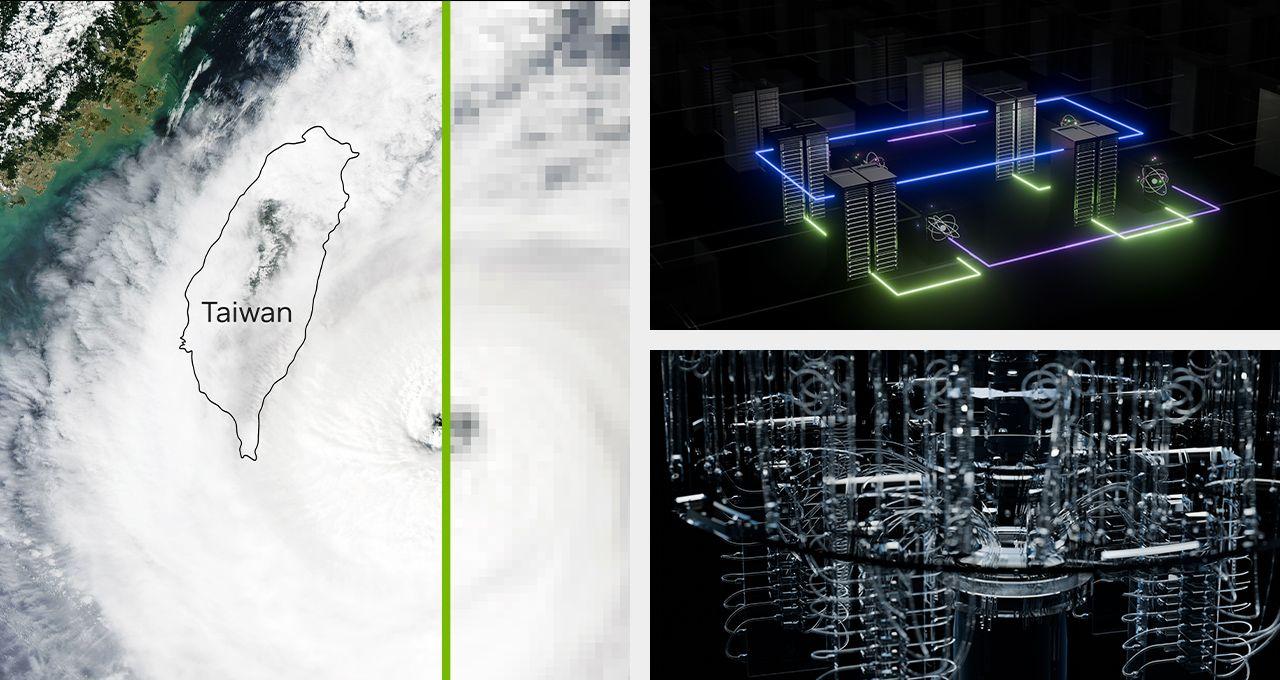

Nvidia's Ian Buck on the importance of FP64 to power research, in a world that's hot for inferencing

Interview: Scientific computing is about to undergo a period of rapid change as workloads inject AI.

So says Ian Buck, Nvidia's VP and General Manager of Hyperscale and HPC, who told The Register he expects that within a year or two, the application of AI will be pervasive throughout high performance computing and scientific workloads.

"Today we're in the phase where we have luminary workloads. We have amazing examples of where AI is going to make scientific discovery so much faster and more productive," he said.

He therefore predicts that scientific computer designs will change to run those workloads, and cited the gradually-then-suddenly appearance of GPU-powered machines on the Top500 list of earth's mightiest supercomputers as an example of the change he expects.

"Just like accelerated computing took maybe five years to hit a curve where the Top500 started to flip over and today it's like 80 plus percent [GPU accelerated]."

While Buck expects AI to play a much bigger role in scientific computing, he said Nvidia isn't trying to replace workloads with generative AI models.

"In the beginning there was a lot of confusion. AI was confused with replacing simulation and that is an inexact science," he said. "AI is statistics. It's machine learning. It's taking data and making a prediction. Statistics works in probabilities, which is not 64-bit floating point, it's how close to 1.0 are you.

"The narrative of 'Will AI replace simulation?' was the wrong question. It wasn't ever going to replace simulation. AI is a tool, one of many tools to be able to do scientific discovery."

One way Nvidia is looking to do this is by using AI and machine learning to help researchers focus their attention on the most likely candidates worthy of deeper investigation.
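That screen-then-simulate pattern, in which a cheap model ranks candidates and only a shortlist goes on to full simulation, could be sketched roughly as follows. This is a toy illustration rather than Nvidia's method: `surrogate_score`, `expensive_simulation`, the `TARGET` value, and the budget of 50 are all invented stand-ins, and the surrogate here is unrealistically well aligned with the simulation.

```python
import random

TARGET = 0.7  # hypothetical "ideal" composition, chosen only for this toy


def surrogate_score(candidate):
    """Cheap stand-in for a trained ML model: higher means more promising."""
    return -abs(candidate - TARGET)


def expensive_simulation(candidate):
    """Stand-in for a costly high-precision simulation of one candidate."""
    return 1.0 - (candidate - TARGET) ** 2


def screen_then_simulate(candidates, budget):
    """Rank every candidate cheaply, then simulate only the top `budget`."""
    shortlist = sorted(candidates, key=surrogate_score, reverse=True)[:budget]
    results = [(expensive_simulation(c), c) for c in shortlist]
    _, best_candidate = max(results)
    return best_candidate, len(shortlist)


rng = random.Random(0)  # fixed seed so the run is repeatable
candidates = [rng.random() for _ in range(10_000)]
best, n_simulated = screen_then_simulate(candidates, budget=50)
print(f"simulated {n_simulated} of {len(candidates)} candidates; best = {best:.4f}")
```

The point of the design is that the expensive function runs 50 times instead of 10,000; how much is lost depends entirely on how well the real surrogate tracks the real simulation.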
"Trying to figure out what is the crystalline structure of a new alloy of aluminum or metal or steel that's going to make jet engines run faster, lighter, hotter, and more efficiently requires searching the space for every possible molecular compound," Buck explained. "We can simulate all those, but it would take millennia. We're using AI to predict, or approximate, or weed out the ones that we should simulate." Nvidia has already developed a wide variety of software frameworks and models to assist researchers, including frameworks like Holoscan for sensor processing, BioNeMo for drug discovery, and Alchemi for computational chemistry. On Monday, the company unveiled Apollo - a new family of open models designed to accelerate industrial and computation engineering. Nvidia has integrated Apollo into industrial design software suites from Cadence, Synopsys, and Siemens. In the quantum realm, a field Nvidia expects will play a key role in scientific computing, the GPU giant unveiled NVQLink, a system connecting quantum processing units with Nvidia-based systems to enable large-scale quantum-classic workloads using its CUDA-Q platform. While these models have the potential to accelerate scientific discovery they don't replace the need for simulation. "In order to build a great supercomputer, it needs to be good at three things: It has to be great at simulation; it has to be great at AI; and it also has to be a quantum supercomputer," Buck said. That means Nvidia accelerators must support both the ultra-low precision datatypes favored by generative AI models and the hyper-precise FP64 compute traditionally associated with academic supercomputers. FP64 is a requirement, Buck says. Nvidia's commitment to the data type has been called into question in recent years, particularly as AI models have trended toward low-precision data types like FP8 or FP4. 
When Blackwell was revealed in early 2024, Nvidia confused many in the scientific community by cutting the FP64 matrix performance from 67 teraFLOPS on Hopper to 40 to 45 teraFLOPS depending on the SKU.

But while FP64 matrix performance used by benchmarks like High-Performance Linpack declined gen-on-gen, FP64 vector performance - a category better suited to workloads like the High Performance Conjugate Gradient (HPCG) benchmark - rose from 34 teraFLOPS to 45. This increase didn't become apparent until months after Nvidia unveiled the chip.

Further complicating the matter, Nvidia's Blackwell Ultra accelerators, announced a year later, effectively reclaimed the die area previously dedicated to FP64 to boost dense 4-bit floating point performance for AI inference.

While this certainly gave the impression that Nvidia had ceded the high-performance computing market to rival AMD in its pursuit of the broader AI market, Buck insists this is simply not the case.

Instead, he explains, Blackwell Ultra was simply a specialized design aimed at AI inference. "For those customers that wanted the max inference performance, and now actually some of the training capabilities with NVFP4, we had it available as GB300."

We're told Nvidia's next-generation AI accelerators, codenamed Rubin, will follow a similar trend, with some devices offering a blend of hyper-precise and low-precision data types and others optimized primarily for AI applications. "For cases where we can provide a version of the architecture which maxes out in one direction and maybe it doesn't have the FP64, we'll do that too."

Nvidia has already announced one such chip with Rubin CPX, which is specifically designed to offload LLM inference prefill operations from the GPUs, speeding up prompt processing and token generation in the process.

Buck's vision for AI accelerated supercomputing is already catching on with national research labs and academic institutions around the world.
In the last year Nvidia has won more than 80 new supercomputing contracts totaling 4,500 exaFLOPS of AI compute. The latest of these is the Texas Advanced Computing Center's (TACC) Horizon supercomputer. Due to come online in 2026, the machine will comprise 4,000 Blackwell GPUs plus 9,000 of Nvidia's next-gen Vera CPUs. The resulting system will pack 300 petaFLOPS of FP64 compute or 80 exaFLOPS of AI compute (FP4). Horizon will support the simulation of molecular dynamics to advance research into viruses, explore the formation of stars and galaxies, and map seismic waves to provide advance warning of earthquakes. ®
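As a rough sanity check on the Horizon figures, the arithmetic below compares the quoted FP4 and FP64 numbers. It is a back-of-envelope sketch: the variable names are made up, and the per-GPU figure assumes, for illustration only, that all of the quoted FP4 compute comes from the 4,000 GPUs.

```python
PETA = 1e15
EXA = 1e18

fp64_flops = 300 * PETA  # quoted FP64 figure for Horizon
fp4_flops = 80 * EXA     # quoted FP4 "AI compute" figure
gpu_count = 4_000        # quoted Blackwell GPU count

# How many times larger the low-precision figure is than the FP64 one.
fp4_to_fp64_ratio = fp4_flops / fp64_flops

# Average FP4 throughput per GPU, assuming the GPUs supply all of it.
fp4_per_gpu_pflops = fp4_flops / gpu_count / PETA

print(f"FP4 vs FP64: about {fp4_to_fp64_ratio:.0f}x")
print(f"FP4 per GPU: about {fp4_per_gpu_pflops:.0f} petaFLOPS")
```

The gap of more than two orders of magnitude between the two headline numbers explains why "AI compute" and FP64 figures are reported separately and should not be compared directly.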

[2]

The Great Flip: How Accelerated Computing Redefined Scientific Systems -- and What Comes Next

It used to be that computing power trickled down from hulking supercomputers to the chips in our pockets. Over the past 15 years, innovation has changed course: GPUs, born from gaming and scaled through accelerated computing, have surged upstream to remake supercomputing and carry the AI revolution to scientific computing's most rarefied systems.

JUPITER at Forschungszentrum Jülich is the emblem of this new era. Not only is it among the most efficient supercomputers -- producing 63.3 gigaflops per watt -- but it's also a powerhouse for AI, delivering 116 AI exaflops, up from 92 at ISC High Performance 2025.

This is the "flip" in action. In 2019, nearly 70% of the TOP100 high-performance computing systems were CPU-only. Today, that number has plunged below 15%, with 88 of the TOP100 systems accelerated -- and 80% of those powered by NVIDIA GPUs. Across the broader TOP500, 388 systems, 78%, now use NVIDIA technology, including 218 GPU-accelerated systems (up 34 systems year over year) and 362 systems connected by high-performance NVIDIA networking. The trend is unmistakable: accelerated computing has become the standard.

But the real revolution is in AI performance. With architectures like NVIDIA Hopper and Blackwell and systems like JUPITER, researchers now have access to orders of magnitude more AI compute than ever. AI FLOPS have become the new yardstick, enabling breakthroughs in climate modeling, drug discovery and quantum simulation -- problems that demand both scale and efficiency.

At SC16, years before today's generative AI wave, NVIDIA founder and CEO Jensen Huang saw what was coming. He predicted that AI would soon reshape the world's most powerful computing systems. "Several years ago, deep learning came along, like Thor's hammer falling from the sky, and gave us an incredibly powerful tool to solve some of the most difficult problems in the world," Huang declared.

The math behind computing power consumption had already made the shift to GPUs inevitable.
But it was the AI revolution, ignited by the NVIDIA CUDA-X computing platform built on those GPUs, that extended the capabilities of these machines dramatically. Suddenly, supercomputers could deliver meaningful science at double precision (FP64) as well as at mixed precision (FP32, FP16) and even at ultra-efficient formats like INT8 and beyond -- the backbone of modern AI. This flexibility allowed researchers to stretch power budgets further than ever to run larger, more complex simulations and train deeper neural networks, all while maximizing performance per watt.

But even before AI took hold, the raw numbers had already forced the issue. Power budgets don't negotiate. Supercomputer researchers -- inside NVIDIA and across the community -- were coming to grips with the road ahead, and it was paved with GPUs.

To reach exascale without a Hoover Dam‑sized electric bill, researchers needed acceleration. GPUs delivered far more operations per watt than CPUs. That was the pre‑AI tell of what was to come, and that's why when the AI boom hit, large-scale GPU systems already had momentum.

The seeds were planted with Titan in 2012 at the Oak Ridge National Laboratory, one of the first major U.S. systems to pair CPUs with GPUs at unprecedented scale -- showing how hierarchical parallelism could unlock huge application gains. In Europe in 2013, Piz Daint set a new bar for both performance and efficiency, then proved the point where it matters: real applications like COSMO forecasting for weather prediction.

By 2017, the inflection was undeniable. Summit at Oak Ridge National Laboratory and Sierra at Lawrence Livermore National Laboratory ushered in a new standard for leadership‑class systems: acceleration first. They didn't just run faster; they changed the questions science could ask for climate modeling, genomics, materials and more. These systems are able to do much more with much less.
On the Green500 list of the most efficient systems, the top eight are NVIDIA‑accelerated, with NVIDIA Quantum InfiniBand connecting 7 of the Top 10. But the story behind these headline numbers is how AI capabilities have become the yardstick: JUPITER delivers 116 AI exaflops alongside 1 EF FP64 -- a clear signal of how science now blends simulation and AI.

Power efficiency didn't just make exascale attainable; it made AI at exascale practical. And once science had AI at scale, the curve bent sharply upward.

What It Means Next

This isn't just about benchmarks. It's about real science:

* Faster, more accurate weather and climate models
* Breakthroughs in drug discovery and genomics
* Simulations of fusion reactors and quantum systems
* New frontiers in AI-driven research across every discipline

The shift started as a power-efficiency imperative, became an architectural advantage and has matured into a scientific superpower: simulation and AI, together, at unprecedented scale.

It starts with scientific computing. Now, the rest of computing will follow.


NVIDIA's Ian Buck forecasts widespread AI integration in scientific computing within one to two years. Meanwhile, 88 of the TOP100 supercomputers are now accelerated, 80% of them with NVIDIA GPUs, a shift that is changing how researchers approach complex simulations and discoveries.

The AI-Accelerated Transformation of Scientific Computing

NVIDIA's Ian Buck, VP and General Manager of Hyperscale and HPC, predicts that artificial intelligence will become pervasive throughout high-performance computing and scientific workloads within the next one to two years [1]. This transformation represents a fundamental shift in how researchers approach complex scientific problems, moving from traditional CPU-only systems to GPU-accelerated architectures that can handle both precision simulation and AI workloads.

"Today we're in the phase where we have luminary workloads. We have amazing examples of where AI is going to make scientific discovery so much faster and more productive," Buck explained [1]. He draws a parallel with the gradual adoption of GPU-powered machines on the Top500 list, which took approximately five years to reach a tipping point where accelerated computing became dominant.

The Great Flip: From CPU to GPU Dominance

The transformation of supercomputing has been dramatic and swift. In 2019, nearly 70% of the TOP100 high-performance computing systems were CPU-only. Today, that number has plummeted below 15%, with 88 of the TOP100 systems now accelerated, and 80% of those powered by NVIDIA GPUs [2]. Across the broader TOP500, 388 systems (78%) now use NVIDIA technology, including 218 GPU-accelerated systems and 362 systems connected by high-performance NVIDIA networking.
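The percentages quoted above can be checked with a few lines of arithmetic. This is only a sanity check on the published counts, with variable names invented for the purpose. The 218 and 362 figures must overlap, since together they exceed 388.

```python
top500_total = 500
top500_nvidia = 388                 # systems using NVIDIA technology
top100_accelerated = 88             # accelerated systems in the TOP100
nvidia_share_of_accelerated = 0.80  # share of those powered by NVIDIA GPUs

# Share of the TOP500 using NVIDIA technology (quoted as 78%).
nvidia_top500_pct = 100 * top500_nvidia / top500_total

# CPU-only share of the TOP100 (quoted as "below 15%").
cpu_only_top100_pct = 100 - top100_accelerated

# Approximate number of TOP100 systems with NVIDIA GPUs.
nvidia_gpu_top100 = nvidia_share_of_accelerated * top100_accelerated

print(f"TOP500 NVIDIA share: {nvidia_top500_pct:.1f}%")
print(f"TOP100 CPU-only share: {cpu_only_top100_pct}%")
print(f"TOP100 systems with NVIDIA GPUs: about {nvidia_gpu_top100:.0f}")
```

So "80% of those" refers to roughly 70 of the TOP100 machines, not to 80 of them.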


This shift wasn't driven solely by AI enthusiasm but by fundamental power efficiency requirements. As NVIDIA's blog notes, "Power budgets don't negotiate" [2]. To reach exascale computing without requiring massive power infrastructure, researchers needed the superior operations-per-watt performance that GPUs could deliver compared to traditional CPUs.

AI as a Tool, Not a Replacement

Contrary to early speculation, Buck emphasizes that AI won't replace traditional scientific simulation but will serve as a complementary tool. "AI is statistics. It's machine learning. It's taking data and making a prediction," he explained [1]. "The narrative of 'Will AI replace simulation?' was the wrong question. It wasn't ever going to replace simulation. AI is a tool, one of many tools to be able to do scientific discovery."

One practical application involves using AI to help researchers focus their attention on the most promising candidates for deeper investigation. For example, when trying to determine the crystalline structure of new metal alloys for more efficient jet engines, researchers can use AI to predict and filter potential molecular compounds before running detailed simulations, rather than attempting to simulate every possible combination, which "would take millennia" [1].

Specialized Frameworks and Future Technologies

NVIDIA has developed numerous software frameworks to support this AI-enhanced scientific computing approach, including Holoscan for sensor processing, BioNeMo for drug discovery, and Alchemi for computational chemistry [1]. The company recently unveiled Apollo, a new family of open models designed to accelerate industrial and computational engineering, which has been integrated into industrial design software suites from major vendors like Cadence, Synopsys, and Siemens.

In the quantum computing realm, NVIDIA introduced NVQLink, a system connecting quantum processing units with NVIDIA-based systems to enable large-scale quantum-classical workloads using its CUDA-Q platform [1].

The Precision Challenge

A critical aspect of this transformation involves maintaining support for both ultra-low precision datatypes favored by AI models and the hyper-precise FP64 compute traditionally required for academic supercomputers. Buck emphasizes that FP64 remains a requirement, despite some confusion in the scientific community when NVIDIA's Blackwell architecture showed reduced FP64 matrix performance compared to its predecessor Hopper [1].

However, while FP64 matrix performance declined, FP64 vector performance actually improved from 34 teraFLOPS to 45 teraFLOPS. Vector performance is the category better suited to workloads like the High Performance Conjugate Gradient benchmark [1].

Real-World Impact

The JUPITER supercomputer at Forschungszentrum Jülich exemplifies this new era, serving as both one of the most efficient supercomputers at 63.3 gigaflops per watt and a powerhouse for AI, delivering 116 AI exaflops [2]. This dual capability enables breakthroughs across multiple scientific domains, including faster and more accurate weather and climate models, advances in drug discovery and genomics, simulations of fusion reactors and quantum systems, and new frontiers in AI-driven research across every discipline.

Summarized by Navi
