Nvidia H100 GPUs Set to Launch Into Orbit as Startups Pioneer Space-Based AI Data Centers

7 Sources


[1]

Nvidia GPU Heads Into Orbit on a Mission to Test Data Centers in Space

A US startup is preparing to take an early step toward creating a data center in space by flying a $30,000 Nvidia enterprise GPU, the H100, into Earth's orbit. The Redmond, Washington, startup Starcloud, formerly known as Lumen Orbit, is scheduled to launch a demo satellite carrying the H100 GPU sometime next month, according to Nvidia. In a blog post, Nvidia revealed that Starcloud plans to pack the H100 chip inside the Starcloud-1 satellite, which weighs approximately 130 pounds and is about the size of a small refrigerator. It promises to "offer 100x more powerful GPU compute than any previous space-based operation." Currently, data centers are built on the ground in large facilities. However, the growing demand for AI compute has raised concerns about the environmental toll of these facilities as the entire tech industry scrambles to construct even larger, next-generation data centers that will consume gigawatts of electricity, enough to power entire cities. Placing data centers in space could help meet these energy demands, since they could be fitted with solar panels and harness the sun's energy. Equipped with a "deployable radiator," orbiting data centers could also use the vacuum of space as a heat sink, removing the need to rely on traditional liquid cooling. Amazon founder Jeff Bezos is among the supporters of the approach. "The only cost on the environment will be on the launch, then there will be 10x carbon-dioxide savings over the life of the data center compared with powering the data center terrestrially on Earth," Starcloud CEO Philip Johnston told Nvidia. The startup also predicts that the space-based approach can cut the energy costs of running a data center tenfold, even when accounting for the required rocket launches.
"For connectivity, we envision using laser-based connectivity with other constellations," such as SpaceX's satellite internet system Starlink or Amazon's Project Kuiper, Starcloud's white paper adds. Such laser connectivity would pave the way for customers on the ground to easily communicate and run workloads from Starcloud's orbiting data centers. Starcloud's CEO also told the YouTube channel HyperChange that the company has already been preparing to fit the satellite on a SpaceX Falcon 9 rocket for next month's launch. The startup plans to launch a larger satellite next year. The Starcloud-2 has been designed to be the company's first commercial satellite.

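The heat-sink claim above can be made concrete with the Stefan-Boltzmann law, which governs how much heat a surface sheds by radiation alone. This is a rough sketch under stated assumptions, not Starcloud's design: it assumes about 700 W of waste heat (roughly the rated maximum of an H100 in its SXM form; the reports do not say which H100 variant flies), an emissivity of 0.9, a single radiating face, and a 3 K sink. It ignores absorbed sunlight and Earth's infrared glow, both of which would push the real area up. The function name `radiator_area_m2` is hypothetical.

```python
# Rough radiator sizing via the Stefan-Boltzmann law (illustrative assumptions only).
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W / (m^2 K^4)


def radiator_area_m2(heat_w: float, temp_k: float,
                     emissivity: float = 0.9, sink_temp_k: float = 3.0) -> float:
    """Single-face area needed to radiate `heat_w` watts at surface temperature `temp_k`.

    Net radiated flux per m^2 is emissivity * SIGMA * (T^4 - T_sink^4).
    Absorbed sunlight, Earth's infrared, and view-factor losses are ignored,
    so a real radiator would need more area than this.
    """
    net_flux_w_m2 = emissivity * SIGMA * (temp_k**4 - sink_temp_k**4)
    return heat_w / net_flux_w_m2


gpu_heat_w = 700.0  # assumption: roughly an H100 SXM at full power
for temp in (300.0, 330.0, 350.0):
    print(f"radiator at {temp:.0f} K: {radiator_area_m2(gpu_heat_w, temp):.2f} m^2")
```

Under these assumptions a single GPU needs on the order of one to two square meters of radiator, and the area shrinks quickly as the radiator runs hotter because emitted power scales with the fourth power of temperature. That scaling is one reason radiator temperature matters so much in spacecraft thermal design.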
[2]

Nvidia's H100 GPUs are going to space -- Crusoe and Starcloud pioneer space-based solar-powered AI compute cloud data centers

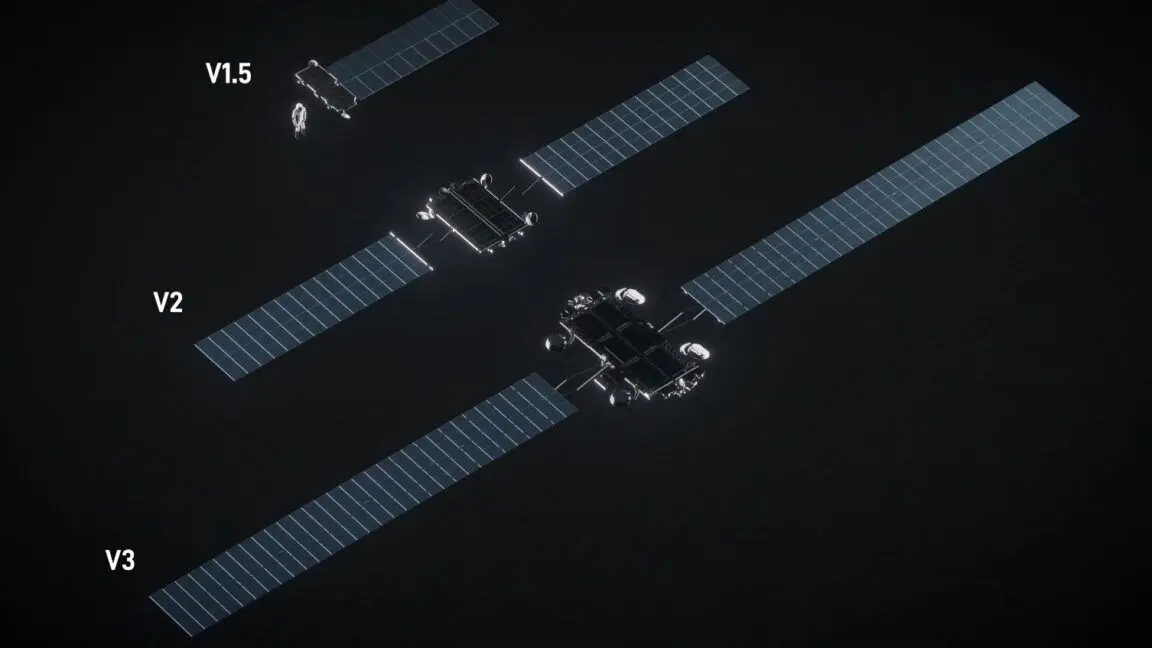

Space-age partners claim the H100 delivers '100x more powerful GPU (AI) compute than has been in space before.' The first Nvidia H100 GPUs are heading into space next month. This out-of-this-world news comes as AI factory company Crusoe reveals its plans to become "the first public cloud operator to run workloads in outer space." The orbital Crusoe Cloud deployment will be powered by Nvidia H100 GPUs and AI accelerators in strategic partner Starcloud's AI data center satellites. Crusoe and Starcloud share an energy-first vision of AI data center infrastructure that leverages solar power in space. In Starcloud's orbit, sunlight is unencumbered by Earth's atmosphere, so companies that establish these kinds of resources in space can enjoy "almost unlimited, low-cost renewable energy." Estimates suggest space-based, solar-powered data centers will benefit from 10x lower energy costs (including launch cost) than those on Earth. Space allows for massive solar panel arrays with no concerns about land use. Moreover, operating such systems puts no strain on Earth's energy grid. However, we would be interested to learn more about GPU cooling in space-based data centers, given that there is no air to carry heat away by convection as on Earth, even though the surroundings themselves hold very little heat. Nvidia claims Starcloud will use the vacuum of deep space as "an infinite heat sink." Crusoe has a long history of co-locating its compute infrastructure near novel energy sources, so GPUs in space are a logical step for the company, albeit quite a large one. Cully Cavness, co-founder, president and COO of Crusoe, said, "By partnering with Starcloud, we will extend our energy-first approach from Earth to the next frontier: outer space." Starcloud is a Redmond-based company backed by Nvidia's Inception program.
It is focused on building data centers in space, scalable to gigawatt capacities, making it a natural fit for Crusoe's ambitions. "Having Crusoe as the foundational cloud provider on our platform is a perfect alignment of vision and execution," said Philip Johnston, CEO of Starcloud. "Crusoe's expertise in building rugged, efficient, and scalable computing solutions makes them the ideal partner to pioneer this new era." Johnston also underlined the possibilities of unlocking cloud computing power in space for research, discovery, and innovation. The partners seem to have even more ambitious scaling plans, but at this stage it is premature to discuss anything beyond the roadmap already sketched out through early 2027. Starcloud will be the first to launch Nvidia H100s into space, in November 2025. Crusoe will deploy its Crusoe Cloud service on a Starcloud satellite scheduled to launch in late 2026, and plans to offer limited GPU capacity from space by early 2027, thus "pioneering a new paradigm for AI factories."
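The claim of "almost unlimited" solar energy rests on two effects described across these reports: no atmospheric losses and, in a suitable orbit, no night. The back-of-envelope comparison below assumes the standard solar constant of about 1361 W/m² above the atmosphere with 24 hours of sunlight (approximating an orbit that stays in near-continuous sunlight), versus a good ground site averaging about 5 "peak sun hours" at 1000 W/m². The ground-site figure and the helper name `daily_energy_kwh_per_m2` are illustrative assumptions, and panel efficiency is left out of both sides.

```python
# Incident solar energy per square meter of panel per day: orbit vs. ground (illustrative).
SOLAR_CONSTANT_W_M2 = 1361.0  # mean irradiance above Earth's atmosphere


def daily_energy_kwh_per_m2(irradiance_w_m2: float, sunlit_hours: float) -> float:
    """Energy reaching one square meter in a day, in kWh."""
    return irradiance_w_m2 * sunlit_hours / 1000.0


# Assumption: an orbit that stays in near-continuous sunlight.
orbit_kwh = daily_energy_kwh_per_m2(SOLAR_CONSTANT_W_M2, 24.0)
# Assumption: a sunny ground site averaging ~5 peak-sun hours at 1000 W/m^2.
ground_kwh = daily_energy_kwh_per_m2(1000.0, 5.0)

print(f"orbit:  {orbit_kwh:.1f} kWh/m^2/day")
print(f"ground: {ground_kwh:.1f} kWh/m^2/day")
print(f"ratio:  {orbit_kwh / ground_kwh:.1f}x")
```

The roughly 6.5x advantage here covers sunlight alone; the 10x energy-cost figure the companies cite includes launch costs and other factors this sketch does not model.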

[3]

Nvidia GPUs head to space next month in first step toward AI data centers in orbit

Forward-looking: Forget about putting data centers underwater; the next step could be placing them in outer space. A US startup aims to prove this is possible by launching a satellite carrying H100 GPUs and AI accelerators next month. If all goes to plan, it could be a major step toward addressing rising AI demands here on Earth. Redmond, Washington, startup Starcloud, formerly known as Lumen Orbit, will launch the 130-pound Starcloud-1 satellite in November. Nvidia says the small, fridge-sized satellite is expected to offer 100x more powerful GPU compute than any previous space-based operation. Space-based data centers have several advantages over their Earth-bound counterparts. One of the biggest is that constant exposure to the sun provides a virtually unlimited supply of low-cost renewable energy. Earth's atmosphere reduces the efficiency of solar power by absorbing and scattering sunlight, blocking portions of the spectrum, and limiting how much energy reaches the surface, especially under clouds or pollution, or when the sun is low on the horizon. Heat further decreases solar panel performance, and variable weather makes output inconsistent. A space-based solar-powered data center would avoid all these problems: without an atmosphere, sunlight is constant, unfiltered, and far more intense. There's no cloud cover, no day-night cycle (in the right orbit), and no temperature-induced inefficiency, allowing continuous, stable, and highly efficient energy generation. The emptiness of space also allows for the use of massive solar arrays. The other big advantage is that orbiting data centers can be equipped with a deployable radiator. Emitting waste heat as infrared radiation into space conserves significant water resources on Earth, as water isn't needed for cooling. Nvidia says the vacuum of space can act as an infinite heat sink.
That sounds good, but what about the cost of getting the data centers into space? Starcloud estimates that even with launch expenses included, energy costs will be 10x lower than for land-based facilities. "In 10 years, nearly all new data centers will be being built in outer space," said Philip Johnston, cofounder and CEO of Starcloud. "The only cost on the environment will be on the launch, then there will be 10x carbon-dioxide savings over the life of the data center compared with powering the data center terrestrially on Earth." Johnston says that Starcloud has been preparing to attach the satellite to a SpaceX Falcon 9 rocket for next month's launch. It plans to launch the larger Starcloud-2, its first commercial satellite, next year. With companies pouring trillions into data center growth, power demand for these facilities is estimated to increase 165% by 2030. Some locations are turning to renewable energy, but all of it requires physical space. There are still plenty of issues with putting data centers in outer space, including the potential for solar flares to cause mass disruption, collisions with the growing amount of space junk, and even attacks by adversary countries. But proponents say the benefits outweigh the risks.
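A 165% increase means demand reaches 2.65 times its starting level, not 1.65 times. The reports do not state the baseline year; if it is taken as 2023 (an assumption), the implied compound annual growth rate over the seven years to 2030 works out as follows:

```latex
P_{2030} = (1 + 1.65)\,P_{\text{base}} = 2.65\,P_{\text{base}},
\qquad
r = 2.65^{1/7} - 1 \approx 0.149 \quad (\approx 14.9\%\ \text{per year})
```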

[4]

Space data centers could satiate 165% surge in AI power hunger

Though launching data centers into space would be expensive, the LEO environment would provide two key benefits. First, data centers in space could harness natural radiative cooling thanks to the extremely cold temperatures. On Earth, expensive cooling systems that consume enormous amounts of water are required to run data centers effectively. Second, operating in space would offer virtually unlimited solar energy. The team claims these conditions would allow an orbital data center to operate with net-zero carbon emissions. "Space offers a true sustainable environment for computing," study lead Professor Wen Yonggang explained in a press statement. "We must dream boldly and think unconventionally, if we want to build a better future for humanity." "By harnessing the sun's energy and the cold vacuum of space, orbital data centers could transform global computing," he continued. "Our goal is to turn space into a renewable resource for humanity, expanding AI capacity without increasing carbon emissions or straining Earth's limited land and energy resources." The NTU Singapore team proposed two different methods for deploying data centers in space. The first would use orbital edge data centers: imaging or sensing satellites equipped with AI accelerators that process raw data in orbit. By transmitting only the essential processed information to Earth, they would reduce data transmission volumes a hundredfold, significantly lowering energy requirements.
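The hundredfold reduction from orbital edge processing translates directly into less time on the downlink. The sketch below uses purely hypothetical numbers (100 GB of raw sensor data per pass, a 1 Gbit/s link, a 20 W transmitter) and assumes transmitter energy scales with time on air; only the factor of 100 comes from the NTU proposal. The name `downlink_seconds` is illustrative.

```python
# Downlink time and transmitter energy with and without orbital edge processing.
# All figures except the 100x reduction are hypothetical placeholders.


def downlink_seconds(data_bytes: float, link_bps: float) -> float:
    """Time to send `data_bytes` over a link of `link_bps` bits per second."""
    return data_bytes * 8 / link_bps


RAW_BYTES = 100e9    # hypothetical: 100 GB of raw sensor data per ground pass
REDUCTION = 100      # "hundredfold" reduction cited by the NTU team
LINK_BPS = 1e9       # hypothetical: 1 Gbit/s downlink
TX_POWER_W = 20.0    # hypothetical transmitter power draw

raw_s = downlink_seconds(RAW_BYTES, LINK_BPS)
edge_s = downlink_seconds(RAW_BYTES / REDUCTION, LINK_BPS)

# Assumption: transmitter energy is proportional to time on air.
print(f"raw:  {raw_s:.0f} s, {raw_s * TX_POWER_W / 1000:.1f} kJ")
print(f"edge: {edge_s:.0f} s, {edge_s * TX_POWER_W / 1000:.2f} kJ")
```

Real savings would also depend on the energy spent on the in-orbit processing itself, which this sketch leaves out.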

[5]

NVIDIA Takes AI to Space with Solar-Powered Data Center

NVIDIA's powerful H100 GPU is officially heading to space, thanks to a partnership with AI startup Starcloud. The two companies are teaming up to build the first generation of space-based data centers, a bold move meant to solve the growing energy and cooling problems that Earth-bound data centers face. The project starts with the Starcloud-1 satellite, a 60-kilogram platform equipped with an NVIDIA H100 GPU. According to NVIDIA, this small satellite will deliver computing performance that's about 100 times stronger than any current space-based system. But this is just the beginning -- Starcloud's bigger plan is to build a gigawatt-scale orbital data center powered by a giant solar array stretching 4 kilometers on each side. The idea is simple but ambitious: use unlimited solar power in orbit and get rid of the need for batteries, fuel, or backup generators. Because the satellite will receive constant sunlight while in orbit, it can run indefinitely without worrying about Earth's energy limitations or power grid stability. Cooling -- a huge challenge for modern data centers -- is handled in a completely new way. Instead of using fans or water, the Starcloud-1 satellite will rely on what NVIDIA calls "cosmic-grade air conditioning." Basically, all the heat produced by the GPUs will be released directly into space as infrared radiation. The vacuum of space acts like a perfect heat sink, turning the satellite itself into a natural radiator. This setup removes the need for complex water-cooling systems and eliminates water waste entirely. For NVIDIA, this mission isn't just a publicity stunt. As AI workloads keep growing, traditional data centers are consuming massive amounts of electricity and cooling resources. Taking computing to orbit could bypass these limits while providing stable, renewable power and near-zero cooling costs.
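As a sanity check on the gigawatt-scale figure, a square array 4 km on a side intercepts a large amount of sunlight. The sketch below assumes the array faces the sun squarely at the roughly 1361 W/m² solar constant and converts it at a hypothetical 20% efficiency; it ignores pointing losses, degradation, and power-conversion overheads, so it is an upper-end estimate rather than Starcloud's own figure. The helper `array_power_gw` is illustrative.

```python
# Back-of-envelope electrical output of a square solar array in orbit (illustrative).
SOLAR_CONSTANT_W_M2 = 1361.0  # mean irradiance above Earth's atmosphere


def array_power_gw(side_m: float, efficiency: float) -> float:
    """Output in GW of a square, sun-facing array with side `side_m` meters."""
    area_m2 = side_m ** 2
    return area_m2 * SOLAR_CONSTANT_W_M2 * efficiency / 1e9


incident_gw = array_power_gw(4_000.0, 1.0)     # all sunlight hitting the array
electrical_gw = array_power_gw(4_000.0, 0.20)  # assumption: 20% cell efficiency
print(f"incident: {incident_gw:.1f} GW, electrical: {electrical_gw:.1f} GW")
```

Even after substantial additional losses, the result stays in the gigawatt range, consistent with the scale Starcloud describes.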

[6]

NVIDIA officially goes extraterrestrial to absorb the power of the Sun

TL;DR: NVIDIA supports Starcloud's innovative AI-powered satellite data centers in low-Earth orbit, which use solar energy and space's natural cooling to cut the energy costs and environmental impact of Earth-based data centers. Starcloud's compact satellite offers 100x more GPU power, promising a future where most data centers operate in space. In an effort to curb the exponential demands of data centers powering artificial intelligence models, NVIDIA has backed a company whose solution is going off-planet. In a new blog post on the NVIDIA website, Team Green explains how it has backed Starcloud to create a new AI-equipped satellite that will be launched into low-Earth orbit and powered exclusively by the Sun. Starcloud is dedicated to bringing state-of-the-art data centers to outer space, in a bid to meet the growing demand for data centers on Earth, which comes with significant downsides such as energy consumption, cooling requirements, and environmental impact. Starcloud intends to solve, or at least mitigate, these problems by moving data centers to space, where there is almost unlimited, low-cost renewable energy and the vacuum of space acts as an infinite heatsink. According to Starcloud CEO Philip Johnston, "In 10 years, nearly all new data centers will be being built in outer space," and energy costs in space will be 10x cheaper than land-based options, even including the cost of launching the data center. NVIDIA's blog post states that the Starcloud-1 satellite weighs about 60 kilograms (132.28 lb) and is approximately the size of a small fridge. Despite its size, NVIDIA states that it offers 100x more powerful GPU compute than any previous space-based operation. Starcloud, a recent graduate of the Google for Startups Cloud AI Accelerator, also plans to run Gemma, an open AI model from Google, in orbit on H100 GPUs, showcasing that AI models can run even in space.
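The pound figure follows from the exact definition of the international avoirdupois pound (1 lb = 0.45359237 kg), which is why other reports round the same satellite to 130 pounds:

```latex
60\ \text{kg} \times \frac{1\ \text{lb}}{0.45359237\ \text{kg}} \approx 132.28\ \text{lb}
```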

[7]

NVIDIA's AI Chips Blast Off to Space, Aiming to Harness Unlimited Solar Power and Use the Deep-Space Vacuum as a 'Celestial' Heatsink

NVIDIA's AI chips will soon make their debut in outer space, as the AI startup Starcloud plans to launch data centers beyond Earth, claiming tremendous benefits. Data center expansion on Earth is moving at a massive pace, with Big Tech deploying 'multi-GW' AI facilities all across the world. While this buildout certainly gives the world enormous computing power, it comes with important tradeoffs, such as growing electricity requirements and land usage, which suggest that the approach firms are currently taking isn't sustainable. However, the startup Starcloud has a promising solution to this problem: building data centers in Earth's orbit, and NVIDIA appears to be on board with the firm. Team Green has mentioned Starcloud in a dedicated blog post celebrating the firm's upcoming launch, which will feature H100 AI GPUs deployed in the 60-kilogram Starcloud-1 satellite. The computing power on this launch device is claimed to be '100x higher' than any other space-based operation. Interestingly, NVIDIA claims that constant exposure to the sun in orbit gives these data centers "infinite solar power," so they won't need to rely on batteries or backup power. Team Green also points to the vacuum of deep space as the solution to cooling: emitting waste heat as infrared radiation into space can conserve significant water resources on Earth, since water isn't needed for cooling. Starcloud has been part of NVIDIA's Inception program, which is designed to support startups and connect them with experts from Team Green. The firm's CEO, Philip Johnston, predicts that nearly all new data centers will be built in outer space within the next ten years, implying a growing interest in this segment.
Of course, there are limitations involved in deploying huge AI factories on land; hence, Starcloud's efforts are something that we would like to call 'thinking out of the box'. However, if 'space-based' data centers are the next big thing, we are sure that Elon Musk won't be left behind.


Starcloud, a Washington-based startup, is preparing to launch the first Nvidia H100 GPU into space next month aboard the Starcloud-1 satellite. This marks a significant step toward establishing orbital data centers that could harness unlimited solar power and natural space cooling to address Earth's growing AI compute demands.

Historic Launch Marks New Era for AI Computing

Next month will witness a groundbreaking moment in artificial intelligence infrastructure as the first Nvidia H100 GPU prepares to enter Earth's orbit. Starcloud, a Redmond, Washington-based startup formerly known as Lumen Orbit, is scheduled to launch its Starcloud-1 satellite carrying the $30,000 enterprise-grade GPU [1]. According to Nvidia, the 130-pound satellite, roughly the size of a small refrigerator, will deliver "100x more powerful GPU compute than any previous space-based operation" [1].


Revolutionary Energy and Cooling Solutions

The space-based approach addresses two critical challenges facing terrestrial data centers: energy consumption and cooling requirements. Unlike Earth-bound facilities that rely on massive amounts of electricity and water for cooling, orbital data centers can harness unlimited solar power without atmospheric interference [3]. Philip Johnston, Starcloud's CEO, explains that "the only cost on the environment will be on the launch, then there will be 10x carbon-dioxide savings over the life of the data center compared with powering the data center terrestrially on Earth" [1].


The cooling solution is equally innovative. Rather than traditional liquid cooling systems, the satellites will utilize what Nvidia describes as "cosmic-grade air conditioning," where waste heat is released directly into space through infrared radiation [5]. The vacuum of space acts as an infinite heat sink, eliminating the need for water-intensive cooling systems that plague Earth-based facilities.

Strategic Partnerships and Commercial Vision

Starcloud has partnered with AI factory company Crusoe, which aims to become the first public cloud operator to run workloads in outer space [2]. Crusoe, known for co-locating compute infrastructure near novel energy sources, will deploy its Crusoe Cloud service on a Starcloud satellite scheduled for late 2026, with limited GPU capacity from space expected by early 2027 [2].

Starcloud's ambitious roadmap includes launching the larger Starcloud-2 satellite next year as its first commercial offering. Long-term plans envision gigawatt-scale orbital data centers powered by massive solar arrays stretching 4 kilometers on each side [5].

Addressing Earth's Growing AI Demands

The timing of this initiative coincides with unprecedented growth in AI computing requirements. Industry estimates suggest power demand for data centers will increase 165% by 2030 [3]. Research from Nanyang Technological University (NTU) Singapore supports the orbital approach: the team proposes orbital edge data centers that could cut data transmission volumes a hundredfold, and argues that orbital data centers could operate with net-zero carbon emissions [4].

For connectivity, Starcloud envisions laser-based links to existing satellite constellations such as SpaceX's Starlink or Amazon's Project Kuiper, which would let customers on the ground communicate with and run workloads on the orbiting data centers [1].


Johnston boldly predicts that "in 10 years, nearly all new data centers will be being built in outer space," positioning this November launch as the first step toward fundamentally transforming how humanity approaches large-scale computing infrastructure [3].


Summarized by Navi

