Nvidia's Memory Chip Shift Could Double Server Prices by 2026

6 Sources

[1]

Nvidia chip shift to smartphone-style memory to double server-memory prices by end-2026 - Counterpoint

BEIJING, Nov 19 (Reuters) - Nvidia's (NVDA.O) move to use smartphone-style memory chips in its artificial intelligence servers could cause server-memory prices to double by late 2026, according to a report published on Wednesday by Counterpoint Research. In the past two months, electronics supply chains around the world have been hit by a shortage of legacy memory chips as manufacturers turned their focus to high-end memory chips suited to semiconductors designed for AI applications. But Counterpoint, a technology-focused market research firm, said there is a new problem on the horizon. Nvidia recently decided to reduce AI server power costs by changing the kind of memory chip it uses to LPDDR, a type of low-power memory chip normally found in phones and tablets, from DDR5 chips, which are typically used in servers. Nvidia is scheduled to release its earnings report later on Wednesday. Because each AI server needs more memory chips than a handset, the change is expected to create sudden demand that the industry is not equipped to handle, according to Counterpoint. Memory suppliers like Samsung Electronics (005930.KS), SK Hynix (000660.KS) and Micron (MU.O) are already facing shortages of older dynamic random-access memory products after reducing production to focus on high-bandwidth memory, which is necessary to make the advanced accelerators that power the global AI boom. Counterpoint said tightness at the low end of the market is at risk of spreading upward as chipmakers weigh whether to divert more factory capacity to LPDDR to meet Nvidia's needs. "The bigger risk on the horizon is with advanced memory, as Nvidia's recent pivot to LPDDR means they're a customer on the scale of a major smartphone maker - a seismic shift for the supply chain which can't easily absorb this scale of demand," Counterpoint said. The firm said it expected prices for server-memory chips to double by the end of 2026.
Higher server-memory prices would raise costs for cloud providers and AI developers, potentially adding pressure to data-centre budgets that are already stretched by record spending on graphics processing units and power upgrades. Reporting by Eduardo Baptista; Editing by Thomas Derpinghaus

[2]

Nvidia shift to smartphone-style memory could double server-memory prices by end-2026

Nvidia's move to use smartphone-style memory chips in its artificial intelligence servers could cause server-memory prices to double by late 2026, according to a report published on Wednesday by Counterpoint Research. In the past two months, electronics supply chains around the world have been hit by a shortage of legacy memory chips as manufacturers turned their focus to high-end memory chips suited to semiconductors designed for AI applications. But Counterpoint, a technology-focused market research firm, said there was a new problem on the horizon. Nvidia recently decided to reduce AI server power costs by changing the kind of memory chip it uses to LPDDR, a type of low-power memory chip normally found in phones and tablets, from DDR5 chips, which are typically used in servers. Because each AI server needs more memory chips than a handset, the change is expected to create sudden demand that the industry is not equipped to handle, according to Counterpoint. Nvidia is scheduled to release its earnings report later on Wednesday. Memory suppliers like Samsung Electronics, SK Hynix and Micron are already facing shortages of older dynamic random-access memory products after reducing production to focus on high-bandwidth memory, which is necessary to make the advanced accelerators that power the global AI boom.
Counterpoint said tightness at the low end of the market was at risk of spreading upward as chipmakers weigh whether to divert more factory capacity to LPDDR to meet Nvidia's needs. "The bigger risk on the horizon is with advanced memory, as Nvidia's recent pivot to LPDDR means they're a customer on the scale of a major smartphone maker - a seismic shift for the supply chain which can't easily absorb this scale of demand," Counterpoint said. The firm said it expected prices for server-memory chips to double by the end of 2026. It also forecast that overall memory chip prices were likely to rise 50% from current levels through the second quarter of 2026. Higher server-memory prices would raise costs for cloud providers and AI developers, potentially adding pressure to data-centre budgets that are already stretched by record spending on graphics processing units and power upgrades.

[3]

NVIDIA's Shift to Consumer-Grade LPDDR For AI Servers Could Spell Massive Trouble for Buyers, as It Could Send PC and Mobile Prices Skyrocketing

It appears that NVIDIA is making a drastic change within the AI memory supply chain, as the firm is now looking to integrate LPDDR modules into AI servers, switching from the traditional DDR technology. Memory modules have now entered the 'era of shortages', driven by the massive DRAM demand the industry has been witnessing for the past few months. Initially, it was estimated that the impact wouldn't be too severe, considering that DRAM capacity was in a phase of expansion; however, it appears that the datacenter buildout has reached new levels. This has prompted manufacturers like NVIDIA to make drastic decisions, one of them being the use of LPDDR memory for AI servers. Based on what Counterpoint Research has disclosed, this move will trigger a 'seismic shift'.

"The bigger risk on the horizon is with advanced memory as NVIDIA's recent pivot to LPDDR means it is a customer on the scale of a major smartphone maker -- a seismic shift for the supply chain which can't easily absorb this scale of demand." - Counterpoint

As far as memory prices are concerned, it is reported that they are expected to rise by up to 50% within just a few quarters, adding on to the already-estimated 50% YoY increase, which means that within a span of a few months, we are looking at a gigantic 100% rise. One of the main reasons why NVIDIA intends to switch to LPDDR memory over the traditional DDR5 solutions is that it is a more power-efficient platform and, at the same time, features effective "error correction" mechanisms. While this is an optimistic move for the AI industry, it means more trouble for consumers. LPDDR5 and higher-level models have seen widespread adoption in the PC and mobile supply chains, particularly in modern-day smartphones. More importantly, the capacity that NVIDIA requires is significantly higher than what memory suppliers can offer to the company, which means that the supply chain will remain in a 'highly constrained' scenario for months to come.
With this, it would not be incorrect to say that HBM, DDR, LPDDR, GDDR, and even RDIMM modules will be in short supply, affecting every user out there. We hope that the memory markets will rapidly adjust to the evolving supply chain situation, but from the looks of it, it will take several quarters before things return to normal.

[4]

Nvidia's Smartphone-Style Memory Shift To Trigger A 100% Price Surge In Server Memory By 2026: Report - Samsung Electronics Co (OTC:SSNLF), NVIDIA (NASDAQ:NVDA)

The decision by Nvidia Corp (NASDAQ:NVDA) to integrate smartphone-style memory chips into its AI servers could potentially double the prices of server memory by the end of 2026, according to a report.

Demand Spike Pressures Market

The move by Nvidia to use Low-Power Double Data Rate (LPDDR) memory chips, commonly found in smartphones and tablets, instead of the traditional DDR5 chips used in servers, is expected to cause a surge in demand that the industry may not be equipped to handle, according to a Counterpoint Research report on Wednesday. "..a seismic shift for the supply chain which can't easily absorb this scale of demand," wrote Research Director MS Hwang. This shift comes amid a global shortage of legacy memory chips, with manufacturers prioritizing advanced AI-ready components. Nvidia's sudden surge in demand for LPDDR chips could strain the already tight supply chain even further. Counterpoint Research cautioned that the low-end shortage could move up the market as chipmakers weigh shifting more production to LPDDR to support Nvidia's demand. The firm expects this pivot could drive server-memory chip prices to double by late 2026. "2x increase in DRAM module prices for DDR5 64GB RDIMM across Q1 2025 to the end of 2026 in a highly constrained scenario," stated Hwang.

AI Chip Demand Triggers Global Shortage

The global semiconductor industry has been experiencing significant disruptions due to the increasing demand for AI-related chips. In response to the AI boom, Samsung Electronics (OTC:SSNLF) hiked its key memory chip prices by up to 60% in September, triggering a global shortage. This crisis has led to a "panic ordering" situation across the industry. Meanwhile, SK Hynix, a major supplier to Nvidia, has sold out its chip supply for 2026, driven by the AI boom, and is planning to increase investments. This surge in demand for AI-related chips has created a "super cycle" in the memory chip industry, leading to record profits for key suppliers like SK Hynix. Recently, billionaire Peter Thiel fully exited his Nvidia stake and sharply reduced his Tesla (NASDAQ:TSLA) holdings, reflecting what appears to be rising concern over a potential AI market bubble. Benzinga's Edge Rankings place Nvidia in the 98th percentile for growth and the 3rd percentile for value, reflecting a mixed performance profile. Nvidia is set to report its third-quarter earnings after market close on Wednesday.

Price Action: On a year-to-date basis, Nvidia stock climbed 31.13%. On Tuesday, it fell 2.81% to close at $181.36.

Disclaimer: This content was partially produced with the help of AI tools and was reviewed and published by Benzinga editors.

[5]

Nvidia shift to smartphone-style memory could double server-memory prices by end-2026

BEIJING -- Nvidia's move to use smartphone-style memory chips in its artificial intelligence servers could cause server-memory prices to double by late 2026, according to a report published on Wednesday by Counterpoint Research. In the past two months, electronics supply chains around the world have been hit by a shortage of legacy memory chips as manufacturers turned their focus to high-end memory chips suited to semiconductors designed for AI applications. But Counterpoint, a technology-focused market research firm, said there was a new problem on the horizon. Nvidia recently decided to reduce AI server power costs by changing the kind of memory chip it uses to LPDDR, a type of low-power memory chip normally found in phones and tablets, from DDR5 chips, which are typically used in servers. Because each AI server needs more memory chips than a handset, the change is expected to create sudden demand that the industry is not equipped to handle, according to Counterpoint. Nvidia is scheduled to release its earnings report later on Wednesday. Memory suppliers like Samsung Electronics, SK Hynix and Micron are already facing shortages of older dynamic random-access memory products after reducing production to focus on high-bandwidth memory, which is necessary to make the advanced accelerators that power the global AI boom. Counterpoint said tightness at the low end of the market was at risk of spreading upward as chipmakers weigh whether to divert more factory capacity to LPDDR to meet Nvidia's needs. "The bigger risk on the horizon is with advanced memory, as Nvidia's recent pivot to LPDDR means they're a customer on the scale of a major smartphone maker - a seismic shift for the supply chain which can't easily absorb this scale of demand," Counterpoint said. The firm said it expected prices for server-memory chips to double by the end of 2026. 
It also forecast that overall memory chip prices were likely to rise 50 per cent from current levels through the second quarter of 2026. Higher server-memory prices would raise costs for cloud providers and AI developers, potentially adding pressure to data-center budgets that are already stretched by record spending on graphics processing units and power upgrades.

[6]

Nvidia chip shift to smartphone-style memory to double server-memory prices by end-2026 - Counterpoint

BEIJING (Reuters) - Nvidia's move to use smartphone-style memory chips in its artificial intelligence servers could cause server-memory prices to double by late 2026, according to a report published on Wednesday by Counterpoint Research. In the past two months, electronics supply chains around the world have been hit by a shortage of legacy memory chips as manufacturers turned their focus to high-end memory chips suited to semiconductors designed for AI applications. But Counterpoint, a technology-focused market research firm, said there is a new problem on the horizon. Nvidia recently decided to reduce AI server power costs by changing the kind of memory chip it uses to LPDDR, a type of low-power memory chip normally found in phones and tablets, from DDR5 chips, which are typically used in servers. Nvidia is scheduled to release its earnings report later on Wednesday. Because each AI server needs more memory chips than a handset, the change is expected to create sudden demand that the industry is not equipped to handle, according to Counterpoint. Memory suppliers like Samsung Electronics, SK Hynix and Micron are already facing shortages of older dynamic random-access memory products after reducing production to focus on high-bandwidth memory, which is necessary to make the advanced accelerators that power the global AI boom. Counterpoint said tightness at the low end of the market is at risk of spreading upward as chipmakers weigh whether to divert more factory capacity to LPDDR to meet Nvidia's needs. "The bigger risk on the horizon is with advanced memory, as Nvidia's recent pivot to LPDDR means they're a customer on the scale of a major smartphone maker - a seismic shift for the supply chain which can't easily absorb this scale of demand," Counterpoint said. The firm said it expected prices for server-memory chips to double by the end of 2026.
Higher server-memory prices would raise costs for cloud providers and AI developers, potentially adding pressure to data-centre budgets that are already stretched by record spending on graphics processing units and power upgrades. (Reporting by Eduardo Baptista; Editing by Thomas Derpinghaus)

Nvidia's transition from DDR5 to smartphone-style LPDDR memory chips in AI servers is expected to create massive supply chain disruptions and potentially double server memory prices by late 2026, according to Counterpoint Research.

Nvidia's Strategic Memory Shift Creates Supply Chain Crisis

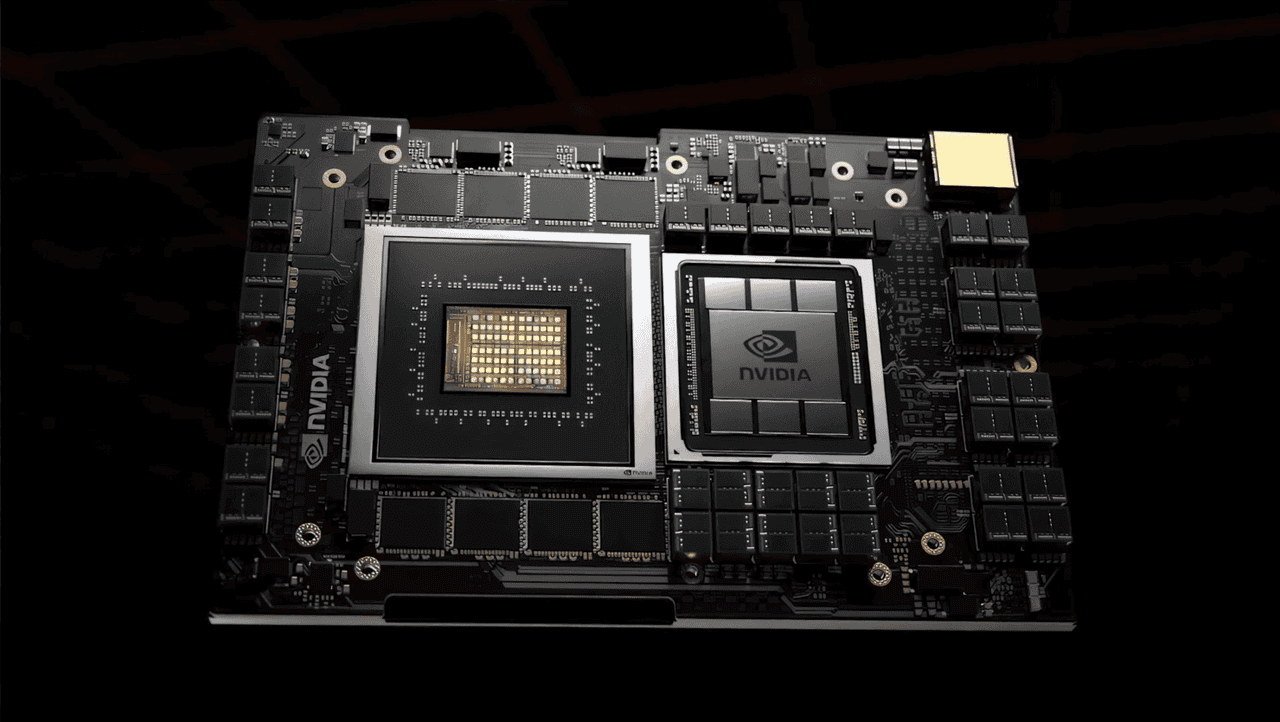

Nvidia has made a pivotal decision that could reshape the global memory chip market, transitioning from traditional DDR5 memory chips to smartphone-style LPDDR (Low-Power Double Data Rate) memory in its artificial intelligence servers. This strategic move, aimed at reducing AI server power costs, is expected to trigger a seismic shift in the semiconductor supply chain with far-reaching economic implications [1].

Source: BNN

The chip giant's decision comes at a time when electronics supply chains worldwide are already experiencing significant strain. Over the past two months, manufacturers have been grappling with shortages of legacy memory chips as they redirect their focus toward high-end memory components designed specifically for AI applications [2].

Industry Impact and Price Projections

According to Counterpoint Research, a technology-focused market research firm, this transition represents "a seismic shift for the supply chain which can't easily absorb this scale of demand." The fundamental challenge lies in the volume requirements: each AI server demands significantly more memory chips than a typical smartphone or tablet, creating sudden demand that the industry is not equipped to handle [3].

Source: Wccftech

The financial implications are staggering. Counterpoint Research projects that server-memory chip prices could double by the end of 2026, with overall memory chip prices likely to rise 50% from current levels through the second quarter of 2026 [4]. Wccftech, adding a reported rise of up to 50% within a few quarters to an already-estimated 50% year-over-year increase, puts the combined rise at about 100% within a span of a few months [3].

Supply Chain Constraints and Market Dynamics

Major memory suppliers including Samsung Electronics, SK Hynix, and Micron are already facing significant challenges. These companies have been reducing production of older dynamic random-access memory products to focus on high-bandwidth memory, which is essential for manufacturing the advanced accelerators powering the global AI boom [5].

The situation has created what industry experts describe as a "panic ordering" scenario. Samsung Electronics notably hiked its key memory chip prices by up to 60% in September, while SK Hynix, a major Nvidia supplier, has reportedly sold out its chip supply for 2026. This surge in demand has created what analysts term a "super cycle" in the memory chip industry, leading to record profits for key suppliers.
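The percentage figures quoted in this article (a 50% rise, a further rise of up to 50%, and a doubling) are easy to misread when combined. The short Python sketch below is illustrative only: the function names are hypothetical, and the assumption that successive increases compound on one another is ours, not something the cited reports state. It contrasts simply adding two 50% increases, as the 100% figure does, with compounding them, and shows how much further prices would need to rise after a 50% increase to reach a doubling.

```python
def additive(*pct_increases):
    """Add percentage increases as if each applied to the original price."""
    return sum(pct_increases)


def compounded(*pct_increases):
    """Apply each percentage increase on top of the previous one (assumed model)."""
    factor = 1.0
    for pct in pct_increases:
        factor *= 1 + pct / 100
    return (factor - 1) * 100


def remaining_increase(target_factor, already_pct):
    """Further percentage rise needed to reach target_factor after already_pct."""
    return (target_factor / (1 + already_pct / 100) - 1) * 100


# Figures taken from the article: two successive 50% increases, and a 2x target.
print(f"additive:   {additive(50, 50):.0f}%")
print(f"compounded: {compounded(50, 50):.0f}%")
print(f"extra rise needed to double after +50%: {remaining_increase(2.0, 50):.1f}%")
```

Under the compounding assumption, two 50% increases exceed a doubling, while a doubling after an initial 50% rise needs only about a further third. Which reading applies depends on the baseline each forecast uses, which the sources do not spell out.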

Broader Economic Implications

The ripple effects of Nvidia's decision extend far beyond the immediate AI server market. Higher server-memory prices would raise costs for cloud providers and AI developers, potentially adding pressure to data-center budgets that are already stretched by record spending on graphics processing units and power upgrades [1].

Counterpoint Research warns that the tightness at the low end of the market risks spreading upward as chipmakers weigh whether to divert more factory capacity to LPDDR production to meet Nvidia's substantial requirements. This capacity reallocation could affect the entire spectrum of memory products, including HBM, DDR, LPDDR, GDDR, and RDIMM modules, potentially impacting every segment of the technology market [3].

Source: ET

Summarized by

Navi
