Qualcomm's AR1+ Gen 1 Chip Brings On-Device AI to Smart Glasses

7 Sources

[1]

How a Tiny Chip Could Power Up Smart Glasses Like Meta Ray-Bans Next Year

That might be changing soon. Qualcomm just announced its newest chip for smart glasses, which will roll out in products starting next year. It promises more power efficiency, support for better cameras and even AI that'll work offline on glasses without a cloud connection. The new AR1 Plus Gen 1 chip is an upgrade from chips on smaller smart glasses like Meta Ray-Bans. It's not meant for higher-end AR glasses that project 3D images, but it could very well show up on the types of display-enabled glasses that Google recently showed off at its developer conference and on Meta's next generation of Ray-Bans. I spoke to Qualcomm's head of XR, Ziad Asghar, about the news and what it'll mean for glasses you could be buying soon. The Meta Ray-Bans I wear these days already look like real glasses, just slightly chunky ones. Asghar emphasizes that the new chip is 20% smaller. That's going to help future smart glasses look even more like regular glasses, with thinner frames, for example. "If I've got to make it fit on the side of the glass," Asghar told me about the chip, "I need to get that dimension really small." A tinier chip also leaves more room to pack in battery capacity. The power efficiency of the new chip will actually be around 5% better despite its smaller size. That doesn't sound like much, but Asghar also sees a future shift to more off-glasses processing on phones that will also help battery life. At the moment, devices like Meta Ray-Bans use cloud processing for AI. "What's truly different now is AI," Asghar says about the current state of smart glasses. "I keep saying this, but I truly believe it, that it's going to help XR use cases probably more than any other space." One of the new tricks Qualcomm's new chip can do is on-device AI running small language models, letting it do more offline things with voice commands. 
Qualcomm's showing off some of these demos at the Augmented World Expo conference I'm attending in Long Beach, California, so I'll share thoughts on how it works soon. Meta Ray-Bans can use voice commands to take photos while offline, but the bag of voice tricks for future glasses could grow even bigger. Think fitness, music playback and more. "This device has a character as a standalone device as well. This is not just always linked or tethered to another device or tethered to the cloud. And I think that will open up quite a lot of scenarios," says Asghar. Meta Ray-Bans take better photos and videos than you might expect, but the video is wide-angle and still sometimes jittery when I'm moving. Asghar says the new chips will improve camera quality. "Let's say you're sitting in a restaurant at night, in dim light, and I have a menu in front of me that I want to translate. That's what we're working on, how to improve the low-light capability." Asghar also says camera stabilization will be better for videos and photos. I ask whether zoom features could come to glasses like these, which would likely require in-glasses displays, something these chips support. Asghar points to digital cropping as an option, and possibly even eye-tracking-based zoom control in the future. Smart glasses are headed toward a deeper relationship with our phones and other wearables, and Qualcomm's already a player in all those spaces: it's also one of the key partners for Google's Android XR. Asghar sees watches as part of a wearable halo of gear that'll all work with glasses soon enough, but today he sees even more potential in smaller wearables like rings. "You could essentially use a ring, and you could use it very discreetly. Your hand could be down, and nobody knows," Asghar says.
Qualcomm's demos at AWE show off a Bluetooth smart ring for gesture controls with glasses, hinting at decisions that could be coming soon from other partners. Upcoming glasses could also use their own processing pucks. Xreal is adopting a puck for its first experimental Android XR device, Project Aura, and Meta has already done the same with its concept Orion glasses. Part of the appeal of a puck is that it could let glasses work around the current limits of phones and their OSes (basically, Android and iOS). "The great thing about glasses is it gives our cloud players the path to be able to bring their AI and LLMs and agents to consumers," Asghar says. "Not every cloud player has smartphone assets." Pucks could be a sign that while Android XR has now been announced as a future bridge to AR and VR devices, Google's still going to need some time to figure out how the phone-to-glasses link actually works. Apple, meanwhile, hasn't enabled any integrated smart glasses support for iOS at all, beyond individual apps like Meta's that are walled off from Siri and deeper phone access. I can't get through more than half a day of Ray-Bans use at best before I need a recharge. Asghar admits that glasses have a battery life problem at the moment but says some solutions could pop up. This new chip may not boost battery life that much, but more on-phone processing or connected pucks might help. "Today, the battery mostly resides on just one side. And we know that people are looking at basically doubling up the battery, having a dual-cell battery on both of the glasses legs to increase the capacity," he says. "Or maybe they can come up with an interesting design that allows a replaceable battery." AI leader OpenAI bought Jony Ive's hardware company, which is working on some sort of AI device that might be pendant-based instead of sitting on your face, or could sit on a desk.
It's entirely possible that more camera-based AI wearables like the failed Humane AI Pin will be coming too. Asghar acknowledges that Qualcomm's processors could end up in devices other than glasses. "This is the great part, right?" he told me. "People are gonna really experiment with form factors. You don't get this opportunity very often. The smartphone is pretty settled. This [XR] space is not settled. An AI agent device can be smart glasses. It can also be something on your body." But Asghar knows that whatever comes next has to clear a higher bar than the AI device failures of the past. "There have been pretty significant failures. People do have very high expectations from products like this. They need to run, and they need to run very well." And hopefully last longer than a few hours on a charge.

[2]

Qualcomm says its new AR1+ Gen 1 chip can handle AI directly on smart glasses

It's also promising improved power management and premium image quality. Qualcomm has launched its latest processor for smart glasses, and though it's a modest upgrade over the previous chip, it has a new trick. The Snapdragon AR1+ Gen 1 can run AI directly on devices with no need for a smartphone or cloud connection, allowing users to go out or do chores with only their smart glasses, the company claims. The chip could appear in next-gen AR glasses from the likes of Meta and XReal. Smart glasses often require large temple arms to accommodate chips and other components, but the AR1+ Gen 1 is 28 percent smaller than the AR1 Gen 1, allowing for a 20 percent reduction in temple height. At the same time, it requires less power across key use cases including computer vision, wake with voice, Bluetooth playback and video streaming. Qualcomm also promises "premium" image quality via technologies like binocular display support, image stabilization and a massive multi-frame engine. The key feature, though, is the on-glass AI powered by Qualcomm's 3rd-gen Hexagon NPU, which can run small language models (SLMs) of up to 1 billion parameters on the glasses. That allows it to run AI assistants that use SLMs like Llama 1B, with users speaking commands and seeing the results displayed on the glasses as text. "While on stage, I was at the 'supermarket' and asked my glasses for help with fettuccine alfredo I needed to make for my daughter's birthday party," wrote Qualcomm SVP of XR Ziad Asghar. "This demonstration was a world's first: an Autoregressive Generative AI model running completely on a pair of smart glasses." Qualcomm shouted out Meta's Ray-Ban glasses as well as Meta's chunky Orion AR glasses prototype as examples of where smart glass technology is heading. It then added that tech like its Snapdragon AR1+ Gen 1 chip will enable "sleeker form factors that don't compromise on the ability to run AI models." 
Reading between the lines, you can expect the chip to appear in ever-slimmer standalone AI-powered smart glasses in the near future.

[3]

Qualcomm announces smaller Snapdragon AR1+ Gen 1 chip for smart glasses

At the Augmented World Expo (AWE) 2025 today, Qualcomm announced the Snapdragon AR1+ Gen 1 chip for smart glasses, while sharing its broader vision for the form factor. In 2023, Qualcomm announced the Snapdragon AR1 Gen 1, which powers Ray-Ban Meta glasses. The chipmaker is now following that with the AR1+ Gen 1. With a 26% smaller package, you can expect up to a 20% temple height reduction for more compact glasses. Meanwhile, better power management means Bluetooth playback, video streaming, computer vision, and wake with voice are less power-intensive. The Snapdragon AR1+ Gen 1 also improves image quality, but Qualcomm is particularly highlighting the ability to run a small language model (SLM) on-device. It demoed an assistant powered by Llama 1B where "AI inferencing [was] done on the glasses without relying on the cloud or an internet connection." As Qualcomm's Ziad Asghar put it: "The premise is simple: AI glasses are set to operate independently without needing to be paired with a smartphone or the cloud. In the near future, I will be able to leave my phone in my pocket or in the car and just wear my smart glasses during a supermarket run, as I showed off during my AWE demo." However, Qualcomm does see smart glasses working with your other devices, and it's particularly interested in how smartwatches and smart rings could "enable new modalities of input." Besides gesture and 3DoF (rotational movement) control, they could provide motion tracking and health monitoring. Qualcomm also has the AR2 Gen 1 as its more premium glasses chip for immersive augmented experiences. For headsets, its lineup spans the XR1 Gen 1 to the XR2+ Gen 2. Qualcomm reiterated its work with Google and Samsung on Android XR, and will be sharing at AWE how Snapdragon Spaces developers can create apps for the upcoming platform.

[4]

Snapdragon AR1+ is the smart glasses breakthrough I've been waiting for - here's why

I just saw the future of smart glasses, and it's powered by Qualcomm's new chip. Qualcomm has just announced the Snapdragon AR1+ Gen 1 chipset -- sure to power many of the best smart glasses of the near future with offline AI capabilities and entirely solo operation. So far, smart glasses like the Ray-Ban Meta specs need a smartphone connection to run all their tasks. And looking ahead, companies are looking to a computational puck that you pocket to take the heavy lifting off your glasses. That's the imbalance that Qualcomm's new chip aims to start fixing -- bringing us closer to my dream future for smart glasses: a device that does it all directly on the specs without needing an additional component. And after seeing a demo myself, I believe the team's onto something. So far, Qualcomm has been betting on three different ways of doing augmented reality on smart glasses. However, the chip company also sees a fourth, one that doesn't rely on some form of external device or cloud processing. That is, of course, having the computational potential built into the glasses themselves, but getting it down to a small enough size while maintaining sufficient performance for day-to-day use has not been possible...well, that is, until now. Snapdragon AR1+ Gen 1 is launching alongside the pre-existing XR2+ to provide enough power to run multi-modal AI capabilities entirely on-device for ultra-low latency, all while being 26% smaller than previous generations of this chip. AR1+ Gen 1 also brings enhanced image quality for displays, performance improvements and drastic power efficiency boosts too. To make it work, you need models on the smaller side to fit - so-called Small Language Models (SLMs), but the result is, frankly, incredible. Running Llama 1B on the RayNeo X3 Pro smart glasses, Qualcomm SVP of XR & Spatial Computing Ziad Asghar ran through a complex set of queries about making fettuccine alfredo for his daughter's birthday party. 
Throughout each bit, Asghar receives both an audio response and text appearing on the glasses' lenses. The demonstration I saw is the kind of thing you'd expect to see at a lot of AI-based keynotes, but the real magic here is that all of this is happening entirely locally on the device, no phone or cloud needed. Just the processor on the specs themselves! This is a world's first, and it's proof that we're heading in the right direction. Way back at CES 2024, I predicted an AR glasses revolution. Granted, I was about a year too early, but it's finally beginning to happen -- the parallel development paths of VR headsets and smart glasses are starting to merge. Qualcomm is hedging its bets at the moment, but the finish line everyone is racing towards is something with the power of a VR system crammed into something as small as a pair of specs. Snapdragon AR1+ Gen 1 is a huge step towards that, and I'm looking forward to seeing exactly what this silicon is able to pull off in glasses -- maybe giving Android XR a handy boost.

[5]

Qualcomm shares its vision for the future of smart glasses with on-glass Gen AI

Qualcomm has enabled what one of its executives described as one of his strangest and "most interesting conversations - and it was with a pair of generative AI-powered smart glasses." In a talk at Augmented World Expo, Ziad Asghar, senior vice president of XR & spatial computing at Qualcomm, said that the chat wasn't just a simple demo. It was a glimpse into how Qualcomm is turning AI glasses, which have long been considered an accessory, into a standalone, comprehensively capable device. The company also unveiled its Snapdragon AR1+ Gen 1 processor, which is 26% smaller than previous generations, to power the demo. "On Tuesday, as I stood on stage at AWE USA, the world's largest XR conference, I chatted with an AI assistant through a pair of RayNeo X3 Pro smart glasses powered by Snapdragon technology - with AI inferencing done on the glasses without relying on the cloud or an internet connection," Asghar said in a statement. He said the premise is simple: AI glasses are set to operate independently without needing to be paired with a smartphone or the cloud. "In the near future, I will be able to leave my phone in my pocket or in the car and just wear my smart glasses during a supermarket run, as I showed off during my AWE demo," Asghar said. "While on stage, I was at the 'supermarket' and asked my glasses for help with fettuccine alfredo I needed to make for my daughter's birthday party." In response, the AI assistant, running Llama 1B, a small language model (SLM), understood the specific request and provided him with the information he needed through audio and text displayed in the lens of his glasses. This demonstration was a world's first: an Autoregressive Generative AI model running completely on a pair of smart glasses. No phone. No cloud. Just the processor powering the glasses themselves. And this industry milestone was pulled off in front of a live audience, he said. 
Topping this off was the announcement of our Snapdragon AR1+ Gen 1 processor, which is 26% smaller than previous generations and brings enhanced image quality, size, power improvement and the ability to run SLMs. All four of these traits are critical for compact smart glasses. Together, they open the door to a revolution in AI smart glasses, with thinner, lighter and more varied glass designs paired with enough power to run AI assistants right on the device. So, while the demo was just one example of what you can do with completely on-device AI on smart glasses, the benefits that stem from the work going on at Qualcomm are long-lasting and massive.

Expand and evolve

There isn't one path that XR headsets and smart glasses will take, especially since we also offer mixed reality processors such as Snapdragon XR2 and Snapdragon XR2+ that also have significant inferencing capability on the device. Asghar said he anticipates several different form factors, from standalone glasses powerful enough to run AI models themselves, to more lightweight frames linked to phones or nearby small computing "pucks" that can link anything from a car to a tablet. What Qualcomm is doing with the portfolio is getting ready for the future. Whether it's cloud computing, on-device, or a hybrid path that incorporates both, the boost in on-device AI capabilities will offer a seamless and ultra-low latency user experience that's also security-focused. That will be critical as AI-powered smart glasses find their way into sectors with mission-critical needs and users demand more personalization, more privacy features, and an end-to-end agentic experience. "We've already seen significant momentum in the XR industry over the last year. In December, we collaborated with Google and Samsung to launch Android XR, an operating system designed with AI at the core of the XR experience," he said. 
This comes as the industry continues to expand, with Meta's Ray-Ban Glasses, as well as more ambitious hardware such as Meta Orion, which Meta bills as its first true augmented reality glasses with their own digital overlay. In addition, Asghar pointed to glasses from Rokid, RayNeo, XREAL and more. In March, BleeqUp launched a pair of AI-powered sports glasses. Imagine what these companies will be able to accomplish with smaller, more powerful platforms like Snapdragon AR1+ Gen 1, enabling sleeker form factors that don't compromise on the ability to run AI models, Asghar said.

Smarter, more aware

While getting smart glasses down to a reasonable size and fit is critical, another advance that Snapdragon AR1+ Gen 1 brings is equally important to where they're evolving: camera capabilities typically found in premium smartphones. That ability to see the world that you see - with every minute detail - will open new avenues of multimodal inputs. That capability is critical for AI to not only better understand what you see, but also connect the dots in a way that lets it proactively offer suggestions or additional context on an object or location. And while smart glasses will be able to run SLMs on their own, that doesn't mean they won't work in tandem with a constellation of devices around you, whether it's your smartphone or PC. In fact, I see smartwatches and new devices such as smart rings or other wearable sensors enabling new modalities of input as they work in concert with your glasses. At Qualcomm Technologies, we are preparing for a multifaceted future with a wide range of device combinations by creating a modular architecture that allows our partners to tap into the spatial computing industry to deliver a superior experience to consumers. That's why I know this conversation with my smart glasses' AI assistant is such a pivotal moment - it really marked the beginning of something huge. 
The work we're doing is just starting to unlock the game-changing potential of a deeper and more personalized agentic experience. "A world's first on-glass Gen AI demonstration: Qualcomm's vision for the future of smart glasses," said Qualcomm. "Our live demonstration of a generative AI assistant running completely on smart glasses - without the aid of a phone or the cloud -- and the reveal of the new Snapdragon AR1+ platform spark new possibilities for augmented reality."

[6]

Qualcomm announces its new and smaller Snapdragon AR1+ Gen 1 for smart glasses - Phandroid

Qualcomm just took the stage at AWE 2025 to unveil the Snapdragon AR1+ Gen 1, its newest chip for smart glasses -- and it's clear the company sees a big future for wearable AR. This update builds on the original AR1 Gen 1, which already powers the Ray-Ban Meta glasses. The new AR1+ Gen 1 is even smaller, cutting the chip size by 26%. That means smart glasses with a 20% reduction in temple height, making them noticeably more compact and wearable. According to Qualcomm, "The premise is simple: AI glasses are set to operate independently without needing to be paired with a smartphone or the cloud. In the near future, I will be able to leave my phone in my pocket or in the car and just wear my smart glasses during a supermarket run, as I showed off during my AWE demo." Qualcomm isn't just making things smaller. The Snapdragon AR1+ Gen 1 delivers improved power management for longer battery life, even with Bluetooth streaming, video, and computer vision running. Wake with voice is also less demanding, so you won't kill your battery just asking questions. The new chip can run a small language model (SLM) directly on the glasses. In its demo, Qualcomm showed an assistant using Llama 1B that answered questions entirely on-device -- no cloud or internet required. The company also teased how smartwatches and smart rings might add even more ways to control and monitor smart glasses, from gestures to motion tracking and health data. On the premium side, Qualcomm still has the AR2 Gen 1 chip for more immersive AR, plus its XR lineup for headsets. Qualcomm also reaffirmed its close work with Google and Samsung on Android XR, promising more developer details soon.

[7]

Qualcomm Unveils Smart Glasses Gen AI Without Phone Or Cloud: 'Beginning Of Something Huge' - Qualcomm (NASDAQ:QCOM)

Technology giant Qualcomm Inc. (NASDAQ:QCOM) aims to diversify its revenue beyond handsets in the future, and smart glasses could be one area of growth. What Happened: Qualcomm demonstrated on-glass Gen AI without a phone or the cloud at the Augmented World Expo this week. The live demonstration from Qualcomm Head of XR Ziad Asghar included a hypothetical scenario of leaving a phone in the car, running into the supermarket to get some items, and asking the glasses about making fettuccine alfredo. "My chat wasn't just a simple demo, but a glimpse into how we're turning AI glasses, which have long been considered an accessory, into a standalone, comprehensive capable device," Asghar said. The RayNeo X3 Pro smart glasses used in the demonstration are powered by Qualcomm's Snapdragon technology. Qualcomm calls Tuesday's demonstration the world's first Gen AI model running on a pair of smart glasses without being paired with a phone or the cloud. "Just the processor powering the glasses themselves. And this industry milestone was pulled off in front of a live audience," Asghar added. Qualcomm also announced the Snapdragon AR1+ Gen 1 processor at the Augmented World Expo. The company said the processor is 26% smaller than previous generations and brings enhanced image quality, size, power improvement and the ability to run SLMs. "All four of these traits are critical for compact smart glasses." Asghar said these features can help open the door "to a revolution in AI smart glasses" and run AI assistants right on the device. Why It's Important: Asghar said Tuesday's demonstration was one of many examples of what's possible with on-device AI on smart glasses, and of the work being done by Qualcomm in the augmented reality and mixed reality spaces. 
"I anticipate several different form factors, from standalone glasses powerful enough to run AI models themselves, to more lightweight frames linked to phones or nearby small computing 'pucks' that can link anything from a car to a tablet. What we're doing with our portfolio is getting ready for the future." Asghar said on-device AI capabilities for smart glasses could also be critical for sectors that need more security and privacy functions. The demonstration and new Snapdragon processor announcement came as Qualcomm gained momentum in the smart glasses sector. The company collaborated with Alphabet Inc (GOOG, GOOGL) and Samsung in December to launch Android XR, an operating system for extended reality with AI at the core of the experience. Qualcomm has also partnered with Meta Platforms (META) on projects like the Meta Quest 3 and Ray-Ban Meta glasses. "Imagine what these companies will be able to accomplish with smaller, more powerful platforms like Snapdragon AR1+ Gen 1, enabling sleeker form factors that don't compromise on the ability to run AI models." Asghar said Qualcomm is preparing for a future of varied device combinations that let its partners provide superior experiences to customers. "That's why I know this conversation with my smart glasses' AI assistant is such a pivotal moment - it really marked the beginning of something huge." Asghar said Qualcomm is just beginning to unlock "the game-changing potential." QCOM Price Action: Qualcomm stock has traded between $120.80 and $230.63 over the last year. Qualcomm stock is up 2.7% year-to-date in 2025.

Qualcomm unveils the Snapdragon AR1+ Gen 1 chip for smart glasses, enabling on-device AI processing and improved performance in a smaller package.

Qualcomm Unveils Next-Generation Chip for Smart Glasses

Qualcomm has announced its latest processor for smart glasses, the Snapdragon AR1+ Gen 1, which brings on-device artificial intelligence (AI) capabilities and improved performance. The chip could power the next generation of smart glasses, potentially including products from Meta and XReal [1][2].

Key Features and Improvements

The AR1+ Gen 1 chip boasts several notable enhancements over its predecessor:

- Size Reduction: The chip package is 26% smaller than the previous generation's (source [2] puts the figure at 28%), allowing for up to a 20% reduction in temple height on smart glasses [2][3]. This paves the way for sleeker, more compact designs that more closely resemble traditional eyewear.
- Power Efficiency: Despite its smaller size, the new chip offers improved power management across various use cases, including computer vision, wake with voice, Bluetooth playback, and video streaming [2][4].
- Image Quality: Qualcomm promises "premium" image quality through technologies such as binocular display support, image stabilization, and a multi-frame engine [2].

On-Device AI Capabilities

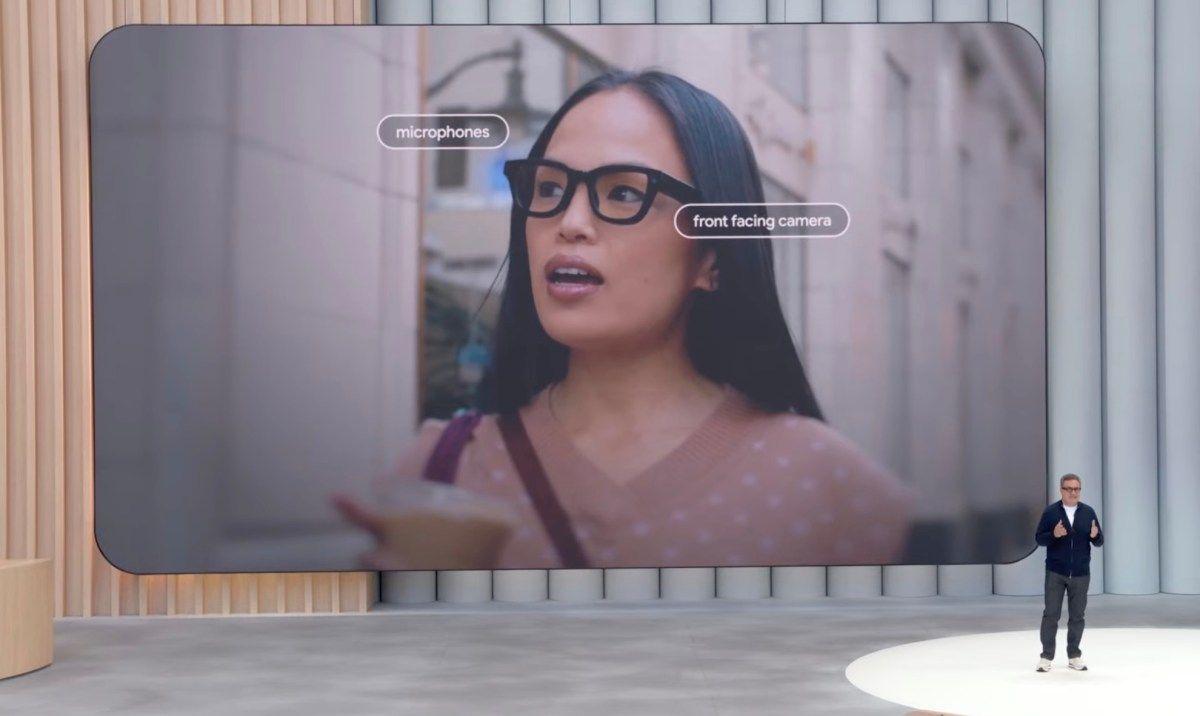

Source: Engadget

The standout feature of the AR1+ Gen 1 is its ability to run AI directly on the device without requiring a smartphone or cloud connection [1][2][4]. This is made possible by Qualcomm's 3rd-gen Hexagon NPU, which can run small language models (SLMs) of up to 1 billion parameters on-glass [2].

Ziad Asghar, Qualcomm's Senior Vice President of XR & Spatial Computing, demonstrated this capability at the Augmented World Expo (AWE) 2025. Using RayNeo X3 Pro smart glasses powered by Snapdragon technology, Asghar showcased an AI assistant running the Llama 1B model completely on-device [4][5].

Implications for Future Smart Glasses

The AR1+ Gen 1 chip represents a significant step towards more capable and independent smart glasses. Key implications include:

- Standalone Functionality: Future smart glasses may operate independently without needing to be paired with a smartphone or relying on cloud processing [3][5].
- Improved User Experience: On-device AI processing enables ultra-low latency and enhanced privacy for users [5].
- Diverse Applications: The chip's capabilities open up possibilities for use in various sectors, including those with mission-critical needs [5].

Industry Outlook and Partnerships

Qualcomm's announcement comes amid growing momentum in the XR (extended reality) industry. The company collaborated with Google and Samsung to launch Android XR, an operating system designed with AI at the core of the XR experience [5].

Several companies are already building smart glasses that could benefit from this new chip, including Meta, with its Ray-Ban glasses and the more ambitious Orion prototype [5]. Other players in the market include Rokid, RayNeo, XREAL, and BleeqUp [5].

Future Vision for Smart Glasses

Qualcomm envisions a future where smart glasses work in tandem with other devices, such as smartphones, PCs, smartwatches, and even smart rings [5]. The company is preparing for this multifaceted future by creating a modular architecture that allows partners to tap into the spatial computing industry [5].

As the technology continues to evolve, we can expect to see smart glasses become more powerful, versatile, and integrated into our daily lives, potentially revolutionizing how we interact with digital information and AI assistants.

Summarized by Navi
