Study Reveals Parents Trust AI Over Health Professionals for Children's Health Information

2 Sources

[1]

Research reveals AI's influence on parents seeking health care information

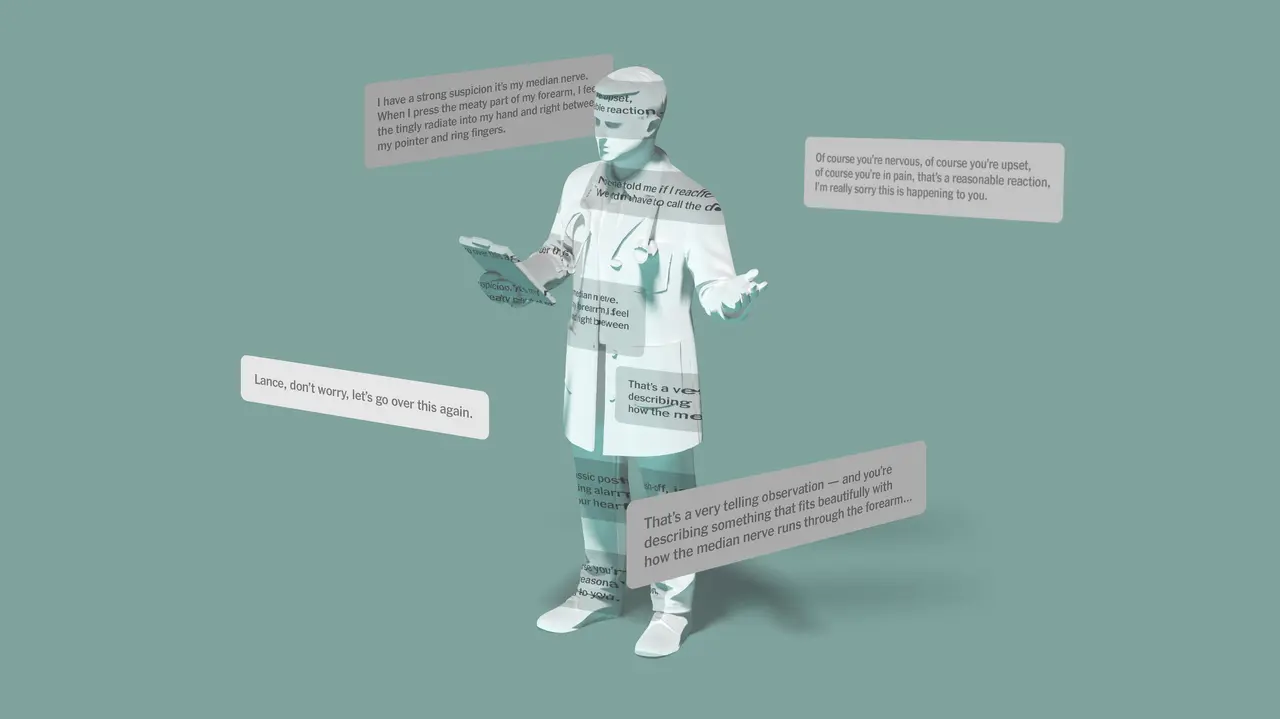

University of Kansas, Oct 9, 2024

New research from the University of Kansas Life Span Institute highlights a key vulnerability to misinformation generated by artificial intelligence and a potential model to combat it. The study, appearing in the Journal of Pediatric Psychology, reveals parents seeking health care information for their children trust AI more than health care professionals when the author is unknown, and parents also rate AI-generated text as credible, moral and trustworthy.

"When we began this research, it was right after ChatGPT first launched -- we had concerns about how parents would use this new, easy method to gather health information for their children," said lead author Calissa Leslie-Miller, KU doctoral student in clinical child psychology. "Parents often turn to the internet for advice, so we wanted to understand what using ChatGPT would look like and what we should be worried about."

Leslie-Miller and her colleagues conducted a cross-sectional study with 116 parents, aged 18 to 65, who were given health-related text, such as information on infant sleep training and nutrition. They reviewed content generated by both ChatGPT and by health care professionals, though participants were not informed of the authorship.

"Participants rated the texts based on perceived morality, trustworthiness, expertise, accuracy and how likely they would be to rely on the information," Leslie-Miller said.

According to the KU researcher, in many cases parents couldn't distinguish between the content generated by ChatGPT and that by experts. When there were significant differences in ratings, ChatGPT was rated as more trustworthy, accurate and reliable than the expert-generated content.

"This outcome was surprising to us, especially since the study took place early in ChatGPT's availability," said Leslie-Miller. "We're starting to see that AI is being integrated in ways that may not be immediately obvious, and people may not even recognize when they're reading AI-generated text versus expert content."

Leslie-Miller said the findings raise concerns, because generative AI now powers responses that appear to come from apps or the internet but are actually conversations with an AI.

"During the study, some early iterations of the AI output contained incorrect information," she said. "This is concerning because, as we know, AI tools like ChatGPT are prone to 'hallucinations' -- errors that occur when the system lacks sufficient context."

Although ChatGPT performs well in many cases, Leslie-Miller said the AI model isn't an expert and is capable of generating wrong information.

"In child health, where the consequences can be significant, it's crucial that we address this issue," she said. "We're concerned that people may increasingly rely on AI for health advice without proper expert oversight."

Leslie-Miller's co-authors were Stacey Simon of the Children's Hospital Colorado & University of Colorado School of Medicine in Aurora, Colorado; Kelsey Dean of the Center for Healthy Lifestyles and Nutrition at Children's Mercy Hospital in Kansas City, Missouri; Dr. Nadine Mokhallati of Altasciences Clinical Kansas in Overland Park; and Christopher Cushing, associate professor of clinical child psychology at KU and associate scientist with the Life Span Institute.

"Results indicate that prompt engineered ChatGPT is capable of impacting behavioral intentions for medication, sleep and diet decision-making," the authors report.

Leslie-Miller said the life-and-death importance of pediatric health information helps to amplify the problem, but that the possibility that generative AI can be wrong and users may not have the expertise to identify inaccuracies extends to all topics. She suggested consumers of AI information need to be cautious and only rely on information that is consistent with expertise that comes from a nongenerative AI source.

"There are still differences in the trustworthiness of sources," she said. "Look for AI that's integrated into a system with a layer of expertise that's double-checked -- just as we've always been taught to be cautious about using Wikipedia because it's not always verified. The same applies now with AI -- look for platforms that are more likely to be trustworthy, as they are not all equal."

Indeed, Leslie-Miller said AI could be a benefit to parents looking for health information so long as they understand the need to consult with health professionals as well.

"I believe AI has a lot of potential to be harnessed. Specifically, it is possible to generate information at a much higher volume than before," she said. "But it's important to recognize that AI is not an expert, and the information it provides doesn't come from an expert source."

[2]

Study finds parents relying on ChatGPT for health guidance about children


A University of Kansas study finds that parents tend to trust AI-generated health information for their children more than content from health professionals when the author is unknown, raising concerns about the potential spread of misinformation.

AI Outperforms Health Professionals in Parent Trust for Child Health Information

A study from the University of Kansas Life Span Institute has uncovered a concerning trend in how parents seek health information for their children. The research, published in the Journal of Pediatric Psychology, reveals that parents trust AI-generated content more than content produced by health care professionals when the author is unknown [1][2].

Study Methodology and Key Findings

Led by Calissa Leslie-Miller, a doctoral student in clinical child psychology, the cross-sectional study involved 116 parents aged 18 to 65. Participants were presented with health-related texts on topics such as infant sleep training and nutrition, generated by both ChatGPT and health care professionals [1].

Key findings include:

- Parents often couldn't distinguish between AI-generated and expert-created content.

- When differences were noted, ChatGPT's content was rated as more trustworthy, accurate, and reliable.

- AI-generated text was perceived as credible, moral, and trustworthy by parents [2].

Implications and Concerns

The study's results raise significant concerns about the potential spread of misinformation in child health care. Leslie-Miller emphasized the risks associated with AI's "hallucinations" - errors occurring when the system lacks sufficient context [1].

"In child health, where the consequences can be significant, it's crucial that we address this issue," Leslie-Miller stated. "We're concerned that people may increasingly rely on AI for health advice without proper expert oversight." [2]

AI's Impact on Health Decision-Making

The research team, with co-authors from Children's Hospital Colorado, Children's Mercy Hospital, Altasciences and the University of Kansas, concluded that "prompt engineered ChatGPT is capable of impacting behavioral intentions for medication, sleep and diet decision-making" [1][2]. This finding underscores the potential influence of AI on critical health choices made by parents for their children.

Recommendations for Consumers

While acknowledging AI's potential benefits in generating high volumes of information, the researchers advise caution:

- Verify information with non-AI expert sources.

- Seek AI integrated with expert oversight and fact-checking.

- Treat AI-generated health information with the same caution as crowd-sourced platforms like Wikipedia, which are not always verified [1].

Future Implications

The study highlights the need for increased awareness and education about AI's limitations in providing expert health advice. As AI continues to integrate into various information channels, distinguishing between AI-generated and expert-created content becomes increasingly crucial for consumers, especially in sensitive areas like child health care [2].

This research serves as a wake-up call for both the healthcare industry and AI developers to ensure that AI-generated health information is properly vetted and contextualized to prevent the spread of misinformation and potential health risks.

Summarized by Navi
