EU Unveils AI Code of Practice: Balancing Innovation with Regulation

18 Sources

[1]

Everything tech giants will hate about the EU's new AI rules

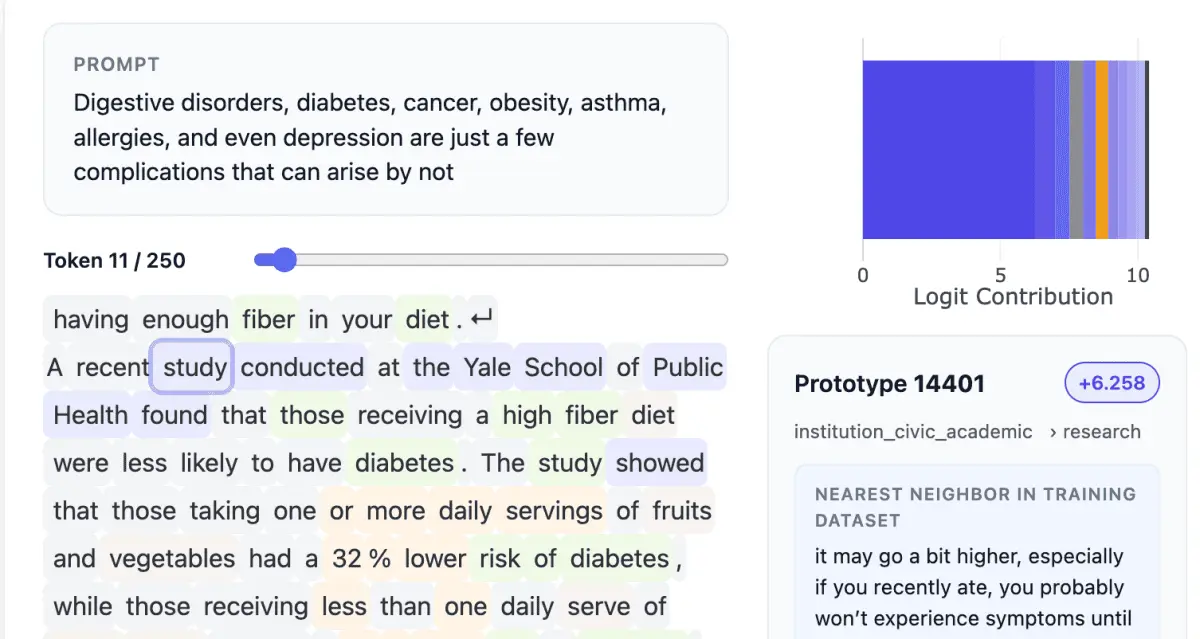

The European Union is moving to force AI companies to be more transparent than ever, publishing a code of practice Thursday that will help tech giants prepare to comply with the EU's landmark AI Act. These rules -- which have not yet been finalized and focus on copyright protections, transparency, and public safety -- will initially be voluntary when they take effect for the biggest makers of "general purpose AI" on August 2. But the EU will begin enforcing the AI Act in August 2026, and the Commission has noted that any companies agreeing to the rules could benefit from a "reduced administrative burden and increased legal certainty," The New York Times reported. Rejecting the voluntary rules could force companies to prove their compliance in ways that could be more costly or time-consuming, the Commission suggested.

The AI industry participated in drafting the AI Act, but some companies have recently urged the EU to delay enforcement of the law, warning that the EU risks hampering AI innovation by placing heavy restrictions on companies.

Among the most controversial commitments that the EU is asking companies like Google, Meta, and OpenAI to voluntarily make is a promise never to pirate materials for AI training. Many AI companies have controversially used pirated book datasets to train AI, including Meta, which suggested that individual books are worthless as training data on their own after being called out for torrenting unauthorized copies of books. But the EU disagrees, recommending that tech companies designate staffers and create internal mechanisms to field complaints "within a reasonable timeframe" from rightsholders, who must be allowed to opt their creative works out of AI training datasets.

The EU rules pressure AI makers to take other steps the industry has mostly resisted.
Most notably, AI companies will need to share detailed information about their training data, including providing a rationale for key model design choices and disclosing precisely where their training data came from. That could make it clearer how much each company's models depend on publicly available data versus user data, third-party data, synthetic data, or some emerging new source of data.

The code also details expectations for AI companies to respect paywalls, as well as robots.txt instructions restricting crawling, which could help confront a growing problem of AI crawlers hammering websites. It "encourages" online search giants to embrace a solution that Cloudflare is currently pushing: allowing content creators to protect copyrights by restricting AI crawling without impacting search indexing.

Additionally, companies are asked to disclose total energy consumption for both training and inference, allowing the EU to detect environmental concerns while companies race forward with AI innovation.

More substantially, the code's safety guidance provides for additional monitoring for other harms. It makes recommendations to detect and avoid "serious incidents" with new AI models, which could include cybersecurity breaches, disruptions of critical infrastructure, "serious harm to a person's health (mental and/or physical)," or "a death of a person." It stipulates timelines of between five and 10 days for reporting serious incidents to the EU's AI Office. And it requires companies to track all events, provide an "adequate level" of cybersecurity protection, prevent jailbreaking as best they can, and justify "any failures or circumventions of systemic risk mitigations."

Ars reached out to tech companies for immediate reactions to the new rules. OpenAI, Meta, and Microsoft declined to comment.
A Google spokesperson confirmed that the company is reviewing the code, which still must be approved by the European Commission and EU member states amid expected industry pushback. "Europeans should have access to first-rate, secure AI models when they become available, and an environment that promotes innovation and investment," Google's spokesperson said. "We look forward to reviewing the code and sharing our views alongside other model providers and many others."

These rules are just one part of the AI Act, which will take effect in stages over the next year or more, the NYT reported. Breaching the AI Act could result in AI models being yanked off the market or fines "of as much as 7 percent of a company's annual sales or 3 percent for the companies developing advanced AI models," Bloomberg noted.
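The robots.txt commitment described above rests on a long-standing convention: a site publishes per-crawler rules, and a well-behaved crawler checks them before fetching a page. The sketch below uses Python's standard `urllib.robotparser` to show how a site could shut out an AI training crawler while leaving other crawlers, such as a search indexer, unaffected. The crawler names `ExampleAIBot` and `ExampleSearchBot` and the rules themselves are hypothetical, and the code of practice, as reported here, does not prescribe any particular implementation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block an AI training crawler from the whole site,
# but let every other crawler fetch everything except /private/.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been fetched (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def may_crawl(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return True if the parsed rules permit `user_agent` to fetch `url`."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    for agent in ("ExampleAIBot", "ExampleSearchBot"):
        for url in ("https://example.com/articles/1", "https://example.com/private/2"):
            verdict = "allowed" if may_crawl(rules, agent, url) else "blocked"
            print(f"{agent:<17} {url:<32} {verdict}")
```

Here the hypothetical AI crawler is blocked everywhere, while the hypothetical search crawler may fetch `/articles/1` but not `/private/2`. That separation of AI crawling from search indexing is the kind of arrangement the code "encourages," although real crawlers identify themselves with their own user-agent strings.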

[2]

EU Rolls Out AI Code With Broad Copyright, Transparency Rules

The European Union published a code of practice to help companies follow its landmark AI Act that includes copyright protections for creators and transparency requirements for advanced models. The code will require developers to provide up-to-date documentation describing their AI's features to regulators and third parties looking to integrate it into their own products, the European Commission said Thursday. Companies also will be banned from training AI on pirated materials and must respect requests from writers and artists to keep copyrighted work out of datasets. If AI produces material that infringes copyright rules, the code of practice will require companies to have a process in place to address it.

[3]

EU explains how to do AI without breaking the law

The EU has a new set of AI regulations poised to take effect soon. While debate over them continues, Brussels has put out a handy guidebook to help companies make sense of what they can and cannot do.

The European Commission announced the publication of the General-Purpose AI Code of Practice on Thursday with the goal of helping folks comply with the AI Act. Parties subject to the Act will be able to sign on to the Code to indicate that they're in compliance, but it's purely voluntary.

Broken into three parts, the Code has two brief chapters outlining responsibilities AI companies face regarding transparency and obeying copyright, as well as a much longer section on safety and security that the Commission noted is "relevant only to a limited number of providers of the most advanced models." The same goes for the AI Act in general. As we've noted in our prior coverage, it puts the greatest onus for compliance on the largest, most powerful frontier AI models, and those operating in the most critical sectors or whose operations have the greatest potential for harm.

The Code's three chapters include all the things you'd expect from a typical AI regulation, like preventing the output of copyrighted content, requiring AI scraping bots to obey robots.txt, and mandating risk assessments. Beyond those basic rules, the Code also calls for companies to fill out a documentation form that details all the intricacies of a model (including energy consumption data) and keep model documentation on file for a decade for each version. It even recommends that AI companies that "also provide an online search engine" refrain from downranking pages that refuse to be ingested by the company's AI - and no, that's not targeted at anyone in particular, why do you ask?

The Code's publication doesn't mean it's official yet - that'll require endorsement by EU member states and the Commission - but even then it won't mean all that much.
As the EC noted, there's no push for companies to sign on if they don't want to. That said, the Code does reflect the AI Act as it's written, so its contents are more than just guidelines - companies ignore them at their peril. This all assumes the AI Act's general-purpose rules enter into application on 2 August as planned.

A number of European companies have come out against the AI Act, calling for its delay and simplification to ensure continental AI firms would be able to compete with unnamed "AI behemoths," some of whom have also called for a pause on enforcement.

The European arm of the Computer and Communications Industry Association (CCIA), a pro-tech trade association, expressed disappointment in the Code, saying it added further confusion to a set of rules that are badly in need of clarification. As one example, the CCIA says, the Code imposes rules that aren't requirements in the Act, while other requirements from the Act are missing. If it's that obviously messy, why would any company want to sign on? "Without meaningful improvements, signatories remain at a disadvantage compared to non-signatories, thereby undermining the Commission's competitiveness and simplification agenda," said Boniface de Champris, a senior policy manager at CCIA Europe.

Others are urging the EU not to bow to corporate pressure. A group of academics, watchdog groups, and privacy advocates signed an open letter to the Commission urging it not to delay enforcement, as doing so would call into question Europe's dedication to its own principles of "putting consumer and fundamental rights at the center of all legislation." "We call upon the Commission to prioritise the full implementation and proper enforcement of the AI Act instead of re-opening or delaying its implementation," the open letter pleaded.
Whichever way the Commission swings on AI Act enforcement, it'll still take some time for punishments to be meted out: While the AI Act applies as of next month, the Commission won't have enforcement power for some time. According to the EC, "new models" released on or after August 2, 2025, will have one year to get fully compliant, while older models get two years. A lot could change between now and then. ®
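The two compliance windows in the paragraph above amount to a small date calculation. This is a sketch under one reading of the report: both windows are measured from 2 August 2025, when the general-purpose AI rules start to apply, so a model released on or after that date has until 2 August 2026 and an older model until 2 August 2027. The helper name `compliance_deadline` is hypothetical, and the sketch is no substitute for the Act's actual transitional provisions.

```python
from datetime import date

# Date the AI Act's general-purpose AI rules start to apply, per the report.
GPAI_RULES_APPLY = date(2025, 8, 2)


def compliance_deadline(release_date: date) -> date:
    """Hypothetical helper: full-compliance deadline under the reading above.

    Models released on or after the application date get one year;
    models already on the market before it get two years.
    """
    years = 1 if release_date >= GPAI_RULES_APPLY else 2
    return GPAI_RULES_APPLY.replace(year=GPAI_RULES_APPLY.year + years)


if __name__ == "__main__":
    for released in (date(2024, 3, 1), date(2025, 9, 15)):
        print(released, "->", compliance_deadline(released))
```

Under this reading, a model released in March 2024 would have until 2 August 2027, and one released in September 2025 until 2 August 2026.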

[4]

EU code of practice to help firms with AI rules will focus on copyright, safety

BRUSSELS, July 10 (Reuters) - A code of practice designed to help thousands of companies comply with the European Union's landmark artificial intelligence rules will focus on transparency, copyright, safety and security, the European Commission said on Thursday. The comments came as the EU executive presented a final draft of the guidance, which will apply from Aug. 2 but will only be enforced a year later. Signing up to the code is voluntary, but companies who decline to do so, as some Big Tech firms have indicated, will not benefit from the legal certainty provided to a signatory. While the guidance on transparency and copyright will apply to all providers of general-purpose AI models, the chapters on safety and security target providers of the most advanced models. "Co-designed by AI stakeholders, the Code is aligned with their needs. Therefore, I invite all general-purpose AI model providers to adhere to the Code. Doing so will secure them a clear, collaborative route to compliance with the EU's AI Act," EU tech chief Henna Virkkunen said.

Reporting by Foo Yun Chee; Editing by GV De Clercq

[5]

EU pushes ahead with AI code of practice

The EU has unveiled its code of practice for general purpose artificial intelligence, pushing ahead with its landmark regulation despite fierce lobbying from the US government and Big Tech groups. The final version of the code, which helps explain rules that are due to come into effect next month for powerful AI models such as OpenAI's GPT-4 and Google's Gemini, includes copyright protections for creators and potential independent risk assessments for the most advanced systems.

The EU's decision to push forward with its rules comes amid intense pressure from US technology groups as well as European companies over its AI act, considered the world's strictest regime for regulating the fast-developing technology. This month the chief executives of large European companies including Airbus, BNP Paribas and Mistral urged Brussels to introduce a two-year pause, warning that unclear and overlapping regulations were threatening the bloc's competitiveness in the global AI race.

Brussels has also come under fire from the European parliament and a wide range of privacy and civil society groups over moves to water down the rules from previous draft versions, following pressure from Washington and Big Tech groups. The EU had already delayed publishing the code, which was due in May.

Henna Virkkunen, the EU's tech chief, said the code was important "in making the most advanced AI models available in Europe not only innovative, but also safe and transparent". Tech groups will now have to decide whether to sign the code, and it still needs to be formally approved by the European Commission and member states.

The Computer & Communications Industry Association, whose members include many Big Tech companies, said the "code still imposes a disproportionate burden on AI providers". "Without meaningful improvements, signatories remain at a disadvantage compared to non-signatories, thereby undermining the commission's competitiveness and simplification agenda," it said.
As part of the code, companies will have to commit to putting in place technical measures that prevent their models from generating content that reproduces copyrighted content. Signatories also commit to testing their models for risks laid out in the AI act. Companies that provide the most advanced AI models will agree to monitor their models after they have been released, including giving external evaluators access to their most capable models. But the code does give them some leeway in identifying risks their models might pose. Officials within the European Commission and in different European countries have been privately discussing streamlining the complicated timeline of the AI act. While the legislation entered into force in August last year, many of its provisions will only come into effect in the years to come. European and US companies are putting pressure on the bloc to delay upcoming rules on high-risk AI systems, such as those that include biometrics and facial recognition, which are set to come into effect in August next year.

[6]

EU unveils AI code of practice to help businesses comply with bloc's rules

LONDON (AP) -- The European Union on Thursday released a code of practice on general purpose artificial intelligence to help thousands of businesses in the 27-nation bloc using the technology comply with the bloc's landmark AI rule book. The EU code is voluntary and complements the EU's AI Act, a comprehensive set of regulations that was approved last year and is taking effect in phases.

The code focuses on three areas: transparency requirements for providers of AI models that businesses are looking to integrate into their products; copyright protections; and safety and security of the most advanced AI systems.

The AI Act's rules on general purpose artificial intelligence are set to take effect on Aug. 2. The bloc's AI Office, under its executive Commission, won't start enforcing them for at least a year. General purpose AI, exemplified by chatbots like OpenAI's ChatGPT, can do many different tasks and underpin many of the AI systems that companies are using across the EU.

Under the AI Act, uses of artificial intelligence face different levels of scrutiny depending on the level of risk they pose, with some uses deemed unacceptable banned entirely. Violations could draw fines of up to 35 million euros ($41 million), or 7% of a company's global revenue.

Some Big Tech companies such as Meta have resisted the regulations, saying they're unworkable, and U.S. Vice President JD Vance, speaking at a Paris summit in February, criticized "excessive regulation" of AI, warning it could kill "a transformative industry just as it's taking off."

More recently, more than 40 European companies, including Airbus, Mercedes-Benz, Philips and French AI startup Mistral, urged the bloc in an open letter to postpone the regulations for two years. They say more time is needed to simplify "unclear, overlapping and increasingly complex EU regulations" that put the continent's competitiveness in the global AI race at risk. There was no sign that Brussels was prepared to stop the clock.
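The two fine figures in the AP report are alternative ceilings rather than a sum. As a worked example, the sketch below assumes the rule in the AI Act's penalty provisions for its most serious violations (banned AI practices): for most companies the ceiling is whichever of the two amounts is higher. The Act treats smaller firms differently, which this sketch ignores. It computes a maximum, not an expected fine, and the turnover figures are made up for illustration.

```python
# Upper bound on a fine in the AI Act's top penalty tier, as reported by AP:
# EUR 35 million or 7% of worldwide annual turnover.
# Assumption: for most undertakings the higher of the two applies;
# the separate treatment of SMEs and start-ups is not modelled here.

FIXED_CAP_EUR = 35_000_000
TURNOVER_SHARE_PERCENT = 7


def max_fine_eur(annual_turnover_eur: int) -> int:
    """Return the ceiling on a fine, in whole euros (integer arithmetic)."""
    share = annual_turnover_eur * TURNOVER_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, share)


if __name__ == "__main__":
    # Hypothetical companies, annual turnover in euros.
    for turnover in (200_000_000, 100_000_000_000):
        print(f"turnover EUR {turnover:,} -> cap EUR {max_fine_eur(turnover):,}")
```

For a company with €200 million in turnover, 7% is €14 million, so the €35 million figure is the binding ceiling; at €100 billion in turnover, the 7% share (€7 billion) takes over.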
"Today's publication of the final version of the Code of Practice for general-purpose AI marks an important step in making the most advanced AI models available in Europe not only innovative but also safe and transparent," the commission's executive vice president for tech sovereignty, security and democracy, Henna Virkkunen, said in a news release.

[7]

European Union Unveils Rules for Powerful A.I. Systems

Makers of the most advanced artificial intelligence systems will face new obligations for transparency, copyright protection and public safety. The rules are voluntary to start.

European Union officials unveiled new rules on Thursday to regulate artificial intelligence. Makers of the most powerful A.I. systems will have to improve transparency, limit copyright violations and protect public safety.

The rules, which are voluntary to start, come during an intense debate in Brussels about how aggressively to regulate a new technology seen by many leaders as crucial to future economic success in the face of competition with the United States and China. Some critics accused regulators of watering down the rules to win industry support.

The guidelines apply only to a small number of tech companies like OpenAI, Microsoft and Google that make so-called general-purpose A.I. These systems underpin services like ChatGPT and can analyze enormous amounts of data, learn on their own and perform some human tasks.

The so-called code of practice represents some of the first concrete details about how E.U. regulators plan to enforce a law, called the A.I. Act, that was passed last year. Tech companies played a major role in drafting the rules, which will be voluntary when they take effect on Aug. 2, before becoming enforceable in August 2026, according to the European Commission, the executive branch of the 27-nation bloc.

The European Commission said companies that agreed to the voluntary code of practice would benefit from a "reduced administrative burden and increased legal certainty." Officials said those that do not would have to prove compliance through other means, which could potentially be more costly and time-consuming.

It was not immediately clear which companies would join. Google and OpenAI said they were reviewing the final text. Microsoft declined to comment. Meta, which had signaled it would not agree to the code, did not have an immediate comment.
Amazon and Mistral, a leading A.I. company in France, did not respond to a request for comment.

Under the guidelines, tech companies will have to provide detailed summaries about the content used for training their algorithms, something long sought by media publishers concerned that their intellectual property is being used to train the A.I. systems. Other rules would require the companies to conduct risk assessments to see how their services could be misused for things like creating biological weapons that pose a risk to public safety.

(The New York Times has sued OpenAI and its partner, Microsoft, claiming copyright infringement of news content related to A.I. systems. The two companies have denied the suit's claims.)

What is less clear is how the law will address issues like the spread of misinformation and harmful content. This week, Grok, a chatbot created by Elon Musk's artificial intelligence company, xAI, shared several antisemitic comments on X, including praise of Hitler.

Henna Virkkunen, the European Commission's executive vice president for tech sovereignty, security and democracy, said the policy was "an important step in making the most advanced A.I. models available in Europe not only innovative but also safe and transparent."

The guidelines introduced on Thursday are just one part of a sprawling law that will take full effect over the next year or more. The act was intended to prevent the most harmful effects of artificial intelligence, but European officials have more recently been weighing the consequences of regulating such a fast-moving and competitive technology.

Leaders across the continent are increasingly worried about Europe's economic position against the United States and China. Europe has long struggled to produce large tech companies, making it dependent on services from foreign corporations. Tensions with the Trump administration over tariffs and trade have intensified the debate.
Groups representing many European businesses have urged policymakers to delay implementation of the A.I. Act, saying the regulation threatens to slow innovation, while putting their companies at a disadvantage against foreign competition. "Regulation should not be the best export product from the E.U.," said Aura Salla, a member of the European Parliament from Finland who was previously a top lobbyist for Meta in Brussels. "It's hurting our own companies."

[8]

Europe's AI code urges companies to disclose training data and avoid copyright violations

Recap: The European Union introduced what it calls the world's first comprehensive legal framework to regulate AI development last year. Although the regulations will not be enforceable for some time, recently introduced guidelines aim to facilitate compliance by tech giants, including OpenAI, Microsoft, and Google, which will likely attempt to resist or circumvent the new rules.

The European Commission recently introduced an AI code of practice to help makers of large language models comply with last year's AI Act. The conditions are voluntary, but the commission suggests that agreeing to them is the easiest way for tech giants to adhere to the AI Act. Although the new regulations enter into effect on August 2, new AI models have one year to comply with them, and existing models have a two-year grace period.

The code of practice, which targets general-purpose LLMs from companies like OpenAI, Microsoft, and Google, consists of three sections covering transparency, copyright, and safety. The transparency section asks signatories to disclose details about how they develop and train their AI models, including energy usage, required processing power, data points, and other information. The dominant AI giants will likely bristle at the requirement to reveal where they obtained their training data.

Furthermore, the new EU rules include demands that AI developers respect paywalls, refrain from circumventing crawl denials, and otherwise observe copyright law, which AI companies are already fighting against. European internet publishers recently filed an antitrust complaint against Google's AI Overviews, a feature that summarizes information from various websites without users having to visit them. Denmark also recently proposed a law granting citizens copyright over their likenesses, allowing them to file claims against deepfakes made without their consent.
Moreover, AI companies have admitted that the technology might be incompatible with existing copyright law. A former Meta executive warned that AI might die "overnight" if LLMs were forced to obtain permission from every copyright holder. The CEO of Getty Images also disclosed that the company cannot contest every copyright claim related to AI. The AI code's safety section addresses potential threats to citizens' personal safety and rights. For example, language in the AI Act mentions high-risk implications associated with the technology, including surveillance, weapons development, fraud, and misinformation.

[9]

EU unveils strict AI code targeting OpenAI, Google, Microsoft

The European Union has introduced a voluntary code of practice for general-purpose artificial intelligence. The guidelines aim to help companies comply with the bloc's AI Act, set to take effect next month. The new rules target a small number of powerful tech firms like OpenAI, Microsoft, Google, and Meta, which develop foundational AI models used across multiple products and services. While the code is not legally binding, it lays out requirements for transparency, copyright protection, and safety. Officials say companies that adopt the code will benefit from a "reduced administrative burden and increased legal certainty."

[10]

EU unveils AI code of practice to help businesses comply with bloc's rules

© 2025 The Associated Press. All rights reserved.

[11]

Industry calls for delay as EU's AI crackdown begins

The EU has released the Code of Practice ("Code") for General-Purpose AI Models (GPAI), a preparatory measure for the AI Act's general-purpose AI rules, which take effect on August 2, 2025. The Code aims to guide companies in complying with the upcoming regulations. However, the timeline is tight, and the industry is calling for a delay in enforcement, claiming that the preparation time is insufficient.

Originally scheduled for release in May, the Code was delayed and finalized in July. It is led by the European Commission (EC) and was developed in collaboration with AI labs, tech companies, academia, and digital rights organizations. It focuses on transparency, copyright protection, and enhanced safety and safeguards for advanced models. Models such as OpenAI's ChatGPT, Anthropic's Claude, and Google's Gemini all fall under the GPAI classification.

Henna Virkkunen, executive vice president for digital policy at the EC, stated that the Code ensures that AI innovation remains safe and transparent. The EU is currently conducting risk assessments to determine whether the Code can be implemented as scheduled in August. Companies are encouraged to sign the Code voluntarily, but violators of the AI Act may face fines of up to 7% of their global annual revenue. It has not been disclosed how many companies are willing to sign.

The EU will require companies to disclose extensive information about their models, including training processes, data volume and sources, energy usage, computational resources, distribution methods, and licensing. The most contentious requirement among companies involves disclosing the sources of training, testing, and validation data, with an explicit ban on the use of pirated content. Where a company's data comes from must also be specified, be it web crawlers, publicly available datasets, non-public datasets obtained from third parties, user data, privately sourced datasets, synthetic data, or other sources.
Companies must also indicate whether inappropriate data sources, such as involuntarily shared intimate images or child abuse content, have been identified and removed. Developers must respect creators' requests to exclude copyrighted content and must implement mechanisms to address AI-generated copyright violations. For high-capability models, companies are required to monitor deployment continuously and give independent external evaluators access to model systems for audit purposes.

The AI Act's four risk levels are unacceptable, high, limited, and minimal. The Act's rules are being rolled out in stages, with obligations for high-risk applications involving facial or biometric recognition due to take effect in August 2026. Forty-five European companies, including ASML, Mistral AI, SAP, Philips, Airbus, and Mercedes-Benz, signed an open letter urging the EU to delay enforcement of GPAI and high-risk AI regulations by two years to give the industry more time to prepare.

According to Euronews, companies that sign the Code will not be expected to fully comply by August 2. The EU is also considering delaying certain obligations for high-risk systems. For GPAI models that were launched in Europe before the AI Act's GPAI rules take effect, companies will be granted a 24-month transition period to bring their products into compliance.

[12]

EU waiting for companies to sign delayed AI Code

It remains unclear which and how many companies will sign the code of practice.

AI providers can soon sign up to the European Commission's Code of Practice for General Purpose AI (GPAI), a voluntary set of rules aiming to help providers of AI models such as ChatGPT and Gemini comply with the AI Act. On Thursday the Commission published the Code, less than a month before the rules on GPAI start applying on 2 August.

The document includes three chapters: Transparency and Copyright, both addressing all providers of general-purpose AI models, and Safety and Security, relevant only to a limited number of providers of the most advanced models. Companies that sign up are expected to be compliant with the AI Act and to have more legal certainty; others will face more scrutiny from the Commission.

Providers of AI systems previously said they do not have enough time to comply before the rules kick in and asked the EU executive for a grace period. Once companies sign, they do not have to be fully compliant with the new rules on 2 August, a source familiar with the issue told Euronews.

The AI Act itself - rules that regulate artificial intelligence systems according to the risk they pose to society - entered into force in August 2024 but will fully apply in 2027. The Code of Practice on GPAI, drafted by experts appointed by the Commission, was supposed to come out in May but faced delays and heavy criticism. Tech giants as well as publishers and rights-holders are concerned that the rules violate the EU's copyright laws and restrict innovation.

Earlier this week CEOs from more than 40 European companies including ASML, Philips, Siemens and Mistral asked the Commission to impose a "two-year clock-stop" on the AI Act to give them more time to comply with the obligations on high-risk AI systems, due to take effect as of August 2026, and to obligations for GPAI models, due to enter into force this August.
A source told Euronews that the Commission is considering a possible delay of these high-risk system obligations in case the underlying standards are not ready in time. This would apply only to these specific systems and would not affect other parts of the Act. The Commission and the EU member states will now need to carry out a risk assessment to check the adequacy of the Code. It remains unclear whether it will be ready before the rules enter into force in August. It also remains unclear how many companies will sign the code, and whether they will be able to adhere to only some elements of the document.

[13]

EU's AI Code ready for companies to sign next week

Both the member states and the European Commission need to formally give the green light to the Code, which helps providers of AI systems comply with the EU's AI Act. Member states' formal approval of the Code of Practice for General Purpose AI (GPAI) could come as early as 22 July, paving the way for providers of AI systems to sign up, sources familiar with the matter told Euronews. That would be just days before the AI Act's provisions affecting GPAI systems enter into force on 2 August. The European Commission last week presented the Code, a voluntary set of rules drafted by experts appointed by the EU executive, aiming to help providers of AI models such as ChatGPT and Gemini comply with the AI Act. Companies that sign up are expected to be compliant with the AI Act and to have more legal certainty, while others will face more inspections. The Code requires sign-off by EU member states, which are represented in a subgroup of the AI Board, as well as by the Commission's own AI Office. The 27 EU countries are expected to finalise their assessment of the Code next week, and if the Commission completes its assessment by then too, providers can formally sign up. The document, which was supposed to come out in May, faced delays and heavy criticism. Tech giants as well as publishers and rights-holders are concerned that the rules violate the EU's copyright laws and restrict innovation. The EU's AI Act, which regulates AI systems according to the risk they pose to society, has been coming into force in stages since August last year. In the meantime, OpenAI, the company behind ChatGPT, has said it will sign up to the code once it is ready. "The Code of Practice opens the door for Europe to move forward with the EU AI Continent Action Plan that was announced in April -- and to build on the impact of AI that is already felt today," the company said in a statement.
The publication drew mixed reactions, with consumer group BEUC and the Center for Democracy and Technology (CDT) hesitant about the final version of the code. BEUC Senior Legal Officer Cláudio Teixeira called the development a "step in the right direction" but underlined that voluntary initiatives like the Code of Practice "can be no substitute for binding EU legislation: they must complement and reinforce, not dilute, the law's core protections for consumers." CDT Europe's Laura Lazaro Cabrera said that, for certain risks, the final draft "stops short of requiring their in-depth assessment and mitigation in all cases." "The incentive for providers to robustly identify these risks will only be as strong as the AI Office's commitment to enforce a comprehensive, good-faith approach," she said.

[14]

EU's AI Code of Practice tackles transparency, copyright and safety

The EU's General-Purpose AI Code of Practice, which aims to help businesses comply with the EU AI Act, has been finalised. The European Commission has today received the final version of the General-Purpose AI Code of Practice, a voluntary tool designed to help industry comply with the EU AI Act's rules on general-purpose AI, which enter into application on 2 August 2025. The rules will become enforceable by the Commission's AI Office one year later, in August 2026, for new models and in August 2027 for existing models. "This aims to ensure that general-purpose AI models placed on the European market - including the most powerful ones - are safe and transparent," the Commission said in a statement. In the coming weeks, Member States and the Commission will assess the adequacy of the Code, and further complementary Commission guidelines on key concepts related to general-purpose AI models are expected later this month. These will clarify who is in and out of scope of the AI Act's general-purpose AI rules. Back in April the Commission launched its consultation process on the guidelines that aimed to clarify key concepts underlying the provisions in the AI Act on general-purpose AI (GPAI) models. It invited stakeholders to "bring their practical experience to shape clear, accessible EU rules on general-purpose AI (GPAI) models in a targeted consultation that will contribute to the upcoming Commission guidelines". The Code is structured in three main chapters: Transparency and Copyright, which apply to all providers of general-purpose AI models, and Safety and Security, which is relevant only to a limited number of providers of the most advanced models.
"Today's publication of the final version of the Code of Practice for general-purpose AI marks an important step in making the most advanced AI models available in Europe not only innovative but also safe and transparent," said Henna Virkkunen, executive vice-president for tech sovereignty, security and democracy. "Co-designed by AI stakeholders, the Code is aligned with their needs. Therefore, I invite all general-purpose AI model providers to adhere to the Code. Doing so will secure them a clear, collaborative route to compliance with the EU's AI Act."

[15]

EU Unveils AI Code of Practice to Help Businesses Comply With Bloc's Rules

LONDON (AP) -- The European Union on Thursday released a code of practice on general purpose artificial intelligence to help thousands of businesses in the 27-nation bloc using the technology comply with the bloc's landmark AI rule book. The EU code is voluntary and complements the EU's AI Act, a comprehensive set of regulations that was approved last year and is taking effect in phases. The code focuses on three areas: transparency requirements for providers of AI models that are looking to integrate them into their products; copyright protections; and safety and security of the most advanced AI systems. The AI Act's rules on general purpose artificial intelligence are set to take effect on Aug. 2. The bloc's AI Office, under its executive Commission, won't start enforcing them for at least a year. General purpose AI, exemplified by chatbots like OpenAI's ChatGPT, can do many different tasks and underpin many of the AI systems that companies are using across the EU. Under the AI Act, uses of artificial intelligence face different levels of scrutiny depending on the level of risk they pose, with some uses deemed unacceptable banned entirely. Violations could draw fines of up to 35 million euros ($41 million), or 7% of a company's global revenue. Some Big Tech companies such as Meta have resisted the regulations, saying they're unworkable, and U.S. Vice President JD Vance, speaking at a Paris summit in February, criticized "excessive regulation" of AI, warning it could kill "a transformative industry just as it's taking off." More recently, more than 40 European companies, including Airbus, Mercedes-Benz, Philips and French AI startup Mistral, urged the bloc in an open letter to postpone the regulations for two years. They say more time is needed to simplify "unclear, overlapping and increasingly complex EU regulations" that put the continent's competitiveness in the global AI race at risk. There was no sign that Brussels was prepared to stop the clock.
"Today's publication of the final version of the Code of Practice for general-purpose AI marks an important step in making the most advanced AI models available in Europe not only innovative but also safe and transparent," the commission's executive vice president for tech sovereignty, security and democracy, Henna Virkkunen, said in a news release. Copyright 2025 The Associated Press.

[16]

EU code of practice to help firms with AI rules will focus on copyright, safety - The Economic Times

A code of practice designed to help thousands of companies comply with the European Union's landmark artificial intelligence rules will focus on transparency, copyright, safety and security, the European Commission said on Thursday. The comments came as the EU executive presented a final draft of the guidance, which will apply from August 2 but will only be enforced a year later. Signing up to the code is voluntary, but companies that decline to do so, as some Big Tech firms have indicated, will not benefit from the legal certainty provided to signatories. While the guidance on transparency and copyright will apply to all providers of general-purpose AI models, the chapter on safety and security targets providers of the most advanced models. "Co-designed by AI stakeholders, the Code is aligned with their needs. Therefore, I invite all general-purpose AI model providers to adhere to the Code. Doing so will secure them a clear, collaborative route to compliance with the EU's AI Act," EU tech chief Henna Virkkunen said.

[17]

European AI Law to Prioritize Openness, Copyright Protection and Model Safety | PYMNTS.com

The code was developed by a panel of 13 independent experts and forms part of the EU's broader ambition to establish a global benchmark for AI governance. While adherence to the code is not mandatory, the European Commission noted that companies choosing not to participate will not benefit from the legal clarity afforded to those who do, per Reuters. This latest move comes ahead of the phased implementation of the EU's AI Act, which officially came into force in August 2024. The regulation imposes tiered requirements based on the risk profile of AI systems, with the strictest rules reserved for applications deemed high-risk. General-purpose AI models, such as those powering widely used chatbots and language generators, are subject to more moderate obligations. Beginning August 2, 2025, compliance will become mandatory for new general-purpose AI models released to the market. Existing models will have until August 2, 2027, to align with the legislation. The guidance on transparency and copyright will be applicable across all providers of general-purpose AI, while directives on safety and security will specifically target developers of advanced systems like OpenAI's ChatGPT, Google's Gemini, Meta's Llama, and Anthropic's Claude. The code's final approval still hinges on formal endorsement by EU member states and the European Commission, a step that is anticipated by the end of 2025. EU digital policy head Henna Virkkunen urged providers to take part, describing the code as a practical and collaborative tool for navigating regulatory expectations.

[18]

EU Publishes Final AI Code of Practice to Guide Compliance for AI Companies | PYMNTS.com

The code's publication "marks an important step in making the most advanced AI models available in Europe not only innovative but also safe and transparent," Henna Virkkunen, executive vice president for tech sovereignty, security and democracy for the commission, which is the EU's executive arm, said in a statement. The code was developed by 13 independent experts after hearing from 1,000 stakeholders, which included AI developers, industry organizations, academics, civil society organizations and representatives of EU member states, according to a Thursday (July 10) press release. Observers from global public agencies also participated. The EU AI Act, which was approved in 2024, is the first comprehensive legal framework governing AI. It aims to ensure that AI systems used in the EU are safe and transparent, as well as respectful of fundamental human rights. The act classifies AI applications into risk categories -- unacceptable, high, limited and minimal -- and imposes obligations accordingly. Any AI company whose services are used by EU residents must comply with the act. Fines can go up to 7% of global annual revenue. The code is voluntary, but AI model companies who sign on will benefit from lower administrative burdens and greater legal certainty, according to the commission. The next step is for the EU's 27 member states and the commission to endorse it. The code is structured into three core chapters: Transparency; Copyright; and Safety and Security. The Transparency chapter includes a model documentation form, described by the commission as "a user-friendly" tool to help companies demonstrate compliance with transparency requirements. The Copyright chapter offers "practical solutions to meet the AI Act's obligation to put in place a policy to comply with EU copyright law."
The Safety and Security chapter, aimed at the most advanced systems with systemic risk, outlines "concrete state-of-the-art practices for managing systemic risks." The drafting process began with a plenary session in September 2024 and proceeded through multiple working group meetings, virtual drafting rounds and provider workshops. The code takes effect Aug. 2, but the commission's AI Office will enforce the rules on new AI models after one year and on existing models after two years.


The European Union has published a code of practice to help tech companies comply with its upcoming AI Act, focusing on transparency, copyright protection, and safety measures for advanced AI models.

EU's New AI Code of Practice

The European Union has unveiled a comprehensive code of practice aimed at helping tech companies navigate the complexities of the upcoming AI Act [1]. This move marks a significant step in the EU's efforts to regulate the rapidly evolving field of artificial intelligence, balancing innovation with concerns over transparency, copyright, and public safety.

Source: Interesting Engineering

Key Features of the Code

The code, set to take effect on August 2, 2025, initially on a voluntary basis, outlines several crucial requirements for AI companies [2]:

- Transparency: Companies must provide detailed documentation about their AI models, including design choices and training data sources.

- Copyright Protection: AI developers commit not to use pirated materials for training and must respect requests from creators to exclude their work from datasets.

- Safety Measures: The code recommends procedures for detecting and avoiding "serious incidents" with new AI models, including cybersecurity breaches and potential harm to individuals.

Industry Reactions and Concerns

The AI industry's response to the new code has been mixed. While some companies are reviewing the guidelines, others have expressed concerns about potential impacts on innovation and competitiveness [3]:

- Google said it is reviewing the code and emphasized the importance of secure AI models and an innovation-friendly environment.

- The Computer and Communications Industry Association (CCIA) criticized the code for adding confusion to existing rules and potentially disadvantaging signatories.

Timeline and Enforcement

The EU's approach to implementing these regulations follows a staggered timeline [4]:

- August 2, 2025: Voluntary adoption begins

- August 2026: The Commission's AI Office begins enforcing the rules

- New models released after August 2, 2025, have one year to achieve compliance

- Older models are granted a two-year compliance period

Source: Euronews


Global Impact and Future Implications

The EU's AI regulations are poised to have far-reaching effects beyond Europe. As the world's strictest AI regulatory regime, the EU framework may set a precedent for other regions [5]:

- US tech companies and the US government have lobbied against aspects of the regulations.

- European companies have called for a two-year pause, citing concerns about competitiveness in the global AI race.

- The European Parliament and civil society groups have criticized attempts to water down the rules.

Source: PYMNTS

Conclusion

As the EU pushes forward with its AI regulations, the tech industry faces a pivotal moment. The success of this regulatory framework, which seeks to balance innovation with the responsible use of a powerful technology, could shape the future of AI development globally.

References

Summarized by

Navi

[1]

[3]
